How to Combine Sparse Mapping with Visual SLAM in Autonomous Driving?

10/28 2025

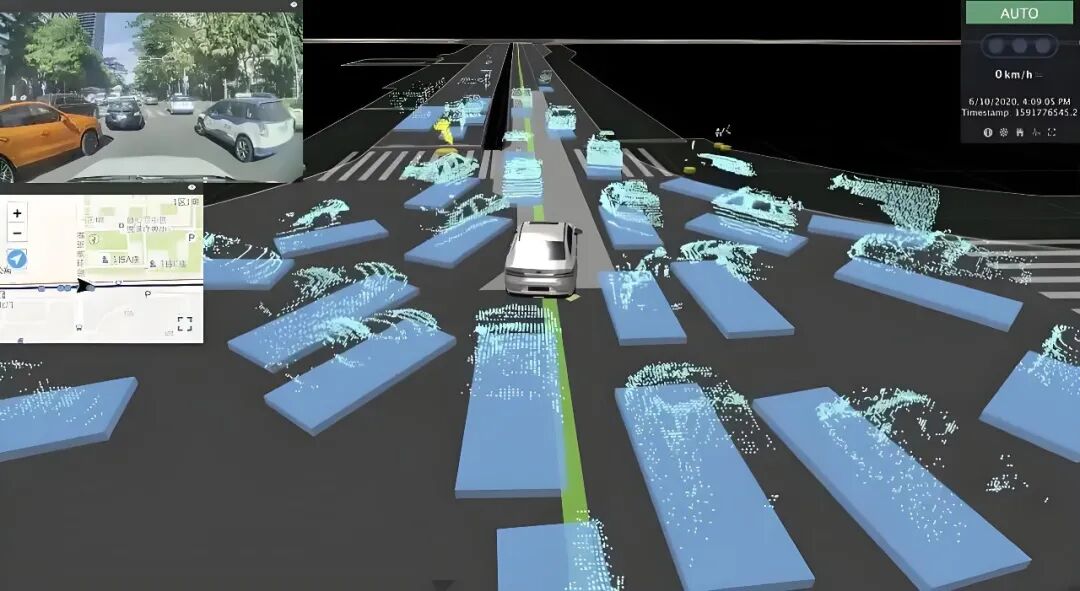

In autonomous driving scenarios, a sparse map typically consists of a set of keyframes and a set of sparse 3D feature points. Each feature point stores its 3D coordinates and descriptors that represent its appearance. Visual SLAM, on the other hand, is a real-time algorithm running on the vehicle: it uses camera images to estimate the vehicle's pose while building or updating a map of an unknown environment. The purpose of combining the two is clear: a pre-built sparse map serves as prior information that makes online visual SLAM localization more stable and precise, while the map itself can be reused and continuously maintained instead of being rebuilt from scratch on every drive.

Why Combine Sparse Mapping with Visual SLAM?

Visual SLAM excels at short-term tracking and loop closure detection, but during long-term operation it accumulates drift and is sensitive to illumination changes, occlusions, and dynamic objects. Sparse mapping compensates for these weaknesses: because it is built offline, it can be refined with global bundle adjustment, which significantly improves geometric accuracy. It stores stable semantic or landmark features (e.g., streetlights, traffic signs, building corners) that support reliable long-term localization. In addition, a single map can be reused across different tasks, which saves online computation.

Integrating sparse mapping with visual SLAM brings high-quality offline map information into the online localization process. When the vehicle enters an area covered by the map, it can quickly 'relocalize' into the map coordinate system, which effectively suppresses long-term drift. When a loop closure occurs, the map helps the system quickly identify and correct pose errors. For autonomous driving, this means more stable lane-level or meter-level localization, reduced reliance on GNSS alone, and greater robustness in difficult scenarios such as tunnels and urban canyons.

Specific Integration Methods and Technical Details

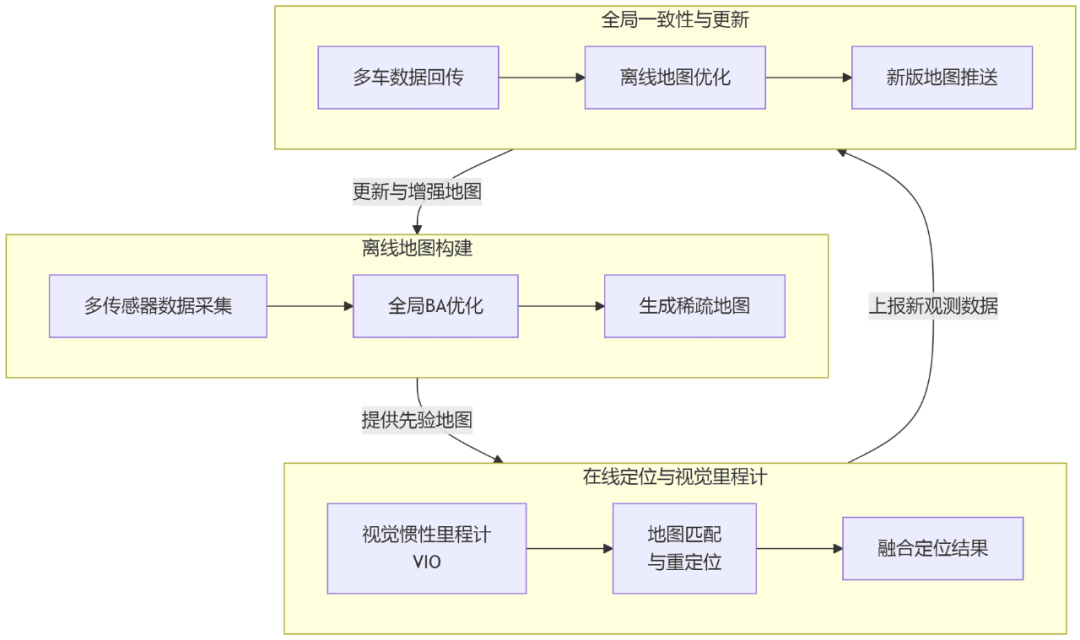

Combining sparse mapping with visual SLAM is essentially a cyclic engineering process of 'preparation-usage-maintenance.' To understand it as a whole, we can break the workflow into three interconnected yet distinct mainlines: 'offline mapping,' 'online localization and visual odometry,' and 'global consistency and updating.' These mainlines do not exist in isolation; together they form a complete closed loop.

1) Offline Mapping

Most teams begin by collecting data from the target road using high-quality SLAM systems or multi-sensor combinations (e.g., visual + IMU + RTK-GNSS), followed by offline optimization of camera poses, keyframes, and 3D feature points.

Key focuses at this stage include selecting stable features (e.g., ORB, SIFT, SuperPoint), ensuring that keyframes provide good coverage and viewpoint diversity, running global bundle adjustment and then removing weakly constrained points, and filtering out dynamic or transient features (e.g., foliage, moving vehicles).

Additionally, to support fast online matching, feature descriptors (traditional ORB or learned) are typically extracted and stored for each keyframe, and a bag-of-words model or a global descriptor (e.g., NetVLAD) is built on top of them to accelerate retrieval.
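The coarse retrieval step can be sketched with an inverted index over visual-word IDs. This is a minimal, simplified tf-idf/cosine scorer in the spirit of bag-of-words retrieval, not the DBoW2/DBoW3 API; the class name, the scoring formula, and the assumption that word IDs come from a vocabulary trained offline are all illustrative choices.

```python
import math
from collections import Counter, defaultdict


class KeyframeDatabase:
    """Hypothetical inverted index: visual-word id -> ids of keyframes containing it."""

    def __init__(self):
        self.index = defaultdict(set)  # word id -> set of keyframe ids
        self.bows = {}                 # keyframe id -> Counter of word ids

    def add_keyframe(self, kf_id, word_ids):
        bow = Counter(word_ids)
        self.bows[kf_id] = bow
        for w in bow:
            self.index[w].add(kf_id)

    def _idf(self, word):
        # Rare words are more discriminative; the +1 terms avoid division by zero.
        n = len(self.bows)
        return math.log((n + 1) / (len(self.index.get(word, ())) + 1))

    def _tfidf(self, bow):
        total = sum(bow.values())
        return {w: (c / total) * self._idf(w) for w, c in bow.items()}

    def query(self, word_ids, top_k=3):
        q = self._tfidf(Counter(word_ids))
        q_norm = math.sqrt(sum(v * v for v in q.values())) or 1.0
        # Only keyframes sharing at least one word are scored: this is what the
        # inverted index buys compared with scanning every keyframe.
        candidates = set()
        for w in q:
            candidates |= self.index.get(w, set())
        scores = []
        for kf in candidates:
            d = self._tfidf(self.bows[kf])
            d_norm = math.sqrt(sum(v * v for v in d.values())) or 1.0
            dot = sum(v * d.get(w, 0.0) for w, v in q.items())
            scores.append((dot / (q_norm * d_norm), kf))
        scores.sort(reverse=True)
        return [kf for _, kf in scores[:top_k]]
```

The returned keyframe IDs are only candidates; in the pipeline described here they would still go through 2D-3D matching and geometric verification.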

Structured storage of the offline map is also necessary. Information such as keyframe poses, camera intrinsics, sparse point coordinates, their visible keyframes, and feature descriptors should be properly saved. If feasible, semantic labels (e.g., 'traffic sign,' 'streetlight') and reliability scores can also be included.
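As a rough illustration of such a structured layout, the sketch below models keyframes and landmarks with Python dataclasses and serializes them to JSON. The field names, the pose convention (`[x, y, z, qw, qx, qy, qz]`), and the use of JSON are assumptions made for readability; production maps usually use a compact binary format.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import Dict, List, Optional


@dataclass
class Landmark:
    lm_id: int
    xyz: List[float]                      # 3D position in the map frame (meters)
    descriptor: str                       # e.g. hex-encoded binary descriptor (assumption)
    visible_in: List[int]                 # ids of keyframes observing this point
    semantic_label: Optional[str] = None  # e.g. "traffic_sign" (optional)
    reliability: float = 1.0              # 0..1 score from offline validation


@dataclass
class Keyframe:
    kf_id: int
    pose: List[float]         # assumed map-from-camera pose [x, y, z, qw, qx, qy, qz]
    intrinsics: List[float]   # [fx, fy, cx, cy]
    landmark_ids: List[int]


@dataclass
class SparseMap:
    version: str
    keyframes: Dict[int, Keyframe] = field(default_factory=dict)
    landmarks: Dict[int, Landmark] = field(default_factory=dict)

    def to_json(self) -> str:
        # asdict recurses into the nested dataclasses stored in the dicts.
        return json.dumps(asdict(self))

    @staticmethod
    def from_json(text: str) -> "SparseMap":
        raw = json.loads(text)
        # JSON object keys are always strings, so convert the ids back to int.
        kfs = {int(k): Keyframe(**v) for k, v in raw["keyframes"].items()}
        lms = {int(k): Landmark(**v) for k, v in raw["landmarks"].items()}
        return SparseMap(version=raw["version"], keyframes=kfs, landmarks=lms)
```

Keeping `visible_in` on each landmark and `landmark_ids` on each keyframe makes both directions of the keyframe-landmark visibility graph cheap to query online.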

2) Online Localization

With a sparse map available, the focus of visual SLAM shifts from 'simultaneous mapping' to 'local tracking + offline map matching.' When a new image is captured, the system first performs short-term tracking with visual odometry or visual-inertial odometry to maintain low-latency, smooth pose estimates. In parallel, it attempts relocalization based on image retrieval, using a bag-of-words model or global descriptor to find candidate keyframes in the offline map. Next, 2D-3D matching is performed against the candidate keyframes (associating feature points in the current frame with 3D points in the sparse map), and the absolute camera pose in the map coordinate system is solved with PnP combined with RANSAC.

Effective association strategies (descriptor matching supplemented by scale, position priors, and geometric verification) are required for 2D-3D matching, and robust estimation methods (e.g., RANSAC) are used to reject outliers. If enough points are matched and the inlier ratio is high enough, the PnP solution can be used directly as the localization result; otherwise, the system falls back to odometry estimation or waits for more observations.
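A minimal sketch of the association and acceptance logic, assuming binary (ORB-like) descriptors stored as Python integers and a 2D ground-plane position prior. The function names, the Lowe-style ratio test, and all thresholds are illustrative assumptions. The PnP+RANSAC solve itself is left to a real solver (OpenCV's `cv2.solvePnPRansac` is one example), which would supply the inlier count checked by the gate.

```python
from typing import List, Sequence, Tuple


def hamming(a: int, b: int) -> int:
    """Hamming distance between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_2d_3d(query_desc: Sequence[int],
                map_desc: Sequence[int],
                map_xy: Sequence[Tuple[float, float]],
                predicted_xy: Tuple[float, float],
                radius_m: float = 50.0,
                ratio: float = 0.8,
                max_dist: int = 64) -> List[Tuple[int, int]]:
    """Return (query_index, map_point_index) pairs (hypothetical helper)."""
    # Position prior: only map points near the predicted position are considered.
    px, py = predicted_xy
    nearby = [j for j, (x, y) in enumerate(map_xy)
              if (x - px) ** 2 + (y - py) ** 2 <= radius_m ** 2]
    matches = []
    for i, q in enumerate(query_desc):
        dists = sorted((hamming(q, map_desc[j]), j) for j in nearby)
        if not dists or dists[0][0] > max_dist:
            continue
        # Ratio test: reject if the best match is not clearly better than the second.
        if len(dists) > 1 and dists[0][0] >= ratio * dists[1][0]:
            continue
        matches.append((i, dists[0][1]))
    return matches


def accept_pnp(num_matches: int, num_inliers: int,
               min_inliers: int = 30, min_inlier_ratio: float = 0.5) -> bool:
    """Use the PnP+RANSAC pose only if both inlier count and ratio are high enough."""
    if num_matches == 0:
        return False
    return num_inliers >= min_inliers and num_inliers / num_matches >= min_inlier_ratio
```

When `accept_pnp` returns `False`, the caller would keep the odometry estimate, matching the fallback described above.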

Under normal circumstances, the localization result is added as a constraint to a short-term sliding window optimizer for pose-only optimization or local bundle adjustment to further refine the result. Large-scale global optimization is unsuitable for real-time operation but can be performed at a low frequency in the background.
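To show how a map-based localization result enters a sliding window as a constraint, here is a deliberately simplified 1D version: positions along the road are the only states, odometry gives relative constraints, relocalization gives absolute ones, and a prior on the first state stands in for marginalized history. Real systems optimize full SE(3) poses with g2o, Ceres, or GTSAM; the function names and weights here are assumptions.

```python
from typing import Dict, List, Sequence


def solve_linear(H: List[List[float]], g: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting (adequate for small windows)."""
    n = len(g)
    M = [row[:] + [g[i]] for i, row in enumerate(H)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def optimize_window(first_prior: float,
                    odom_deltas: Sequence[float],
                    map_fixes: Dict[int, float],
                    w_prior: float = 100.0,
                    w_odom: float = 10.0,
                    w_map: float = 1.0) -> List[float]:
    """Weighted least squares over positions p_0..p_N in the window (hypothetical)."""
    n = len(odom_deltas) + 1
    H = [[0.0] * n for _ in range(n)]
    g = [0.0] * n
    # Prior residual: p_0 - first_prior
    H[0][0] += w_prior
    g[0] += w_prior * first_prior
    # Odometry residual: p_{k+1} - p_k - d_k, Jacobian [-1, +1]
    for k, d in enumerate(odom_deltas):
        H[k][k] += w_odom
        H[k + 1][k + 1] += w_odom
        H[k][k + 1] -= w_odom
        H[k + 1][k] -= w_odom
        g[k] -= w_odom * d
        g[k + 1] += w_odom * d
    # Map relocalization residual: p_k - z_k
    for k, z in map_fixes.items():
        H[k][k] += w_map
        g[k] += w_map * z
    return solve_linear(H, g)
```

With drifting odometry (e.g., deltas of 1.1 m where the truth is 1.0 m) and a single map fix, the last position is pulled toward the map fix by an amount set by the weight ratio.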

3) Coordinate System and Transformation Handling

Offline maps typically reside in a global or local map coordinate system, while online visual odometry outputs poses relative to its own starting point, i.e., in a local odometry frame anchored where tracking began. To combine the two, the transformation between this odometry frame and the map coordinate system must be estimated. This transformation can be obtained from a successful PnP relocalization or registered using GNSS/RTK information.

In practical engineering, this transformation is often treated as a state variable: it is initialized upon the first successful relocalization and then continuously refined. If the transformation is later found to drift or jump (e.g., after an offline map update), the system needs mechanisms to adjust it smoothly or to trigger a fresh relocalization.
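A planar (SE(2)) sketch of this bookkeeping, assuming poses are `(x, y, yaw)` tuples: `T_map_odom` is computed from one relocalization as `T_map_vehicle ⊕ T_odom_vehicle⁻¹`, small later corrections are blended in gradually, and a large jump forces a fresh relocalization. The class name, thresholds, and the component-wise blending (a simplification of proper SE(2) interpolation) are assumptions.

```python
import math
from typing import Optional, Tuple

Pose2 = Tuple[float, float, float]  # (x, y, yaw) in meters / radians


def wrap(angle: float) -> float:
    return math.atan2(math.sin(angle), math.cos(angle))


def compose(a: Pose2, b: Pose2) -> Pose2:
    """a ⊕ b: pose b, expressed in frame a, re-expressed in a's parent frame."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    return (ax + c * bx - s * by, ay + s * bx + c * by, wrap(at + bt))


def inverse(a: Pose2) -> Pose2:
    ax, ay, at = a
    c, s = math.cos(at), math.sin(at)
    return (-c * ax - s * ay, s * ax - c * ay, wrap(-at))


class MapAlignment:
    """Hypothetical tracker for T_map_odom (odometry frame -> map frame)."""

    def __init__(self, jump_threshold_m: float = 2.0, blend: float = 0.2):
        self.T_map_odom: Optional[Pose2] = None
        self.jump_threshold_m = jump_threshold_m
        self.blend = blend  # fraction of each correction applied per update

    def on_relocalization(self, T_map_vehicle: Pose2, T_odom_vehicle: Pose2) -> str:
        measured = compose(T_map_vehicle, inverse(T_odom_vehicle))
        if self.T_map_odom is None:
            self.T_map_odom = measured
            return "initialized"
        dx = measured[0] - self.T_map_odom[0]
        dy = measured[1] - self.T_map_odom[1]
        dt = wrap(measured[2] - self.T_map_odom[2])
        if math.hypot(dx, dy) > self.jump_threshold_m:
            self.T_map_odom = None  # inconsistent with history: require relocalization
            return "reset"
        x, y, t = self.T_map_odom
        self.T_map_odom = (x + self.blend * dx, y + self.blend * dy,
                           wrap(t + self.blend * dt))
        return "blended"

    def vehicle_in_map(self, T_odom_vehicle: Pose2) -> Pose2:
        if self.T_map_odom is None:
            raise RuntimeError("not localized in the map")
        return compose(self.T_map_odom, T_odom_vehicle)
```

Between relocalizations, every odometry pose is mapped through the current `T_map_odom`, so the output stays smooth even when map fixes arrive irregularly.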

4) Global Consistency and Map Updating

As the vehicle operates, it continuously generates new observations that may reveal defects in the map or changes in the environment. Therefore, the system needs two capabilities: first, to transmit online observations back to the offline side for fusion and map maintenance; second, to handle map version changes on the online side (e.g., receiving new map versions pushed from the cloud).

The online system should possess certain fault tolerance to handle inconsistencies in the map, employing robust data association strategies and assigning appropriate weights to map constraints to avoid localization failures caused by a single erroneous match.
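One common way to weight map constraints robustly is a Huber kernel applied through iteratively reweighted least squares. The sketch below reduces this to estimating a single scalar from several map-derived measurements, one of which comes from an erroneous match; the function names and `delta` are assumptions. In a full optimizer the kernel would wrap each map residual instead (Ceres, for example, provides `HuberLoss`).

```python
from typing import Sequence


def huber_weight(residual: float, delta: float) -> float:
    """IRLS weight for the Huber loss: 1 inside delta, delta/|r| outside."""
    a = abs(residual)
    return 1.0 if a <= delta else delta / a


def robust_mean(measurements: Sequence[float], delta: float = 0.5, iters: int = 20) -> float:
    """Huber-weighted estimate of one value via iteratively reweighted least squares."""
    est = sorted(measurements)[len(measurements) // 2]  # start from the (upper) median
    for _ in range(iters):
        w = [huber_weight(m - est, delta) for m in measurements]
        est = sum(wi * m for wi, m in zip(w, measurements)) / sum(w)
    return est
```

With measurements `[10.0, 10.1, 9.9, 10.05, 25.0]`, the plain mean is pulled above 13, while the Huber estimate stays near 10 because the outlier's weight shrinks as its residual grows.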

Upon receiving data collected by multiple vehicles or multiple runs, the offline side should perform map merging, remove low-confidence landmarks, add new landmarks, and generate a new map version through global bundle adjustment. Version control, timestamp management, and geographically partitioned storage are essential engineering considerations in this process.
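The "remove low-confidence landmarks" step can be driven by simple per-landmark statistics accumulated across runs: how often a landmark should have been visible versus how often it was actually matched. This is a minimal sketch under that assumption; the names and thresholds are hypothetical, and adding new landmarks and the global bundle adjustment are not shown.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Set, Tuple


@dataclass
class LandmarkStats:
    expected: int = 0  # runs in which the landmark was in the camera's view
    observed: int = 0  # runs in which it was actually matched


def merge_runs(stats: Dict[int, LandmarkStats],
               runs: Iterable[Tuple[Set[int], Set[int]]],
               min_expected: int = 3,
               min_ratio: float = 0.3) -> Set[int]:
    """Update statistics from runs and return ids of landmarks to remove.
    Each run is (landmark ids that were in view, landmark ids actually matched)."""
    for in_view, matched in runs:
        for lm in in_view:
            s = stats.setdefault(lm, LandmarkStats())
            s.expected += 1
            if lm in matched:
                s.observed += 1
    # Only judge landmarks with enough evidence, so rarely visited ones are not purged.
    return {lm for lm, s in stats.items()
            if s.expected >= min_expected and s.observed / s.expected < min_ratio}
```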

5) Perception and Semantic Fusion

Purely geometric features have clear limitations in large-scale, long-term operation. Combining sparse mapping with semantic information (e.g., using traffic signs, lane markings, and light poles as semantic landmarks) significantly improves relocalization robustness across seasons and lighting conditions.

A common practice currently is to bind semantic labels to 3D points in the offline map and use semantic information for strong verification during online matching. Semantic features are generally more stable (e.g., traffic sign positions are unlikely to change) and offer greater interpretability for end-to-end systems.
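Semantic verification of candidate matches can be as simple as rejecting pairs whose labels disagree. This sketch assumes online labels come from some detection or segmentation network (not specified in the text) and keeps unlabeled features on either side so that purely geometric points still contribute; the function name is hypothetical.

```python
from typing import Dict, List, Optional, Tuple


def semantic_filter(matches: List[Tuple[int, int]],
                    query_labels: Dict[int, Optional[str]],
                    map_labels: Dict[int, Optional[str]]) -> List[Tuple[int, int]]:
    """Drop 2D-3D matches whose semantic labels contradict each other."""
    kept = []
    for qi, mi in matches:
        ql, ml = query_labels.get(qi), map_labels.get(mi)
        # Unlabeled (None) on either side: no semantic evidence, keep the match.
        if ql is None or ml is None or ql == ml:
            kept.append((qi, mi))
    return kept
```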

6) Key Algorithms and Toolchains

To facilitate the integration of sparse mapping and visual SLAM, commonly used technical components include bag-of-words retrieval (e.g., DBoW2/3) and global descriptors (e.g., NetVLAD) for coarse retrieval, feature matching (e.g., ORB, SuperPoint+SuperGlue) for fine matching, PnP+RANSAC for pose estimation, and sliding window optimization or pose graph optimization (using tools like g2o, Ceres, GTSAM) for local and global optimization.

To enhance efficiency, KD-tree/FLANN is employed for nearest neighbor search, inverted indices are used to accelerate retrieval, and geographic partitioning mechanisms restrict the search range and reduce memory usage. For large-scale maps, keyframe thinning and landmark downsampling are typically performed to ensure the online system only loads local submaps near the current position.
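Geographic partitioning can be sketched with a fixed square grid in a local metric frame: landmarks are bucketed by tile, and only tiles overlapping a square around the vehicle are loaded. The flat x/y grid, the tile size, and the function names are assumptions; real deployments may use other tiling schemes.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

TileKey = Tuple[int, int]


def tile_of(x: float, y: float, tile_size_m: float) -> TileKey:
    return (math.floor(x / tile_size_m), math.floor(y / tile_size_m))


def build_tiles(landmarks: Iterable[Tuple[int, float, float]],
                tile_size_m: float) -> Dict[TileKey, List[int]]:
    """Bucket (landmark_id, x, y) entries by grid tile (done once, offline)."""
    tiles = defaultdict(list)
    for lm_id, x, y in landmarks:
        tiles[tile_of(x, y, tile_size_m)].append(lm_id)
    return dict(tiles)


def tiles_to_load(x: float, y: float, radius_m: float, tile_size_m: float) -> Set[TileKey]:
    """All tiles overlapping the square [x-r, x+r] x [y-r, y+r] around the vehicle."""
    x0, y0 = tile_of(x - radius_m, y - radius_m, tile_size_m)
    x1, y1 = tile_of(x + radius_m, y + radius_m, tile_size_m)
    return {(i, j) for i in range(x0, x1 + 1) for j in range(y0, y1 + 1)}
```

The local submap is then the union of `tiles[k]` for each key returned by `tiles_to_load` that exists in the map; tiles can be loaded and evicted as the vehicle moves.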

Common Issues and Countermeasures in Combining Sparse Mapping with Visual SLAM

1) Illumination and Seasonal Changes

Drastic illumination changes or seasonal transitions significantly reduce matching success rates based on appearance descriptors. To address this, descriptors more robust to illumination variations (e.g., deep learning-based descriptors) can be used; semantic landmarks (e.g., traffic signs) can be introduced as supplements; color normalization or histogram matching can be applied during preprocessing; and if possible, data collected at different times of the day can be incorporated during offline map construction to generate multiple map versions for online system selection as needed.
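As one concrete preprocessing option, the sketch below implements global histogram equalization on a flattened 8-bit grayscale image, a close relative of the histogram matching mentioned above (it maps to a flat target histogram rather than to a reference image). It is a pure-Python illustration; in practice a library routine such as OpenCV's `cv2.equalizeHist` would be used.

```python
from typing import List, Sequence


def equalize_histogram(pixels: Sequence[int], levels: int = 256) -> List[int]:
    """Global histogram equalization for flattened 8-bit grayscale values."""
    if not pixels:
        return []
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    cdf, running = [], 0
    for h in hist:
        running += h
        cdf.append(running)
    n = len(pixels)
    cdf_min = next(c for c in cdf if c > 0)
    if n == cdf_min:  # constant image: nothing to stretch
        return list(pixels)
    # Standard mapping: (cdf(v) - cdf_min) / (N - cdf_min) scaled to [0, levels-1].
    lut = [round((c - cdf_min) / (n - cdf_min) * (levels - 1)) for c in cdf]
    return [lut[p] for p in pixels]
```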

2) Dynamic Objects and Erroneous Matching

Dynamic objects such as vehicles and pedestrians on the road can lead to outliers in map matching. Therefore, dynamic features should be filtered out during the offline stage (judged by consistency across multiple observations), and strict RANSAC parameters and geometric verification mechanisms should be set during online matching to avoid erroneous 2D-3D matches affecting localization results. Motion segmentation or semantic segmentation techniques can also be employed to mask feature points on moving objects.
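The offline consistency check can be sketched as follows, assuming each landmark has already been associated across mapping sessions and triangulated once per session. A landmark is kept only if it appears in enough sessions and its positions agree; a parked car seen once, or a point whose position wanders, is dropped. Names and thresholds are hypothetical.

```python
import math
from typing import Dict, List, Set, Tuple

Point3 = Tuple[float, float, float]


def static_landmarks(observations: Dict[int, List[Point3]],
                     min_sessions: int = 3,
                     max_spread_m: float = 0.3) -> Set[int]:
    """observations: landmark id -> one triangulated position per session."""
    keep = set()
    for lm_id, pts in observations.items():
        if len(pts) < min_sessions:
            continue  # seen too rarely: possibly a transient or moved object
        n = len(pts)
        centroid = (sum(p[0] for p in pts) / n,
                    sum(p[1] for p in pts) / n,
                    sum(p[2] for p in pts) / n)
        spread = max(math.dist(p, centroid) for p in pts)
        if spread <= max_spread_m:
            keep.add(lm_id)
    return keep
```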

3) Relocalization Failure and Insufficient Map Coverage

When the vehicle enters an area not covered by the map, localization based on that map naturally fails. Therefore, map coverage should be planned in advance; a fallback mechanism (e.g., downgrading to VIO+GNSS localization) should be set up in the system; and newly collected data should be transmitted back to the offline side for expanding map coverage.
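The fallback mechanism amounts to a small decision rule over which localization source to trust. The mode names, the fix-age threshold, and the inputs below are assumptions for illustration.

```python
from enum import Enum
from typing import Optional


class LocMode(Enum):
    MAP = "map_relocalized"
    VIO_GNSS = "vio_gnss"
    VIO_ONLY = "vio_only"


def select_mode(in_map_coverage: bool,
                seconds_since_map_fix: Optional[float],
                gnss_ok: bool,
                max_fix_age_s: float = 2.0) -> LocMode:
    """Pick the localization source, degrading gracefully outside map coverage."""
    recent_fix = (seconds_since_map_fix is not None
                  and seconds_since_map_fix <= max_fix_age_s)
    if in_map_coverage and recent_fix:
        return LocMode.MAP
    if gnss_ok:
        return LocMode.VIO_GNSS
    return LocMode.VIO_ONLY
```

Frames captured while the mode is not `MAP` and the vehicle is outside coverage are natural candidates to queue for upload, since they describe exactly the areas the map is missing.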

4) Map Version Management and Multi-Vehicle Collaboration

When many vehicles share and contribute to the same map, updates become frequent. Version control and rollback mechanisms must be established so that a new map version does not affect online services until it has been thoroughly validated. Geographically partitioned map loading can significantly reduce memory usage and retrieval latency.
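A minimal per-region version store illustrates the stage, validate, activate, and roll back cycle. The class, its methods, and the idea of passing in a validation callback are hypothetical; a real deployment would persist this state and run validation offline or in a canary fleet.

```python
from typing import Callable, Dict, List, Optional


class MapVersionStore:
    """Hypothetical per-region bookkeeping for map versions."""

    def __init__(self):
        self.history: Dict[str, List[str]] = {}  # region -> activated versions, oldest first
        self.staged: Dict[str, str] = {}

    def stage(self, region: str, version: str) -> None:
        self.staged[region] = version

    def activate(self, region: str, validate: Callable[[str], bool]) -> bool:
        version = self.staged.pop(region, None)
        if version is None or not validate(version):
            return False  # keep serving the currently active version
        self.history.setdefault(region, []).append(version)
        return True

    def rollback(self, region: str) -> Optional[str]:
        versions = self.history.get(region, [])
        if len(versions) > 1:
            versions.pop()
        return self.active(region)

    def active(self, region: str) -> Optional[str]:
        versions = self.history.get(region)
        return versions[-1] if versions else None
```

Keying versions by region fits the geographically partitioned loading described above: one tile can be rolled back without touching its neighbors.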

5) Computational Resources and Latency Trade-offs

Online matching and local optimization both consume computational resources. In practical systems, relocalization and map matching tasks are typically placed in separate threads with time budgets (e.g., the entire retrieval and matching process is controlled within tens to hundreds of milliseconds). When time is insufficient, the system can downgrade to using only visual odometry for prediction or output poses with lower confidence. Global optimization is performed at a low frequency in the cloud or background to avoid affecting real-time control loops.
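A time-budgeted relocalization call can be sketched with a dedicated worker thread and a timeout on its result: if the budget expires, the odometry prediction is returned and flagged as low confidence. The pose representation, the labels, and the 100 ms default are assumptions; production systems would typically do this in C++ with their own scheduler.

```python
import concurrent.futures as cf
import time
from typing import Callable, Tuple

Pose = Tuple[float, float, float]  # (x, y, yaw), assumed representation

# One dedicated worker keeps relocalization off the tracking thread.
_reloc_executor = cf.ThreadPoolExecutor(max_workers=1)


def relocalize_with_budget(relocalize: Callable[[], Pose],
                           odom_prediction: Pose,
                           budget_s: float = 0.1) -> Tuple[Pose, str]:
    """Return the map-based pose if it arrives within budget, else the odometry pose."""
    future = _reloc_executor.submit(relocalize)
    try:
        return future.result(timeout=budget_s), "map"
    except cf.TimeoutError:
        # A running thread cannot be interrupted; its late result is simply ignored.
        future.cancel()
        return odom_prediction, "odometry_low_confidence"


if __name__ == "__main__":
    fast = lambda: (12.0, 3.0, 0.1)
    slow = lambda: (time.sleep(0.3), (12.0, 3.0, 0.1))[1]
    print(relocalize_with_budget(fast, (11.9, 3.0, 0.1)))                 # map result
    print(relocalize_with_budget(slow, (11.9, 3.0, 0.1), budget_s=0.05))  # odometry fallback
```

With a single worker, a relocalization that overruns also delays the next one, which is itself a useful signal that the budget or the candidate count should be reduced.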

6) Accuracy Evaluation and Metrics

To continuously evaluate system performance, metrics such as absolute trajectory error, relative pose error, and relocalization success rate are commonly used. During evaluation, the coordinate system, alignment method (e.g., similarity transformation alignment using the Umeyama algorithm), and test scenarios (e.g., day/night, rainy/snowy weather, urban/rural areas) must be specified.
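For intuition, here is the 2D special case of Umeyama similarity alignment followed by the absolute trajectory error (RMSE of aligned positions). In 2D the optimal rotation angle has a closed form, `atan2(Σ cross, Σ dot)` over centered point pairs; the full 3D method instead uses an SVD of the cross-covariance matrix. The function names and the restriction to 2D positions are simplifying assumptions.

```python
import math
from typing import Sequence, Tuple

Point2 = Tuple[float, float]


def umeyama_2d(src: Sequence[Point2], dst: Sequence[Point2]) -> Tuple[float, float, float, float]:
    """Similarity (s, theta, tx, ty) minimizing sum |dst - (s R(theta) src + t)|^2."""
    n = len(src)
    mx, my = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    nx, ny = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    dot = cross = var = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay, bx, by = px - mx, py - my, qx - nx, qy - ny
        dot += ax * bx + ay * by      # sum of a·b
        cross += ax * by - ay * bx    # sum of a×b (z component)
        var += ax * ax + ay * ay      # sum of |a|^2
    theta = math.atan2(cross, dot)
    s = math.hypot(dot, cross) / var
    c, sn = math.cos(theta), math.sin(theta)
    tx = nx - s * (c * mx - sn * my)
    ty = ny - s * (sn * mx + c * my)
    return s, theta, tx, ty


def ate_rmse(estimated: Sequence[Point2], ground_truth: Sequence[Point2]) -> float:
    """Absolute trajectory error after similarity alignment of estimated onto ground truth."""
    s, theta, tx, ty = umeyama_2d(estimated, ground_truth)
    c, sn = math.cos(theta), math.sin(theta)
    sq = 0.0
    for (px, py), (gx, gy) in zip(estimated, ground_truth):
        ex = s * (c * px - sn * py) + tx - gx
        ey = s * (sn * px + c * py) + ty - gy
        sq += ex * ex + ey * ey
    return math.sqrt(sq / len(estimated))
```

A trajectory that differs from ground truth only by rotation, translation, and uniform scale yields an ATE of (numerically) zero, which is why the alignment method must be reported alongside the metric.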

7) Privacy, Security, and Map Update Frequency

Sparse maps may contain sensitive information (e.g., residential house numbers). When uploading or sharing map data, desensitization processing is required, or only structural features truly necessary for localization should be retained. Additionally, the map update frequency needs to balance 'timeliness' and 'stability.' Frequent updates can cause the online side to constantly switch map versions, while overly delayed updates can lead to outdated maps. A common compromise is to rapidly upload statistical information of local observations in small batches, allowing the offline system to perform batch reconstruction and release thoroughly validated new map versions.

Final Remarks

Combining sparse mapping with visual SLAM essentially integrates the advantages of 'offline global accuracy' and 'online real-time performance.' It is crucial to avoid piling all functions into a single module and instead adopt a layered, fault-tolerant architecture. The offline side should focus on creating high-quality maps (multi-view, multi-time), while the online side should design a multi-layered system including short-term visual odometry, map-based relocalization, and background map updating. Long-term stable operation is ensured through semantic enhancement, robust matching, and version management.

A stable VIO can be deployed first as the odometry, followed by incorporating a bag-of-words model for rapid candidate retrieval and using PnP+RANSAC for initial relocalization. Subsequently, pose optimization is performed in a sliding window to refine the results, while observation data is periodically transmitted back to maintain the offline map. Following this layered design, autonomous driving systems will exhibit greater stability and maintainability in real-world conditions, making them easier to scale to large-scale, multi-vehicle deployment scenarios.

-- END --