What Factors Influence the Quality of Autonomous Driving Cameras?

09/15 2025

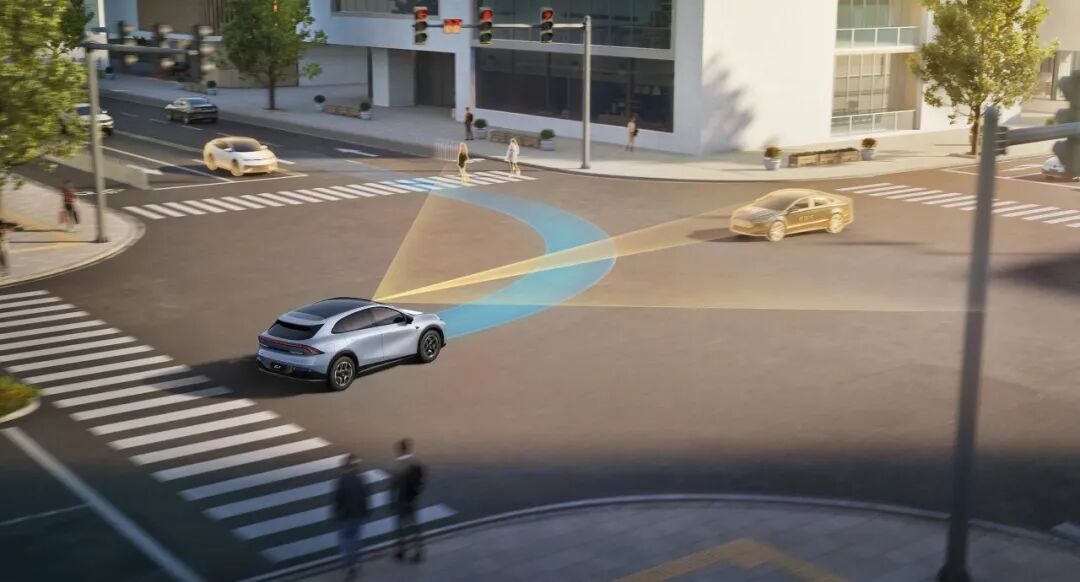

Cameras play a pivotal role in autonomous driving systems. They are far from mere devices that "snap a few extra pictures." Instead, they serve as a complete perception subsystem spanning optics, electronics, and software. The goal of integrating a lens, an image sensor, an ISP (Image Signal Processor), and a data link is to generate "trustworthy images" that algorithms can use reliably and engineers can maintain over the long term. So, what exactly determines the quality of autonomous driving cameras?

Key Evaluation Factors for Camera Quality

When assessing camera capabilities, it's essential to consider not just physical quantitative metrics but also how these metrics interact in real-world scenarios and ultimately impact the success rate of perception algorithms and the reliability of vehicle decisions. In essence, a camera's "quality" should be judged not solely by extreme numerical values but by its ability to perform tasks effectively, robustly, and maintainably within a specific scenario, algorithm, and vehicle architecture.

Pixel count is frequently emphasized when discussing cameras, yet pixels alone do not equate to visual capability. While pixel count determines the theoretical resolution limit, the size and quantum efficiency of individual pixels are often more critical. Larger pixels capture more photons, enhancing the signal-to-noise ratio (SNR) in low-light conditions. Conversely, dividing the same sensor area into more, smaller pixels may increase nominal resolution but can exacerbate noise in low-light scenes. Designers must strike a balance between "pixel density" and "single-pixel sensitivity," especially in systems requiring both long-range forward identification (detecting small, distant targets) and panoramic views (covering wide angles). Additionally, parameters like sensor quantum efficiency, dark current, and readout noise directly affect low-light performance, necessitating empirical comparisons.
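The pixel-size trade-off above can be illustrated with a rough noise model. The sketch below assumes a simple shot-noise, dark-current, and read-noise model. The function name and the default values (quantum efficiency 0.6, read noise 2 e-, dark current 10 e-/s) are illustrative assumptions, not figures for any real sensor.

```python
import math

def pixel_snr_db(photon_flux_per_um2_s, pixel_pitch_um, exposure_s,
                 quantum_efficiency=0.6, read_noise_e=2.0,
                 dark_current_e_per_s=10.0):
    """Estimate single-pixel SNR in dB (all defaults are assumed values)."""
    area_um2 = pixel_pitch_um ** 2
    # Collected signal electrons scale with pixel area, exposure, and QE.
    signal_e = photon_flux_per_um2_s * area_um2 * exposure_s * quantum_efficiency
    if signal_e <= 0:
        raise ValueError("signal must be positive")
    dark_e = dark_current_e_per_s * exposure_s
    # Shot noise and dark noise are Poisson (variance = mean); read noise adds in quadrature.
    noise_e = math.sqrt(signal_e + dark_e + read_noise_e ** 2)
    return 20.0 * math.log10(signal_e / noise_e)

# Same low-light scene and exposure, two pixel pitches on the same sensor area.
for pitch_um in (2.1, 3.0):
    snr = pixel_snr_db(1000.0, pitch_um, exposure_s=0.01)
    print(f"{pitch_um} um pixel: {snr:.1f} dB")
```

In this simplified model the larger pixel comes out a few dB ahead at the same illuminance, which is the effect described above. A real comparison still needs measured quantum efficiency, dark current, and readout noise for the candidate sensors.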

Dynamic range is another frequently tested metric in practical applications. High-contrast lighting in urban environments at dawn or dusk, intense backlighting at tunnel entrances and exits, and bright reflections on wet roads push camera dynamic ranges to their limits. Although sensor physical dynamic ranges are constrained, cameras often employ HDR (High Dynamic Range) solutions, such as multi-frame exposure fusion, pixel-level multi-gain, or rapid gain switching, to extend the usable lighting range. However, HDR is not a universal remedy; it may introduce motion artifacts or time delays, particularly when vehicles or targets move rapidly, causing "ghosting" in multi-frame fusion. Thus, evaluating a camera requires examining not just labeled dB or stop values but also the manufacturer's HDR implementation details and algorithms for mitigating artifacts in real-time moving scenarios. For enterprises, a more effective approach is to test cameras in tunnels, high-contrast parking lots, and dusk scenes with pedestrian crossings, observing detail retention at target edges and shadows rather than relying solely on static test images.
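Datasheets quote dynamic range in either dB or stops, so it helps to convert between them before comparing products. The sketch below uses the common convention that dynamic range is the ratio of the largest usable signal to the noise floor, expressed as 20·log10 of that ratio. The function names and example numbers are assumptions for illustration.

```python
import math

def dynamic_range_db(max_signal_e, noise_floor_e):
    """Dynamic range in dB from the largest usable signal and the noise floor (electrons)."""
    return 20.0 * math.log10(max_signal_e / noise_floor_e)

def db_to_stops(db):
    """Convert dB to photographic stops; one stop is a factor of 2, about 6.02 dB."""
    return db / (20.0 * math.log10(2.0))

# Hypothetical single-exposure sensor: 10,000 e- full well, 1 e- noise floor.
single_exposure_db = dynamic_range_db(10_000, 1.0)
print(f"{single_exposure_db:.0f} dB = {db_to_stops(single_exposure_db):.1f} stops")
# A labeled "120 dB" HDR figure expressed in stops.
print(f"120 dB = {db_to_stops(120.0):.1f} stops")
```

The conversion only makes labeled figures comparable. It says nothing about ghosting or latency, which still have to be checked in moving scenes.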

The interplay between exposure, frame rate, and shutter type is also crucial in autonomous driving scenarios. Higher frame rates enable smoother target trajectories and lower latency, which are beneficial for highway driving. However, shorter exposure times per frame reduce SNR, resulting in darker and noisier images. The choice between global and rolling shutters is not merely a cost consideration—global shutters avoid geometric distortions at high speeds, favoring edge- and geometry-based vision algorithms, but often sacrifice some light sensitivity or increase costs. Rolling shutters perform well in static or slow-motion scenes and may offer pixel design advantages. Therefore, system designers should consider these dimensions holistically based on target applications. For consumer-grade mass-produced L2/L3 models, cost is a significant constraint, while high-end global shutters with superior optics may be necessary for controlled L4 campus or industrial vehicles.
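The exposure and shutter trade-offs can be estimated with a pinhole approximation. A target moving laterally at speed v at distance Z shifts on the image by roughly f_px · v · t / Z pixels over a time t, where f_px is the focal length in pixels. The sketch below applies this to exposure time (motion blur) and to rolling-shutter readout time (skew between the first and last row). The function name and all numbers are assumed for illustration.

```python
def image_shift_px(lateral_speed_mps, distance_m, duration_s, focal_px):
    """Approximate image-plane shift in pixels of a laterally moving target.

    Pinhole model, target near the optical axis; ignores lens distortion.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return focal_px * lateral_speed_mps * duration_s / distance_m

FOCAL_PX = 2000.0      # assumed focal length in pixels
SPEED_MPS = 15.0       # assumed lateral speed of a crossing vehicle
DISTANCE_M = 20.0      # assumed range to the target

# Motion blur accumulated during a 5 ms exposure.
blur = image_shift_px(SPEED_MPS, DISTANCE_M, 0.005, FOCAL_PX)
# Rolling-shutter skew if the full frame takes 20 ms to read out.
skew = image_shift_px(SPEED_MPS, DISTANCE_M, 0.020, FOCAL_PX)
print(f"blur: {blur:.1f} px, rolling-shutter skew: {skew:.1f} px")
```

With these assumed numbers the skew is several times larger than the blur, which is why readout time matters for geometry-based algorithms. A global shutter removes the skew term but not the blur term.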

Optics are often underestimated, yet the lens is what projects the external scene onto the sensor, so every downstream stage inherits its limits. The field of view determines coverage; wide angles reduce blind spots but decrease effective resolution and increase distortion at the periphery, imposing additional preprocessing burdens on detection algorithms. Lens MTF (Modulation Transfer Function) directly reflects detail retention, with MTF50 being a common metric: the spatial frequency at which image contrast falls to half of its low-frequency value. A camera with "many pixels" paired with a low-quality lens may lose edge details entirely, resulting in perception performance inferior to a lower-pixel, higher-optical-quality setup. Aperture and depth of field also matter: large apertures benefit low-light conditions but narrow the depth of field and may introduce aberrations, while small apertures extend the depth of field but reduce light intake. Anti-reflective lens coatings and shading (lens hood) designs are often decisive in intense backlighting or direct sunlight, and they require real-world validation on prototype vehicles.
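The coverage-versus-resolution trade-off can be put into numbers with a rectilinear pinhole model. The sketch below computes the horizontal field of view and how many pixels a target of a given width spans at a given distance. The function names and lens and sensor values are assumptions, and the model does not hold for fisheye lenses or at the distorted periphery.

```python
import math

def horizontal_fov_deg(sensor_width_mm, focal_length_mm):
    """Horizontal field of view of an ideal rectilinear lens, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))

def pixels_on_target(target_width_m, distance_m, focal_length_mm, pixel_pitch_um):
    """Pixels spanned by a target near the image center (pinhole model)."""
    focal_px = focal_length_mm * 1000.0 / pixel_pitch_um
    return focal_px * target_width_m / distance_m

# Assumed sensor: 1920 columns at 3 um pitch, so 5.76 mm wide.
SENSOR_WIDTH_MM = 1920 * 3.0 / 1000.0
for focal_mm in (3.0, 6.0):
    fov = horizontal_fov_deg(SENSOR_WIDTH_MM, focal_mm)
    # A 0.5 m wide pedestrian at 100 m.
    px = pixels_on_target(0.5, 100.0, focal_mm, pixel_pitch_um=3.0)
    print(f"f={focal_mm} mm: FOV {fov:.1f} deg, pedestrian spans {px:.1f} px")
```

Halving the focal length widens the view but halves the pixels on a distant target. This geometric count is an upper bound: a lens with poor MTF at that spatial frequency delivers less usable detail than the pixel count suggests.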

Spectral response differences become pronounced in certain night vision or enhancement scenarios. Human visible light sensitivity does not fully align with sensor response curves; some sensors are more sensitive to near-infrared, which is advantageous for night vision enhancement with infrared light sources. Monochrome sensors typically exhibit better nighttime SNR than color sensors due to the absence of Bayer mosaic filters, allowing each pixel to receive more light with less noise interference and higher spectral utilization. Thus, in nighttime safety-critical scenarios (e.g., unlit rural roads), selecting monochrome-optimized sensors or incorporating near-infrared enhancement modules at specific angles significantly improves the detectability of distant figures or road signs. Algorithm training and white balance adjustments must also account for these spectral characteristics.

The conflict between noise, noise reduction, and detail preservation persists in visual systems. Many technical solutions employ noise reduction strategies to create visually "cleaner" images, but excessive smoothing can erase valuable high-frequency information, leading to missed detections of distant small targets. Ideal ISPs should allow adjustable noise reduction intensity or directly output selectable RAW streams for adaptive algorithm processing. When selecting cameras, evaluating SNR curves (SNR under varying illuminance) and detail retention across different ISO/gain settings is more valuable than relying solely on a manufacturer's claim of "good night vision." Furthermore, high-quality cameras should maintain controllable noise spectra under high gain, enabling algorithms to compensate via temporal or spatial filtering without complete hardware dependence.

Time synchronization and latency characteristics are central to multi-sensor fusion. Cameras must align temporally with IMUs (Inertial Measurement Units), radars, LiDARs (Light Detection and Ranging), and other sensors. Any timestamp jitter or camera-side uncertainty can bias multi-sensor fusion algorithm outputs, affecting localization and tracking. High-precision hardware triggering, stable inter-frame delays, and configurable timestamp outputs are essential for automotive-grade cameras. A common engineering pitfall is high-quality images marred by inaccurate timestamp and CAN (Controller Area Network) message alignment, causing "drift" in forward target fusion results and reduced detection rates. Such issues stem from sensor timing problems, not algorithmic flaws.
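To see why timestamp quality matters, it helps to convert a timing error into a position error and to measure jitter from logged frame timestamps. The sketch below is a minimal illustration. The function names and sample timestamps are hypothetical, and it assumes the timestamps are already on a common clock.

```python
import statistics

def frame_interval_jitter(timestamps_s):
    """Return (mean interval, standard deviation, worst deviation) in seconds."""
    if len(timestamps_s) < 2:
        raise ValueError("need at least two timestamps")
    intervals = [b - a for a, b in zip(timestamps_s, timestamps_s[1:])]
    mean = statistics.mean(intervals)
    worst = max(abs(i - mean) for i in intervals)
    return mean, statistics.pstdev(intervals), worst

def fusion_position_error_m(relative_speed_mps, timestamp_error_s):
    """Apparent position offset of a target caused by a timestamp error."""
    return relative_speed_mps * timestamp_error_s

# Hypothetical log of four frame timestamps from a nominal 30 fps camera.
mean, std, worst = frame_interval_jitter([0.000, 0.033, 0.067, 0.100])
print(f"mean {mean * 1e3:.2f} ms, std {std * 1e3:.2f} ms, worst {worst * 1e3:.2f} ms")
# A 10 ms misalignment at 30 m/s relative speed.
print(f"offset: {fusion_position_error_m(30.0, 0.010):.2f} m")
```

An offset of this size between camera and radar or LiDAR tracks is enough to look like drift in the fused output, even though the image itself is sharp.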

Data links and compression strategies significantly impact camera deployment. RAW data is invaluable during training and validation but consumes substantial bandwidth and increases storage costs in mass production, necessitating compression. Video compression artifacts at low bitrates strongly affect edge and small target detection, especially with inter-frame compression, where a lost keyframe leaves a short gap in target information until the next keyframe arrives. Many systems adopt configurable compression strategies, preserving high-quality or low-compression outputs for critical scenes or cameras while applying higher compression elsewhere. Further advancements involve lightweight preprocessing at the camera end (e.g., ROI [Region of Interest] priority encoding, edge preservation) to transmit critical information to the central processor within limited bandwidth.
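The bandwidth pressure is easy to quantify. The sketch below computes the uncompressed data rate of a stream and the compression ratio needed to fit a given link budget. The function names, resolutions, bit depth, and the 100 Mbps budget are assumed example values, not recommendations.

```python
def raw_bandwidth_mbps(width, height, bits_per_pixel, fps):
    """Uncompressed video data rate in megabits per second (payload only)."""
    return width * height * bits_per_pixel * fps / 1e6

def required_compression_ratio(raw_mbps, link_budget_mbps):
    """How much the stream must shrink to fit the available link budget."""
    if link_budget_mbps <= 0:
        raise ValueError("link budget must be positive")
    return raw_mbps / link_budget_mbps

# Assumed 12-bit RAW streams at 30 fps.
for name, (w, h) in {"2 MP": (1920, 1080), "8 MP": (3840, 2160)}.items():
    rate = raw_bandwidth_mbps(w, h, bits_per_pixel=12, fps=30)
    ratio = required_compression_ratio(rate, link_budget_mbps=100.0)
    print(f"{name}: {rate:.1f} Mbps raw, {ratio:.1f}x compression for 100 Mbps")
```

The figures ignore protocol overhead and blanking, so real links need additional margin. They still show why per-camera or per-scene compression settings are attractive when several cameras share one budget.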

An often overlooked yet critical issue is environmental adaptability and long-term reliability. Vehicle-mounted cameras face dust, rain, frost, vibration, and temperature cycles. Lens anti-fog coatings, heating designs, IP (Ingress Protection) ratings, anti-vibration structures, and temperature resistance directly affect camera usability in extreme climates. Common testing practices include temperature cycling, salt spray aging, vibration fatigue, and long-term optical aging tests under high UV exposure. Many "cheap and good" cameras degrade significantly after a year or two due to lens coating peeling, seal failure, or electrical contact corrosion, becoming maintenance nightmares and incurring extra costs after large-scale deployment. Thus, long-term engineering metrics must be considered during selection.

Beyond hardware, software support, calibration, and supply chain stability are also vital for determining a "good camera." Autonomous driving systems rely on precise intrinsic and extrinsic camera calibrations; any minor displacement or rotation amplifies errors during 3D geometric reconstruction. Factors like stable factory calibration data, support for online or rapid recalibration, and user-friendly calibration tools and processes directly influence system integration speed and post-sale maintenance costs. Equally important are driver maturity, firmware upgrade paths, and remote diagnostic capabilities, as frequent firmware defects or upgrade interruptions can disrupt fleet operations. For commercial operations, these maintenance costs often exceed initial equipment price differences.
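The way a small mounting rotation amplifies with distance can be sketched with basic trigonometry. A yaw error of angle θ shifts a target at distance Z by about Z · tan(θ) laterally, and shifts image content near the center by about f_px · tan(θ) pixels. The function names and numbers below are illustrative assumptions.

```python
import math

def lateral_error_m(distance_m, yaw_error_deg):
    """Lateral position error at a given range caused by an extrinsic yaw error."""
    return distance_m * math.tan(math.radians(yaw_error_deg))

def center_pixel_shift(focal_px, yaw_error_deg):
    """Image shift near the optical axis caused by the same yaw error (pinhole model)."""
    return focal_px * math.tan(math.radians(yaw_error_deg))

# A 0.1 degree yaw error, e.g. after a bracket is slightly disturbed (assumed value).
for distance in (20.0, 50.0, 100.0):
    print(f"{distance:.0f} m: {lateral_error_m(distance, 0.1):.3f} m lateral error")
print(f"pixel shift at f=2000 px: {center_pixel_shift(2000.0, 0.1):.2f} px")
```

A shift of a few pixels is hard to notice in the image, yet at 100 m it corresponds to a lateral error large enough to matter for lane assignment. That gap is why stable factory calibration and quick recalibration are worth checking during selection.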

How to Evaluate Camera Quality?

So, how can we assess a camera's quality in laboratories and road tests? The safest approach is to divide the evaluation into two layers: controlled metric experiments and scenario-based real-world road tests. In laboratories, repeatable and comparable measurements are essential, including MTF and resolution tests, dynamic range and SNR curve tests, detail retention in low-light and high-light scenes, glare and lens flare tests, geometric distortion measurements, and latency and timestamp jitter assessments. These tests require standard light sources, test charts, and strictly recorded lighting conditions, with results presented as curves and numerical tables for horizontal comparison across solutions. Laboratory data reveals sensor and optical theoretical capability limits under ideal conditions but does not guarantee consistent performance on all streets.

Thus, installing cameras on real vehicles and conducting end-to-end evaluations with the intended perception algorithms is indispensable. Road tests should cover diverse scenarios, including daytime, dusk, nighttime, rain, snow, fog, tunnels, and complex urban lighting. It is recommended to annotate ground truth with LiDAR or high-resolution reference cameras, run the same perception algorithms on every candidate camera, and use detection rates, false alarm rates, localization errors, and target loss times as the final metrics. Do not rely solely on subjective image quality assessments; use algorithm output performance to determine a camera's value for your system. Additionally, long-term durability testing is crucial, particularly observing the stability of lens covers, encapsulation, and electrical interfaces under temperature, humidity, and vibration.
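The end-to-end metrics named above can be tallied with very little code once detections have been matched against ground truth. The sketch below is a minimal illustration. The `RunCounts` schema, the function names, and the sample numbers are hypothetical, and the matching step itself is assumed to have been done elsewhere.

```python
from dataclasses import dataclass

@dataclass
class RunCounts:
    """Tallies for one camera on one road-test run (hypothetical schema)."""
    true_positives: int
    false_negatives: int
    false_positives: int
    frames: int

def detection_rate(c: RunCounts) -> float:
    """Fraction of ground-truth targets that were detected."""
    total = c.true_positives + c.false_negatives
    return c.true_positives / total if total else 0.0

def false_alarms_per_frame(c: RunCounts) -> float:
    """Average number of spurious detections per frame."""
    return c.false_positives / c.frames if c.frames else 0.0

def longest_target_loss_s(detected_flags, frame_period_s):
    """Longest run of consecutive missed frames for one tracked target, in seconds."""
    longest = current = 0
    for seen in detected_flags:
        current = 0 if seen else current + 1
        longest = max(longest, current)
    return longest * frame_period_s

# Two candidate cameras evaluated with the same algorithm on the same route.
runs = {"camera_a": RunCounts(90, 10, 5, 100), "camera_b": RunCounts(80, 20, 2, 100)}
for name, counts in runs.items():
    print(name, detection_rate(counts), false_alarms_per_frame(counts))
```

Keeping the algorithm and route fixed and varying only the camera is what makes these numbers comparable. Splitting the tallies by scenario (tunnel, dusk, rain) usually reveals more than a single aggregate.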

When selecting cameras, a scenario-driven trade-off principle is practical. For systems targeting highway scenarios, prioritize long-range identification capabilities; narrow-field, high-pixel-density lenses with good MTF and high frame rates typically yield the most direct benefits. For complex urban environments, wide-angle views and good near-field performance are more critical, necessitating additional lateral or near-field modules alongside forward main cameras to eliminate blind spots. Parking and low-speed scenarios prioritize near-field details, color accuracy, and ultra-low-light detail retention, not necessarily ultra-high pixels. For high-availability L4 applications, multi-sensor redundancy (multiple cameras + LiDAR + millimeter-wave radar) and strictly automotive-grade camera hardware (including global shutters, automotive-grade thermal designs, and IP protection) are usually necessary.

Final Thoughts

To conclude, here are several practical suggestions. Retain RAW outputs as baseline data during prototype vehicle stages so that algorithm teams can compare different ISP strategies. Conduct joint testing of camera timing, triggering, and synchronization with the vehicle buses before actual deployment, so that timing errors are not mistaken for algorithm problems during system integration. Request detailed environmental reliability reports and long-term degradation data from suppliers, not just initial prototype performance. Document calibration procedures in daily maintenance manuals so that recalibration is repeatable after lens repairs or replacements. The ultimate criterion for "quality" is whether the camera can consistently, predictably, and maintainably complete tasks within your closed loop, not whether it sets a record on any single parameter.

Camera selection and tuning constitute a systematic engineering endeavor. Engineers need to understand optics and sensor physics as well as how sensitive the algorithms are to their input data, while also weighing vehicle cost and maintenance. While technical details abound, the principles are straightforward: rely on data, validate with scenarios, and measure against final algorithm performance. Relying solely on parameter sheets is one-sided and risky; both rigorous laboratory quantification and real-road end-to-end validation are necessary. Pursue optimal image quality while considering long-term reliability and supply chain robustness. By adhering to these principles, you can select cameras truly suitable for your system.
