Waymo's Latest Explorations in Autonomous Driving: World Models, Long-Tail Problems, and the Most Critical Elements

09/15 2025

The assisted driving/autonomous driving industries are expected to eventually converge, as the underlying logic of their algorithm software is the same. In our previous article, 'IAA 2025 Munich Auto Show: Chinese Automakers Transition from Trade Exports to Unstoppable Momentum,' we noted that Chinese companies leading in assisted driving algorithms, such as Momenta, Yuanrong, and DJI, are also pursuing L4 autonomous driving opportunities across Europe and the Middle East, just as dedicated L4 companies are.

This article summarizes a talk given at this year's CVPR by Ms. Wu Chen, head of Waymo's perception algorithm team. Drawing on the author's own experience, it also analyzes autonomous driving algorithms, their current state of development, and what truly matters most for autonomous driving.

Building a World Model

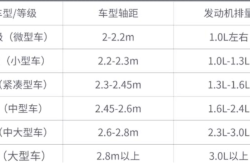

Waymo stated that it has developed a large-scale AI model called the Waymo Foundational Model, which helps its vehicles perceive their surroundings, predict the behavior of other vehicles on the road, simulate scenarios, and make driving decisions.

Functionally, this model resembles large language models (LLMs) such as ChatGPT, which are trained on vast datasets to learn patterns and make predictions. Just as OpenAI and Google have built multimodal models that integrate different types of data (e.g., text, images, audio, or video), Waymo's model integrates data from multiple sensors to understand the vehicle's surroundings.

The Waymo Foundational Model itself is a single large model, but the model that runs on the vehicle is smaller: it is 'distilled' from the large model so that it is compact enough to deploy on board.

The large model acts as a 'teacher' that transfers its knowledge and capabilities to a smaller 'student' model, a technique widely used in generative AI. The student model is optimized for speed and efficiency and runs in real time on each vehicle while retaining the critical decision-making capabilities required for driving.
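The talk does not describe Waymo's distillation recipe. As a general illustration of the teacher-student idea, the sketch below implements the widely used Hinton-style distillation loss in plain Python: the student is trained to match the teacher's temperature-softened output distribution as well as the hard label. The function names, the three-way toy action classes, and the `temperature` and `alpha` values are assumptions for illustration only.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: List[float], q: List[float]) -> float:
    """KL(p || q) for discrete distributions (q must be > 0 wherever p > 0)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(
    teacher_logits: List[float],
    student_logits: List[float],
    label_onehot: List[float],
    temperature: float = 2.0,  # hypothetical value
    alpha: float = 0.5,        # hypothetical weight of the soft (teacher) term
) -> float:
    """Hinton-style loss: alpha * soft-target KL + (1 - alpha) * hard-label cross-entropy."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student_soft = softmax(student_logits, temperature)
    # Scaling by T^2 keeps the soft term's gradient magnitude comparable across temperatures.
    soft_term = kl_divergence(p_teacher, p_student_soft) * temperature ** 2
    p_student = softmax(student_logits)
    hard_term = -sum(y * math.log(p) for y, p in zip(label_onehot, p_student) if y > 0)
    return alpha * soft_term + (1.0 - alpha) * hard_term


# Hypothetical 3-way driving decision: [yield, proceed, stop]
teacher = [4.0, 1.0, 0.5]
label = [1.0, 0.0, 0.0]
good_student = [3.5, 1.0, 0.5]
poor_student = [0.5, 1.0, 4.0]
print(distillation_loss(teacher, good_student, label))
print(distillation_loss(teacher, poor_student, label))
```

A student whose logits match the teacher's gets a zero soft term; the further its softened distribution drifts from the teacher's, the larger the loss. The temperature exposes the teacher's relative preferences among the non-top classes, which is much of the 'knowledge' being transferred.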

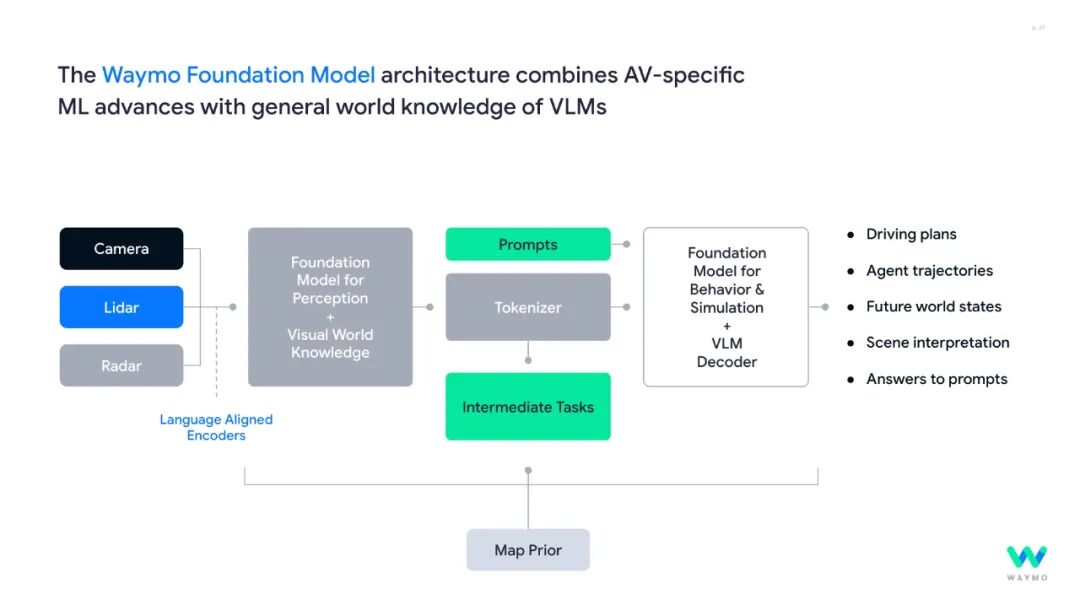

As a result, perception and behavior tasks (perceiving objects, predicting the behavior of other road users, and planning the vehicle's next actions) can all run in real time on the vehicle.

The larger model in the cloud can also simulate real driving environments, allowing for virtual testing and verification of decisions before deployment in Waymo vehicles.

In short, Waymo's world model encodes data from all sensors (cameras, radar, lidar) together with built-in world knowledge, and decodes it into all driving-related tasks: a distilled, smaller version handles perception and control on the vehicle, while the full model runs virtual simulation in the cloud. This design gives the model strong generalization and lets it adapt quickly to different platforms.
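The 'encode all sensors, decode all tasks' structure can be pictured as one shared representation feeding several task heads. The toy sketch below assumes hypothetical per-modality encoders (simple pooling functions), concatenation as the fusion step, and two trivial task heads. It only illustrates the data flow and is not Waymo's architecture, which would use learned neural networks throughout.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Vector = List[float]


@dataclass
class MultiTaskWorldModelSketch:
    """Toy 'encode everything, decode every task' structure (hypothetical names)."""

    encoders: Dict[str, Callable[[Vector], Vector]]  # one encoder per sensor modality
    heads: Dict[str, Callable[[Vector], object]]     # one decoder head per driving task

    def encode(self, sensor_data: Dict[str, Vector]) -> Vector:
        # Encode each modality, then fuse by concatenation into one shared representation.
        shared: Vector = []
        for modality in sorted(sensor_data):  # sorted order keeps the fused layout stable
            shared.extend(self.encoders[modality](sensor_data[modality]))
        return shared

    def decode(self, sensor_data: Dict[str, Vector]) -> Dict[str, object]:
        # Every task head reads the same shared representation.
        shared = self.encode(sensor_data)
        return {task: head(shared) for task, head in self.heads.items()}


def mean_pool(x: Vector) -> Vector:
    return [sum(x) / len(x)]


def max_pool(x: Vector) -> Vector:
    return [max(x)]


model = MultiTaskWorldModelSketch(
    encoders={"camera": mean_pool, "lidar": max_pool, "radar": mean_pool},
    heads={
        "perception": lambda z: max(z) > 0.8,  # toy "obstacle nearby?" head
        "planning": lambda z: "brake" if max(z) > 0.8 else "cruise",  # toy planner head
    },
)
frame = {"camera": [0.1, 0.3], "lidar": [0.2, 0.9], "radar": [0.4, 0.6]}
outputs = model.decode(frame)
print(outputs)
```

Adding a new task means adding a head, not a new model, which is one reason a shared representation can generalize and be reused across platforms.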

With this world-model approach, everyday autonomous driving problems are essentially solved.

The Next Task: Solving Long-Tail Problems

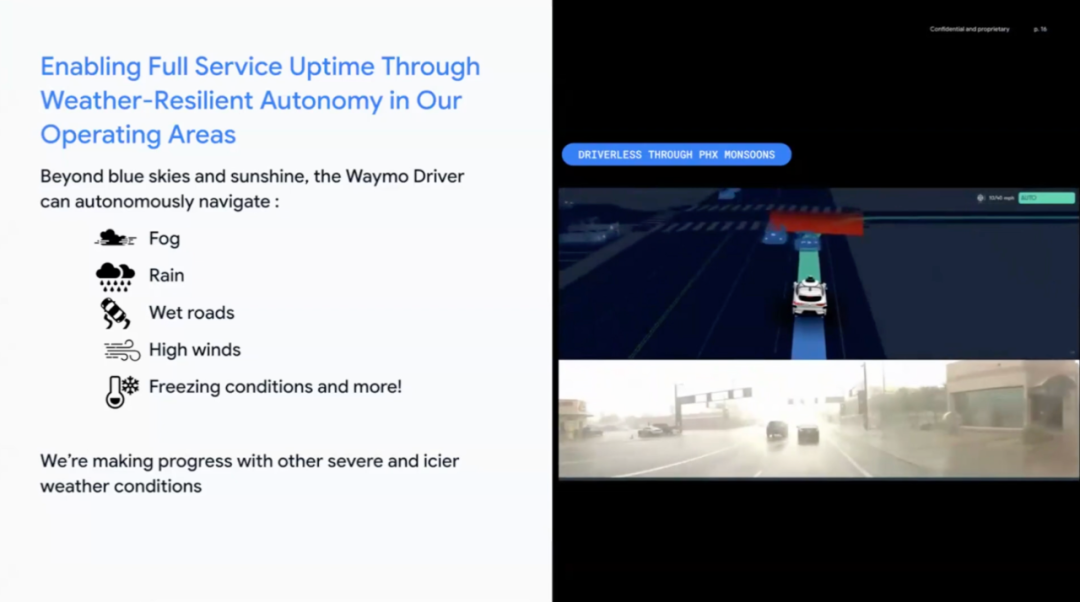

Long-tail problems in autonomous driving mainly involve complex scenarios such as adverse weather, low visibility, occlusions, and construction zones. These cases may seem simple to describe, but they pose significant challenges for autonomous driving systems.

Weather:

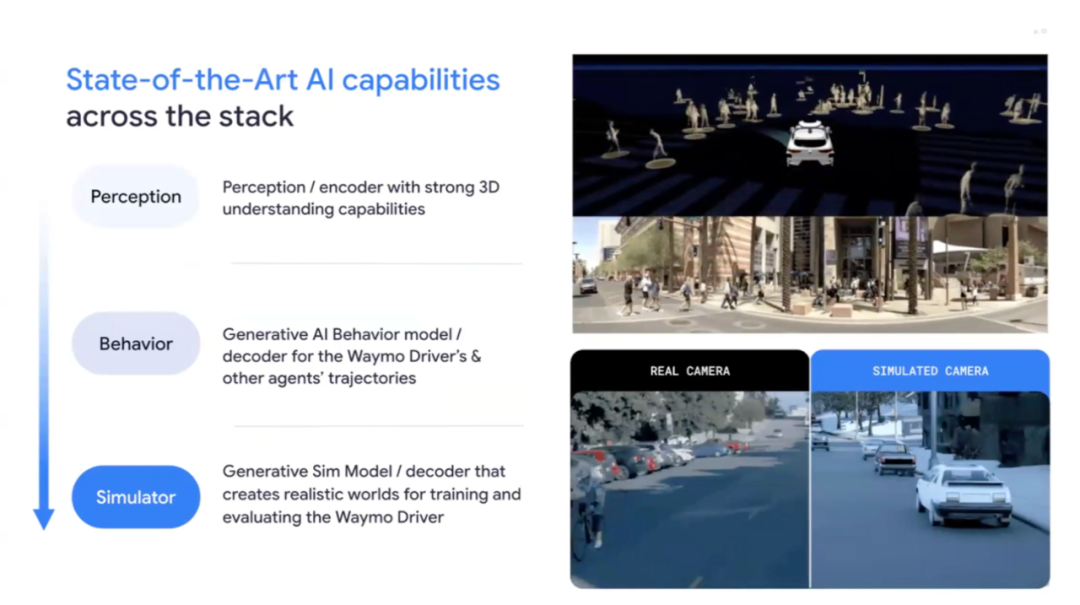

For example, puddles on the road after rain, as well as rarer floods, require the algorithm to judge water depth using rich contextual information. This demands extremely high accuracy and recall, along with detailed spatial information.
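Why both kinds of error matter for puddle and flood detection can be shown with a small worked example. The sketch pairs recall with precision, the usual companion metric for a detection task (the talk does not define its exact metrics), and the per-frame 'deep water ahead' labels are invented for illustration.

```python
from typing import List, Tuple


def precision_recall(predictions: List[bool], ground_truth: List[bool]) -> Tuple[float, float]:
    """Precision and recall for a binary detector (True = 'deep water ahead')."""
    tp = sum(1 for p, g in zip(predictions, ground_truth) if p and g)
    fp = sum(1 for p, g in zip(predictions, ground_truth) if p and not g)
    fn = sum(1 for p, g in zip(predictions, ground_truth) if not p and g)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical per-frame labels from five road segments after rain.
detector_says = [True, True, False, True, False]
actually_deep = [True, True, True, True, False]
print(precision_recall(detector_says, actually_deep))  # (1.0, 0.75)
```

Here the detector raises no false alarms (precision 1.0) but misses one of four deep-water frames (recall 0.75). For a vehicle, low recall means driving into water that is too deep, while low precision means unnecessary braking or detours, which is why both must be very high.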

Waymo's solution is to use a vision-language model (VLM), but this requires a large corpus of such data.

Driving in snow places high demands on vehicle hardware: sensors need heating and cleaning functions to clear blockages. Other challenges in snow include determining the driving route (and whether maps still matter), identifying tire tracks, and estimating road friction.
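To see why friction estimation matters, consider the textbook braking-distance relation for constant deceleration, ignoring reaction time. The friction coefficients below (about 0.7 for dry asphalt, about 0.2 for packed snow) are typical illustrative values assumed here, not figures from the talk; the speed is 50 km/h, or about 13.9 m/s.

```latex
d_{\text{brake}} = \frac{v^2}{2\,\mu\,g},
\qquad
d_{\text{dry}} = \frac{(13.9\ \text{m/s})^2}{2 \times 0.7 \times 9.81\ \text{m/s}^2} \approx 14\ \text{m},
\qquad
d_{\text{snow}} = \frac{(13.9\ \text{m/s})^2}{2 \times 0.2 \times 9.81\ \text{m/s}^2} \approx 49\ \text{m}
```

Under these assumptions the same stop needs roughly three and a half times the distance on snow, so misjudging the friction coefficient directly misjudges how early the vehicle must start braking.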

Low Visibility and Occlusions:

In extremely low-visibility conditions, for example pedestrians or vehicles on a highway at night, a single sensor may fail to detect them, so multiple sensor modalities must work together.
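A simple way to see why combining sensors helps is late fusion with a noisy-OR rule, sketched below under the strong assumption that each sensor misses independently. This is a swapped-in textbook technique for illustration only: Waymo's model, as described earlier, fuses sensor data inside one network. The per-sensor probabilities and the 0.8 threshold are hypothetical.

```python
from typing import Dict


def noisy_or_fusion(detection_probs: Dict[str, float]) -> float:
    """P(at least one sensor detects the object), assuming sensors miss independently."""
    p_all_miss = 1.0
    for p in detection_probs.values():
        p_all_miss *= 1.0 - p
    return 1.0 - p_all_miss


# Hypothetical detection probabilities for a pedestrian on a dark highway.
night_highway = {"camera": 0.3, "lidar": 0.6, "radar": 0.5}
fused = noisy_or_fusion(night_highway)
detected = fused >= 0.8  # hypothetical decision threshold
print(f"fused probability: {fused:.2f}, detected: {detected}")
```

No single sensor reaches the threshold, but the fused estimate does (about 0.86). In practice, sensor failures in fog, dust, or darkness are often correlated, so the independence assumption overstates the benefit; learned fusion avoids relying on it.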

Phoenix's distinctive dust storms (haboobs) also make detection harder for sensors, although lidar can still clearly see pedestrians inside a dust storm.

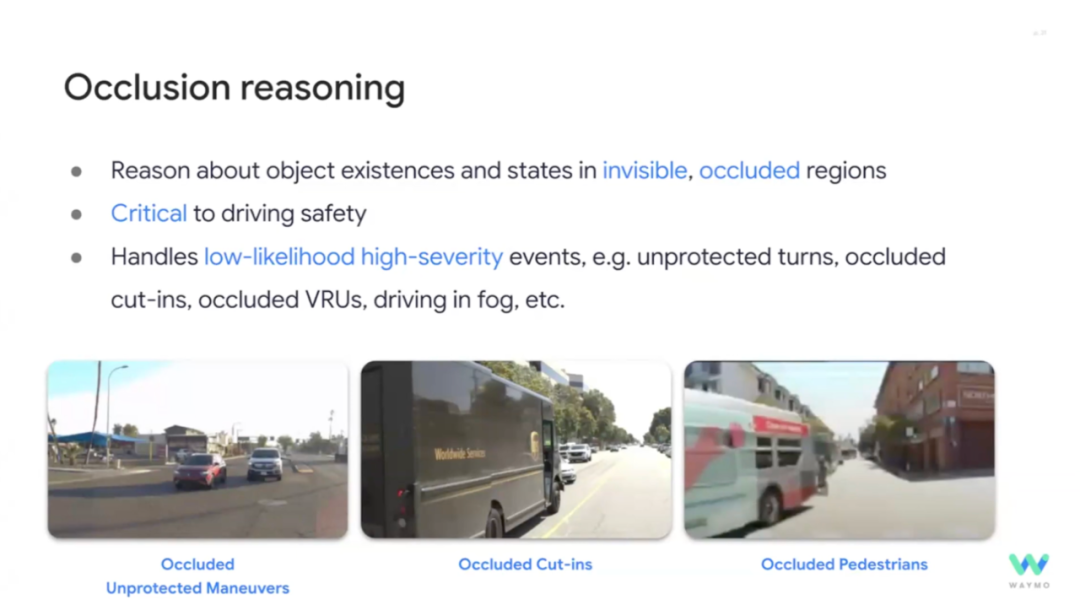

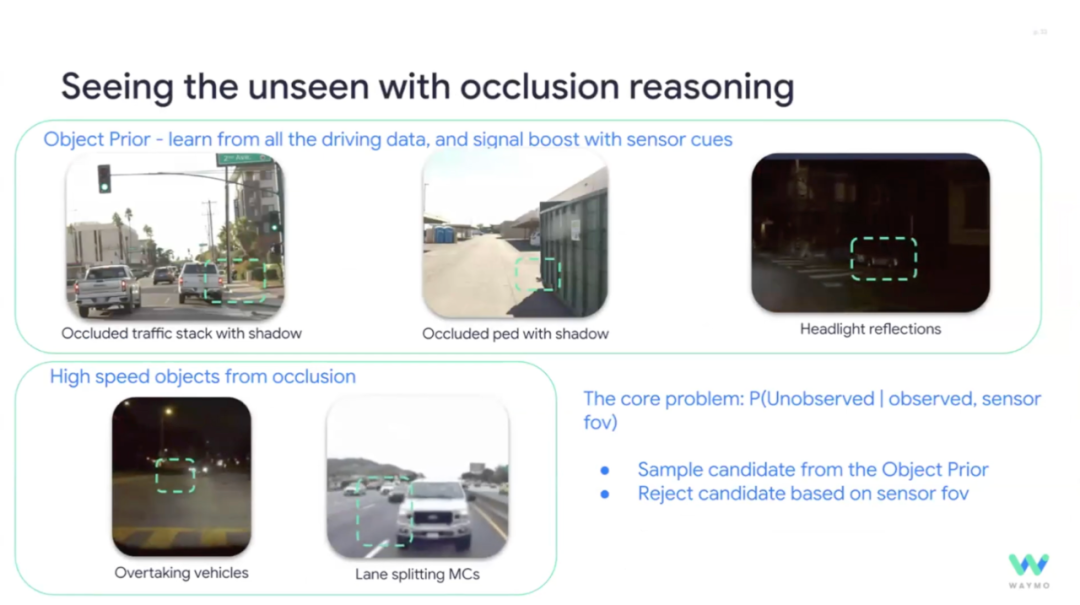

Occlusion Reasoning:

The most common scenario is poor visibility, where it is uncertain whether a pedestrian might suddenly dart out from behind an obstruction ('ghost probing') or another vehicle might cut in. The presence and state of objects in these unseen areas are crucial for driving safety.

Challenges include ambiguity, non-determinism, lack of ground truth for benchmarking, subjectivity, diversity of traffic participants, and high contextual dependence.

Humans often adopt defensive driving in such scenarios.

Waymo's solutions include estimating priors for uncertain objects (by learning statistics from driving data and exploiting weak sensor cues, essentially leveraging existing data) and accurately estimating the vehicle's speed prior (at intersections with high uncertainty, a wrong speed estimate can cause problems).
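The prior-plus-weak-cue idea can be expressed with Bayes' rule in odds form. In the sketch below, the prior is the fraction of logged passes in which someone emerged from a given occluded area, and a weak cue (for instance a partial silhouette under a parked bus) multiplies the odds by a likelihood ratio. The function names, the log counts, and the likelihood ratio of 5 are hypothetical; the talk does not specify Waymo's formulation.

```python
def prior_from_logs(events: int, passes: int) -> float:
    """Empirical prior: fraction of logged passes where something emerged from the occluded area."""
    return events / passes


def posterior_occupied(prior: float, likelihood_ratio: float) -> float:
    """Bayes' rule in odds form.

    likelihood_ratio = P(cue | occupied) / P(cue | empty); a value above 1 means
    the weak cue favors 'occupied'.
    """
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)


prior = prior_from_logs(events=30, passes=1000)             # hypothetical log statistics: 0.03
with_cue = posterior_occupied(prior, likelihood_ratio=5.0)  # about 0.134
no_cue = posterior_occupied(prior, likelihood_ratio=1.0)    # uninformative cue: prior unchanged
print(f"prior={prior:.3f}, with weak cue={with_cue:.3f}")
```

A 3% prior rises to about 13% with the cue, which a planner could use, much like a defensive human driver, to slow down before the occluded area. A cue with a likelihood ratio of 1 carries no information and leaves the prior unchanged.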

Complex Scenario Understanding:

Construction zones: the vehicle must identify signs, reason about the driving geometry, and adjust its route around objects such as cones.

Dynamic scenarios: situations such as a traffic officer's hand gestures require real-time responses to dynamic signals.

Active accident scenes: these involve many emergency vehicles and blocked roads, and require holistic scene understanding to reason about the best course of action, rather than merely identifying individual objects (e.g., barricades).

Overall, handling complex scenarios is not simply a matter of identifying characteristic elements; it requires using LLMs to understand the scene and then making decisions based on that understanding. Waymo stated that it is exploring this approach for complex scenarios.

What Is Truly Most Important for Autonomous Driving Development?

Autonomous driving is a scenario for AI implementation, so the most critical elements for autonomous driving are the three pillars of AI: data, algorithms, and computing power.

Of these three pillars, however, Waymo's autonomous driving team emphasized only data. Waymo stated that having a large amount of data is fundamental, but that screening and organizing it is even more critical: efficient, high-quality data ensures the model focuses on solving the right problems.

Waymo uses techniques such as language search, embedding-based search (by appearance and behavior), few-shot learning, and active learning.
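Of the techniques listed, active learning is the easiest to sketch. A common variant is uncertainty sampling: rank unlabeled clips by the entropy of the current model's predictions and send the most uncertain ones for labeling. The clip names and predicted distributions below are hypothetical, and the talk does not describe Waymo's actual selection criteria.

```python
import math
from typing import Dict, List


def entropy(probs: List[float]) -> float:
    """Shannon entropy (nats) of a predicted class distribution; higher means less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions: Dict[str, List[float]], budget: int) -> List[str]:
    """Uncertainty sampling: pick the clips the current model is least sure about."""
    ranked = sorted(predictions, key=lambda clip: entropy(predictions[clip]), reverse=True)
    return ranked[:budget]


model_outputs = {
    "clip_a": [0.98, 0.01, 0.01],  # confident: little to learn
    "clip_b": [0.40, 0.35, 0.25],  # very uncertain
    "clip_c": [0.70, 0.20, 0.10],  # moderately uncertain
}
to_label = select_for_labeling(model_outputs, budget=2)
print(to_label)  # ['clip_b', 'clip_c']
```

Spending the labeling budget on uncertain clips, rather than on more of what the model already handles, is one concrete way data screening keeps the model focused on the right problems.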

For autonomous driving, data consists largely of video. Mining high-quality clips from it requires video search that can understand the meaning of events (e.g., car collisions, drifting, wheelies).
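Embedding-based search represents each clip, and each query such as the text 'car collision', as a vector and returns the nearest clips by cosine similarity. The brute-force sketch below assumes the embeddings already exist; the 3-dimensional vectors and clip IDs are made up, and a production system would use learned, high-dimensional embeddings with an approximate nearest-neighbour index.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an all-zero embedding matches nothing
    return dot / (norm_a * norm_b)


def search_clips(query: List[float], index: Dict[str, List[float]], k: int) -> List[Tuple[str, float]]:
    """Brute-force top-k nearest neighbours by cosine similarity."""
    scored = [(clip_id, cosine_similarity(query, emb)) for clip_id, emb in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical clip embeddings (toy 3-D vectors).
clip_index = {
    "clip_017": [0.9, 0.1, 0.0],
    "clip_042": [0.1, 0.9, 0.2],
    "clip_108": [0.8, 0.3, 0.1],
}
query = [1.0, 0.0, 0.0]  # stands in for the embedding of a text query like "car collision"
hits = search_clips(query, clip_index, k=2)
for clip_id, score in hits:
    print(f"{clip_id}: {score:.3f}")
```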

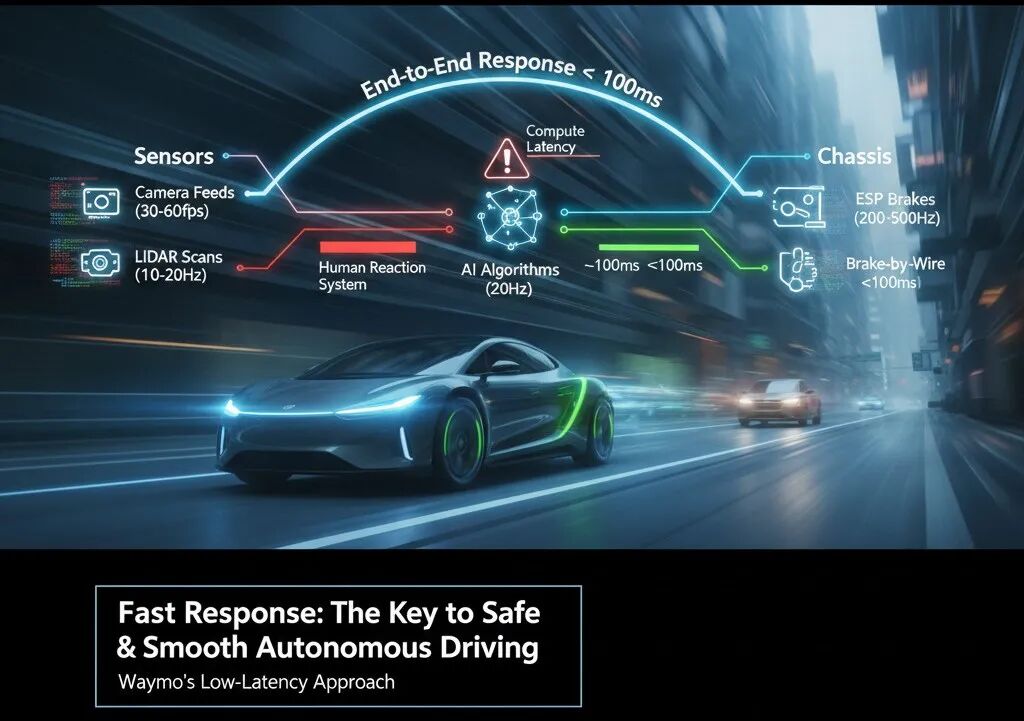

Rapid Real-Time Decision-Making: In martial arts, speed is key, and the same applies to autonomous driving. Waymo stated that the less time the algorithm needs to reach and execute a decision, the safer and smoother the autonomous driving experience will be.

To understand response speed in autonomous driving, break the pipeline into stages: from sensor input (e.g., cameras), through the algorithm's computation, to the delivery of its output to the chassis and other actuators.

Sensor input response depends mainly on camera and lidar frame rates. Camera frame rates currently exceed 24 Hz.

Algorithm response depends on how quickly the algorithm can process frames and send its results to the chassis actuators, at rates such as 10 Hz or 20 Hz.

Chassis response: motor control frequencies are already very high; brake ESP (Electronic Stability Program) responses, for example, run at hundreds of Hz. This is why traditional hydraulic engines and chassis are no longer suited to this era.

Therefore, fast decision-making today is constrained mainly by the processing and output rates of each company's algorithms.
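The stage-by-stage breakdown above can be turned into rough arithmetic. The sketch assumes a simplified worst case in which an event just misses each stage's update and waits one full period per stage, and it ignores compute time within a period. The 24 Hz camera and 10/20 Hz algorithm rates come from the text; 200 Hz for the brake ESP is an assumed value within the 'hundreds of Hz' range.

```python
from typing import Dict


def period_ms(rate_hz: float) -> float:
    return 1000.0 / rate_hz


def worst_case_latency_ms(stage_rates_hz: Dict[str, float]) -> float:
    # Simplified model: an event just misses each stage's tick, so it waits one
    # full period per stage. Compute time within a period is ignored.
    return sum(period_ms(rate) for rate in stage_rates_hz.values())


def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    return (speed_kmh / 3.6) * (latency_ms / 1000.0)


pipeline_10hz = {"camera": 24.0, "algorithm": 10.0, "brake_esp": 200.0}  # 200 Hz is assumed
pipeline_20hz = {"camera": 24.0, "algorithm": 20.0, "brake_esp": 200.0}

for name, pipeline in [("10 Hz algorithm", pipeline_10hz), ("20 Hz algorithm", pipeline_20hz)]:
    latency = worst_case_latency_ms(pipeline)
    travelled = distance_during_latency_m(60.0, latency)
    print(f"{name}: {latency:.1f} ms, {travelled:.2f} m at 60 km/h")
```

With a 10 Hz algorithm the worst case is about 147 ms, or roughly 2.4 m travelled at 60 km/h; doubling the algorithm rate to 20 Hz cuts this to about 97 ms (roughly 1.6 m). The 5 ms chassis term barely matters, which matches the conclusion that the algorithm's rate dominates.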

Finally, Waymo regards its Depots (operational parking lots) and vehicle modification factories as among the most critical elements. Because Waymo focuses on L4 autonomous driving, these facilities keep its L4 operations running smoothly and quickly.

Waymo's Depots: vehicles can already enter the parking lot autonomously, find an empty charging spot, and, once charging is complete and the charging gun is removed, drive themselves back out into operation.
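The depot flow described above behaves like a small state machine. The sketch below models one vehicle entering, claiming the first free charging spot (or waiting if none is free), and departing only after charging is complete and the gun has been removed. The state names, the spot-claiming rule, and the class are hypothetical; the talk does not describe Waymo's depot software.

```python
from enum import Enum, auto
from typing import Dict, Optional


class State(Enum):
    ENTERING = auto()   # driving into the depot
    WAITING = auto()    # no free charging spot yet
    CHARGING = auto()   # parked at a spot and plugged in
    DEPARTED = auto()   # charged, unplugged, back in service


class DepotVehicle:
    def __init__(self, vehicle_id: str):
        self.vehicle_id = vehicle_id
        self.state = State.ENTERING
        self.spot: Optional[str] = None

    def step(self, spots: Dict[str, Optional[str]], charged: bool, gun_removed: bool) -> State:
        if self.state in (State.ENTERING, State.WAITING):
            free = [s for s in sorted(spots) if spots[s] is None]
            if free:
                self.spot = free[0]
                spots[self.spot] = self.vehicle_id  # claim the spot
                self.state = State.CHARGING
            else:
                self.state = State.WAITING
        elif self.state is State.CHARGING and charged and gun_removed:
            spots[self.spot] = None  # release the spot for the next vehicle
            self.spot = None
            self.state = State.DEPARTED
        return self.state


spots = {"A1": "car_7", "A2": None}  # hypothetical depot: A1 occupied, A2 free
car = DepotVehicle("car_9")
states = [car.step(spots, charged=c, gun_removed=g) for c, g in [(False, False), (True, False), (True, True)]]
print([s.name for s in states])  # ['CHARGING', 'CHARGING', 'DEPARTED']
```

The vehicle stays put while charged but still plugged in, mirroring the rule that it leaves only once the charging gun is removed.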

Waymo's modification workshops: once sensors are installed, the vehicles can drive themselves off the production line and into transport trucks, or go straight into operation.

In Conclusion

Of course, Waymo's CVPR talk focused mainly on algorithms and development, with only a little on operations. Yet mass production and operation of assisted and autonomous driving, much like automobile manufacturing, involve significant engineering challenges: the 'dirty work' that Waymo may not yet have reached.

Engineering implementation is a major barrier in the autonomous driving industry and requires coordination with vehicle development and test operations. Reportedly, successful autonomous driving companies often recruit traditional automotive engineers from companies such as Bosch.

The assisted driving/autonomous driving industries are expected to eventually converge, as the underlying logic of their algorithm software is the same.

*Unauthorized reproduction or excerpting is strictly prohibited.*