Which processor is used in domestic humanoid robots?

09/21 2025

In the complex physical world, humanoid robots need substantial AI computing power for autonomous navigation, precise manipulation, and environmental interaction, all of which hinge on a capable processor. As the computing foundation of the robotics industry chain, the processor directly determines the intelligence level and application potential of a humanoid robot.

01

The humanoid robot industry is on the verge of explosive growth, with chips as a key variable

The global humanoid robot market shows remarkable growth potential. Data indicates that the global market for humanoid robots is approximately 9 billion yuan in 2025 and is projected to reach 150 billion yuan by 2029, a compound annual growth rate (CAGR) exceeding 75%. Industrial handling and medical scenarios will be the core engines of this growth.
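The growth figure above can be checked with the standard CAGR formula. The sketch below is only an illustration: the source does not say how many compounding periods its estimate uses, so both a four-period and a five-period reading are computed as assumptions.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate over `periods` yearly compounding steps."""
    return (end_value / start_value) ** (1.0 / periods) - 1.0


# Market sizes quoted in the text, in billions of yuan.
start, end = 9.0, 150.0

# Assumption: 2025 -> 2029 counted as 4 compounding years.
four_period = cagr(start, end, 4)
# Assumption: 5 compounding years (for example, if the base year is counted differently).
five_period = cagr(start, end, 5)

print(f"4 periods: {four_period:.1%}")
print(f"5 periods: {five_period:.1%}")
```

Under either assumption the result is above 75%: roughly 102% with four periods and roughly 75.5% with five, so the quoted "exceeding 75%" is consistent with the two market-size figures.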

As software and hardware technologies for humanoid robots continue to iterate, expanding application scenarios has become a focal point for the industry. The International Federation of Robotics (IFR) noted in its latest paper released in the second half of 2025 that while development paths for humanoid robots vary across countries due to differing technological foundations and application purposes, the overall trend is clear: short-term pilot deployments, mid-term gradual scale-up in manufacturing and service sectors, and long-term potential for household adoption. In this process, high-end system-on-chips (SoCs) will play an increasingly critical role as core components supporting complex robotic functions.

From a technical perspective, the 'intelligent operation' of humanoid robots relies on a complete 'brain-cerebellum-limb' collaborative system: the 'brain' handles high-level cognitive functions such as speech recognition and environmental perception, decomposing and planning tasks after receiving instructions; the 'cerebellum' manages motion control tasks such as optimal path planning; and the 'limbs' execute the tasks under the control of servo drive systems. Throughout this process, main chips such as CPUs, GPUs, and NPUs provide the foundation for complex algorithm computation and intelligent decision-making, serving as the true 'wisdom core' of robots.
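As a rough illustration of this three-layer data flow, the sketch below passes an instruction through a toy 'brain', 'cerebellum', and 'limb' stage. Every function name, the joint name `hip_yaw`, and the straight-line planner are hypothetical stand-ins chosen for the example; real robots use learned models and far more elaborate planners.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Waypoint = Tuple[float, float]


@dataclass
class ServoCommand:
    joint: str               # hypothetical joint name
    target_angle_deg: float  # heading the joint should turn to


def brain_decompose(instruction: str) -> List[str]:
    """'Brain': split an instruction into ordered subtasks (toy rule: split on 'then')."""
    return [part.strip() for part in instruction.split(" then ") if part.strip()]


def cerebellum_plan(start: Waypoint, goal: Waypoint, steps: int) -> List[Waypoint]:
    """'Cerebellum': straight-line interpolation as a stand-in for path planning."""
    return [
        (start[0] + (goal[0] - start[0]) * i / steps,
         start[1] + (goal[1] - start[1]) * i / steps)
        for i in range(1, steps + 1)
    ]


def limbs_execute(start: Waypoint, path: List[Waypoint]) -> List[ServoCommand]:
    """'Limbs': turn each path segment into a heading command for one servo."""
    commands = []
    previous = start
    for waypoint in path:
        heading = math.degrees(
            math.atan2(waypoint[1] - previous[1], waypoint[0] - previous[0])
        )
        commands.append(ServoCommand("hip_yaw", heading))
        previous = waypoint
    return commands


subtasks = brain_decompose("walk to the shelf then pick up the box")
path = cerebellum_plan((0.0, 0.0), (2.0, 2.0), steps=4)
commands = limbs_execute((0.0, 0.0), path)
print(subtasks)
print(path[-1], len(commands))
```

The point of the sketch is the separation of concerns: each stage consumes only the output of the stage above it, which is why the layers can run on different chips.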

02

Which processors are used in humanoid robots?

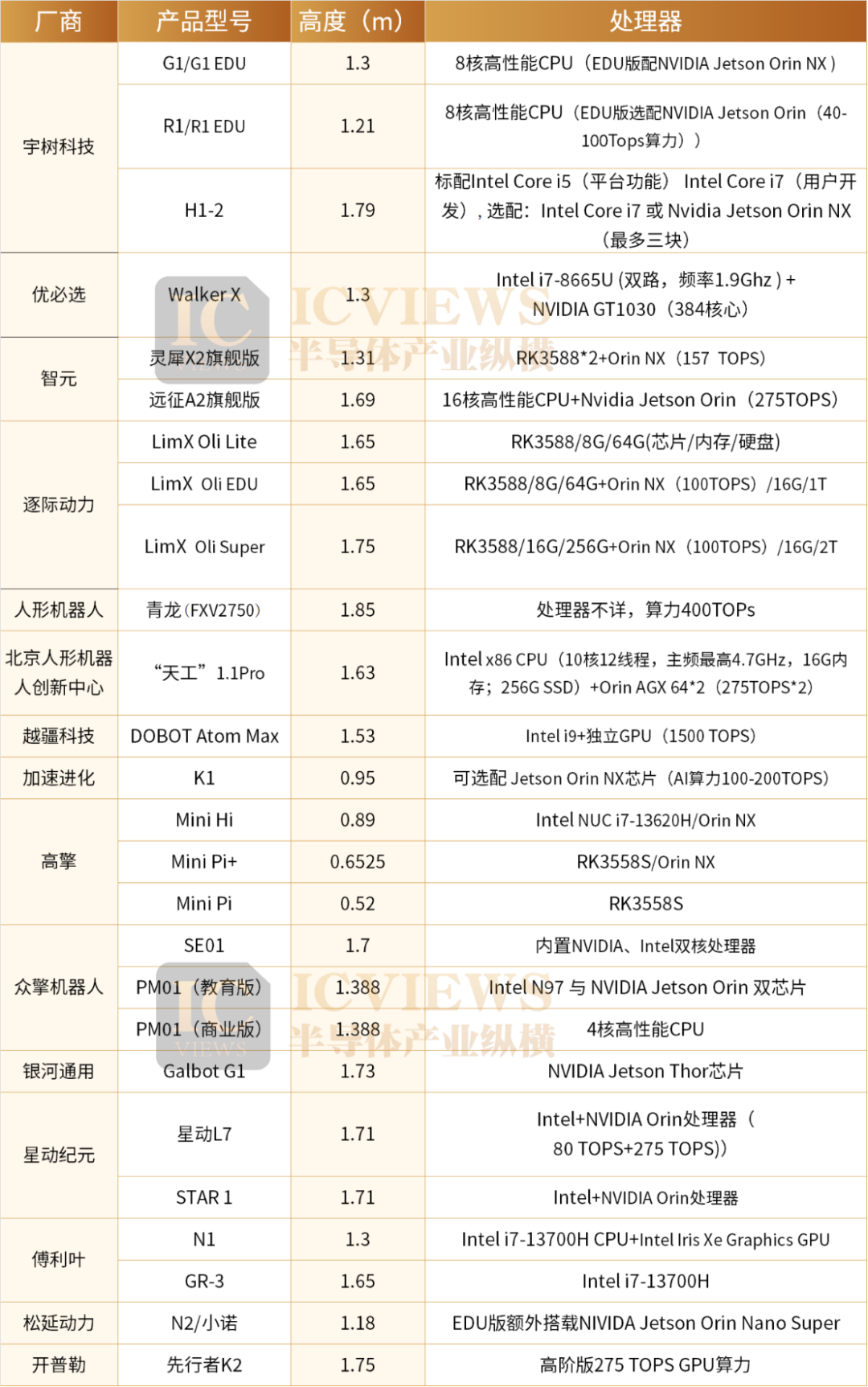

Currently, processor supply for the global humanoid robot market is dominated by two giants, NVIDIA and Intel, while domestic chips are still in the catch-up phase. Notably, among the many domestic and international humanoid robot manufacturers, only Tesla develops its own chips: its Dojo chip is used for AI model training, while the FSD chip runs on the robot itself for real-time computation and control. Most other manufacturers build their computing systems from purchased Intel and NVIDIA chips. For example, the UBTECH Walker X uses an Intel i7-8665U (dual-channel, 1.9 GHz) and an NVIDIA GT1030 graphics card (384 cores), while Unitree's H1-2 comes standard with an Intel Core i5 (for platform functions) or an Intel Core i7 (for user development), with optional upgrades to an Intel Core i7 or NVIDIA Jetson Orin NX (up to three units).

Specific application scenarios are shown in the table below:

From a functional perspective, the 'brain' and 'cerebellum' of humanoid robots are typically supported by different types of chips:

The 'cerebellum' (motion control): Generally employs Intel CPUs to handle low-level motion control tasks such as balance maintenance, trajectory planning, and force control, ensuring precise and stable movements.

The 'brain' (cognitive decision-making): Primarily uses NVIDIA GPUs for high-level cognitive functions such as environmental perception, speech understanding, and task planning. However, because NVIDIA's chips combine high computing power with relatively high prices, they are usually offered only as optional configurations in flagship or high-end humanoid robot products.

As the standard chips for electronic control and platform functions, Intel Core i5/i7 processors offer multi-core processing, with the i7 typically ahead of the i5 in core frequency and thread count. They meet the demands of basic robot control, data processing, and user development environments, supporting algorithm execution and system management where extreme computing power is not required.

Among NVIDIA's chip offerings, the Jetson Orin series, and the Jetson Orin NX in particular, is the most widely adopted. The Jetson Orin series comprises seven modules that share one architecture and deliver up to 275 trillion operations per second (TOPS) of computing power, eight times the previous generation's performance in multimodal AI inference, while supporting high-speed interfaces. Its accompanying software stack includes pre-trained AI models, reference AI workflows, and vertical application frameworks, significantly accelerating end-to-end development of generative AI, edge AI, and robotics applications. The Jetson Orin NX emphasizes cost-effectiveness, providing up to 100 TOPS of computing power and enabling parallel processing of complex AI tasks such as visual perception and path planning, which makes it a popular choice for mid-range to high-end robots.

On August 25, 2025, NVIDIA further unveiled the Jetson AGX Thor developer kit and mass production module, specifically designed for robotics. Now available globally (including in China), the developer kit starts at $3,499. NVIDIA CEO Jensen Huang hailed it as the 'ultimate supercomputer to propel the era of physical AI and general-purpose robotics,' a sentiment echoed by industry leaders such as Wang Xingxing, founder of Unitree Technology, and Wang He, founder of Galaxy General.

According to the latest research by TrendForce, the Jetson Thor, powered by a Blackwell GPU and 128 GB of memory, delivers 2,070 FP4 TFLOPS of AI computing power, 7.5 times that of the previous-generation Jetson Orin. At the recently concluded WRC 2025 conference, Galaxy General's humanoid robot, Galbot, became one of the first products worldwide to integrate the Jetson Thor chip, showcasing impressive autonomous box-handling capabilities on site. Wang He, founder and CTO of Galaxy General, stated, 'For all robotics companies, including NVIDIA and Galaxy General, our shared goal now is to create general-purpose robots.'
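The 7.5x figure follows directly from the headline numbers quoted in this section. The short sketch below reproduces it; note that it simply divides the two peak figures as quoted, and those figures may be stated at different numeric precisions, so the ratio should be read as a comparison of headline peaks and not as a like-for-like benchmark.

```python
def headline_ratio(new_peak: float, old_peak: float) -> float:
    """Ratio between two peak-throughput figures as quoted, with no precision normalization."""
    return new_peak / old_peak


# Figures quoted in the text.
THOR_FP4_TFLOPS = 2070.0  # Jetson Thor
ORIN_PEAK_TOPS = 275.0    # top Jetson Orin module
ORIN_NX_PEAK_TOPS = 100.0 # Jetson Orin NX

thor_vs_orin = headline_ratio(THOR_FP4_TFLOPS, ORIN_PEAK_TOPS)
thor_vs_orin_nx = headline_ratio(THOR_FP4_TFLOPS, ORIN_NX_PEAK_TOPS)

print(f"Thor vs Orin:    {thor_vs_orin:.1f}x")
print(f"Thor vs Orin NX: {thor_vs_orin_nx:.1f}x")
```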

03

Domestic breakthroughs: Multiple vendors accelerate chip R&D, leveraging cost-effectiveness and customization

Facing the dominant market position of foreign chips, domestic manufacturers have begun accelerating independent R&D of humanoid robot chips. The industry generally believes that large-scale adoption of humanoid robots requires deep integration of general intelligence with real-world scenario demands, a goal that hinges on four core technologies: algorithms, data, computing power, and hardware. China already has a strong lead in the hardware supply chain, which makes 'brain' and 'cerebellum' processors for humanoid robots the next priority.

Rockchip's RK3588 and RK3588S chips have been adopted by humanoid robots such as the Zhiyuan Lingxi X2, LimX Oli from ZhiJi Dynamics, and GaoQing Pi/Pi+. The core architectures and computing power of these two chips are identical, with their main differences lying in interface expandability, package size, and power consumption. The RK3588 supports a richer set of interfaces, making it suitable for scenarios requiring extensive external device connections, while the RK3588S features a smaller package size and lower power consumption, catering to robot products sensitive to space and energy efficiency.

As Rockchip's flagship 8K SoC, the RK3588 uses an Arm architecture and was originally designed for PCs, edge computing devices, personal mobile internet devices, and digital multimedia applications. It now shows strong potential in robotics. It integrates quad-core Cortex-A76 and quad-core Cortex-A55 CPU clusters, paired with a dedicated NEON coprocessor, and supports 8K video encoding and decoding. It also includes various high-performance embedded hardware engines to optimize performance for premium applications. In terms of AI computing power, the RK3588's NPU supports mixed INT4/INT8/INT16/FP16 operations and delivers up to 6 TOPS, with strong compatibility: network models built on mainstream frameworks such as TensorFlow, MXNet, PyTorch, and Caffe can be easily converted and adapted.

Horizon Robotics' latest RDK S100 developer kit, launched by its subsidiary Digua Robotics, integrates the robot's 'brain' (computing functions) and 'cerebellum' (control functions) into a single SoC, significantly simplifying the robot's hardware architecture. Supplied as a single board, the developer kit provides abundant peripheral interfaces, allowing direct connection to components such as cameras, sensors, and actuators for easy integration into various robotic systems.

From a technical architecture standpoint, the RDK S100 board features a heterogeneous CPU+BPU+MCU computing architecture, capable of simultaneously handling high-performance AI computing and real-time motion control—achieving a full closed-loop of 'environmental perception-decision planning-low-level servo control.' This means a single RDK S100 board can replace the traditional combination of an 'edge AI board + standalone controller,' serving as the robot's 'intelligent hub' and significantly reducing system complexity and development costs.

Specifically, the single SoC chip in the RDK S100 integrates three types of cores working in tandem:

The 'brain' section: Composed of a 6-core CPU and a high-computing-power BPU (Brain Processing Unit). The 6-core general-purpose processor handles complex logical operations and task scheduling, while the BPU, based on Horizon Robotics' next-generation self-developed 'Nash' architecture, is optimized for deep neural networks (CNNs/Transformers), delivering 80 TOPS (RDK S100) or 128 TOPS (RDK S100P) of AI inference computing power to meet cognitive demands such as environmental perception and speech understanding.

The 'cerebellum' section: Comprises four Cortex-R52+ cores operating in Lock-Step mode to ensure high reliability and functional safety in motion control, precisely coordinating joint motors and maintaining robot balance.

Black Sesame Technologies is collaborating with multiple domestic humanoid robot companies on embodied AI research. Its most representative partnership is a strategic cooperation with the team led by Academician Liu Sheng, a member of the Chinese Academy of Sciences and executive dean of the Institute of Industrial Science at Wuhan University. Using 'Tianwen,' Wuhan University's first self-developed humanoid robot, as the core platform, Black Sesame Technologies provides a dual-chip solution featuring the 'Huashan A2000' ('brain') and 'Wudang C1236' ('cerebellum'). The A2000 chip offers computing power comparable to four NVIDIA Orin X chips, supporting embodied AI algorithms that process multimodal environmental information and make intelligent decisions. The C1236 chip enables parallel processing of AI computing and control tasks, ensuring stability in complex environments.

Yun Tian Li Fei also stated on its investor relations platform that the company is developing a new-generation 'brain' chip, the DeepXBot series, to accelerate inference tasks for perception, cognition, decision-making, and control in humanoid robots.

From a competitive standpoint, the core strengths of domestic chips lie in higher cost-effectiveness and more market-oriented customization services. Digua Robotics' RDK S100, for example, is priced at just 2,799 yuan, roughly half the price of an NVIDIA solution with equivalent computing power, significantly reducing R&D and production costs for low-end to mid-range humanoid robots. Meanwhile, domestic manufacturers can tailor chip functions and interfaces to the specific scenario demands of robotics companies (such as industrial handling, household services, and educational research), offering more flexible solutions.

04

Future trends: 'Brain-cerebellum' integration as the breakthrough direction

Similar to the functional division of the human brain, current humanoid robot controllers generally adopt a separated 'brain-cerebellum' architecture: the 'brain' handles environmental perception, route planning, and intelligent decision-making (e.g., recognizing gestures, understanding speech, and autonomously learning new skills), while the 'cerebellum' acts as a 'motion expert,' coordinating joint motors at frequencies of up to a thousand times per second to ensure the robot can dance without falling and handle objects steadily.
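The difference in timing between the two layers can be sketched as a multi-rate loop. In the simulation below, the 1,000 Hz control rate comes from the text, while the 10 Hz planner rate, the proportional gain, and the function names `brain_plan` and `cerebellum_step` are assumptions made purely for illustration.

```python
CONTROL_HZ = 1000  # from the text: joint coordination up to a thousand times per second
PLANNER_HZ = 10    # assumption: how often the 'brain' refreshes its target
GAIN = 0.01        # assumption: fraction of the remaining error corrected per control tick


def brain_plan(time_s: float) -> float:
    """'Brain': return a target joint angle in radians (constant in this toy example)."""
    return 1.0


def cerebellum_step(position: float, target: float) -> float:
    """'Cerebellum': one proportional control step toward the latest target."""
    return position + GAIN * (target - position)


def run(sim_seconds: float):
    """Simulate both loops on a shared tick counter; return call counts and final position."""
    ticks = int(sim_seconds * CONTROL_HZ)
    planner_every = CONTROL_HZ // PLANNER_HZ
    position, target = 0.0, 0.0
    plan_calls = control_calls = 0
    for tick in range(ticks):
        if tick % planner_every == 0:  # slow loop: runs once every 100 ticks
            target = brain_plan(tick / CONTROL_HZ)
            plan_calls += 1
        position = cerebellum_step(position, target)  # fast loop: runs every tick
        control_calls += 1
    return plan_calls, control_calls, position


plan_calls, control_calls, final_position = run(1.0)
print(plan_calls, control_calls, round(final_position, 3))
```

In one simulated second the fast loop runs a hundred times for every planner update, which is the basic reason the two workloads have traditionally been placed on different processors.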

The 'brain-cerebellum integration' architecture refers to the deep collaboration between the cognitive decision-making system ('brain') and the motion control system ('cerebellum'), achieving seamless integration of 'perception-decision-execution' through hardware-software co-design. The emergence and evolution of this architecture represent the core trajectory of embodied AI development—a concept originating from the intersection of brain science and AI, aiming to simulate the collaborative division of labor between high-level cognition and motor coordination in the human nervous system, enabling robots to synchronize 'thinking' and 'actions' more efficiently.

The current mainstream 'separated brain-cerebellum' approach has gradually exposed significant bottlenecks:

Surge in computing power demand: Robots need to simultaneously handle real-time control ('cerebellum') and complex decision-making ('brain') tasks, leading to a substantial increase in heterogeneous computing power requirements and rising hardware costs.

Noticeable communication latency: As the 'brain' and 'cerebellum' reside in separate hardware systems, data transmission delays may cause asynchrony between robot actions and decisions, affecting operational precision.

High development costs: Developers must maintain two independent codebases. Control code may run on Arm CPUs or x86 CPUs, while AI algorithms require execution on GPUs or other specialized modules, increasing code adaptation and debugging complexity.

Sensor fusion challenges: Hardware separation complicates the efficient integration of data from multiple sensors (e.g., cameras, force sensors, gyroscopes), impairing the robot's comprehensive environmental judgment.
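The communication-latency point can be made concrete by asking how many control cycles elapse while a command travels from the 'brain' to the 'cerebellum'. The sketch below assumes a 1 kHz control loop and uses hypothetical link latencies; none of these latency values come from the source or from any measured system.

```python
import math


def stale_control_ticks(link_latency_ms: float, control_hz: int = 1000) -> int:
    """Whole control cycles that run on old data before a new 'brain' command arrives."""
    period_ms = 1000.0 / control_hz
    return math.floor(link_latency_ms / period_ms)


# Hypothetical latencies for illustration only.
examples_ms = [0.2, 2.0, 20.0]
stale = {latency: stale_control_ticks(latency) for latency in examples_ms}

for latency, ticks in stale.items():
    print(f"{latency} ms link latency -> {ticks} control cycles on stale data")
```

Under these assumptions, a delay well below one control period costs nothing, while a delay of tens of milliseconds means dozens of control cycles execute before the latest decision takes effect.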

In contrast, the 'brain-cerebellum integration' architecture can address these issues through single-chip or integrated hardware designs, making it the mainstream development direction for future humanoid robot controllers.

Recently, NVIDIA and Intel announced a partnership. According to public information, in the data center sector, Intel will customize x86 CPUs for NVIDIA, which will integrate them into its AI infrastructure platforms for market release. In the personal computing sector, Intel will produce and supply x86 system-on-chips (SoCs) integrating NVIDIA RTX GPUs. NVIDIA will invest $5 billion in Intel's common stock at a price of $23.28 per share.

It is worth noting that most current humanoid robot solutions adopt the separated 'brain-cerebellum' architecture built on Intel CPUs and NVIDIA GPUs. With the two companies now collaborating, a system-on-chip (SoC) with an integrated 'brain-cerebellum' architecture may be introduced in the future. Such an SoC could fit naturally into the x86 and CUDA ecosystems, giving developers a more powerful intelligent core.

Despite the broad market prospects for humanoid robots, there are still numerous challenges that need to be addressed before large-scale mass production and commercialization can be achieved:

Insufficient data accumulation: Embodied intelligence requires a large amount of real-world scenario data to train models. However, current application scenarios for humanoid robots are limited, so the volume and diversity of data fall short of what general intelligence demands.

Hardware architecture optimization needed: In addition to 'brain-cerebellum' integration, the computational density, power consumption control, and thermal performance of chips still need improvement to meet the compact space and mobility requirements of robots.

High costs: Core components such as high-end chips, precision servo motors, and sensors are expensive, which keeps the overall cost of humanoid robots too high for them to spread in the consumer market.

Safety enhancement needed: When robots interact with humans (e.g., in household services or medical care), they must ensure motion safety (avoiding collisions) and data security (protecting user privacy), which places higher demands on the safety design of both hardware and software.

The perspective of Li Yan, Senior Director of EIS at Intel China's Edge Computing Solutions Division, is quite representative: 'The embodied intelligence industry, represented by humanoid robots, is developing rapidly, but it also faces issues such as system architecture inconsistency, insufficient generalization capabilities of solutions, and high complexity in scenario adaptation.' In the future, only through collaborative innovation across the entire industrial chain (such as joint research and development between chip manufacturers and robot manufacturers, and deep cooperation between algorithm companies and hardware manufacturers) can these challenges be gradually overcome, enabling humanoid robots to truly enter our daily lives.