By 2025, the Entrepreneurial Logic of AI Has Undergone a Transformation

10/13 2025

Online Learning: Ushering in the Next AI Revolution

Last month, the MIT NANDA project released its report "The GenAI Divide: State of AI in Business 2025," sending shockwaves through the AI sector and triggering a notable downturn in AI-related stocks.

The findings were stark: despite $30–40 billion in enterprise investment in generative AI, 95% of organizations were getting zero measurable return.

These figures point to a growing paradox. On one hand, AI technology is advancing at an unprecedented pace, with models improving rapidly. On the other, expensive enterprise AI tools are being quietly sidelined by employees, giving rise to a vast "shadow AI economy": workers at over 90% of the surveyed companies report regularly using personal tools such as ChatGPT for work tasks.

This trend forces us to confront a fundamental shift: by 2025, the underlying logic of AI entrepreneurship has undergone a radical transformation. Success no longer hinges solely on possessing more powerful models but on enabling AI to continuously learn and evolve in real-world business contexts. While this may sound like a cliché, the true challenge lies in execution, not conception.

01 The Demise of the Old Logic

With 95% of AI investments failing to yield returns, it's imperative to re-examine past approaches.

Many enterprises treat AI as plug-and-play software, deploying it once and expecting perpetual effectiveness. However, AI more closely resembles an expert than standardized software. Experts require continuous learning, feedback, and experience accumulation to maintain proficiency. An AI system that cannot learn and improve through use depreciates in value over time, akin to an outdated textbook.

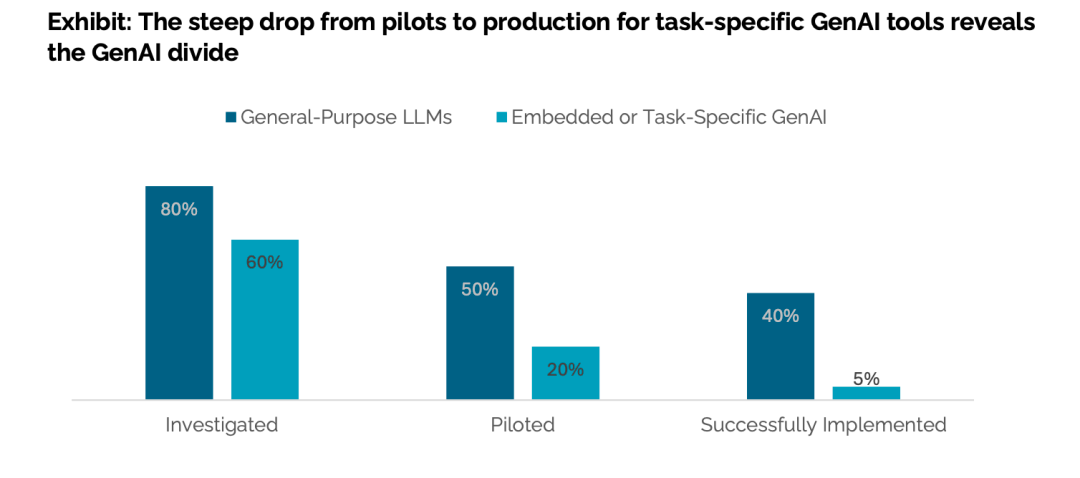

MIT's data also challenges conventional wisdom about "general-purpose" versus "specialized" AI. Sixty percent of companies evaluated task-specific generative AI tools, but only 5% got them into production. General-purpose large language models, by contrast, proved far more resilient.

This suggests that highly customized AI systems face significant adoption hurdles. Such systems often demand complex scenario adaptation, high customization and maintenance costs, and risk becoming isolated 'process silos' that employees circumvent due to their inflexibility.

The prevalence of shadow AI amounts to a silent vote by employees. When corporate tools are clumsy and blind to context, workers naturally turn to more agile, personal AI tools. This exposes a critical flaw in enterprise AI: designed top-down, it fails to adapt to how people actually work and never closes the feedback loop needed for continuous improvement.

From this perspective, the old logic treated delivery as completion and prioritized checkbox-style feature lists. Reality demands treating delivery as the starting point, where value accumulates through continuous learning and optimization during use.

02 The Rise of a New Logic

Amidst the 95% failure rate, 5% of companies found success. What did they do differently? Their approach offers crucial insights for redefining AI entrepreneurship.

As previously mentioned, successful organizations redefined their relationship with AI, treating it as an external expert requiring co-evolution. For instance, when a design firm selected an AI assistant, the winning choice wasn't the most feature-rich system but one that best understood designers' needs and evolved with the team's style. Weekly reviews with the AI team analyzed which suggestions were adopted, which were ignored, and what confusion designers encountered.

This relationship may also reshape business models. Traditional software licensing is giving way to "growth services": enterprises now buy not just current capabilities but mechanisms that ensure the AI keeps improving. It's akin to hiring a consultant, whose value lies less in existing knowledge than in the potential to grow within your business context.

Moreover, many users complain that enterprise AI keeps repeating the same mistakes. The root cause is not model size but the absence of a learning mechanism.

The successful 5% made continuous learning a priority in their technical architecture. That requires building genuine Online Learning systems, currently one of the most discussed ideas in AI. Technically, online learning lets a model interact with its environment continuously and refine its behavior from reward signals as they arrive.

Simply put, these systems don't rely solely on periodic retraining from user feedback; they adjust their strategies during each interaction. Think of it as a new interaction-and-reasoning paradigm in which models learn at test time, much as people adjust their tactics in real time. This is a fundamental shift from traditional AI built around pre-training.
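The idea of adjusting strategy from reward signals during each interaction can be illustrated with the simplest textbook case, an epsilon-greedy multi-armed bandit. The sketch below assumes a handful of hypothetical response strategies and a simulated user who reacts positively at fixed, invented rates; it is not the architecture of any system in the MIT report. The point is that every interaction triggers an immediate, constant-time update, with no separate retraining pass.

```python
import random


class EpsilonGreedyBandit:
    """Chooses among strategies and updates its estimates after every interaction."""

    def __init__(self, strategies, epsilon=0.1, seed=0):
        self.strategies = list(strategies)
        self.epsilon = epsilon  # probability of exploring a random strategy
        self.counts = {s: 0 for s in self.strategies}
        self.values = {s: 0.0 for s in self.strategies}  # running mean reward
        self.rng = random.Random(seed)

    def choose(self):
        # Explore occasionally; otherwise exploit the best estimate so far.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.strategies)
        return max(self.strategies, key=lambda s: self.values[s])

    def update(self, strategy, reward):
        # Incremental mean: one cheap update per interaction, no retraining pass.
        self.counts[strategy] += 1
        n = self.counts[strategy]
        self.values[strategy] += (reward - self.values[strategy]) / n


if __name__ == "__main__":
    # Hypothetical environment: chance that the user reacts well to each strategy.
    true_rates = {"formal": 0.2, "concise": 0.6, "detailed": 0.4}
    bandit = EpsilonGreedyBandit(true_rates, epsilon=0.1, seed=42)
    env = random.Random(7)
    for _ in range(2000):
        s = bandit.choose()
        reward = 1.0 if env.random() < true_rates[s] else 0.0
        bandit.update(s, reward)
    print({s: round(v, 2) for s, v in bandit.values.items()})
```

Raising `epsilon` makes the system try alternatives more often, which helps when user preferences drift but costs some reward on each exploratory choice.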

Three key features emerged in these systems:

1. Contextual Understanding: Advanced AI remembers user preferences and applies them in subsequent interactions. For example, when a marketing specialist requests 'create a social media poster' again, the AI proactively uses the previously validated style and tone instead of starting anew.

2. Memory Management: AI systems build personalized memory profiles for users, recording effective work patterns and decision logic. These memories aren't mere chat logs but distilled work habits that activate contextually.

3. Feedback Loop Design: Failed AI systems reduce feedback to tedious "like/dislike" buttons. Successful systems embed learning seamlessly into workflows, for example by treating whether a user accepts, edits, or discards an output as a learning signal.
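The three features above can be sketched together as a small per-user memory that is written to by implicit feedback and read back on the next similar request. Everything here is hypothetical: the `PreferenceMemory` class, the user, task, and style labels, and the weights that turn an accepted, edited, or discarded output into a score are assumptions chosen for illustration, not taken from any product.

```python
from collections import defaultdict
from typing import Optional

# Hypothetical weights: what the user does with an output is the feedback signal.
SIGNAL_WEIGHTS = {"accepted": 1.0, "edited": 0.5, "discarded": -1.0}


class PreferenceMemory:
    """Illustrative per-user, per-task memory of which output styles have worked."""

    def __init__(self) -> None:
        # (user, task) -> {style: accumulated feedback score}
        self._scores = defaultdict(lambda: defaultdict(float))

    def record(self, user: str, task: str, style: str, outcome: str) -> None:
        # Feedback loop: every interaction outcome updates the stored profile.
        self._scores[(user, task)][style] += SIGNAL_WEIGHTS[outcome]

    def recall(self, user: str, task: str) -> Optional[str]:
        # Contextual reuse: return the best-scoring validated style,
        # or None so the assistant starts fresh.
        styles = self._scores.get((user, task))
        if not styles:
            return None
        style, score = max(styles.items(), key=lambda item: item[1])
        return style if score > 0 else None


if __name__ == "__main__":
    memory = PreferenceMemory()
    memory.record("maria", "social_media_poster", "bold-minimal", "accepted")
    memory.record("maria", "social_media_poster", "playful-pastel", "discarded")
    print(memory.recall("maria", "social_media_poster"))  # bold-minimal
    print(memory.recall("maria", "quarterly_report"))  # None
```

Because the signal comes from what the user already does with the output, no extra rating step is needed; a style that keeps getting discarded drops below zero and stops being recalled.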

Shifting from feature checklists to learning capability assessments reflects evolving enterprise AI standards. Previously, procurement focused on functional breadth; now, the emphasis is on how quickly AI acquires new skills. An AI adapting to a company's reporting style in a week holds far more value than a feature-rich but rigid system.
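One way a buyer might turn "how quickly the AI acquires new skills" into a number is to track, during a pilot, how many days it takes for the share of accepted outputs to reach a target. The `days_to_adapt` function, the 80% target, the three-day window, and the pilot figures below are all hypothetical; the report does not prescribe this metric.

```python
from typing import Optional, Sequence


def days_to_adapt(
    daily_accepted: Sequence[int],
    daily_total: Sequence[int],
    target_rate: float = 0.8,
    window: int = 3,
) -> Optional[int]:
    """Hypothetical metric: the first day (1-based) on which the acceptance rate
    over the trailing `window` days reaches `target_rate`, or None if it never does."""
    if len(daily_accepted) != len(daily_total):
        raise ValueError("daily_accepted and daily_total must have equal length")
    for end in range(window, len(daily_total) + 1):
        accepted = sum(daily_accepted[end - window:end])
        total = sum(daily_total[end - window:end])
        if total > 0 and accepted / total >= target_rate:
            return end
    return None


if __name__ == "__main__":
    # Invented pilot data: outputs accepted per day, out of 10 produced per day.
    adaptive = [4, 6, 8, 9, 10, 10, 10]
    rigid = [7, 7, 7, 7, 7, 7, 7]
    produced = [10] * 7
    print(days_to_adapt(adaptive, produced))  # 5
    print(days_to_adapt(rigid, produced))  # None
```

On this invented data, the adaptive system reaches the target on day 5, while the rigid one plateaus at 70% and never does.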

In one representative case, an e-commerce firm chose a customer service AI not for its comprehensive features but for its ability to quickly adjust response strategies based on complaint data. Months later, this "imperfect but adaptable" AI outperformed feature-rich rivals at handling specific types of returns.

03 Identifying Competitive Edge in the Post-2025 Era

At this inflection point, several survival principles are emerging for AI entrepreneurs.

Large models are becoming ubiquitous infrastructure, and scenario depth is replacing technological breadth as the new competitive barrier. The real value creators are those who build on that stable foundation to solve specific problems. Just as reliable electricity doesn't guarantee good appliances, access to powerful base models doesn't by itself solve practical problems.

In specialized fields like healthcare, teams are abandoning 'general practitioner AI' ambitions to focus on dermatology diagnosis, imaging analysis, etc. By continuously learning from specific case data, they achieve practical accuracy in vertical scenarios.

ROI perceptions also need recalibration. With 70% of AI budgets allocated to marketing, enterprises treat AI as a growth tool. Yet 'cost-saving AI' often delivers more direct, measurable returns.

The question arises: How will Online Learning reshape the AI market?

The answer: A complete overhaul.

As OpenAI, Anthropic, and others push the boundaries of model capability, enterprises relying on static training data face mounting pressure. Models trained once on a fixed dataset go stale ever faster; the ability to learn in real time will become the new dividing line.

AI's industrial implementation logic is also being rewritten. In recommendation systems, models updated at minute-level intervals are already transforming user experience. In high-stakes fields such as medical diagnostics, AI that refines its judgment criteria through continuous clinical feedback will see exponential growth in value.

This "learn-to-be-precise" trait will disrupt business models built on one-off customized models. As a result, compute allocation is shifting: one-time training investments may give way to inference pools dedicated to continuous optimization, and model update frequency may overtake parameter count as the key metric.

This means the second half of AI entrepreneurship belongs to the teams that show the strongest adaptability in highly specific scenarios. It's time to drop the obsession with model scale and return to creating tangible value.