What is "Reinforcement Learning" Often Cited in Autonomous Driving?

![]() 10/31 2025

10/31 2025

![]() 434

434

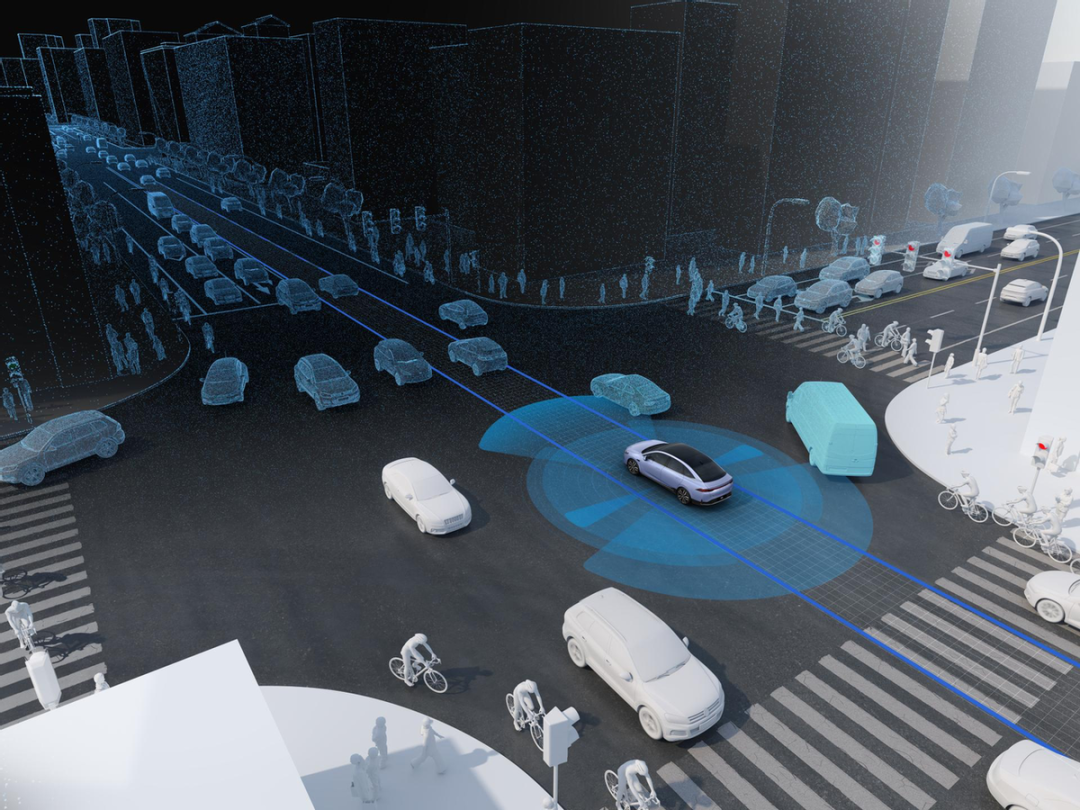

When autonomous driving is the topic of discussion, some solutions highlight "Reinforcement Learning (abbreviated as RL)." Reinforcement learning is a technology that empowers machines to learn decision-making through a process of trial and error. In simpler terms, an intelligent agent interacts with its environment, gathers information about that environment, and then takes an action. Subsequently, the environment provides feedback in the form of a reward or penalty. The agent's objective is to maximize the cumulative long-term rewards. Unlike supervised learning, which relies on a direct, one-to-one "correct answer" for guidance, reinforcement learning discovers desirable and undesirable behaviors through its interactions with the environment and self-exploration. When reinforcement learning is discussed, terms such as "state," "action," "reward," "policy," and "value" frequently arise. These terms correspond to the environmental information perceived by the agent, the possible actions it can undertake, the positive or negative evaluations provided by the environment, the rules governing action selection, and the estimated future reward obtainable from a particular state, respectively.

In the context of an autonomous vehicle, the vehicle itself serves as the intelligent agent. The road and other traffic participants form the environment. The sensor outputs represent the states, with vehicle steering, acceleration, and braking being the actions. Factors like safety, smoothness of the ride, and punctuality can be combined to constitute the rewards. The core strength of reinforcement learning lies in its capacity to directly optimize long-term objectives, such as making safe and efficient decisions at complex intersections. However, its trial-and-error nature poses obvious safety risks on real roads. Consequently, reinforcement learning typically depends on high-fidelity simulations, offline data, and hybrid approaches for implementation.

Application Scenarios of Reinforcement Learning in Autonomous Driving

Typical applications of reinforcement learning in autonomous driving encompass low-level control, behavioral decision-making, local trajectory optimization, and policy learning. In low-level control, reinforcement learning can learn strategies for steering and speed control under specific vehicle dynamics. Its advantage becomes evident under non-linear and complex friction conditions, where it can perform more robustly. For behavioral decision-making, long-term trade-off problems, such as determining the optimal timing to proceed through a yellow light at intersections, formulating lane-changing strategies, and adjusting following distances, can be naturally optimized by reinforcement learning. This optimization places safety, comfort, and efficiency on an equal footing within the same objective framework. When it comes to local trajectory optimization, reinforcement learning can generate short-cycle trajectory adjustments in the presence of dynamic obstacles and complex constraints, rather than merely relying on model-based optimal control to solve each step.

At present, numerous companies are exploring end-to-end solutions in autonomous driving. End-to-end implies directly mapping camera or sensor inputs to control commands. End-to-end reinforcement learning can yield highly impressive results in laboratory settings. Nevertheless, it suffers from significant shortcomings in terms of sample efficiency, interpretability, and safety verification. Therefore, reinforcement learning is often employed as an auxiliary tool or a policy search tool, rather than as a direct replacement for the entire autonomous driving system stack.

Key Implementation Aspects and Technologies of Reinforcement Learning

For reinforcement learning, the initial challenge is to define states and rewards appropriately. The state should encompass sufficient information to enable the policy to make correct decisions, without being overly redundant, which could complicate the learning process. Reward design is highly sensitive. Unreasonable reward signals can lead to "reward hacking" or "shortcut-taking" phenomena, where the learned policy appears to achieve high scores but behaves dangerously. Consequently, in autonomous driving, rewards are typically a combination of multiple factors. They not only include substantial negative scores associated with safety violations (such as collisions or encroaching on the opposite lane) but also provide detailed positive and negative feedback based on comfort, trajectory deviation, and arrival time. Simultaneously, constraints or penalty terms are utilized to ensure the minimum safety boundary, rather than relying solely on sparse arrival rewards.

Sample efficiency is also a critical technical factor for reinforcement learning. Many classical reinforcement learning algorithms demand massive amounts of interaction data. However, real road data in autonomous driving is extremely costly to acquire. Therefore, high-quality simulation environments are commonly relied upon for training, combined with techniques such as domain randomization, domain adaptation, and model pre-training to bridge the gap between simulation and reality. Another approach is offline reinforcement learning, which utilizes a large volume of recorded driving trajectories for policy learning, thereby avoiding the risks associated with real-time exploration. Nevertheless, offline reinforcement learning itself imposes special requirements regarding distribution bias and conservatism.

Algorithm selection and architecture remain crucial for reinforcement learning. Value-based algorithms (such as Q-learning and its deep version, DQN) are well-suited for discrete action spaces. However, actual vehicle control is usually continuous. As a result, policy gradient methods (such as REINFORCE and PPO) or Actor-Critic architectures are more commonly employed. Actor-Critic combines the advantages of direct policy optimization and value estimation, performing well in terms of sample utilization and stability. For scenarios that necessitate a combination of long-term planning and short-term control, hierarchical reinforcement learning can separate high-level decision-making (such as choosing to change lanes or remain in the current lane) from low-level control (such as specific steering angles), reducing complexity and enhancing interpretability.

Safety and stability are of paramount importance for reinforcement learning in autonomous driving. Therefore, safety filters, verifiable constraint layers, or backup control strategies need to be introduced during the training process. During deployment, a "safety shell" design can be adopted. In this design, the reinforcement learning policy outputs suggested actions, but these actions are first subjected to model-based constraint checks or verified follow-up controllers before being executed. This ensures that even if the reinforcement learning policy malfunctions, the vehicle can revert to conservative and safe behaviors.

To explore long-tail scenarios, clustering-based sampling, risk-driven prioritized experience replay, and scenario-based Curriculum Learning (gradual training from simple to complex scenarios) should be employed to guide the learning process. Adversarial training is also frequently utilized to generate more challenging scenarios, thereby enhancing policy robustness.

Limitations, Risks, and Engineering Implementation Recommendations

A core limitation of reinforcement learning is its verifiability and reliability. Autonomous driving is a system with stringent safety requirements, and regulatory and commercial deployments necessitate strong interpretability and reproducible verification processes. Systems that rely solely on black-box reinforcement learning strategies are challenging to pass regulatory and safety reviews. Consequently, many companies utilize reinforcement learning as a tool for policy optimization and capability enhancement, rather than as a replacement for existing baseline control and rule engines.

Another common issue in reinforcement learning is immature reward design, which can lead to seemingly "perfect" but actually harmful behaviors. For a straightforward example, if "reaching the destination as quickly as possible" is set as the primary goal without sufficient penalties for safety disturbances, the model may engage in risky overtaking maneuvers in complex traffic conditions. Therefore, hard safety constraints should be given priority, with efficiency and comfort serving as secondary optimization goals. Potential "reward black box" problems should be identified through detailed simulation scenarios and adversarial testing.

To implement autonomous driving technology, a hierarchical strategy should be adopted. Reinforcement learning should be used for policy search and parameter tuning in simulations. The generated candidate policies should then be validated on offline playback data, followed by manned or remote-controlled testing in controlled closed areas, and a gradual expansion of the testing scenarios. The reinforcement learning module should be designed as a pluggable and retractable subsystem, equipped with clear monitoring indicators and safety fallback mechanisms. Comprehensive experimental records of data and models should be maintained to support offline auditing and playback reproduction.

Hybrid methods are generally more practical than pure reinforcement learning. Utilizing imitation learning to initialize policies can significantly reduce training difficulty. Combining model-based planning with learning-based policies can strike a balance between theoretical interpretability and empirical performance. The application of techniques such as offline reinforcement learning, conservative policy gradients, and safety-constrained optimization are common compromise solutions.

How to Safely Integrate Reinforcement Learning into Autonomous Driving

Reinforcement learning does not offer a ready-made solution for autonomous driving; rather, it serves as a potent decision-making optimization tool. It excels at handling tasks with long-term dependencies, sparse feedback, and complex interactions. However, it still requires engineering enhancements in areas such as sample efficiency, safety verification, and interpretability. To safely integrate reinforcement learning into autonomous driving, a more rational approach is to use it as a supplement and enhancement. Policies should be explored in simulation environments, stabilized with offline data, and ensured for safety through rules and constraints. Gradual validation on real roads should be conducted, with fallback mechanisms in place. Only by clearly defining boundaries and establishing strict testing and rollback mechanisms during the design phase can reinforcement learning transform its advantages into deployable and auditable autonomous driving capabilities.

-- END --