How Do Autonomous Vehicles Pinpoint Their Position and Lane?

11/18 2025

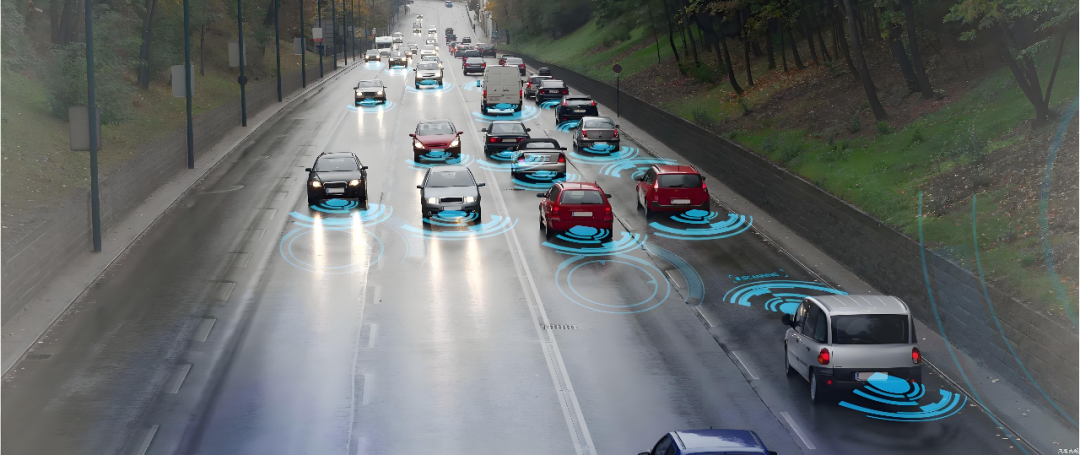

When we drive, working out which road and lane we're in is effortless: a glance at the navigation screen, a look at the lane markings, and the occasional voice prompt are all it takes. For autonomous vehicles, the same task is both easier and harder. It is easier because they carry more "senses" and far more computing power than a human driver. It is harder because they need meter-level or even decimeter-level accuracy, delivered stably, continuously, and reliably under all kinds of extreme conditions. So how exactly do autonomous vehicles determine their position and lane?

How Do Vehicles Perceive Their Surroundings?

To answer the question "Where am I?", autonomous vehicles must first get a good grasp of their surroundings. Their "senses" include satellite positioning systems, inertial measurement units, speed sensors, cameras, and LiDAR. Each sensor has its own set of strengths and weaknesses, which is why they're often used in tandem to complement one another.

Satellite positioning (e.g., GPS, BeiDou, GLONASS) provides approximate latitude, longitude, and altitude. Standalone accuracy is typically a few meters, which falls short of the sub-meter precision that lane-level positioning requires. To reach sub-meter or even decimeter-level accuracy, systems commonly use differential corrections, Real-Time Kinematic (RTK) positioning, or satellite-based augmentation systems. These techniques, however, depend on base-station coverage and remain vulnerable to signal blockage and multipath reflections, so errors can grow or the signal can drop out entirely among tall buildings, under overpasses, and in tunnels.
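
To make the differential idea concrete, here is a minimal sketch of a simplified, position-domain correction: a base station at a surveyed location measures its own GNSS error, and a nearby rover subtracts the same error, assuming both see nearly identical atmospheric and orbital errors. Real differential GNSS and RTK apply corrections per satellite to pseudorange or carrier-phase measurements rather than to the final position, so the approach, the function names, and the coordinates here are illustrative assumptions only. A helper converts small latitude/longitude differences to meters using an equirectangular approximation.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical approximation


def latlon_to_local_m(lat, lon, ref_lat, ref_lon):
    """Approximate (east, north) offset in meters of (lat, lon) from a reference.

    Equirectangular approximation: adequate for offsets of a few kilometers.
    """
    d_lat = math.radians(lat - ref_lat)
    d_lon = math.radians(lon - ref_lon)
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(ref_lat))
    north = EARTH_RADIUS_M * d_lat
    return east, north


def differential_correction(base_known, base_measured, rover_measured):
    """Simplified position-domain differential correction (hypothetical helper).

    All arguments are (lat, lon) tuples in degrees. The error observed at the
    base station is assumed to be shared by a nearby rover and is subtracted.
    """
    err_lat = base_measured[0] - base_known[0]
    err_lon = base_measured[1] - base_known[1]
    return rover_measured[0] - err_lat, rover_measured[1] - err_lon


# Hypothetical coordinates for illustration.
base_known = (31.0, 121.0)              # surveyed base-station position
base_measured = (31.00003, 121.00002)   # what the base receiver reports
rover_measured = (31.01003, 121.01002)  # raw rover fix nearby

east, north = latlon_to_local_m(*base_measured, *base_known)
print(f"base error: {east:.2f} m east, {north:.2f} m north")
print("corrected rover:", differential_correction(base_known, base_measured, rover_measured))
```

Here the base station sees an error of roughly 3.8 m, more than a lane width; in this idealized example the rover's fix is shifted by the same amount, which the correction removes.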

Inertial measurement units (IMUs) contain accelerometers and gyroscopes that measure acceleration and changes in attitude. They respond quickly and are accurate over short periods, but because position is obtained by integrating their measurements, small sensor biases accumulate and the estimate drifts over time. Wheel odometers or wheel speed sensors report distance traveled, but tire slip and errors in the assumed tire diameter reduce their accuracy.
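
The drift is easy to see numerically. In the sketch below, an assumed constant accelerometer bias b (a hypothetical 0.01 m/s²) is integrated twice while the vehicle is actually at rest; the position error grows roughly as ½·b·t², so it is negligible after one second but reaches meters within a minute. The same sketch shows how a small error in the assumed tire diameter skews wheel-odometer distance proportionally. All values are illustrative, not measured sensor specifications.

```python
import math


def dead_reckon(accels, dt):
    """Integrate 1D acceleration twice (semi-implicit Euler) to get position."""
    velocity = 0.0
    position = 0.0
    for a in accels:
        velocity += a * dt          # acceleration -> velocity
        position += velocity * dt   # velocity -> position
    return position


def odometer_distance(wheel_revolutions, assumed_diameter_m):
    """Distance implied by wheel revolutions and an assumed tire diameter."""
    return wheel_revolutions * math.pi * assumed_diameter_m


BIAS = 0.01   # hypothetical accelerometer bias, m/s^2
DT = 0.01     # 100 Hz IMU
for seconds in (1, 10, 60):
    # The vehicle is actually stationary, so every reading is pure bias.
    drift = dead_reckon([BIAS] * round(seconds / DT), DT)
    print(f"after {seconds:>2} s: {drift:.2f} m drift "
          f"(0.5*b*t^2 = {0.5 * BIAS * seconds ** 2:.2f} m)")

# A 1 cm error in the assumed tire diameter scales every distance by the same ratio.
true_d, assumed_d = 0.65, 0.66      # hypothetical diameters, m
revs = 1000 / (math.pi * true_d)    # revolutions over a true 1 km
print(f"odometer reports {odometer_distance(revs, assumed_d):.1f} m for a true 1000 m")
```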

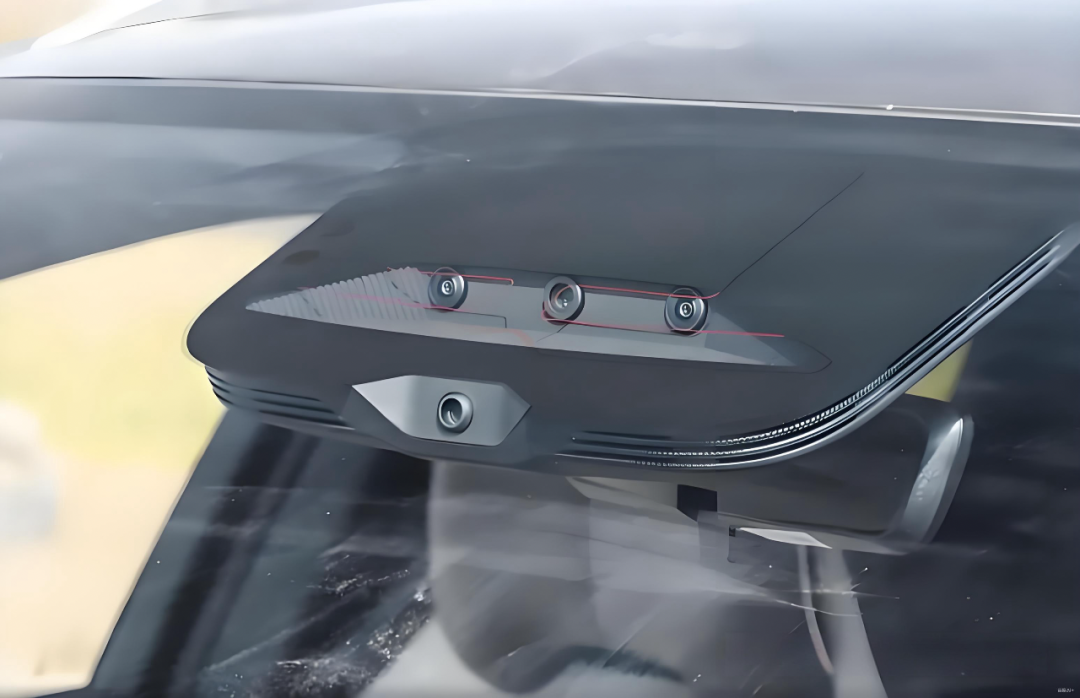

Cameras excel at capturing lane markings, curbs, traffic signs, and surrounding vehicles, which makes them crucial for determining which lane the vehicle is in. In good visibility they provide rich semantic information, but they are sensitive to lighting, rain, snow, fog, and dirt. LiDAR, by contrast, accurately measures three-dimensional distances to surrounding objects, producing point clouds that can be matched against high-definition maps or used to extract road geometry. As an active sensor, LiDAR does not depend on ambient light, but its point-cloud quality degrades in rain, snow, or fog. By combining these sensors, an autonomous vehicle builds a comprehensive picture of its position and surroundings.

Sensor Fusion and Filtering: Transforming "Chaotic Information" into Reliable Positioning

Fusing data from satellite positioning, IMUs, odometers, LiDAR, and cameras requires tools that handle uncertainty explicitly: Kalman filters and their variants, particle filters, and, increasingly, machine learning methods. Each sensor supplies an estimate together with its uncertainty, and a state-space model (how the vehicle moves) plus observation models (what each sensor measures) combine them into a single best pose estimate that carries its own uncertainty.
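
At the core of Kalman-style fusion is a simple rule for combining two independent estimates of the same quantity: weight each by the inverse of its variance. The sketch below applies that rule to two hypothetical lateral-position estimates; the numbers are made up for illustration. The fused result sits close to the more precise estimate, and its variance is smaller than either input.

```python
def fuse(mean_a, var_a, mean_b, var_b):
    """Fuse two independent Gaussian estimates of the same quantity.

    This is the scalar Kalman update: each estimate is weighted by the
    inverse of its variance, and the fused variance is smaller than either.
    """
    gain = var_a / (var_a + var_b)          # how far to move toward estimate b
    mean = mean_a + gain * (mean_b - mean_a)
    var = (1.0 - gain) * var_a              # == var_a * var_b / (var_a + var_b)
    return mean, var


# Hypothetical lateral-position estimates, in meters from the lane center:
gnss = (1.0, 1.0 ** 2)        # GNSS: 1.0 m, sigma = 1.0 m
map_match = (0.4, 0.2 ** 2)   # map matching: 0.4 m, sigma = 0.2 m
mean, var = fuse(*gnss, *map_match)
print(f"fused: {mean:.3f} m, sigma = {var ** 0.5:.3f} m")
```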

Extended Kalman Filters (EKFs) and Unscented Kalman Filters (UKFs) are commonly used to fuse high-frequency, short-term IMU and wheel speed data with low-frequency, absolute GPS fixes. GPS updates correct IMU drift whenever they are available, while the IMU keeps the estimate stable during GPS outages. Particle filters (Monte Carlo localization) are widely used for map matching, particularly when the models are nonlinear and the noise is non-Gaussian. Modern systems often use a hierarchical fusion architecture: high-frequency IMU data for real-time state propagation, wheel odometry and visual/LiDAR odometry for short-term constraints, and periodic GPS or map-matching results for global correction.
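
The predict/correct cycle can be sketched with a linear Kalman filter over a one-dimensional state [position, velocity]: IMU acceleration drives the prediction at high rate, and GNSS position fixes correct it when available. An EKF or UKF follows the same cycle but handles nonlinear motion and measurement models (e.g., heading-dependent motion in 2D), which this deliberately linear sketch omits. The noise values (`q_pos`, `q_vel`, `r_gnss`) and the simulated outage are hypothetical.

```python
class KalmanFilter1D:
    """Linear Kalman filter over the state [position (m), velocity (m/s)]."""

    def __init__(self, q_pos=0.01, q_vel=0.1, r_gnss=4.0):
        # Noise values are hypothetical tuning parameters.
        self.x = [0.0, 0.0]                     # state estimate
        self.P = [[1.0, 0.0], [0.0, 1.0]]       # state covariance
        self.q_pos, self.q_vel = q_pos, q_vel   # process noise added per step
        self.r = r_gnss                         # GNSS position variance (m^2)

    def predict(self, accel, dt):
        """Propagate with an IMU acceleration reading (high rate)."""
        p, v = self.x
        self.x = [p + v * dt + 0.5 * accel * dt * dt, v + accel * dt]
        (a, b), (c, d) = self.P
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]] and Q = diag(q_pos, q_vel)
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q_pos, b + dt * d],
            [c + dt * d, d + self.q_vel],
        ]

    def update(self, gnss_pos):
        """Correct with a GNSS position fix (low rate); H = [1, 0]."""
        (a, b), (c, d) = self.P
        s = a + self.r                  # innovation variance
        k0, k1 = a / s, c / s           # Kalman gain
        y = gnss_pos - self.x[0]        # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]


kf = KalmanFilter1D()
for step in range(100):                     # 10 s at 10 Hz, constant 1 m/s^2
    kf.predict(accel=1.0, dt=0.1)
    t = (step + 1) * 0.1
    gnss_available = not (40 <= step < 70)  # simulated 3 s outage (e.g., a tunnel)
    if gnss_available:
        kf.update(0.5 * t * t)              # noise-free fix, for clarity
    if step in (39, 69, 70):
        print(f"t={t:.1f} s  position sigma = {kf.P[0][0] ** 0.5:.2f} m")
```

Running it shows the position uncertainty growing during the simulated outage and dropping back once fixes resume, which is the drift-correction behavior described above.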

Time synchronization and spatial calibration are essential. Sensor data must be aligned to a common time base; otherwise the fusion result will be skewed. Spatial (extrinsic) calibration determines each sensor's position and orientation in the vehicle's coordinate frame, and any calibration error translates directly into a positioning error. To prevent this, systems use strict time synchronization mechanisms (e.g., pulse-per-second (PPS) signals and the Precision Time Protocol (PTP)) and careful calibration procedures (e.g., calibration boards and static point cloud registration).
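
Both requirements translate into simple geometry. At 20 m/s (72 km/h), a 20 ms timestamp offset alone shifts a measurement by 0.4 m, and a 1° yaw error in a sensor's mounting angle displaces a point 50 m away by about 0.87 m. The sketch below shows two common building blocks under simplifying 2D assumptions: interpolating the vehicle pose to a sensor's exact timestamp, and applying a sensor's extrinsic calibration (its mounting pose in the vehicle frame). The function names and sample values are hypothetical.

```python
import math
from bisect import bisect_left


def wrap_angle(a):
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def interpolate_pose(stamps, poses, t):
    """Linearly interpolate a 2D pose (x, y, yaw) at time t.

    stamps: sorted timestamps (s); poses: matching (x, y, yaw) tuples.
    """
    if not stamps[0] <= t <= stamps[-1]:
        raise ValueError("t is outside the buffered time range")
    i = bisect_left(stamps, t)
    if stamps[i] == t:
        return poses[i]
    t0, t1 = stamps[i - 1], stamps[i]
    (x0, y0, yaw0), (x1, y1, yaw1) = poses[i - 1], poses[i]
    w = (t - t0) / (t1 - t0)
    dyaw = wrap_angle(yaw1 - yaw0)  # interpolate along the shorter arc
    return (x0 + w * (x1 - x0), y0 + w * (y1 - y0), wrap_angle(yaw0 + w * dyaw))


def sensor_to_vehicle(px, py, mount):
    """Transform a point from a sensor's frame into the vehicle frame.

    mount = (x, y, yaw): the sensor's calibrated pose in the vehicle frame.
    """
    mx, my, myaw = mount
    c, s = math.cos(myaw), math.sin(myaw)
    return mx + c * px - s * py, my + s * px + c * py


# Hypothetical fused poses at 100 Hz; a camera frame arrives between two of them.
stamps = [10.00, 10.01, 10.02]
poses = [(0.0, 0.0, 0.0), (0.2, 0.0, 0.001), (0.4, 0.0, 0.002)]
print(interpolate_pose(stamps, poses, 10.015))

# A point 50 m ahead, seen by a LiDAR whose yaw calibration is off by 1 degree.
print(sensor_to_vehicle(50.0, 0.0, (1.5, 0.0, math.radians(1.0))))
```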

Managing uncertainty is equally important. Fusion algorithms must output not only a best estimate but also a measure of confidence, typically a covariance. This confidence is essential for higher-level decision-making: when positioning confidence is low, the vehicle should act proactively, for example by slowing down, increasing its following distance, relying more heavily on environmental perception, or switching to a more conservative driving strategy.
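
One simple way to turn a covariance into a driving decision is to compare a confidence bound on the lateral error with the free space the lane leaves on each side of the vehicle. The sketch below assumes a Gaussian lateral error (so the two-sided 95% bound is 1.96σ), a 3.5 m lane, and a 1.9 m-wide vehicle; the three strategy names and the halfway threshold are hypothetical, not a standard policy.

```python
import math

Z_95 = 1.96  # two-sided 95% quantile of the standard normal distribution


def lateral_bound_m(lateral_var_m2):
    """95% bound on lateral position error, assuming a Gaussian error."""
    return Z_95 * math.sqrt(lateral_var_m2)


def choose_strategy(lateral_var_m2, lane_width_m=3.5, vehicle_width_m=1.9):
    """Map lateral localization uncertainty to a (hypothetical) driving strategy."""
    margin = (lane_width_m - vehicle_width_m) / 2.0   # free space on each side
    bound = lateral_bound_m(lateral_var_m2)
    if bound <= 0.5 * margin:
        return "normal"
    if bound <= margin:
        return "cautious"   # e.g., lower speed, larger following distance
    return "degraded"       # e.g., conservative plan or takeover request


for sigma in (0.1, 0.3, 0.5):
    print(f"sigma = {sigma} m -> {choose_strategy(sigma ** 2)}")
```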

Maps and Positioning: From "Which Road" to "Which Lane"

Satellite and inertial data can place a vehicle roughly on the road network, but lane-level positioning requires high-definition (HD) maps and map matching. HD maps contain lane centerlines, lane boundaries, curbs, lane topology, crosswalks, traffic signs, and road geometry at centimeter-level accuracy. Matching real-time sensor observations against the map lets the vehicle determine its position at lane level.
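
Once the vehicle's lateral position across the road is known in map coordinates, identifying the lane reduces to finding which pair of mapped lane boundaries contains it. The sketch below assumes boundaries are given as lateral offsets measured from the road's right edge, a simplification of how HD maps actually store lane geometry; `lane_index` is a hypothetical helper. It also shows why meter-level accuracy is not enough.

```python
from bisect import bisect_right


def lane_index(lateral_offset_m, boundaries_m):
    """Return the 0-based lane index (0 = rightmost lane), or None if off-road.

    boundaries_m: sorted lateral positions of the lane boundaries, measured
    from the road's right edge, e.g. [0.0, 3.5, 7.0, 10.5] for three lanes.
    """
    if not boundaries_m[0] <= lateral_offset_m < boundaries_m[-1]:
        return None
    return bisect_right(boundaries_m, lateral_offset_m) - 1


road = [0.0, 3.5, 7.0, 10.5]   # three hypothetical 3.5 m lanes
true_offset = 5.2              # vehicle actually in the middle lane
print(lane_index(true_offset, road))        # -> 1
print(lane_index(true_offset - 2.0, road))  # -> 0 (a 2 m error picks the wrong lane)
```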

For LiDAR, map matching uses registration methods such as Iterative Closest Point (ICP) or the Normal Distributions Transform (NDT): the current point cloud is aligned with the map data, and the resulting transform corrects the vehicle pose. Visual methods match road features captured by cameras (e.g., curbs, ground markings, buildings) with the visual landmarks or semantic information stored in the map. Modern approaches use deep learning to extract robust feature descriptors that improve matching.
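
To illustrate the idea behind ICP, here is a minimal 2D point-to-point version in plain Python: it repeatedly pairs each scan point with its nearest map point and solves in closed form for the rotation and translation that best align the pairs. This is a teaching sketch under strong assumptions (a small initial offset, no outliers, brute-force nearest-neighbor search); production LiDAR localization typically works in 3D, uses spatial indexes such as k-d trees, rejects outliers, and often uses point-to-plane ICP or NDT instead. The returned pose maps scan coordinates into map coordinates, and the landmark values are hypothetical.

```python
import math


def best_rigid_transform(src, dst):
    """Closed-form 2D rotation + translation minimizing sum |R*src_i + t - dst_i|^2."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy  # center both sets
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform(points, theta, tx, ty):
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp(scan, map_points, iterations=20):
    """Estimate the pose (theta, tx, ty) that maps scan points onto the map."""
    theta, tx, ty = 0.0, 0.0, 0.0
    current = list(scan)
    for _ in range(iterations):
        # Data association: nearest map point for every scan point (brute force).
        matches = [
            min(map_points, key=lambda m: (m[0] - x) ** 2 + (m[1] - y) ** 2)
            for x, y in current
        ]
        d_theta, d_tx, d_ty = best_rigid_transform(current, matches)
        current = transform(current, d_theta, d_tx, d_ty)
        # Compose the increment with the running estimate: T <- dT * T.
        c, s = math.cos(d_theta), math.sin(d_theta)
        theta, tx, ty = theta + d_theta, c * tx - s * ty + d_tx, s * tx + c * ty + d_ty
    return theta, tx, ty


landmarks = [(0, 0), (5, 0), (5, 3), (0, 4), (2, 7), (8, 6)]  # hypothetical map points
th, tx, ty = 0.03, 0.2, -0.1                                  # true pose (rad, m, m)
c, s = math.cos(th), math.sin(th)
# Simulate the scan: map points expressed in the vehicle frame.
scan = [(c * (x - tx) + s * (y - ty), -s * (x - tx) + c * (y - ty)) for x, y in landmarks]
print(icp(scan, landmarks))
```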

Determining the specific lane requires detecting and tracking lane markings in real time. Cameras are the usual choice for lane detection; combined with a bird's-eye-view transformation and multi-frame tracking, they yield stable estimates of the vehicle's lateral deviation and heading error relative to the lane centerline. In some scenarios LiDAR also helps identify lane boundaries by analyzing height differences or continuity between the road surface and curbs in the point cloud. Matching the detected lane markings to the map then establishes which lane the vehicle is in and where it sits within that lane.
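
Once lane-marking points have been projected into a bird's-eye (ground-plane) view, lateral deviation and heading error follow from simple geometry. The sketch below assumes the points are already in the vehicle frame (x forward, y to the left, vehicle at the origin) and fits a straight line to each boundary by least squares; real systems usually fit polynomials or clothoids and track them across frames. The function names, sign conventions, and synthetic markings are choices made for this illustration.

```python
import math


def fit_line(points):
    """Least-squares fit of y = a + b*x to (x, y) points; returns (a, b)."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    b = sxy / sxx
    return my - b * mx, b


def lane_relative_pose(left_pts, right_pts):
    """Return (lateral deviation m, heading error rad, lane width m).

    Lateral deviation > 0: vehicle is left of the lane centerline.
    Heading error > 0: vehicle points to the left of the lane direction.
    Distances are measured along the vehicle's y axis, which is close to
    the perpendicular value when the heading error is small.
    """
    a_l, b_l = fit_line(left_pts)
    a_r, b_r = fit_line(right_pts)
    a_c, b_c = (a_l + a_r) / 2.0, (b_l + b_r) / 2.0  # centerline y = a_c + b_c*x
    lateral_dev = -a_c             # vehicle at y = 0, centerline at y = a_c
    heading_err = -math.atan(b_c)  # lane direction is atan(b_c) in vehicle frame
    return lateral_dev, heading_err, a_l - a_r


# Hypothetical synthetic markings: vehicle 0.3 m left of center, yawed 0.02 rad left.
slope = -math.tan(0.02)
xs = [5.0 + 2.5 * i for i in range(10)]   # 5 m to 27.5 m ahead
left = [(x, 1.45 + slope * x) for x in xs]
right = [(x, -2.05 + slope * x) for x in xs]
print(lane_relative_pose(left, right))
```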

Maps are not infallible. Road construction, temporary traffic control, or faded lane markings can create discrepancies between the map and the real world, so the positioning system must detect such map-reality inconsistencies and adjust its strategy accordingly.
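
A common pattern for detecting such inconsistencies is to compare what perception sees with what the map predicts and raise a flag only when the disagreement is both statistically large and persistent, so a single noisy frame does not trigger it. The sketch below applies this to lane width; the class name, the 3σ gate, the assumed measurement sigma, and the five-frame requirement are all hypothetical choices.

```python
class MapConsistencyMonitor:
    """Flag a map/reality mismatch when perceived and mapped lane widths disagree
    by more than `gate` standard deviations for `frames_to_flag` frames in a row.
    All default thresholds are hypothetical."""

    def __init__(self, sigma_m=0.15, gate=3.0, frames_to_flag=5):
        self.sigma_m = sigma_m            # assumed std. dev. of the width residual
        self.gate = gate
        self.frames_to_flag = frames_to_flag
        self.streak = 0                   # consecutive out-of-gate frames

    def update(self, observed_width_m, map_width_m):
        """Process one frame; return True while a mismatch is flagged."""
        z = abs(observed_width_m - map_width_m) / self.sigma_m
        self.streak = self.streak + 1 if z > self.gate else 0
        return self.streak >= self.frames_to_flag


monitor = MapConsistencyMonitor()
# Construction zone: lanes narrowed to 3.0 m, but the map still says 3.5 m.
for frame in range(6):
    print(frame, monitor.update(observed_width_m=3.0, map_width_m=3.5))
```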

What Happens When Maps Are Missing or Mismatched?

When a map is unavailable or does not match reality, how does the vehicle determine its position? Visual/LiDAR SLAM (Simultaneous Localization and Mapping), visual odometry, and learning-based scene recognition and relocalization fill the gap. SLAM estimates the vehicle's pose while incrementally building a map of the environment, and it uses loop closure detection (recognizing a previously visited place) to reduce accumulated error and keep the trajectory consistent.
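
Loop closure can be illustrated with the smallest possible pose graph: poses along a single axis, one odometry constraint between each consecutive pair, and one loop-closure constraint saying the vehicle has returned to its starting point. With equal weights on every constraint, the least-squares solution has a closed form that spreads the accumulated drift evenly across the chain. Real SLAM back ends solve the nonlinear 2D/3D version with weighted constraints, typically using general-purpose solvers such as g2o or GTSAM; `close_loop_1d` is only a hypothetical illustration.

```python
from itertools import accumulate


def close_loop_1d(odometry, loop_measurement):
    """Least-squares poses for a 1D chain with one loop-closure constraint.

    odometry[i]: measured displacement from pose i to pose i + 1.
    loop_measurement: measured displacement from pose 0 to the last pose.
    Pose 0 is fixed at 0. With unit weights, minimizing
        sum_i (d_i - u_i)^2 + (sum_i d_i - z)^2
    over the displacements d_i gives d_i = u_i - (U - z) / (n + 1),
    where U = sum_i u_i: the loop error is shared by all n + 1 constraints.
    """
    n = len(odometry)
    correction = (sum(odometry) - loop_measurement) / (n + 1)
    poses = [0.0]
    for u in odometry:
        poses.append(poses[-1] + u - correction)
    return poses


# Hypothetical drive out and back: odometry claims we ended 0.4 m from the start,
# but place recognition says we are back where we began (z = 0).
odometry = [5.0, 5.0, -4.8, -4.8]
print("dead reckoning:", list(accumulate(odometry, initial=0.0)))
print("after loop closure:", close_loop_1d(odometry, 0.0))
```

The end-point error shrinks from 0.4 m to 0.08 m. It does not vanish because, with equal weights, the loop-closure measurement is trusted no more than any single odometry step; weighting it more heavily would pull the end point closer to zero.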

Visual odometry estimates relative motion by tracking feature points or computing optical flow between consecutive frames. Combined with an IMU, it provides reliable displacement estimates for short periods without maps or GPS, preserving positioning continuity. LiDAR odometry relies on registering consecutive point clouds; it is robust to lighting changes but may struggle where point clouds are sparse or heavily occluded.
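
Whatever front end produces them, visual and LiDAR odometry output relative motions between frames, and the trajectory is obtained by chaining those increments. The 2D sketch below composes (dx, dy, dyaw) increments expressed in the vehicle's own frame; it also shows why odometry drifts, since a small per-step heading error bends the entire remaining path. The increments are synthetic and the function names are hypothetical.

```python
import math


def compose(pose, delta):
    """Apply a body-frame increment (dx, dy, dyaw) to a world-frame pose (x, y, yaw)."""
    x, y, yaw = pose
    dx, dy, dyaw = delta
    c, s = math.cos(yaw), math.sin(yaw)
    new_yaw = math.atan2(math.sin(yaw + dyaw), math.cos(yaw + dyaw))  # wrap to [-pi, pi]
    return x + c * dx - s * dy, y + s * dx + c * dy, new_yaw


def chain(start, increments):
    """Dead-reckon a trajectory by composing relative motions in order."""
    poses = [start]
    for delta in increments:
        poses.append(compose(poses[-1], delta))
    return poses


# Synthetic drive around a 10 m square: forward 10 m, then turn left 90 degrees, four times.
square = [(10.0, 0.0, math.pi / 2)] * 4
end_exact = chain((0.0, 0.0, 0.0), square)[-1]
# The same drive with a hypothetical 0.5 degree heading error on every increment.
biased = [(dx, dy, dyaw + math.radians(0.5)) for dx, dy, dyaw in square]
end_biased = chain((0.0, 0.0, 0.0), biased)[-1]
print("exact end:", end_exact)
print("end with heading bias:", end_biased,
      "gap =", math.hypot(end_biased[0], end_biased[1]))
```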

Because every method has its own uncertainties, redundancy is essential. If GPS fails, the vehicle can rely on the IMU and odometry for short-term positioning. If cameras become ineffective at night or in fog, LiDAR supplies structural information. If LiDAR is blocked or fails, camera and map data support positioning. The system continuously evaluates sensor health and data quality and dynamically selects the most reliable combination.
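
The selection logic can be as simple as filtering sources by a health score and refusing to report normal operation when nothing remains to anchor the estimate. The sketch below is a hypothetical policy: the source names, the 0-to-1 quality scores, and the 0.5 threshold are illustrative assumptions, and a real system would derive health from signal statistics, filter innovation checks, and hardware diagnostics.

```python
# Hypothetical source names, grouped by the role they play in fusion.
RELATIVE_SOURCES = ("imu", "wheel_odometry", "visual_odometry", "lidar_odometry")
ABSOLUTE_SOURCES = ("gnss_rtk", "lidar_map_matching", "camera_lane_matching")


def select_sources(quality, min_quality=0.5):
    """Choose localization inputs from per-source quality scores in [0, 1].

    Returns (relative, absolute, degraded): relative sources propagate the
    pose over short horizons, absolute sources anchor it to the map or globe.
    Missing sources count as unavailable. The threshold is hypothetical.
    """
    relative = [s for s in RELATIVE_SOURCES if quality.get(s, 0.0) >= min_quality]
    absolute = [s for s in ABSOLUTE_SOURCES if quality.get(s, 0.0) >= min_quality]
    # Degraded if nothing can propagate the pose or nothing can anchor it
    # (without an absolute reference, drift grows unchecked).
    degraded = not relative or not absolute
    return relative, absolute, degraded


# In a tunnel at night: no GNSS, poor camera, LiDAR still healthy.
tunnel = {"imu": 0.95, "wheel_odometry": 0.9, "lidar_odometry": 0.8,
          "gnss_rtk": 0.0, "camera_lane_matching": 0.3, "lidar_map_matching": 0.85}
print(select_sources(tunnel))
```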

Beyond sensor redundancy, software-level redundancy strategies are indispensable. When global matching fails, the system enters a "degraded mode" in which the vehicle slows down, allows larger lateral margins, plans more conservative trajectories, or requests a manual takeover. In fully driverless operation, the vehicle must carefully leave the main road and stop in a safe area until positioning is restored.
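
Entering and leaving degraded mode is usually debounced so the vehicle does not oscillate between modes when localization quality hovers near a threshold. A minimal hysteresis sketch follows; the mode names and cycle counts are hypothetical, and the asymmetry (quick to degrade, slow to recover) reflects the conservative bias described above rather than any specific production system.

```python
class LocalizationModeManager:
    """Debounced switching between NORMAL and DEGRADED localization modes.
    Default cycle counts are hypothetical."""

    def __init__(self, enter_after=3, exit_after=10):
        self.enter_after = enter_after   # consecutive bad cycles before degrading
        self.exit_after = exit_after     # consecutive good cycles before recovering
        self.mode = "NORMAL"
        self.bad = 0
        self.good = 0

    def step(self, localization_ok):
        """Feed one cycle's localization health check; return the current mode."""
        if localization_ok:
            self.good, self.bad = self.good + 1, 0
        else:
            self.bad, self.good = self.bad + 1, 0
        if self.mode == "NORMAL" and self.bad >= self.enter_after:
            self.mode = "DEGRADED"       # e.g., slow down, widen margins
        elif self.mode == "DEGRADED" and self.good >= self.exit_after:
            self.mode = "NORMAL"
        return self.mode


mgr = LocalizationModeManager()
history = [False] * 3 + [True] * 5 + [False] + [True] * 10
print([mgr.step(ok)[0] for ok in history])   # 'N' / 'D' per cycle
```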

For autonomous vehicles, positioning is not the end goal but the foundation for decision-making and control. The resulting road references, lane availability, and position-tagged traffic-rule information are passed to the path planning module, which needs the vehicle's exact lane position and the lane topology ahead (from a few meters to hundreds of meters) to execute lane changes, overtaking, turns, and other maneuvers.
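
To see how lane-level position and lane topology come together, consider a lane graph in which each lane links to its successor and to its adjacent lanes. A breadth-first search from the current lane to a target lane, such as a left-turn lane ahead, returns the shortest sequence of follow and lane-change actions. The graph format, lane IDs, and action names below are hypothetical; real planners search weighted graphs (distance, lane-change cost, traffic rules) rather than simply counting transitions.

```python
from collections import deque


def plan_lane_actions(lane_graph, start, goal):
    """Breadth-first search over a lane graph.

    lane_graph maps lane_id -> list of (next_lane_id, action), where action is
    "follow", "change_left" or "change_right". Returns the action sequence with
    the fewest transitions from start to goal, or None if goal is unreachable.
    """
    parent = {start: None}            # lane -> (previous lane, action taken)
    queue = deque([start])
    while queue:
        lane = queue.popleft()
        if lane == goal:
            actions = []
            while parent[lane] is not None:
                lane, action = parent[lane]
                actions.append(action)
            return actions[::-1]
        for nxt, action in lane_graph.get(lane, []):
            if nxt not in parent:
                parent[nxt] = (lane, action)
                queue.append(nxt)
    return None


# Hypothetical lane IDs: two segments (A, B), right lane 1 and left lane 2;
# B2 leads into a left-turn lane.
lanes = {
    "A1": [("B1", "follow"), ("A2", "change_left")],
    "A2": [("B2", "follow"), ("A1", "change_right")],
    "B1": [("B2", "change_left")],
    "B2": [("TURN_L", "follow"), ("B1", "change_right")],
}
print(plan_lane_actions(lanes, "A1", "TURN_L"))  # -> ['follow', 'change_left', 'follow']
```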

Final Thoughts

Determining which lane an autonomous vehicle is in seems simple at first glance, yet it requires a sophisticated blend of satellite positioning, inertial navigation, visual and LiDAR perception, high-definition maps, real-time filtering and map matching algorithms, sensor redundancy, time synchronization and spatial calibration, and a comprehensive fault-tolerance and degradation strategy. Like instruments in an orchestra, each module plays its own part while working in concert with the others.
