Li Feifei's Latest 10,000-Word Interview: Transformer May Be Obsolete in the Next Five Years

11/26 2025

Edited by Key Focus Editor

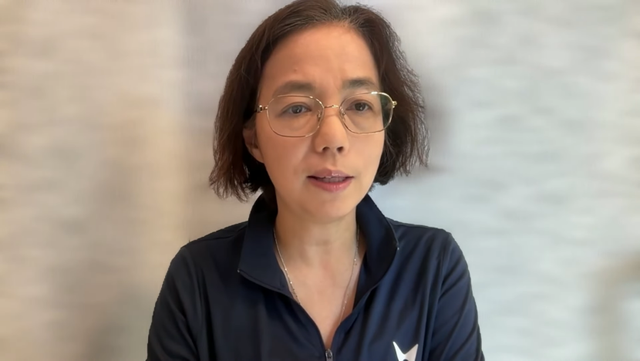

On November 24, Professor Li Feifei from Stanford University and founder of World Labs participated in a podcast interview, where she detailed her vision for Spatial Intelligence and discussed her differing intellectual approaches with Yann LeCun regarding World Models.

In constructing world models, Li Feifei and former Meta Chief AI Scientist Yann LeCun are often seen as representing two distinct schools of thought. LeCun favors models that learn abstract 'implicit representations' of the world without reconstructing every pixel of every frame, whereas Li Feifei's Marble aims to generate explicit representations from abstract internal representations, producing visual 3D worlds as output.

Li Feifei does not see these approaches as mutually exclusive. She stated that if the goal is to build a universal world model, both implicit and explicit representations will ultimately be necessary. World Labs deliberately produces explicit outputs because its commercial aim is to serve human creators in fields like game development, visual effects, and architectural design, who need visual, interactive 3D output for their daily work. Internally, however, Marble also incorporates implicit representations. She believes future architectures will be a hybrid of both.

As the founder of World Labs, Li Feifei discussed the company's first product, Marble, as well as a related research model, the 'Real-Time Frame Model' (RTFM). Unlike models that simply generate video, Marble focuses on creating consistent, persistent 3D spaces. The model accepts multimodal input, including text, images, video, and even rough 3D layouts, and, more importantly, it attempts to keep objects consistent during inference.

She noted that current Large Language Models (LLMs) primarily learn from massive textual data. While language models are impressive, much of human knowledge cannot be captured through language alone. To build truly general artificial intelligence, AI must transcend textual limitations and experience the physical world through vision and action. Human learning is inherently embodied; we interact extensively with the world without language, perceiving light, touch, gravity, and spatial relationships.

When asked whether current AI truly 'understands' the physical world, Li Feifei argued that most generated videos of flowing water or swaying trees are not based on Newtonian mechanics calculations but on statistical patterns that emerge from vast datasets. While AI may be able to fit laws of motion from data, there is currently insufficient evidence that the Transformer architecture can achieve abstractions on the level of Einstein's theory of relativity.

Regarding the pace of technological evolution, Li Feifei believes significant progress in AI's understanding of the physical world is likely within five years. She envisioned a future built around a 'multiverse': by drastically lowering the barrier to 3D content generation, people could inexpensively create countless parallel worlds. These digital parallel worlds would serve as infinite extensions of human physical experience, reshaping entertainment, education, remote collaboration, and scientific exploration.

Key Highlights from Li Feifei's Interview:

1. Spatial Intelligence as the Core

Language alone is insufficient for building Artificial General Intelligence (AGI). Much of human intelligence (e.g., spatial reasoning, emergency response) is non-verbal. AI must acquire 'Spatial Intelligence,' the deep perceptual and spatial abilities that living organisms have, to close the loop between seeing, doing, and imagining.

2. A New Paradigm for World Models

The core product of World Labs, Marble, differs from ordinary video generation models primarily in its 'Object Permanence.' In Marble's generated worlds, objects remain consistent when you turn away and return, rather than randomly deforming like in dreams.

Li Feifei's team proposed the 'Real-Time Frame Model' (RTFM), which aims to achieve efficient 3D spatial reasoning within the compute budget of a single H100 GPU. The goal is a geometrically consistent and temporally persistent 'digital container' that lays the foundation for future AI capable of understanding physical laws.

3. Harmony in Diversity with Yann LeCun

While LeCun advocates for abstract 'implicit representations' in world models, Li Feifei's Marble strives to generate explicit representations from abstract internal representations. Li Feifei believes implicit and explicit representations must ultimately converge, but currently, World Labs deliberately chooses to output explicit 3D representations to empower humans.

Future AI should not merely be a black box but a 'Neural Spatial Engine' for game developers, architects, and artists. It combines the deterministic rules of traditional physics engines (e.g., Unreal) with the statistical creativity of generative AI, enabling ordinary people to instantly construct complex 3D interactive worlds.

4. The 'Next Token' Challenge in Vision

Language models have a perfect 'predict the next token' objective function. In vision, however, 'predict the next frame' is powerful but imperfect, because it compresses the 3D world into 2D and loses structural information. The search for a 'universal task function' in vision remains an open problem.

5. AI Still Doesn't Understand Physics

AI-generated physical phenomena (e.g., gravity, collisions) mostly stem from imitating statistical patterns rather than causal understanding of physical laws. The existing Transformer architecture may struggle to produce advanced abstractions like 'relativity.' Over the next five years, the industry needs to find a new architectural breakthrough to enable AI to transition from statistical correlations to true causal logic and physical reasoning.

Excerpts from Li Feifei's Interview:

Host: Welcome to this podcast episode. I'm honored to have Dr. Li Feifei, a pioneer in artificial intelligence, join me again. Several years ago, I invited Feifei on my podcast, and I highly recommend listeners check out that episode.

In today's conversation, we'll explore her insights on 'World Models' and the importance of 'Spatial Intelligence'—key elements for building AI that truly understands and interacts with the real world. While Large Language Models (LLMs) are impressive, much (even most) human knowledge isn't captured in text. To achieve broader AI, models need firsthand experience of the world or at least learn through videos. We'll also discuss her startup, World Labs, and their first product, Marble, which can generate incredibly complex 3D spaces from internal model representations.

1. Evolution from Computer Vision to Spatial Intelligence

Host: I'd like to shift the focus away from Marble and your new model for generating consistent, persistent 3D worlds for viewers to explore and instead ask why you emphasize world models and spatial intelligence. Why are these necessary beyond mere language learning?

Additionally, how does your approach differ from Yann LeCun's? Is your current work on world models an extension of your past research on Ambient Intelligence, or is it a parallel track?

Li Feifei: The work on spatial intelligence I've been contemplating for the past few years is actually a continuation of the focus throughout my computer vision career.

I emphasize 'spatial intelligence' because our technology has reached a stage where its complexity and deep capabilities extend beyond merely 'staring' at an image or even simple video understanding. The core now lies in deeply perceptual spatial intelligence, closely tied to robotics, Embodied AI, and Ambient Intelligence. From this perspective, it's a natural extension of my career in computer vision and AI.

Host: As you and many others have pointed out, language models learn from human knowledge encoded in text, but that's only a very limited subset of human knowledge. Human learning occurs through extensive interaction with the world without language. Thus, developing models that directly experience and learn from the world is crucial if we aim to surpass the current limitations of impressive yet constrained Large Language Models.

Regarding specific methods, take Marble as an example: your approach extracts the world's internal representations learned by the model and creates a corresponding external visual reality. In contrast, Yann LeCun's method primarily builds internal representations to enable the model to learn physical laws of motion. Is there a parallel relationship here? Are these methods complementary, or do they overlap?

Li Feifei: First, I wouldn't position myself against Yann LeCun. I believe we're intellectually on the same continuum, just taking different entry points into spatial intelligence and world modeling.

If you read my recently published long essay on 'Spatial Intelligence' (which I call a manifesto), I make this point clear. I argue that if we aim to build a universal, all-encompassing world model, implicit representations and some degree of explicit representations will likely be necessary, especially at the output layer.

For instance, World Labs' current world model, Marble, does explicitly output 3D representations, but internally, it also incorporates implicit representations. Honestly, I believe both are ultimately indispensable.

The same applies to input modalities. Yes, learning from video is crucial, as the entire world can be seen as a massive input of continuous frames. However, true intelligence, whether for animals or machines, isn't just passive observation. It involves embodied experiences of action and interaction, as well as touch, sound, smell, physical forces, temperature, etc. Thus, I consider this deeply multimodal.

Marble as a model is just the first step. In our technical report released a few days ago, we made it quite clear: multimodality is both a learning paradigm and an input paradigm. There's much discussion in academia currently, showing how exciting and early-stage this field is. Arguably, our exploration of exact model architectures and representation methods is far from over.

2. Beyond Text: Multimodal Input and Learning Paradigms

Host: In your world model, is the primary input video?

Li Feifei: If you've experienced Marble, you'll know our world model's input is quite multimodal. You can use pure text, one or multiple images, handle video, and even input rough 3D layouts (like boxes or voxels). It's multimodal, and I believe as we progress, these capabilities will deepen further.

Text is just one modality. And this is where we differ: most animals don't learn through complex language, but humans do. Our AI world models today will learn from massive amounts of language alongside other modalities, rather than relying on language alone to compress and transmit information.

Host: This is also a limitation of Large Language Models (LLMs): their parameters are fixed after training, so they don't learn continuously, apart from a limited degree of learning at inference time. Is this a problem you're trying to address in building world models? Presumably world models should keep learning when they encounter new environments.

Li Feifei: Yes, the continual learning paradigm is indeed crucial. That's how living organisms learn, including us humans. Even in biological learning, there's a distinction between online and offline learning. In its current form, our world model is still more in batch, or offline, learning mode. But we're absolutely open to other approaches, especially future online learning and multimodal fusion.
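To make the batch-versus-online distinction concrete, here is a deliberately tiny sketch. It is not how World Labs trains its models; the one-parameter model y = w·x, the function names (`batch_fit`, `online_fit`, `make_stream`), and the mid-stream "environment change" are all hypothetical. A batch learner fits once to a fixed dataset and is then frozen, while an online learner keeps updating as new samples arrive, so it can track a world that changes.

```python
# Hypothetical toy illustrating batch vs. online learning; not World Labs' training setup.

def batch_fit(xs, ys):
    """Offline/batch learning: least-squares fit of y = w*x over the whole dataset at once."""
    num = sum(x * y for x, y in zip(xs, ys))
    den = sum(x * x for x in xs)
    return num / den

def online_fit(stream, lr=0.01, w=0.0):
    """Online learning: stochastic gradient descent, one sample at a time."""
    for x, y in stream:
        grad = 2 * (w * x - y) * x  # derivative of the squared error (w*x - y)^2 w.r.t. w
        w -= lr * grad
    return w

def make_stream(w_true, n):
    """Noise-free samples y = w_true * x, cycling through x in {0.1, ..., 1.0}."""
    xs = [i / 10 for i in range(1, 11)]
    return [(xs[k % 10], w_true * xs[k % 10]) for k in range(n)]

# Phase 1: the "world" follows w = 3; both learners see the same data.
phase1 = make_stream(3.0, 2000)
w_batch = batch_fit([x for x, _ in phase1], [y for _, y in phase1])
w_online = online_fit(phase1)

# Phase 2: the environment changes to w = 5. The batch model stays frozen,
# while the online model keeps adapting from where it left off.
phase2 = make_stream(5.0, 2000)
w_online = online_fit(phase2, w=w_online)
print(round(w_batch, 3), round(w_online, 3))
```

The batch estimate stays at 3 until someone retrains it; the online estimate drifts to 5 on its own, which is the behavior continual learning is after.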

Host: What would that look like? Would it be a completely different architecture or merely an engineering implementation?

Li Feifei: I'll maintain an open mindset. I believe it will be a hybrid. Obviously, excellent engineering implementation is needed, such as fine-tuning and online learning, but new architectures may also emerge.

Host: Can you discuss the Real-Time Frame Model and your work on world models?

Li Feifei: You're referring to our technical blog published a few weeks ago, which specifically delved into our Real-Time Frame Model. World Labs is a research-focused organization; while we care about products, much of our work at this stage is model-first. We're focusing on how to advance Spatial Intelligence. This particular work is dedicated to achieving frame-based generation while maintaining geometric consistency and persistence as much as possible.

In earlier frame-based generation methods, object persistence was often lost as the viewpoint moved forward. In this work, we tried to strike a balance, and to do so computationally efficiently at inference time, using only a single H100 GPU. We don't know the details for other frame-based models, since they haven't disclosed how many chips they use during inference, but we assume the computational cost is significant.

3. Searching for a 'Universal Task Function' in Spatial Intelligence

Host: In your 'manifesto,' you discussed the need for a 'Universal Task Function,' similar to 'Next Token Prediction' in language models. Does it involve predictive elements?

Li Feifei: One of the most significant breakthroughs in generative AI has indeed been the discovery of the objective function of 'next token prediction.' It's a very elegant formulation because language exists in a serialized manner, and you can tokenize language into this sequential representation. The learning function you use for next token prediction is exactly what's needed during inference. Whether language is generated by humans or computers, it's essentially about advancing tokens one after another. Having an objective function that is perfectly (100%) aligned with the final actual task is excellent because it allows optimization to be fully targeted.

But in computer vision or world modeling, things aren't so simple. Language is inherently human-generated; there's no 'language' in nature that you stare at—even if you eventually learn to read, it's because it has already been generated. However, our relationship with the world is much more multimodal: the world is there waiting for you to observe, interpret, reason about, and interact with it. Humans also have a 'Mind's Eye' capable of constructing different versions of reality, imagining, and generating stories. This is far more complex.
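The alignment Li Feifei describes, where the training objective is exactly the inference task, can be seen in a toy bigram model. This is a minimal sketch assuming a count-based bigram model with greedy decoding, a stand-in for the neural networks real LLMs use; the corpus and function names are illustrative. The conditional distribution p(next | previous) that the loss scores during training is the same one that `generate` queries during inference.

```python
import math
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Estimate p(next | prev) by counting adjacent token pairs (maximum-likelihood bigram model)."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return {prev: {t: c / sum(ctr.values()) for t, c in ctr.items()}
            for prev, ctr in counts.items()}

def next_token_loss(model, tokens):
    """Training objective: mean negative log-likelihood of each next token given the previous one."""
    nll = [-math.log(model[prev][nxt]) for prev, nxt in zip(tokens, tokens[1:])]
    return sum(nll) / len(nll)

def generate(model, start, n):
    """Inference: repeatedly append the most likely next token (greedy decoding)
    using the same conditional distribution the loss was computed on."""
    out = [start]
    for _ in range(n):
        dist = model.get(out[-1])
        if not dist:  # no observed continuation for this token
            break
        out.append(max(dist, key=dist.get))
    return out

corpus = "the cat sat on the mat".split()
model = train_bigram(corpus)
print(round(next_token_loss(model, corpus), 4))
print(" ".join(generate(model, "the", 4)))
```

Vision has no equally clean counterpart: there is no single obvious "next token" for a 3D world, which is the open question raised in the exchange that follows.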

Host: So, what defines this universal task? Or what could be a universal objective function we can use? Is there something as powerful as 'next token prediction'? Is it 3D reconstruction?

Li Feifei: That's a very profound question. Some would actually argue that the universal task of world modeling might just be 3D reconstruction of the world. If that were the objective function and we achieved it, many things would fall into place. But I don't think so because most animal brains don't necessarily perform precise 3D reconstruction, yet tigers or humans are such powerful visual agents in space.

'Next frame prediction' does have some power. First, there's a vast amount of data available for training; second, to predict the next frame, you must learn the structure of the world because the world isn't white noise—there's a tremendous amount of structural connection between frames.

But it's also unsatisfying because you're treating the world as two-dimensional, which is a terrible way to compress it. Even if done perfectly, the 3D structure is only implicit, and this frame-based approach loses a lot of information. So there's still a lot of exploration to be done in this area.
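Her point that treating the world as two-dimensional loses information can be illustrated with the standard pinhole camera model. In this sketch (textbook projective geometry, not a description of RTFM; the points and function names are illustrative), two different 3D points on the same viewing ray land on the same pixel, so a single frame cannot tell them apart. A second view from a shifted camera separates them, and the disparity between the two views recovers depth, which is one sense in which 3D structure is only implicit in a stream of frames.

```python
def project(point, f=1.0):
    """Pinhole projection: a 3D point (X, Y, Z) with Z > 0 maps to the image point (f*X/Z, f*Y/Z)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    return (f * x / z, f * y / z)

def project_shifted(point, baseline, f=1.0):
    """A second camera translated by `baseline` along the X axis (a simple stereo pair)."""
    x, y, z = point
    return project((x - baseline, y, z), f)

def depth_from_disparity(u_first, u_second, baseline, f=1.0):
    """Standard stereo relation: Z = f * baseline / disparity."""
    return f * baseline / (u_first - u_second)

near = (1.0, 0.5, 2.0)
far = (2.0, 1.0, 4.0)  # same viewing ray as `near`, twice as far away

# One frame: both points project to the same pixel, so depth is lost.
print(project(near), project(far))

# Two frames: the shifted view separates them, and depth can be recovered.
for p in (near, far):
    u1, u2 = project(p)[0], project_shifted(p, 1.0)[0]
    print(p, "-> recovered depth", depth_from_disparity(u1, u2, 1.0))
```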

Host: I have to ask you: Did you name your model RTFM (Real-Time Frame Model) as a joke?

Li Feifei: It was indeed a clever pun. The name wasn't mine; it came from one of our researchers, who has a real talent for naming things. We thought it was fun to play with that name.

Host: But RTFM is predicting the next frame with 3D consistency, right?

Li Feifei: Yes.

Host: That's where the interesting part of the model's internal representation comes in. For example, when I look at a computer screen, even if I can't see the back, I know what it looks like because I have an internal representation of it in my mind. That's why you can move objects around on the screen—a two-dimensional plane—and still perceive the other side. The model has an internal representation of the 3D object, even if its current viewpoint doesn't see the back. When you talk about spatial intelligence, does that include natural physical laws? Like understanding that you can't pass through a solid object? Or if standing on the edge of a cliff, it knows that's the edge and would fall instead of floating in the air if it walked over?

Li Feifei: What you're describing has both physical and semantic aspects. Falling off a cliff depends heavily on the laws of gravity, whereas passing through a wall depends on material and semantics (solid vs. non-solid). As it stands, RTFM does not yet focus on explicit physics.

Much of the 'physics' actually emerges from statistics. Many generative video models showing water flowing or trees moving aren't computed based on Newtonian mechanics and mass calculations but rather follow a vast amount of statistical patterns. World Labs is still focused on generating and exploring static worlds, but we'll also explore dynamics, much of which will be statistical learning.

I don't think today's AI has the ability to abstract across different levels and derive physical laws. On the other hand, we have game and physics engines like Unreal, in which explicit physical laws are at play. Eventually, these game and physics engines will merge with world-generating models into what I call a 'Neural Spatial Engine.' We're moving in that direction, but it's still early days.
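For contrast with "physics" that emerges from statistics, here is what explicit, hand-coded rules look like in a toy engine. This is a hypothetical sketch, not Unreal Engine's API or any World Labs component; `Body`, `step`, `ground_height`, and the constants are invented for illustration. It encodes the two cases from the conversation: walking off a cliff edge (gravity) and not passing through a wall (solidity). Here the behavior is guaranteed by the rules rather than learned from data.

```python
from dataclasses import dataclass

GRAVITY = -9.8  # m/s^2, toy value pulling bodies downward

@dataclass
class Body:
    x: float
    y: float
    vx: float
    vy: float = 0.0

def step(body, dt, ground_height, walls):
    """Advance `body` by one explicit-Euler step under two hard-coded rules.

    ground_height(x) -> floor height at x, or None where there is no floor (past a cliff edge).
    walls -> x positions of solid walls that a body may not pass through.
    """
    new_x = body.x + body.vx * dt
    # Rule 1 (solidity): a move that would cross a wall is cancelled and the body stops.
    for w in walls:
        if body.x < w <= new_x or new_x <= w < body.x:
            new_x, body.vx = body.x, 0.0
            break
    # Rule 2 (gravity): always accelerate downward, then let the floor (if any) support the body.
    body.vy += GRAVITY * dt
    new_y = body.y + body.vy * dt
    floor = ground_height(new_x)
    if floor is not None and new_y <= floor:
        new_y, body.vy = floor, 0.0
    body.x, body.y = new_x, new_y

def simulate(body, ground_height, walls=(), dt=0.1, steps=20):
    for _ in range(steps):
        step(body, dt, ground_height, walls)
    return body

# A cliff edge at x = 5: floor at height 0 before it, nothing after it.
cliff = simulate(Body(x=4.0, y=0.0, vx=1.0), lambda x: 0.0 if x < 5.0 else None)
# Flat floor everywhere, with a solid wall at x = 5.
wall = simulate(Body(x=4.0, y=0.0, vx=1.0), lambda x: 0.0, walls=[5.0])
print(f"cliff: x={cliff.x:.2f}, y={cliff.y:.2f}")  # walked off the edge and fell (y < 0)
print(f"wall:  x={wall.x:.2f}, y={wall.y:.2f}")    # stopped before the wall, still on the floor
```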

4. The Value of Explicit Representations: Empowering Creators and Industries

Host: I'm not trying to pit you against Yann LeCun. But it seems like you're focused on generating explicit representations from abstract internal ones, whereas Yann just focuses on the internal representations.

Li Feifei: In my view, they'll combine beautifully. We're exploring both. Outputting explicit representations is actually a very deliberate approach because we want this to be useful to humans.

We want this to be useful for people in creation, simulation, and design. If you look at today's industries—whether you're making visual effects (VFX), developing games, designing interiors, or simulating for robotics or autonomous vehicles (digital twins)—these workflows are heavily 3D-dependent. We want these models to be absolutely useful for individuals and businesses.

Host: That brings us back to the topic of continual learning. For example, a model on a robot moving through the world with cameras, eventually not just learning the scene but understanding the physics of space, and then combining that with language? Does that require continual learning?

Li Feifei: Absolutely. Especially as you approach certain use cases, continual learning becomes crucial. This can be achieved in multiple ways: in language models, using context itself as input is a form of continual learning (as memory); there's also online learning and fine-tuning. In spatial intelligence, whether it's personalized robots or artists with specific styles, it will ultimately drive the technology to become more responsive across different timescales to meet specific use case needs.

5. Future Outlook: Technological Leaps in AI Models

Host: Your progress has been incredibly rapid. Especially considering you once ran a dry-cleaning business in New Jersey—even if it was just for a short time—the leap is truly astonishing. What's your judgment on how developed this technology will be in five years? Will models have some kind of built-in physics engine internally, or possess longer-timescale learning abilities to build richer internal representations? In other words, will models begin to truly understand the physical world?

Li Feifei: Actually, as a scientist, it's hard to give a precise timeline because some technological advancements happen much faster than I expect, while others are much slower. But I think this is a very good goal, and five years is a reasonably solid estimate. I don't know if we'll get there faster, but in my view, it's far more reliable than guessing fifty years—or five months, for that matter.

Host: Could you elaborate a bit on why you believe 'Spatial Intelligence' is the next frontier? As we know, human knowledge contained in text is only a subset of all human knowledge. While it's incredibly rich, you can't expect an AI model to understand the world through text alone. Could you specifically talk about why this matters? And how are Marble and World Labs connected to this larger goal?

Li Feifei: Fundamentally, technology should help humanity. At the same time, understanding the science of intelligence itself is the most fascinating, bold, and ambitious scientific exploration I can think of—it's a 21st-century endeavor.

Whether you're driven by scientific curiosity or motivated by using technology to help humanity, it all points to one thing: much of our intelligence—and the intelligence we apply in our work—transcends language. I've jokingly said before that you can't put out a fire with language. In my manifesto, I gave several examples: whether it's spatial reasoning, deriving the DNA double helix structure, or a first responder collaborating with a team to put out a fire in a rapidly changing situation, much of it transcends language.

So from an application standpoint, it's obvious; as a scientific exploration, we should do our best to crack how to develop spatial intelligence technologies that take us to the next level. From a macro perspective, this is the dual motivation driving me: scientific discovery and building useful tools for humanity.

We can dive deeper into its practicality. Whether we're talking about creativity, simulation, design, immersive experiences, education, healthcare, or even manufacturing, there's so much that can be done with spatial intelligence. I'm actually very excited because many people who care about education, immersive learning, and experiences have told me that Marble (the first model we've released) has inspired them to think about using it for immersive experiences that make learning more interactive and fun. It's so natural: children who haven't even learned to speak yet learn entirely through immersive experience. Even as adults, we spend most of our lives immersed in this world. Yes, that includes listening, speaking, reading, and writing, but it also includes practicing, interacting, and enjoying.

Host: Yes. One thing that impressed everyone about Marble was that it wasn't just generating the next frame but moving through a space—and it was running on just a single H100 GPU. I've heard you mention 'experiencing the multiverse' in other talks. Everyone got excited until they realized it would require massive computational power and expensive costs. Do you really think this is a step toward creating virtual worlds for education? Because it seems like you've already been able to reduce the computational burden.

Li Feifei: First, I truly believe we'll accelerate in terms of inference—we'll become more efficient, better, larger in scale, and higher in quality. That's the trend of technology. I also believe in the concept of the multiverse. As far as we know, the entirety of human history's experiences has existed in just one world—the physical entity of Earth, to be precise. While a tiny handful of people have walked on the moon, that's about it. We build civilizations, live, and do everything in 3D space.

But with the digital revolution and explosion, we're transferring parts of our lives into the digital world, and there's a lot of overlap between the two. I don't want to paint a dystopian picture where we abandon the physical world, nor do I want to depict an extreme utopian virtual world where everyone wears helmets and never appreciates the real world—the most fulfilling part of life. I reject both extremes.

But from a practical standpoint and future vision, the digital world is boundless. It's infinite, offering more dimensions and experiences than the physical world allows. For example, we've discussed learning. I wish I could have learned chemistry in a more interactive, immersive way. I remember my university chemistry classes had a lot about molecular arrangements, understanding symmetry, and asymmetry in molecular structures. I wish I could have physically experienced those things in an immersive environment.

Many creators I've met have countless ideas swirling in their minds at every moment, but they're constrained by their tools. For example, expressing a world from your mind in Unreal Engine takes hours or even weeks of work. Whether you're making a fantasy musical or designing a bedroom for a newborn, it would be fascinating to let people use the digital universe for trial and error, communication, and creation, just as we do in the physical world.

Moreover, the digital age is helping us break physical boundaries and labor constraints. Think about teleoperating robots. I can easily imagine creators collaborating globally through embodied avatars, using robotic arms or any form and digital space to work in both the physical and digital worlds. The film industry will also be completely transformed; currently, movies are passive experiences—wonderful though they may be—but we'll change how entertainment is consumed. So all of this requires multiple worlds.

Host: On teleoperating robots: some people talk about mining rare earths on asteroids. If you can operate robots remotely instead of being physically present, you can do that work in those spaces. What you're talking about is creating explicit representations of 3D spaces that people can experience. To what extent does your model itself 'understand' the space it's in? Does it internalize this information, or is it merely projecting it explicitly?

This is part of the path toward AI that truly understands the world: not just having a representation of 3D space but truly understanding physical laws and what it sees, including the value and usefulness of things and how to manipulate the physical world. How much of this understanding exists today, in your view? And what needs to happen for these models to truly understand the world?

Li Feifei: That's a great question. 'Understanding' is a profound word. When AI 'understands' something, it's fundamentally different from human understanding. Partly because we're very different beings. Humans are embodied; we exist in tangible bodies. For example, when we truly understand 'my friend is happy,' it's not just abstract understanding. You feel the chemical reactions happening inside you, releasing happy hormones, your heartbeat speeds up, emotions change. That level of understanding is vastly different from an abstract AI agent.

An AI agent can correctly assign meaning and make connections. For example, in Marble—our product—you can enter an advanced mode of world generation for editing. You can preview the world and say, 'I don't like this sofa being pink; change it to blue.' Then it changes it to blue. Does it understand the meaning of 'blue sofa' and the word 'change'? Yes. Because without that understanding, it couldn't perform the task.

But does it understand everything about a sofa the way you and I do—including its purpose or even useless information? Does it have memories about sofas? Would it generalize the concept of 'sofa' to many other things? No, it doesn't. As a model, its capabilities are limited to creating a space with a blue sofa upon request.

So I do think AI 'understands,' but don't misinterpret this understanding as anthropomorphic, human-level comprehension. This understanding is more semantic than the kind that involves light hitting the retina and producing perceptual experiences.

Host: I saw your discussion with Peter Diamandis and Eric Schmidt. One thing that impressed me was the discussion about AI potentially having creativity or being used to aid scientific research. The analogy given was: If AI had existed before Einstein discovered relativity, could it have reasoned out that discovery? What's missing for AI to have that kind of creativity at the level of scientific reasoning?

Li Feifei: I think we're closer to having AI derive the double helix structure than to having it propose special relativity. Partly because we've already seen a lot of excellent work on protein folding. Inferring the double helix representation is more rooted in space and geometry.

The formulation of special relativity, however, operates at an abstract level. Everything we observe in physics, from Newton's laws to quantum mechanics, involves abstracting effects to the causal level. Concepts like mass and force, for instance, are abstracted to a level beyond what is merely generated by purely statistical patterns. Language can be statistical, and the dynamics of the 3D or 2D world can be statistical, but the abstraction of force, mass, magnetism, and causality is not purely statistical; it is deeply causal and abstract.

Eric and I have both mentioned on stage that if we were to aggregate all the data from celestial observations and satellite data and feed it into today's AI, it might be able to derive and fit Newton's laws of motion through the data.
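The kind of data fitting mentioned here can be sketched with orbital data. As an illustration, assuming approximate textbook values for six planets, a least-squares fit in log space recovers Kepler's third law, T ∝ a^(3/2), which follows from Newtonian gravity. This is a stand-in for the celestial datasets mentioned in the conversation, not a reproduction of any actual experiment. Note what the fit does not produce: it finds an exponent, but not concepts such as force or mass, which is the gap Li Feifei goes on to describe.

```python
import math

# Approximate semi-major axes (AU) and orbital periods (Earth years); illustrative textbook values.
PLANETS = {
    "Mercury": (0.387, 0.241),
    "Venus": (0.723, 0.615),
    "Earth": (1.000, 1.000),
    "Mars": (1.524, 1.881),
    "Jupiter": (5.203, 11.86),
    "Saturn": (9.537, 29.46),
}

def fit_power_law(pairs):
    """Ordinary least squares on log T = k * log a + c; returns (k, c)."""
    xs = [math.log(a) for a, _ in pairs]
    ys = [math.log(t) for _, t in pairs]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    k = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return k, my - k * mx

k, c = fit_power_law(PLANETS.values())
print(f"T ∝ a^{k:.3f} (intercept {c:.4f})")  # exponent close to 1.5, intercept close to 0
```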

Host: Since AI can infer the laws of motion given the data, why do you think it cannot derive the laws of relativity?

Li Feifei: When we say those laws were 'derived,' Newton had to derive and abstract concepts like 'force,' 'mass,' 'acceleration,' and those fundamental constants. Those concepts reside at an abstract level that I have not yet seen in current AI.

Today's AI can leverage vast amounts of data, but there is not much evidence yet that it can achieve that level of abstract representation, variables, or relationships. I don't know everything happening in AI, and if I'm proven wrong, I'd be happy to accept it. But I haven't heard of any work that can achieve that level of abstraction within the architecture of Transformer models. I don't see where that abstraction would come from, which is why I'm skeptical. It requires constructing internal representations of internal abstractions and applying rules of logical knowledge. This may require us to make more progress in infrastructure and algorithms.

Host: That's exactly what I wanted to ask. You've been discussing post-Transformer architectures with people. Do you anticipate a new architecture emerging that could unlock some of these capabilities?

Li Feifei: I agree; I do think we will have architectural breakthroughs. I don't believe the Transformer is the last invention in AI. On a macroscopic timescale, compared to the history of the universe, human existence hasn't been very long, but in our brief history, we've never stopped innovating. So I don't think the Transformer is the ultimate algorithmic architecture for AI.

Host: You once said that getting an AI system to label images or generate captions would have been the pinnacle of your career. Of course, you've long since surpassed that. So what do you now imagine as the pinnacle of your career from here on?

Li Feifei: I do think it's important to unlock 'spatial intelligence.' Creating a model that truly connects perception with reasoning: from 'seeing' to 'doing,' including planning and imagination, and translating imagination into creation. That would be truly remarkable. A model capable of doing all three simultaneously.