Without a Map, Can Pure Vision Autonomous Driving Stumble Blindly?

![]() 11/27 2025

11/27 2025

![]() 568

568

Recently, in an article delving into high-precision maps, a contributor presented a fascinating perspective: "If someone finds themselves in an unfamiliar locale and depends solely on their eyes (pure vision) to find their way, without any navigation aids, they're essentially groping in the dark." Does this analogy hold true for pure vision autonomous driving as well?

Image Source: Internet

Divergent 'Vision'-Based Navigation: Humans vs. Machines

Before diving into this discussion, let's clarify what "pure vision" entails. It refers to a perception system that relies exclusively on cameras, be it a single lens or multiple ones. Whether the visual data is fed directly into an end-to-end neural network to generate control commands or processed through object detection, semantic segmentation, and depth estimation before traditional planning, the emphasis is on using cameras as the primary, if not the sole, sensor.

The merits of cameras are undeniable. They capture a wealth of information (colors, textures, text, signs), are cost-effective, offer high resolution, and facilitate manual annotation and semantic interpretation. However, cameras are susceptible to lighting conditions, visibility issues, and struggle to directly measure precise distances and speeds, especially for distant objects.

Image Source: Internet

If an individual finds themselves in an unfamiliar city, armed only with their eyes and a vehicle, they might indeed feel like they're navigating blindly. Yet, humans don't solely rely on their eyes for navigation. They possess long-term memory (of familiar neighborhoods), linguistic and social skills (to ask for directions), abstract reasoning (to understand road signs and traffic rules), and common-sense inferences about the scene (which road likely leads to the city center). Humans can also tolerate uncertainty and take proactive exploratory actions (slowing down, pulling over to observe, making tentative turns).

To mimic these abilities, machines cannot rely solely on a single image frame. Continuous video footage, multi-temporal reasoning, learned scene models, and external information (such as high-definition maps and positioning) can compensate for human memory and reasoning capabilities. In essence, when humans navigate an unfamiliar city without navigation aids, they don't rely solely on pure vision but rather on a blend of multiple information sources and active exploration. This is precisely why autonomous driving systems adopt multi-sensor and multi-information source approaches to mitigate the limitations of cameras.

What Can Pure Vision Accomplish?

The capabilities of pure vision should not be underestimated. Many automotive companies have developed autonomous driving systems centered around visual solutions. With deep learning, cameras can perform robust semantic understanding tasks, such as identifying vehicles, pedestrians, traffic signs, and signals, discerning lane lines, and segmenting drivable areas.

Through temporal information (consecutive frames) and learned motion models, cameras can estimate the vehicle's motion (visual odometry/VO) and relative depth (monocular depth estimation or stereo/depth matching). Combining these capabilities, pure vision systems can complete the perception-prediction-planning loop under favorable lighting and weather conditions, particularly in structured environments (like highways and urban main roads) and within defined operational design domains (ODDs).

However, the fact that pure vision can accomplish certain tasks does not imply it can entirely replace other sensors. Cameras perform poorly in scenarios such as nighttime or extremely low-light conditions, strong backlighting, rain, snow, fog, reflective or flat textureless surfaces (e.g., large smooth ground areas or bare snowfields), heavily occluded complex intersections, and early warnings for distant small objects (e.g., pedestrians or small vehicles suddenly appearing in the distance).

Image Source: Internet

Monocular cameras also suffer from scale ambiguity (i.e., it's challenging to determine the absolute distance of objects from images alone). Although this can be partially resolved through structure from motion or learning, the accuracy and robustness still fall short of those of radar/lidar. Additionally, cameras are susceptible to optical illusions (e.g., reflections, projections, extreme contrasts) and can be easily "confused" by abnormal lighting or extreme scenes. These limitations directly relate to the design of safety redundancy. When perception becomes unreliable, the system must either degrade (limit speed, actively stop) or rely on other sensors.

In reality, many current technical solutions adopt a "redundancy and complementarity" strategy. Cameras excel at semantic understanding and long-range visual details, millimeter-wave radars perform well in measuring relative speeds in rain, snow, and fog and have penetration capabilities, and lidars are more reliable in constructing precise three-dimensional geometries.

Most mature autonomous driving systems opt for multi-sensor fusion to cover a broader range of ODDs. Of course, some technical solutions consistently promote a "camera-dominant" or "camera-first" approach, relying on extensive scene data training, strictly limiting operational domains, and designing detailed degradation strategies to ensure safety.

Can Pure Vision Replace Maps and Positioning?

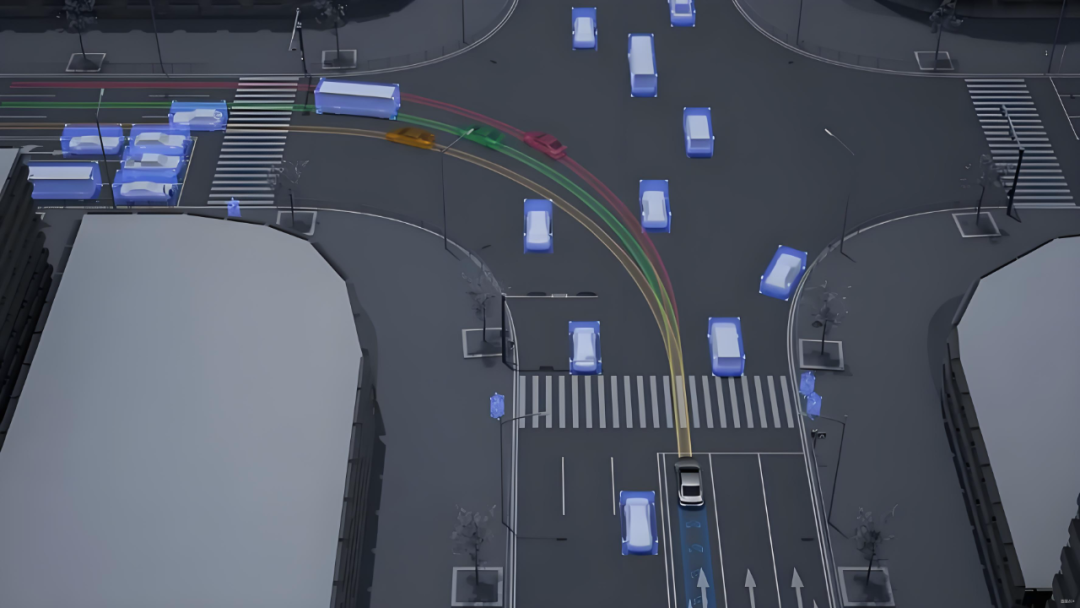

Returning to our main topic, the contributor underscored the importance of navigation (maps) for pure vision. Maps and positioning address the questions of "where am I" and "where is the destination." Pure vision can perform relative positioning (through visual odometry or visual SLAM) and even global positioning based on image matching (visual localization/visual database retrieval).

However, to truly deploy autonomous driving in vehicles, absolute positioning is indispensable. Absolute positioning (high-precision GNSS, precise projection of vehicle coordinates onto a map) plays a pivotal role in scenarios such as narrow lanes, complex intersections, and situations requiring precise trajectory tracking.

Image Source: Internet

Maps (especially lightweight vector maps or road network information) provide semantic and prior information for planning, significantly reducing the burden of online reasoning and enhancing safety margins. Pure vision can replace some map functions, but it's extremely challenging to drive safely without a map and relying solely on cameras in all scenarios.

Since pure vision can replace some map functions, how can its capabilities be maximized? Currently, multiple solutions are promoting this idea. Self-supervised depth and visual odometry algorithms can learn depth and motion without dense annotations; multi-view and temporal fusion can improve long-range depth estimation; leveraging neural scene representations (such as NeRF-like ideas) or large-scale model visual understanding can enable the system to better infer unobserved parts when encountering similar scenes; additionally, converting camera outputs to BEV representations, combining them with trajectory prediction, and introducing uncertainty modeling at the planning layer can make pure vision systems' decisions more reliable.

Final Reflections

Pure vision is not a panacea, but its capabilities should not be underestimated. It offers significant advantages in semantic understanding and cost-effectiveness and can handle a substantial portion of tasks in controlled scenarios. However, when confronted with extreme lighting conditions, adverse weather, long-range warnings, and absolute positioning requirements, the physical and algorithmic limitations of cameras become apparent. We believe that ensuring the safety of intelligent driving does not rely solely on a single sense but rather on multi-source information and rigorous engineering.

-- END --