Win Glory for the Nation! Zhipu's GLM-4.7 Dominates Global Open-Source Models, Outshining GPT-5.2

12/25 2025

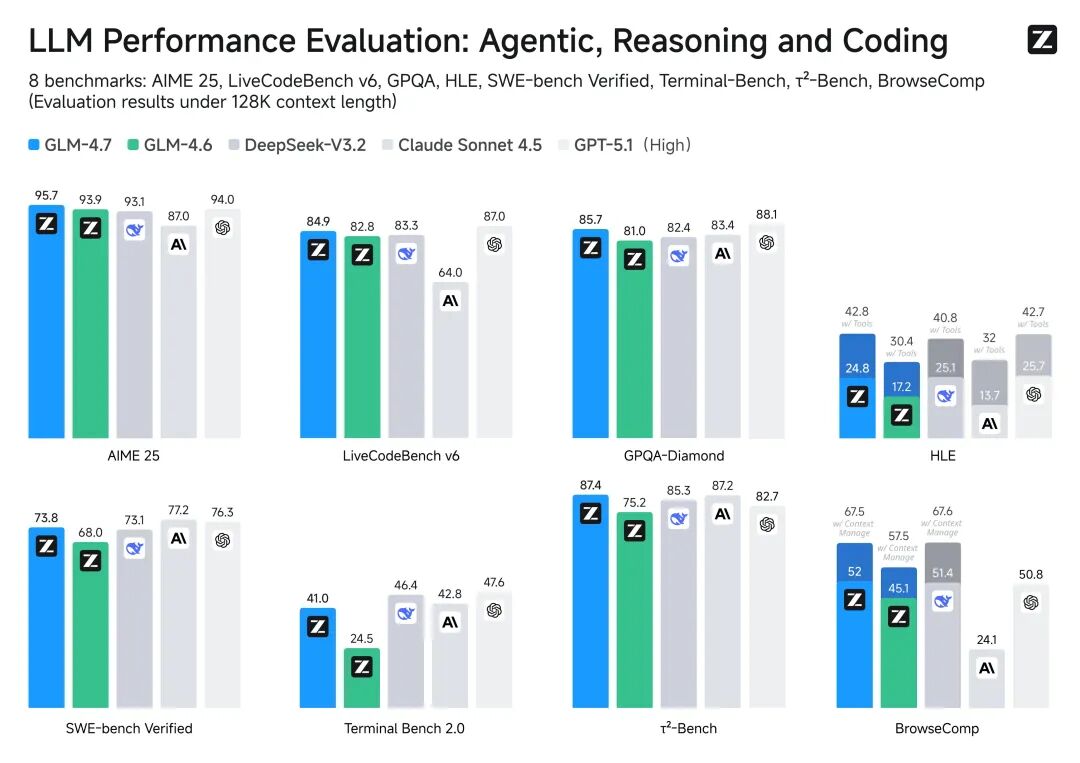

Zhipu has once again brought pride to the nation! As it prepares to list on the Hong Kong Stock Exchange, aiming to become the world's first publicly traded large-model company, Zhipu has unveiled its GLM-4.7 model. The standout feature of GLM-4.7 is its programming ability, which ranks first among domestic models. Globally, it beats every other open-source model and even outscores GPT-5.2.

Programming is not its only strength. Tool invocation and complex reasoning have also improved significantly, which makes GLM-4.7 well suited to both coding and agent scenarios.

Forget the intricate parameters; let's jump straight into real-world examples!

First, let's explore the official examples provided.

Programming · Complex Interactions:

Aesthetics · PPT · Posters:

Frontend Optimization · Diverse Styles:

Put aside the hype; let's see the tangible results. Below, I'll personally assess GLM-4.7's programming capabilities and compare them with those of Gemini 3.0 Pro.

First, let's test game generation by creating a Mario-inspired adventure game.

GLM-4.7:

Gemini 3.0 Pro:

GLM's graphics and controls are clearly better.

Gemini's version is only a basic demo, and its jump height is unreasonable.

Now, let's create a 3D racing game.

GLM-4.7:

Gemini 3.0 Pro:

This case is more challenging.

GLM needed several rounds of debugging to reach the result above, and it still has persistent problems with directional control and noticeable lag.

Gemini's controls are smoother, with fewer glitches.

I tested several 3D games and applications. Both models still have considerable room for improvement, but Gemini performs relatively better.

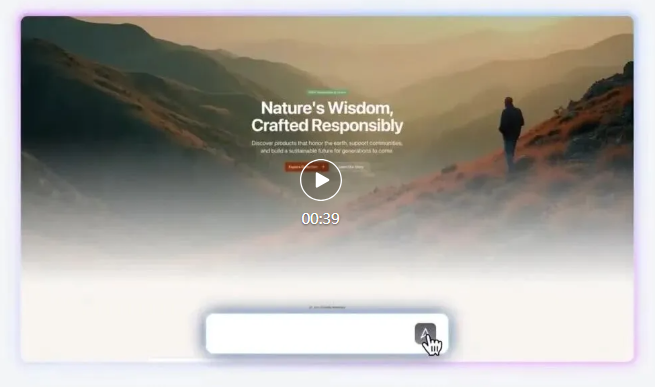

Now, let's assess web programming capabilities by creating a Python learning website.

GLM-4.7:

Gemini 3.0 Pro:

The two user interfaces are remarkably similar, and both use the same dynamic background effect.

Overall, GLM's result is better. It added an animated demonstration of a sorting algorithm at the bottom of the page, which was a pleasant surprise.

Next, let's generate a website for poster design inspiration.

GLM-4.7:

Gemini 3.0 Pro:

Both results are impressive, but I personally prefer Gemini's layout, which is more visually appealing.

Across these test cases, GLM-4.7 holds its own against Gemini 3.0 Pro.

It's remarkable how swiftly domestic models have caught up with Google and OpenAI. Zhipu is truly impressive.

GLM-4.7 offers different thinking modes for different scenarios.

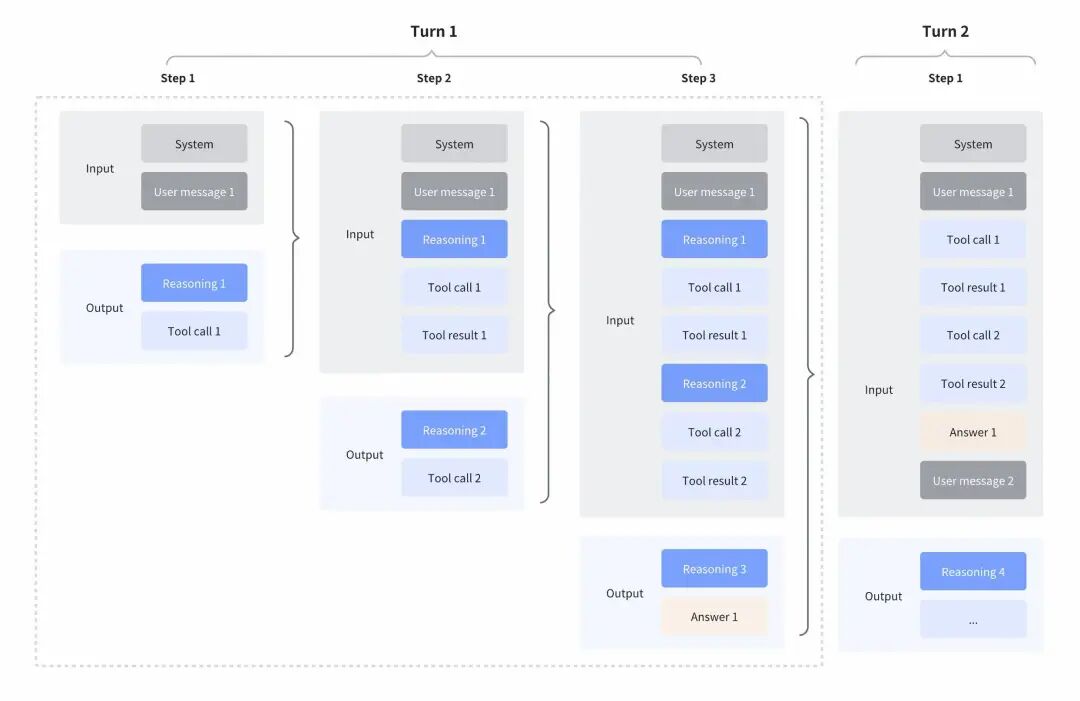

Interleaved Thinking

GLM-4.7 defaults to interleaved thinking.

This means the model reasons between tool calls, each time after a tool's result comes back.

Before the next operation, it interprets the result of each tool invocation, chains these interpretations with its reasoning steps, and combines the intermediate results to draw conclusions.

This enables GLM to support more complex reasoning.
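The control flow described above can be sketched in a few lines of Python. This is only an illustration, not Zhipu's implementation or API: the tool names, the `interleaved_run` function, and the fixed `plan` are all hypothetical, and a real model would pick each tool call itself.

```python
from typing import Callable

# Hypothetical tool registry; names and behaviour are illustrative only.
TOOLS: dict[str, Callable[[str], str]] = {
    "read_file": lambda path: f"<contents of {path}>",
    "run_tests": lambda target: f"2 failures in {target}",
}


def interleaved_run(plan: list[tuple[str, str]]) -> list[dict]:
    """Alternate tool calls with reasoning steps over a fixed plan.

    The fixed plan stands in for the model's own decisions so that the
    interleaving itself stays visible.
    """
    transcript: list[dict] = []
    for tool_name, argument in plan:
        result = TOOLS[tool_name](argument)
        transcript.append({"role": "tool", "name": tool_name, "content": result})
        # Reasoning slot: runs after the result arrives, before the next call.
        transcript.append(
            {"role": "reasoning", "content": f"{tool_name} returned: {result}"}
        )
    return transcript


steps = interleaved_run([("read_file", "app.py"), ("run_tests", "app.py")])
for step in steps:
    print(step["role"], "|", step["content"])
```

The point of the sketch is the ordering: every tool result is followed by a reasoning entry before the next tool is called, rather than all reasoning happening once up front.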

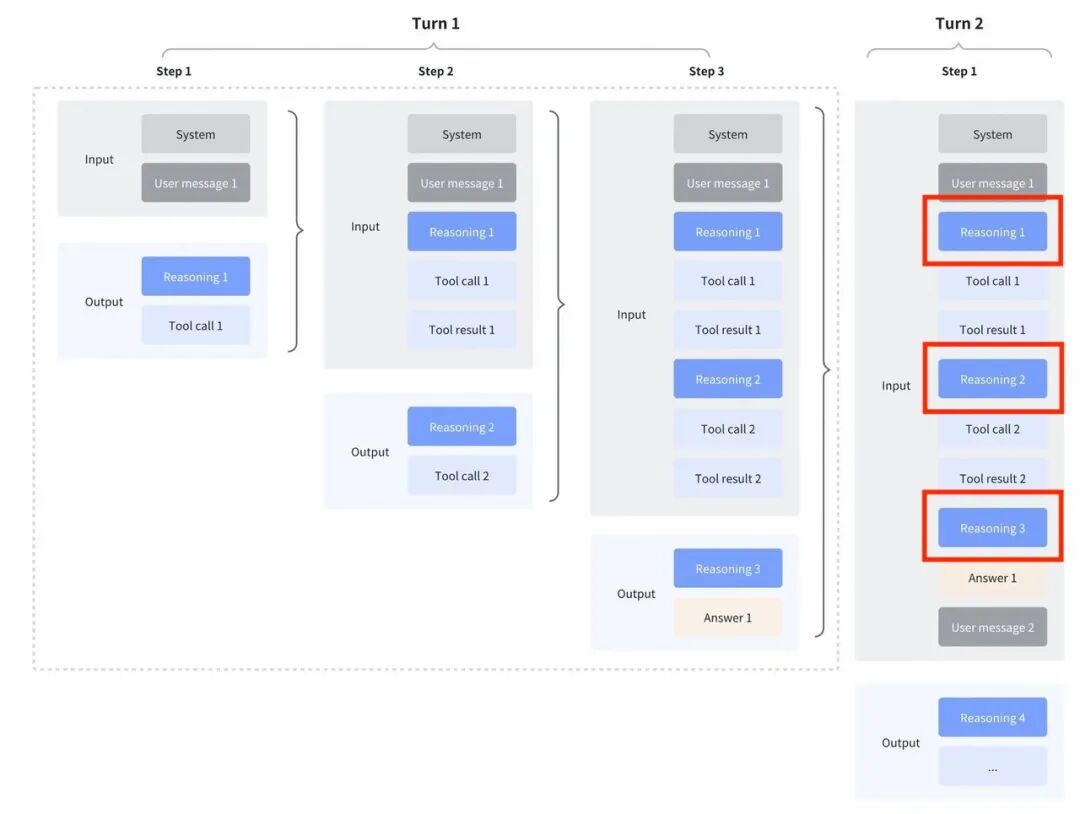

Retained Thinking

This is a new feature introduced in GLM-4.7 specifically for programming scenarios.

It means the model retains reasoning content from previous turns.

This improves cache utilization and saves tokens. It also maintains reasoning continuity and dialogue integrity.
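As a rough sketch of what retaining reasoning could look like on the client side, the snippet below builds the message list for the next turn with and without earlier reasoning. The `reasoning` field name and the `build_context` helper are assumptions made for illustration; they are not taken from Zhipu's documentation.

```python
def build_context(history: list[dict], retain_reasoning: bool) -> list[dict]:
    """Return the messages to send on the next turn.

    `reasoning` is a hypothetical field holding a turn's thinking content.
    """
    if retain_reasoning:
        # Earlier turns are passed through unchanged, reasoning included.
        return [dict(message) for message in history]
    # Otherwise the reasoning is dropped and only the visible content remains.
    return [
        {key: value for key, value in message.items() if key != "reasoning"}
        for message in history
    ]


history = [
    {"role": "user", "content": "Fix the failing test in parser.py"},
    {
        "role": "assistant",
        "reasoning": "The test fails because the tokenizer skips empty lines.",
        "content": "I patched the tokenizer to keep empty lines.",
    },
]

retained = build_context(history, retain_reasoning=True)
stripped = build_context(history, retain_reasoning=False)
print("reasoning" in retained[1], "reasoning" in stripped[1])
```

When the earlier messages are resent unchanged, the next request shares a longer identical prefix with the previous one, which is the general condition under which a prefix cache can be reused.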

Turn-Based Thinking

This means each turn can independently choose whether to activate thinking mode.

This reduces reasoning overhead and costs, making reasoning more flexible.
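A minimal sketch of the per-turn switch follows. The `thinking_enabled` key and the `make_turn` helper are hypothetical placeholders, not the real request format; the actual parameter name should be taken from Zhipu's API documentation.

```python
def make_turn(prompt: str, think: bool) -> dict:
    """Build one request payload with a per-turn thinking switch.

    `thinking_enabled` is a placeholder key used only for this sketch.
    """
    return {"prompt": prompt, "thinking_enabled": think}


conversation = [
    # A simple edit: skip thinking to save tokens and latency.
    make_turn("Rename the variable x to count", think=False),
    # A harder problem in the same conversation: turn thinking on.
    make_turn("Find the race condition in this scheduler", think=True),
]

for turn in conversation:
    print(turn["thinking_enabled"], "|", turn["prompt"])
```

Because the switch is set per request, cheap turns and expensive turns can be mixed within the same conversation.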

Finally, we wish Zhipu success in becoming the world's first publicly traded large-model company, and we hope to see more and more domestic models top the charts!