Fierce Competition in 2025: The Open-Source Revolution of Large Models

12/26 2025

Ecological Niche Trumps Technological Barriers

Written by / Chen Dengxin

Edited by / Li Jinlin

Formatted by / Annalee

In 2025, open source has emerged as the defining characteristic of large models.

Since the onset of the generative AI revolution, the debate between open-source and closed-source large models has raged on, with neither side able to sway the other, creating an apparently irreconcilable divide.

Now, however, the answer has become clear.

With a growing number of internet giants siding with open source, the open-source community has gained the upper hand in 2025, propelling open-source large models to the forefront in terms of global user adoption.

Undoubtedly, the dynamics of the battle have shifted.

DeepSeek Disrupts the Status Quo

Large models once followed a predictable trajectory.

Following the example set by ChatGPT in the United States, closed-source large models were initially seen as the inevitable path, and most rising stars adopted closed-source strategies.

In hindsight, closed-source large models held distinct competitive advantages in data security, commercial monetization, and response speed, making it logical for ChatGPT and others to pursue this path. However, they also faced limitations in AI accessibility, ecosystem development, and market penetration.

Thus, open-source large models emerged as a compelling alternative.

Meta spearheaded the open-source movement for large models internationally, while Alibaba led it in China. Under the leadership of these top-tier internet giants, a growing number of companies opted for the open-source route.

Consequently, large models entered a new competitive landscape, characterized by two opposing factions.

This led to increasingly intense clashes, with one camp arguing that "future open-source models will fall behind" and the other countering that "without open source, there would be no Linux, and without Linux, no internet."

Against this backdrop, a compromise emerged.

Some companies kept closed-source strategies for their own models while offering third-party open-source large models through their cloud platforms, meeting practical needs across diverse scenarios. At the time, this was the best available solution.

Xiaomi's Open-Source Large Model MiMo

It wasn't until DeepSeek gained momentum that subtle yet significant changes occurred.

DeepSeek-R1's total training duration was approximately 80 hours, with a training cost of about $294,000, disrupting the traditional notion of "brute force through computational power" with its low-cost, high-efficiency approach.

More critically, DeepSeek embraced an open-source strategy.

In the wake of the "DeepSeek moment," the open-source camp gained significant momentum, prompting more internet companies to switch sides and tilting the competitive landscape decisively in its favor.

For instance, with Hunyuan World Model 1.5, Tencent open-sourced for the first time what it presents as the industry's most systematic and comprehensive real-time world model framework, covering the full pipeline of data, training, and streaming inference deployment.

Another example is OpenAI, which open-sourced a new model, Circuit-Sparsity. It has only 0.4 billion parameters, 99.9% of whose weights are set to zero, pointing to a new path for model sparsity.

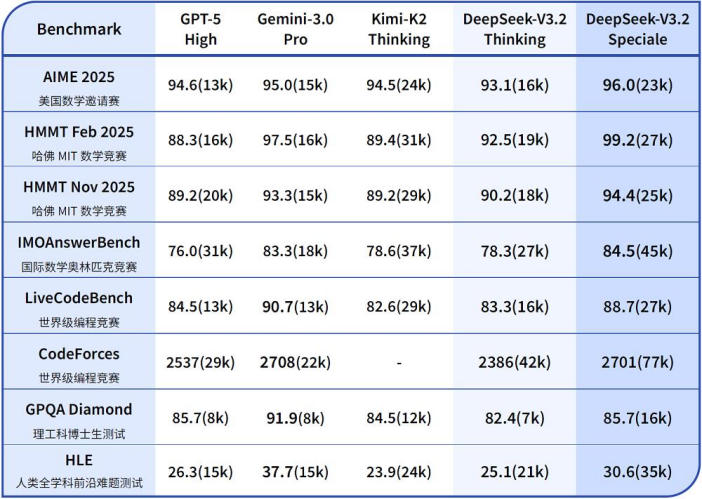

According to the "China Intelligent Internet Development Report (2025)," open-source models represented by DeepSeek and Qwen have caught up with or even surpassed international mainstream products in core performance, marking the transition from "following" to "running alongside." The narrowing technological gap has rapidly shifted the focus of market competition from pure performance comparison to cost, efficiency, and commercialization capabilities.

China's Open-Source Large Models Pack a Punch

Meanwhile, Meta has "switched sides."

Meta's Llama large model has long been a flagship of open source. Now, however, Meta is betting on Avocado, a closed-source large model planned for release around the first quarter of 2026.

"QbitAI" stated, "Meta, which plans to move towards closed source, is essentially using open-source models to train its own closed-source models. For Meta, which has long used 'open source' as its core narrative, this represents a complete strategic U-turn, marking the decline of the once-brightest open-source force among Silicon Valley unicorns."

Why Open Source Has Become the Inevitable Path

Meta aside, embracing open source remains the mainstream global trend, for three key reasons.

Firstly, the focus on applications has become a consensus.

The industry agrees that the ultimate goal of large models is AI applications. Whoever develops killer applications first will gain greater industry influence and a higher ecological niche in AI.

Thus, AI applications have become a competitive battleground for internet giants.

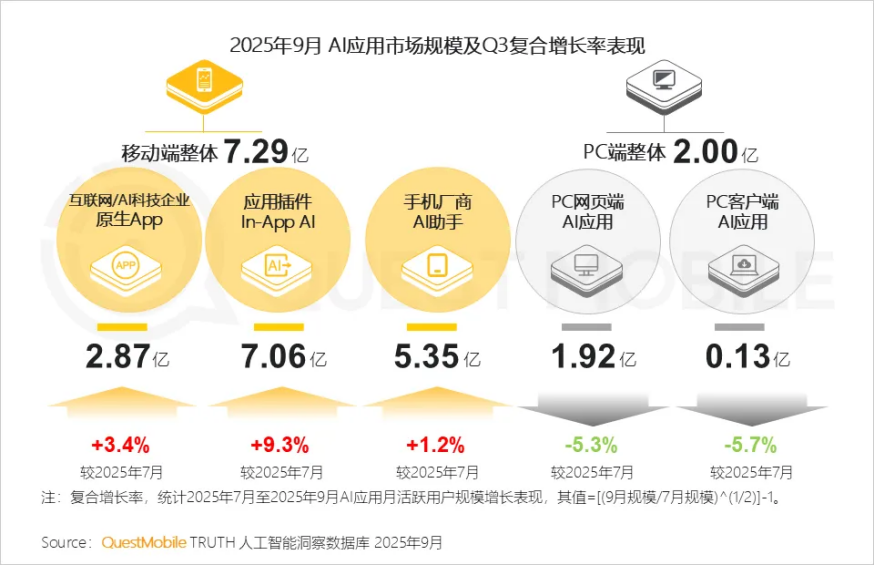

According to QuestMobile, by September 2025 the AI market had entered a fiercely competitive phase of rapid iteration, with technical pursuits shifting from "big and comprehensive" to "precise and powerful," that is, improving performance within controllable costs. Monthly active users of AI applications on mobile and PC had reached 729 million and 200 million, respectively, and Doubao had overtaken DeepSeek, with 172 million monthly active users versus DeepSeek's 145 million.

Source: QuestMobile

In practice, open source has become the shortest path to deploying large models. It rallies dispersed forces to jointly build a thriving AI application ecosystem and to compete for the super traffic gateways of the AI era, a visibly win-win arrangement that internet giants naturally embrace.

"Zero State LT" stated, "Now that a consensus has formed, the next competitive edge lies in who can offer more comprehensive open-source resources and deliver more substantial value. Comprehensive doesn't mean simply stacking parameters to the ceiling but covering as many modalities, scenarios, and applications as possible. Substantial value doesn't mean symbolically opening a few versions but presenting a complete set of deployable model capabilities."

Secondly, the ecosystem reigns supreme for large models.

With open-source models, developers only need to fine-tune a model for their specific application scenario, which significantly lowers the barriers to entrepreneurship and innovation, reduces costs, shortens iteration cycles, and improves user experience.

Moreover, the vast developer community can provide technical feedback, pushing technological boundaries and accelerating large model iteration through open-source knowledge aggregation.

Academician Mei Hong of the Chinese Academy of Sciences stated, "Large models in the future need to follow the path of the internet and become open source, with a globally maintained open and shared foundational model to ensure synchronization with human knowledge. Otherwise, any foundational model controlled by a single institution will make it difficult for other institutional users to confidently upload application data."

This has given rise to a healthy large model ecosystem.

As developers become accustomed to an open-source ecosystem, they tend to remain as loyal users and are more likely to accept other services within the ecosystem, thereby strengthening the overall foundation.

In short, the high stickiness brought by open source can generate incalculable commercial value, prompting internet giants to employ various strategies to compete for this strategic high ground.

For instance, Alibaba has open-sourced more than 300 models, which have spawned over 170,000 derivative models, ranking it first in the world among open-source large model providers. It follows a "horse-racing" approach, fielding many models side by side.

Another example is Baidu, which has independently developed the Kunlunxin P800 chip and a 10,000-card cluster, tying its open-source models to self-developed computing power and following a full-stack, self-reliant route.

Thirdly, open source ≠ free.

Zhang Peng, CEO of Zhipu AI, once said, "Historical experience, including that of MySQL and Red Hat, shows that open source does not mean completely free of charge, as there are still costs for subsequent investment in technical personnel and maintenance."

In short, open source also offers paths for commercialization.

The first path is enterprise-edition fees. Enterprise editions can offer value-added features such as ultra-long context support at the million-token scale, professional technical services, fine-grained computational optimization, and multimodal image understanding, attracting enterprise users to pay for these functional differences.

The second path is commercial API fees. Some internet companies build open-source ecosystems to attract developers, then monetize through commercial APIs that offer faster inference, stronger deployment capabilities, and higher performance ceilings.

The third path is cloud service fees. Most internet giants are also major cloud providers, so they use open-source large models to drive traffic and then sell advanced paid cloud features such as computing power leasing, data encryption, cross-cloud cluster management, and full-process toolchains.

Seen in this light, it is only natural that open source has become the common choice of internet giants.

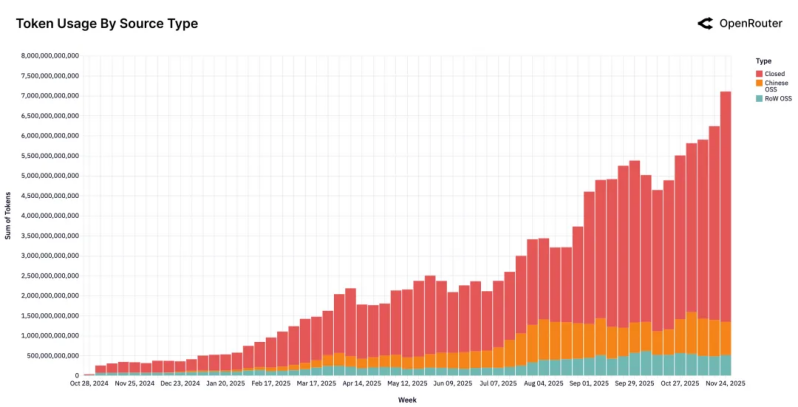

In conclusion, the emergence of DeepSeek in 2025 has disrupted the previous balance between open source and closed source, leading more companies to recognize that open source is no longer an option but a necessity. With the rapid iteration of open-source large models, even Silicon Valley giants like OpenAI, Google, and NVIDIA have entered the fray. OpenRouter data shows that the market share of open-source models has climbed to 33%.

Source: OpenRouter

As more players enter the field, the competitive landscape of open source has undergone subtle changes, shifting from DeepSeek's dominance to diversified competition, meaning users now have more choices.

Thus, the future of open-source large models holds even greater promise.