Hot Topic | Nvidia’s $20 Billion Acquisition of Groq: A Strategic Move to “Block” AI Inference Rivals

12/31 2025

Preface:

On Christmas Eve in Silicon Valley, Nvidia CEO Jensen Huang unveiled what could be termed a “$20 billion Christmas gift”—a historic partnership with AI chip startup Groq.

This sum far exceeds the roughly $7 billion Nvidia agreed to pay for Mellanox in 2019, making it the company’s largest-ever deal.

Behind this seemingly bold move lies Huang’s concern over the AI inference market and a deeper strategic defense of Nvidia’s supply chain dominance.

Inference Shakes the Landscape: Groq’s Rise as a Disruptor

For the past decade, Nvidia has dominated the AI training market through its GPU architecture and CUDA software ecosystem.

Data centers, research institutions, and most AI model training worldwide rely heavily on Nvidia’s GPUs. However, as AI applications shifted from training to inference between 2024 and 2025, demand for low latency, efficiency, and cost-effectiveness surged. Traditional GPUs are no longer the optimal choice for such workloads.

Against this backdrop, inference-accelerating chips known as LPUs (Language Processing Units) have emerged, with Groq becoming the sector’s most heavily capitalized star.

Groq was founded by Jonathan Ross, a former core engineer on Google’s TPU, and its chip architecture excels in inference performance and cost efficiency.

Groq’s LPU features fully static scheduling, no dynamic branching, and deterministic instruction-level execution. These choices run against the grain of GPU design, but they suit predictable latency, load-independent performance, and SLA-driven commercial deployments.
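As a toy illustration of what fully static scheduling means, the sketch below fixes every operation's start cycle ahead of time, so the total latency is known before anything runs. It is not Groq's compiler: the `Op` type, the operation names, and the cycle counts are all hypothetical, and the sketch assumes unlimited functional units and operations listed in dependency order.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Op:
    """A hypothetical operation with a fixed, known cycle cost."""
    name: str
    cycles: int
    deps: tuple = ()  # names of operations that must finish first


def static_schedule(ops):
    """Assign each op a fixed start cycle at 'compile time'.

    Assumes ops are listed in dependency (topological) order and that
    there is no contention for hardware units (a simplification).
    """
    start, finish = {}, {}
    for op in ops:
        # An op starts as soon as all of its dependencies have finished.
        begin = max((finish[d] for d in op.deps), default=0)
        start[op.name] = begin
        finish[op.name] = begin + op.cycles
    return start, max(finish.values())


# Hypothetical four-step inference kernel with made-up cycle counts.
program = [
    Op("load_weights", 4),
    Op("load_input", 2),
    Op("matmul", 8, deps=("load_weights", "load_input")),
    Op("activation", 1, deps=("matmul",)),
]

start, total_cycles = static_schedule(program)
print(start)         # every start cycle is fixed before execution
print(total_cycles)  # 13 cycles, identical on every run
```

Because nothing is decided at run time, the latency of this toy program does not depend on load, which is the property the article attributes to the LPU design.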

Public data indicates that Groq’s inference chips offer competitive speeds, potentially achieving up to 10 times higher efficiency than traditional GPUs.

This positions Groq as a clear technical leader in latency-sensitive, real-time AI applications.

If adopted on a large scale, this advantage could directly challenge Nvidia’s GPU-centric ecosystem.

Leveraging this differentiation, Groq quickly became a favorite among investors, raising $1.8 billion since 2016 from backers including BlackRock, Samsung, and Cisco.

A $750 million funding round in September 2025 valued the company at $6.9 billion.

At the time, Groq set a $500 million annual revenue target, secured strategic partnerships with Meta, IBM, and Saudi Aramco, and planned large-scale AI inference data centers.

No one expected that just three months later, this startup aiming to disrupt Nvidia would be “recruited” by the tech giant for $20 billion.

The $20 Billion Premium: What Is Nvidia Defending Against?

The most puzzling aspect of the deal is Groq’s sub-1% market share and 2025 revenue of just $172.5 million—far from posing a threat to Nvidia.
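The size of the premium can be checked from figures quoted in this article. The short sketch below computes two multiples: deal price over the September 2025 valuation, and deal price over 2025 revenue. The variable and function names are illustrative only.

```python
# Figures quoted in the article, in US dollars.
DEAL_PRICE = 20e9         # reported deal value
LAST_VALUATION = 6.9e9    # valuation at the September 2025 funding round
REVENUE_2025 = 172.5e6    # reported 2025 revenue


def multiple(numerator: float, denominator: float) -> float:
    """Return numerator / denominator rounded to one decimal place."""
    return round(numerator / denominator, 1)


premium_over_valuation = multiple(DEAL_PRICE, LAST_VALUATION)
price_to_revenue = multiple(DEAL_PRICE, REVENUE_2025)

print(f"Premium over last valuation: {premium_over_valuation}x")  # 2.9x
print(f"Price-to-revenue multiple:   {price_to_revenue}x")        # 115.9x
```

On these numbers, Nvidia paid close to three times the valuation set three months earlier, and well over 100 times Groq's annual revenue.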

Why did Huang act so urgently? The answer lies in AI’s structural shifts and Nvidia’s supply chain vulnerabilities.

The AI industry is transitioning from training to inference. IDC projects that the global AI inference chip market will reach three times the size of the training market by 2025.

Unlike training chips, which prioritize raw computational power, inference chips must balance latency, throughput, and cost.

Scenarios like autonomous driving, high-frequency trading, and real-time chatbots demand cost-effective specialized chips over expensive general-purpose GPUs.

While Nvidia holds 90% of the training market, it faces multi-front competition in inference.

Google’s TPU is used by Apple and Anthropic for both training and inference. Meta, OpenAI, and others are developing proprietary inference chips to reduce reliance on external suppliers. Startups like Groq and Cerebras are capturing market share with differentiated technologies.

In an internal memo, Huang wrote that the Groq deal aims to “integrate its low-latency processors into Nvidia’s AI factory architecture for broader inference and real-time workloads,” an open acknowledgment of gaps in Nvidia’s inference portfolio.

More critical than filling gaps is defending against supply chain risks. Nvidia’s true competitive advantage lies not just in CUDA but in its dominant buying power over critical components like HBM (high-bandwidth memory) and CoWoS (TSMC’s Chip-on-Wafer-on-Substrate advanced packaging).

HBM and CoWoS are essential for high-end AI chips but face severe capacity constraints. TSMC plans to produce just 65,000–80,000 CoWoS wafers monthly by 2025, with over 60% allocated to Nvidia.

This means even superior chip designs from competitors may struggle to secure HBM and CoWoS supplies for mass production.
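The squeeze on rivals follows from simple arithmetic on the capacity figures quoted above. The sketch below treats "over 60%" as a lower bound on Nvidia's share, so the results are upper bounds on what everyone else can obtain; the names are illustrative.

```python
# Figures quoted in the article.
MONTHLY_WAFERS_LOW = 65_000    # low end of TSMC's planned monthly CoWoS output
MONTHLY_WAFERS_HIGH = 80_000   # high end of the same range
NVIDIA_SHARE_PCT = 60          # "over 60%", used here as a lower bound


def max_wafers_for_others(total_wafers: int, nvidia_share_pct: int) -> int:
    """Upper bound on monthly CoWoS wafers left for all other customers."""
    return total_wafers * (100 - nvidia_share_pct) // 100


others_low = max_wafers_for_others(MONTHLY_WAFERS_LOW, NVIDIA_SHARE_PCT)
others_high = max_wafers_for_others(MONTHLY_WAFERS_HIGH, NVIDIA_SHARE_PCT)

print(f"At most {others_low:,} to {others_high:,} wafers per month for everyone else")
```

That leaves at most 26,000 to 32,000 wafers a month to be shared among every other AI chip designer.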

Groq’s technology bypasses both bottlenecks. Using on-chip SRAM, its chips eliminate the need for HBM and complex CoWoS packaging, enabling production via foundries like Intel.

If Groq’s approach succeeds, AI chip production thresholds will drop sharply, allowing other designers to mass-produce inference chips without relying on scarce supply chains. That would be an existential threat to Nvidia’s “scarcity empire.”

“When you no longer depend on Micron’s or Samsung’s HBM or CoWoS capacity, manufacturing flexibility and options expand significantly,” analyst Max Weinbach noted.

Huang’s $20 billion payment is essentially a preemptive strike. Even if future breakthroughs bypass current systems, Nvidia must control the new path.

The deal also reflects strategic capital and geopolitical considerations. Groq’s investors include 1789 Capital, co-founded by Trump’s eldest son, and Saudi Arabia’s sovereign wealth fund.

Just a month before the deal, the U.S. government approved top-tier AI chip exports to Saudi Arabia, and Trump signed an executive order advancing unified AI federal regulation.

Analysts suggest Huang’s move aims to secure export exemptions and regulatory benefits from Washington and the Middle East.

Ultimately, Nvidia is leveraging its $60.6 billion cash reserves to dominate AI’s core resources through investments, acquisitions, and technology licensing.

From AI model developer Cohere to cloud provider CoreWeave and inference chipmaker Groq, and even massive investments in rivals Intel and OpenAI, Nvidia is weaving an impenetrable ecosystem.

Silicon Valley’s New Playbook: “Buy the Soul, Not the Shell” via Acqui-Hires

This blockbuster deal bears the hallmarks of Silicon Valley deal-making. Hours after the news broke, both sides clarified that it was not a full acquisition but a non-exclusive technology licensing agreement.

Nvidia secured Groq’s core technology and talent while letting Groq operate independently.

This “acqui-hire” strategy is a calculated “hollow-out integration,” avoiding antitrust scrutiny while neutralizing threats.

Under the agreement, Nvidia gains non-exclusive rights to Groq’s core IP. Groq’s founder Jonathan Ross, president Sunny Madra, and key engineers join Nvidia.

Groq continues as an independent company, with its CFO becoming CEO and GroqCloud operating normally.

Such deals have become the norm in Silicon Valley.

In June 2025, Meta paid $15 billion to poach Scale AI’s founder and core engineers.

In July, Google hired Windsurf’s founder and team for $2.4 billion, later launching the AI coding tool Antigravity.

In September, Nvidia hired Enfabrica’s CEO and team for $900 million, securing technology rights.

The logic is clear: in AI’s hyper-competitive landscape, talent and core tech trump corporate shells.

This model is reshaping industry dynamics. Giants use capital to absorb potential disruptors as technical modules, while startups pivot from “IPO dreams” to “being acquired.”

LPU’s Glory and Gaps: Technical Flaws and Industry Shifts

Despite Nvidia’s premium, Groq’s LPU chips have flaws. Their technical limitations reveal AI inference’s complex dynamics.

Groq’s strength—low latency and high efficiency—comes at the cost of memory capacity.

With just 230MB of on-chip SRAM per LPU (versus 141GB of HBM on Nvidia’s H200), serving large models requires far more LPUs than GPUs.
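A rough count shows how wide the gap is. The sketch below uses the per-chip memory figures quoted above and a hypothetical 70-billion-parameter model stored at 1 byte per parameter. Both the model size and the precision are assumptions chosen for illustration, and the estimate covers weights only, ignoring the KV cache, activations, and any replication.

```python
# Per-chip memory figures quoted in the article (decimal units).
LPU_SRAM_BYTES = 230 * 10**6    # 230MB of on-chip SRAM per Groq LPU
H200_MEM_BYTES = 141 * 10**9    # 141GB of memory per Nvidia H200


def chips_needed(params_billions: int, bytes_per_param: int, mem_per_chip: int) -> int:
    """Minimum chips whose combined memory holds the model weights."""
    weight_bytes = params_billions * 10**9 * bytes_per_param
    # Integer ceiling division: round up to a whole number of chips.
    return (weight_bytes + mem_per_chip - 1) // mem_per_chip


# Hypothetical model: 70B parameters at 1 byte each (an assumption).
lpus = chips_needed(70, 1, LPU_SRAM_BYTES)
h200s = chips_needed(70, 1, H200_MEM_BYTES)
capacity_ratio = H200_MEM_BYTES // LPU_SRAM_BYTES

print(f"LPUs needed:  {lpus}")    # 305
print(f"H200s needed: {h200s}")   # 1
print(f"One H200 holds about {capacity_ratio}x the memory of one LPU")  # 613x
```

Under these assumptions, a model that fits on a single H200 would have to be spread across roughly 300 LPUs.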

The LPU’s 144-lane VLIW (very long instruction word) architecture also suffers from poor generality. Lacking hardware scheduling, it relies entirely on the compiler to parallelize tasks. New model structures require the computation graph to be reconfigured, which offers far less flexibility than GPUs.

This explains Groq’s rebranding from TSP (Tensor Streaming Processor) to LPU: the new name downplays the software limitations.

These flaws hindered commercialization. In July 2025, Groq slashed its revenue forecast from $2 billion to $500 million, citing delays in orders and in data center construction.

Yet Groq’s tech remains strategically invaluable. In latency-critical fields like autonomous driving and high-frequency trading, LPUs are unmatched.

Integrated into Nvidia’s AI factory, they could fill gaps in specialized inference, creating a full-stack training+inference advantage.

Epilogue

The next decade will see AI hardware dominated not by a single architecture but by a battlefield of diverse compute models, software ecosystems, and open standards.

Nvidia’s premium bet on inference technology addresses current gaps while staking an early claim on AI’s future.

As the global AI ecosystem expands, new competitors and innovation paths will continue to emerge.

Sources:

Silicon Insights Pro: [Market Share Below 1%, Yet a 3x Premium! What Is Nvidia Afraid Of?]

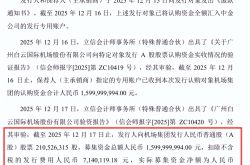

Cyzone: [Nvidia’s $20 Billion “Swallowing” of Groq; Chinese Rivals Borrow Shell Listings to Reach $22.9B Market Cap]

51CTO Tech Stack: [Nvidia’s Largest-Ever Acquisition! Huang Invests $20 Billion in Groq, Targeting AI Inference]

Tencent Tech: [Huang’s $20B Groq Rescue Pleases Middle Eastern Royals and Trump]