Trust Wanes! Salesforce Cuts Back on LLM Use Due to 'Hallucination' Problems | T Insights

12/31 2025

Large Language Models Reveal Numerous Shortcomings

Over the past year, Salesforce CEO Marc Benioff has been a fervent advocate for the company's flagship AI product, Agentforce. He has touted its ability to empower businesses to automate tasks through large language models (LLMs) and achieve cost savings.

Recently, however, Salesforce executives have been delivering a markedly different message to clients: Agentforce sometimes works better when it relies less on LLMs (i.e., generative AI technology).

Sanjna Parulekar, Senior Vice President of Product Marketing, explained that Salesforce has built basic forms of 'deterministic' automation into Agentforce to make the software more reliable. With this approach, decisions follow preset instructions rather than depending on the reasoning and interpretation typical of AI models.

From Advocacy to Caution: LLMs Expose Multiple Challenges

"A year ago, we all placed greater trust in LLMs," she remarked.

The company's website now states that Agentforce helps eliminate the inherent unpredictability of LLMs, ensuring that critical business processes follow the same steps every time.

While this adjustment can keep AI products such as chatbots from behaving erratically, it also means they sometimes miss the context and underlying needs behind customer inquiries, or fail to give comprehensive answers to complex questions the way ChatGPT can.

Because Salesforce is one of the most valuable software companies, its partial retreat from LLMs could affect the thousands of enterprises that rely on the technology.

LLMs from AI providers such as OpenAI and Anthropic enable multi-scenario automation across software engineering, data analysis, finance, marketing, sales, and customer service.

Although many large enterprises have found practical value in LLMs, transforming them into reliable AI agents capable of handling multi-step tasks remains a formidable challenge due to technical, financial, and organizational barriers.

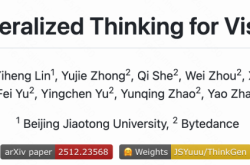

How Large Language Models (LLMs) Operate and Their Current Limitations (Image Source: YouTube@AndrejKarpathy)

Some businesses struggle to prevent LLMs from exhibiting inappropriate behavior or making incorrect assumptions in their responses.

This poses a significant problem for tasks requiring precision, such as inventory tracking or processing customer refund requests, as it may lead to poor business decisions and dissatisfaction among employees or customers.

This is a significant shift in Salesforce's AI marketing, given that Benioff once claimed deploying the product was a breeze.

For instance, some Agentforce customers encountered technical glitches known as 'hallucinations' this year, although the company said the product is improving rapidly.

Salesforce, one of the few major companies to disclose AI-specific revenue, says Agentforce is now on track to exceed $500 million in annual revenue.

Deterministic Trigger Mechanisms

Many clients need extensive guidance from AI providers to get the technology working properly, and some have raised concerns about operating costs.

Agentforce charges $2 per conversation handled by its agents, with additional pre-purchased credit and pay-as-you-go plans available.

Muralidhar Krishnaprasad, CTO of Agentforce, said that adopting more basic forms of automation (for example, writing deterministic instructions for the computer, commonly known as 'if A then B' logic) has reduced Agentforce's operating costs and lowered clients' usage expenses.

Agentforce User Interface (Image Source: Salesforce Official Website)

"If you give an LLM more than around eight instructions, it may start missing them—not ideal," he said.

"Some processes require absolute certainty; we don’t need to waste tokens for that... This not only saves LLM costs but, more importantly, ensures users receive accurate answers."
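To make the 'if A then B' idea concrete, here is a minimal Python sketch of the general pattern Krishnaprasad describes: requests that match a fixed rule are handled by deterministic code, and only the remainder is sent to an LLM. This is an illustration under stated assumptions, not Salesforce's implementation; `Rule`, `handle`, `call_llm`, the `refund_status` intent and the `REFUNDS` table are all hypothetical, and the model call is a stub.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Hypothetical back-office data that a deterministic rule can read directly.
REFUNDS = {"A100": "approved"}


@dataclass
class Rule:
    """One deterministic 'if A then B' rule: a predicate plus a fixed action."""
    name: str
    matches: Callable[[dict], bool]
    action: Callable[[dict], str]


def call_llm(request: dict) -> str:
    # Hypothetical stub standing in for a real model call (costs tokens).
    return f"[LLM answer for: {request['text']}]"


def handle(request: dict, rules: list[Rule],
           llm: Callable[[dict], str] = call_llm) -> tuple[str, str]:
    """Try deterministic rules in order; fall back to the LLM only if none match.

    Returns (route, response) so callers can log which path was taken.
    """
    for rule in rules:
        if rule.matches(request):
            return rule.name, rule.action(request)  # same answer every time, no tokens
    return "llm", llm(request)


RULES = [
    Rule(
        name="refund_status",
        matches=lambda r: r.get("intent") == "refund_status" and "order_id" in r,
        action=lambda r: f"Refund for order {r['order_id']} is "
                         f"{REFUNDS.get(r['order_id'], 'not found')}.",
    ),
]
```

For example, `handle({"intent": "refund_status", "order_id": "A100", "text": "Where is my refund?"}, RULES)` returns `("refund_status", "Refund for order A100 is approved.")` without touching the model, while an open-ended question with no matching rule is routed to `call_llm`. Keeping the rule list small and explicit is what makes the precise steps auditable.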

Ryan Gee, Senior Vice President of Engineering at home security company Vivint, said that after failed attempts to build its own AI chatbot, the company began using Agentforce last year to provide customer support for its 2.5 million customers.

Vivint ran into problems with Agentforce early on because the product was not 100% reliable.

For example, Vivint instructed Agentforce to send a satisfaction survey after each customer interaction, but the AI sometimes failed to do so for no clear reason.

He said Vivint collaborated with Salesforce to implement 'deterministic trigger mechanisms' in Agentforce to ensure surveys were sent every time.
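A 'deterministic trigger' of this kind can be pictured as a hook tied to a fixed event, such as the end of a conversation, instead of a step the model may or may not choose to perform. The Python sketch below is a hypothetical illustration, not Vivint's or Salesforce's code: `Conversation`, `send_satisfaction_survey` and `SENT_SURVEYS` are invented names, and the survey "send" just records an email address.

```python
from typing import Callable, List


class Conversation:
    """A minimal conversation wrapper whose end-of-conversation hooks always run."""

    def __init__(self, customer_email: str, on_end: List[Callable[["Conversation"], None]]):
        self.customer_email = customer_email
        self.transcript: List[str] = []
        self._on_end = on_end
        self.closed = False

    def add_turn(self, text: str) -> None:
        self.transcript.append(text)

    def close(self) -> None:
        # Deterministic trigger: the hooks fire on every close, whatever the
        # model said or decided during the conversation.
        if self.closed:
            return  # guard so a survey is never sent twice for one conversation
        self.closed = True
        for hook in self._on_end:
            hook(self)

    def __enter__(self) -> "Conversation":
        return self

    def __exit__(self, exc_type, exc, tb) -> bool:
        # Runs even if an exception (e.g. a model timeout) escapes the block.
        self.close()
        return False  # do not swallow the exception


SENT_SURVEYS: List[str] = []


def send_satisfaction_survey(conv: Conversation) -> None:
    # Hypothetical stand-in for a real email or SMS API call.
    SENT_SURVEYS.append(conv.customer_email)


# Usage: the survey goes out when the conversation ends, without asking the model.
with Conversation("pat@example.com", on_end=[send_satisfaction_survey]) as conv:
    conv.add_turn("Customer: my camera is offline")
    conv.add_turn("Agent: try restarting the hub")

print(SENT_SURVEYS)  # ['pat@example.com']
```

Because the survey is sent from `__exit__`, it goes out even when the conversation ends with an error, which is the "every time" guarantee the article describes.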

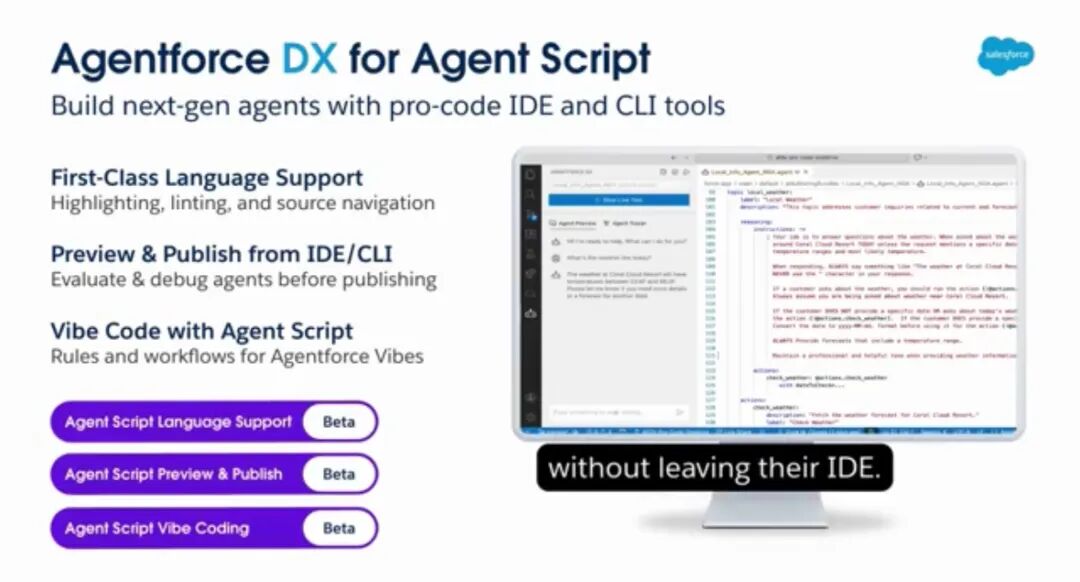

Phil Mei, another Salesforce executive, stated in an October blog post that the company has developed the Agentforce Script system to minimize LLM 'unpredictability' by identifying tasks or task segments that can be handled by 'agents' without using LLMs.

Key Features of Agentforce Script (Image Source: YouTube@SalesforceDevs)

Mei noted that Salesforce's most sophisticated clients are grappling with AI 'drift', in which agents stray from their preset goals when users ask unrelated questions.

For instance, an AI chatbot designed to guide customers through form-filling may become 'distracted' when asked unrelated questions.

Currently, Agentforce Script is still in the testing phase.
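One common way to limit the drift Mei describes is to keep the conversation's goal in ordinary code rather than in the model's context. The Python sketch below illustrates that general idea only, not how Agentforce Script works. It assumes a hypothetical three-field form (`FIELDS`), a caller-supplied `is_field_answer` flag (in practice this might come from a classifier), and an `answer_off_topic` callable standing in for an LLM.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical form fields, filled strictly in this order.
FIELDS = ["name", "address", "install_date"]


@dataclass
class FormAgent:
    """Keeps a form-filling conversation on track.

    The next required field is chosen by deterministic code, not by the model,
    so an off-topic question can be answered but never changes the goal.
    """
    answer_off_topic: Callable[[str], str]  # e.g. an LLM call (assumed, stubbed in tests)
    values: dict = field(default_factory=dict)

    def next_field(self) -> Optional[str]:
        for name in FIELDS:
            if name not in self.values:
                return name
        return None

    def step(self, user_text: str, is_field_answer: bool) -> str:
        pending = self.next_field()
        if pending is None:
            return "The form is complete."
        if is_field_answer:
            self.values[pending] = user_text
            nxt = self.next_field()
            return f"Please provide your {nxt}." if nxt else "The form is complete."
        # Off-topic: answer briefly, then steer back to the same pending field.
        return f"{self.answer_off_topic(user_text)} Now, please provide your {pending}."
```

Because `next_field` is computed from the stored values, an unrelated question such as "Do you sell cameras?" gets an answer followed by a prompt for the same pending field, so the agent cannot wander off the form.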

LLM Reduction: Optimization or Compromise?

When marketing Agentforce, Salesforce emphasized how the product transformed its own operations.

For example, Benioff said that Agentforce, which partly relies on OpenAI's LLMs, now handles most of Salesforce's customer service inquiries and has helped the company cut about 4,000 customer service roles.

However, in recent months, Salesforce appears to have reduced LLM usage in its Agentforce-driven customer service agents.

For instance, last week the company responded to a help request about Agentforce technical issues with a list of blog post links, instead of asking for more information or discussing the possible problem.

The first link in the list concerned a June outage affecting Agentforce and other Salesforce products, which was of little relevance to a customer facing a current issue.

Such responses resemble how businesses have used basic chatbots to handle customer or website visitor inquiries for years.

A Salesforce spokesperson denied claims that the company reduced LLM usage for customer service agents.

He said that this year the company had "optimized topic structures, strengthened security mechanisms, improved information retrieval quality, and refined response logic" for its customer service agents, "to make responses more specific, contextually relevant, and aligned with actual customer needs.

"We now also have better observability and feedback loops to quickly identify when agent responses are too broad, off-topic, or unclear, and to iterate on improvements rapidly.

"Thus, the shift from providing generic answers to structured, targeted responses is entirely intentional and a necessary part of optimizing agents."

The spokesperson added that customer service agents 'resolve more customer issues than ever before,' with a projected 90% increase in resolved conversations for the fiscal year ending in late January.

"We've become more cautious about how and where we use LLMs in customer service scenarios," he said.

Other AI providers have also found LLMs difficult to control, with the models often straying from their intended purpose. For example, earlier this month a Gap Inc. chatbot powered by enterprise AI startup Sierra answered sensitive questions about sex toys.

Sierra said it had fixed configuration vulnerabilities in Gap's chatbot and noted that 'malicious actors' had deliberately abused it.

Editor: Yang Lujie

Reference Source: The Information
