CES 2026 | AMD Lisa Su's Speech: Computing Infrastructure Blueprint from Cloud to Edge in the AI Era

01/07 2026

Produced by Zhineng Zhixin

During the official opening keynote at CES 2026, AMD presented its vision for the computing landscape over the next five to ten years.

AI has evolved from a conceptual trend into a competition centered on infrastructure. In data centers, endpoint PCs, robotics, healthcare, and scientific computing alike, nearly every cutting-edge application is pushing up the demand for computing power at the same time.

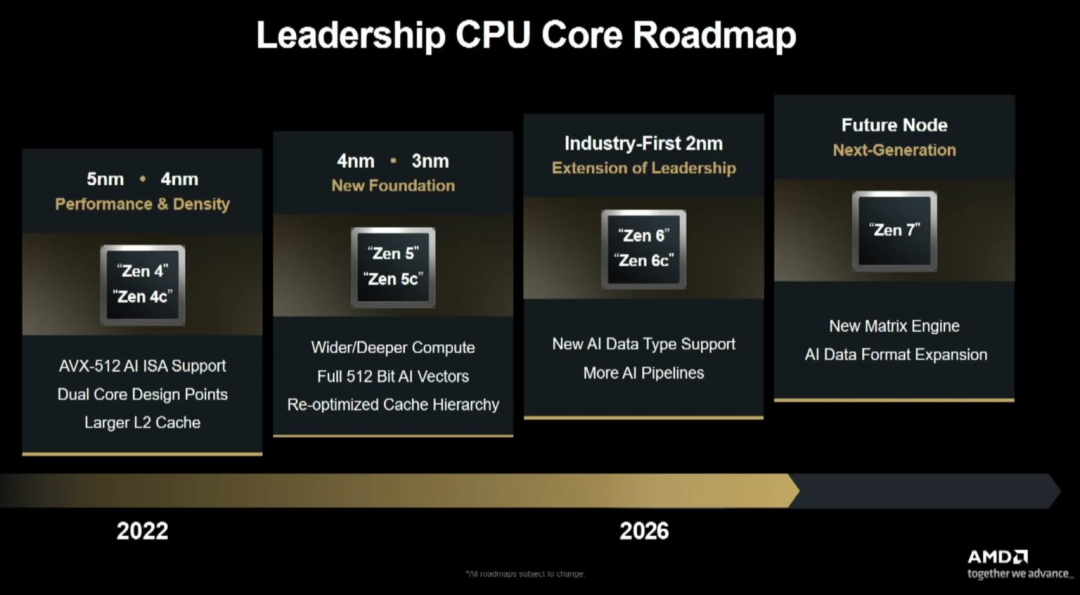

At CES 2026, AMD unveiled a roadmap oriented toward engineering and complete systems. If the number of AI users really reaches 5 billion within five years, the bottleneck will no longer be the peak performance of any single GPU generation. It will be whether the entire computing ecosystem can form a scalable, affordable, and sustainable closed loop across cloud, edge, and endpoint devices.

At the outset of her speech, Lisa Su stated that '10 YottaFLOPS' is not a performance slogan but a prerequisite: widespread adoption of AI requires a step change in global computing capacity, and no single form of hardware can deliver that on its own.
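To give a sense of the scale involved, the two headline figures (10 YottaFLOPS and 5 billion AI users) can be combined in a back-of-the-envelope calculation. The yotta prefix means 10^24. The per-user split below rests on an illustrative assumption, namely that the capacity is divided evenly across all users; it is not a figure from the keynote.

```latex
\[
10\ \text{YottaFLOPS} = 10 \times 10^{24}\ \text{FLOP/s} = 10^{25}\ \text{FLOP/s}
\]
\[
\frac{10^{25}\ \text{FLOP/s}}{5 \times 10^{9}\ \text{users}}
  = 2 \times 10^{15}\ \text{FLOP/s per user}
  \approx 2\ \text{PFLOP/s per user}
\]
```

Under this even-split assumption, each user would be backed by roughly two petaFLOPS of sustained capacity, which illustrates why no single hardware form factor is expected to carry the load.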

Data Center

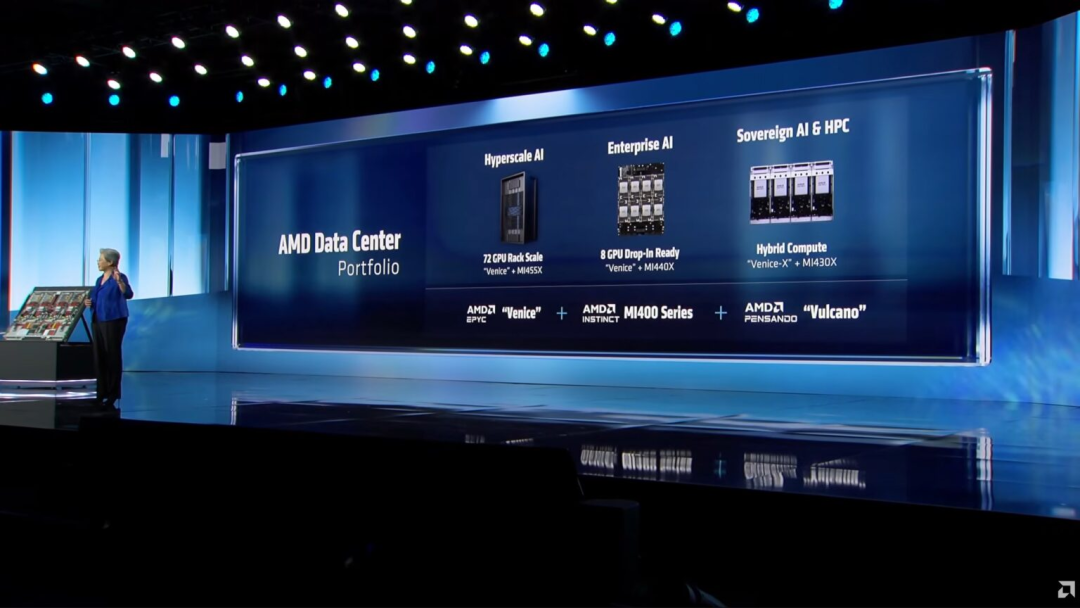

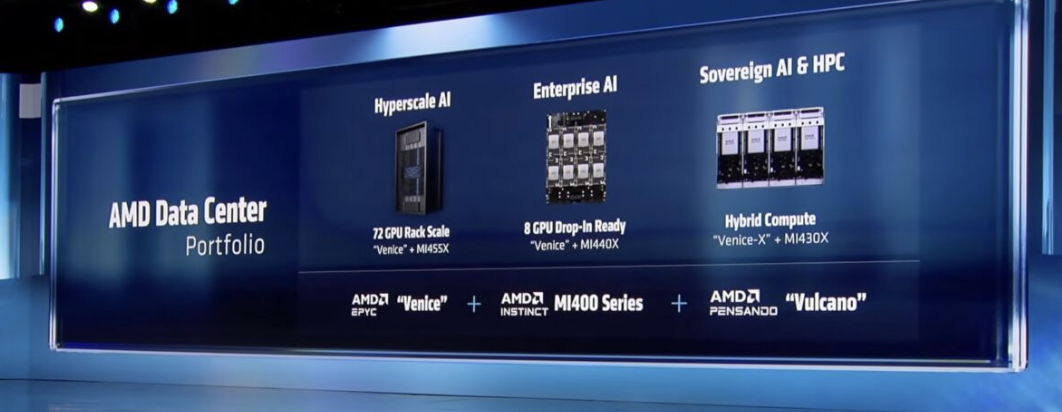

Against this backdrop, AMD underscored its data center strategy.

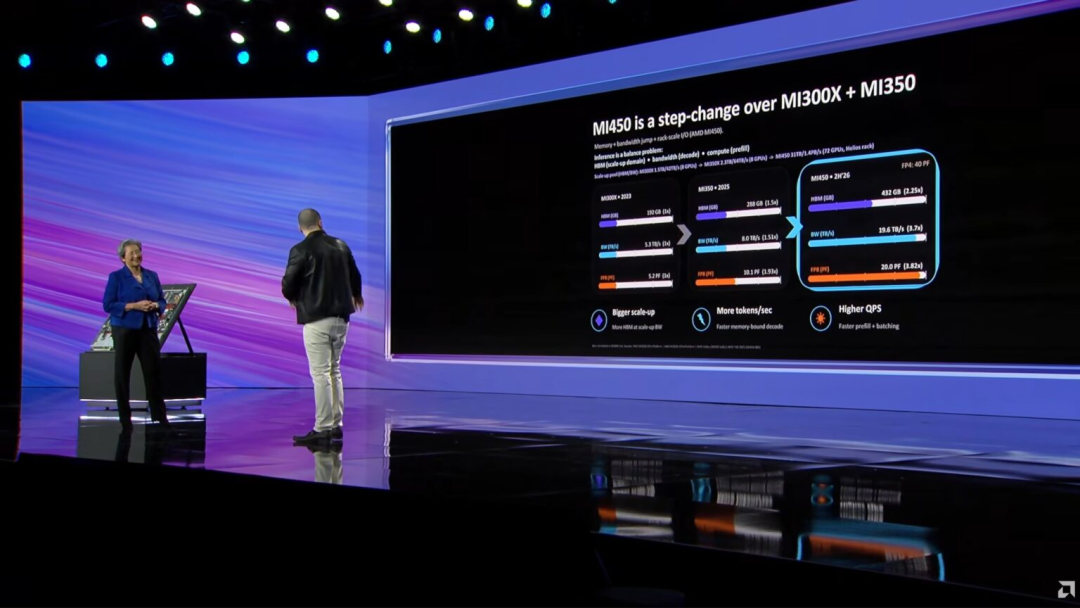

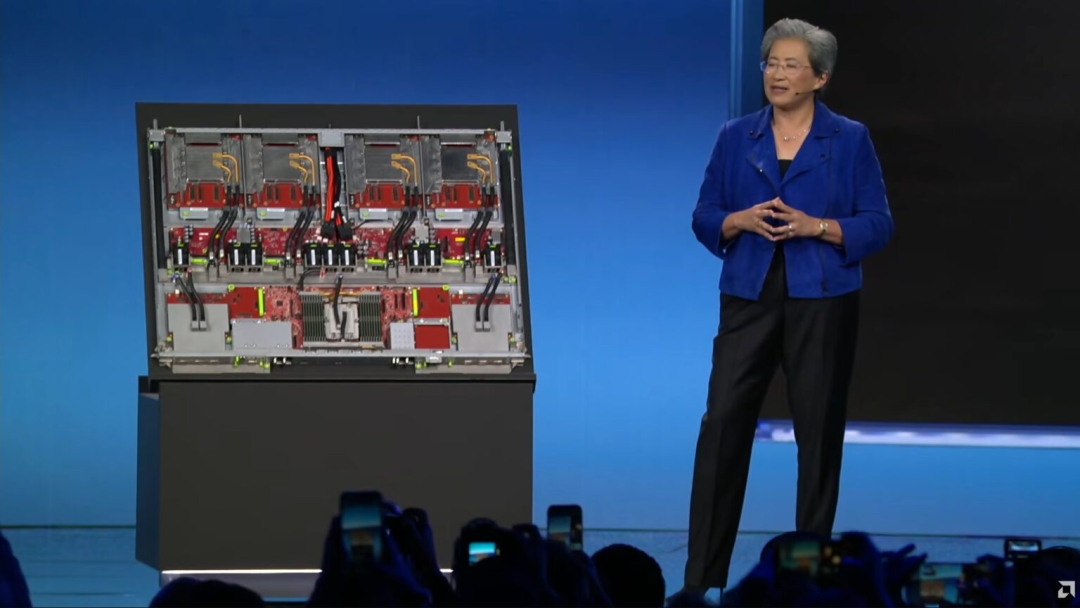

The introduction of the Helios rack-scale platform and the Instinct MI400 series marks AMD's first engineering realization of its 'Yotta Scale AI' concept.

With 72 GPUs per rack, over 31TB of HBM4 memory, and 43TB/s of scale-out bandwidth, AMD aims to standardize the rack as the unit of deployment, delivering supercomputer-class capability that cloud providers and large AI customers can deploy at scale.
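The rack-level figures can be broken down per GPU with simple division. This is a back-of-the-envelope sketch under stated assumptions: it treats "over 31TB" as exactly 31 TB (so the memory result is a lower bound), uses decimal units (1 TB = 1000 GB), and assumes the 43TB/s aggregate bandwidth is split evenly across the 72 GPUs. That even split is an illustrative assumption, not a published per-GPU specification, and the constant and function names are hypothetical.

```python
# Back-of-the-envelope per-GPU figures for one Helios rack, derived only from
# the rack-level numbers quoted in the keynote coverage.
# Assumptions (illustrative): decimal units, even split across GPUs.

GPUS_PER_RACK = 72
RACK_HBM4_TB = 31.0          # "over 31TB", so the per-GPU result is a lower bound
RACK_SCALE_OUT_TBPS = 43.0   # aggregate scale-out bandwidth, TB/s


def per_gpu(total: float, gpus: int = GPUS_PER_RACK) -> float:
    """Divide a rack-level total evenly across the rack's GPUs."""
    return total / gpus


# Convert TB -> GB and TB/s -> GB/s using decimal units.
hbm_per_gpu_gb = per_gpu(RACK_HBM4_TB) * 1000
scale_out_per_gpu_gbps = per_gpu(RACK_SCALE_OUT_TBPS) * 1000

if __name__ == "__main__":
    print(f"HBM4 per GPU      >= {hbm_per_gpu_gb:.0f} GB")
    print(f"Scale-out per GPU ~  {scale_out_per_gpu_gbps:.0f} GB/s")
```

Running it prints roughly 431 GB of HBM4 and about 597 GB/s of scale-out bandwidth per GPU. Keeping the per-GPU split in one helper makes it easy to rerun the estimate if the rack configuration or headline totals change.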

Helios serves as AMD's key vehicle for entering the mainstream AI training market.

Whether for agent workflows, long-running inference tasks, or high-value scenarios such as healthcare and education, demand for computing is shifting from 'faster' toward 'longer-running, more stable, and more scalable'.

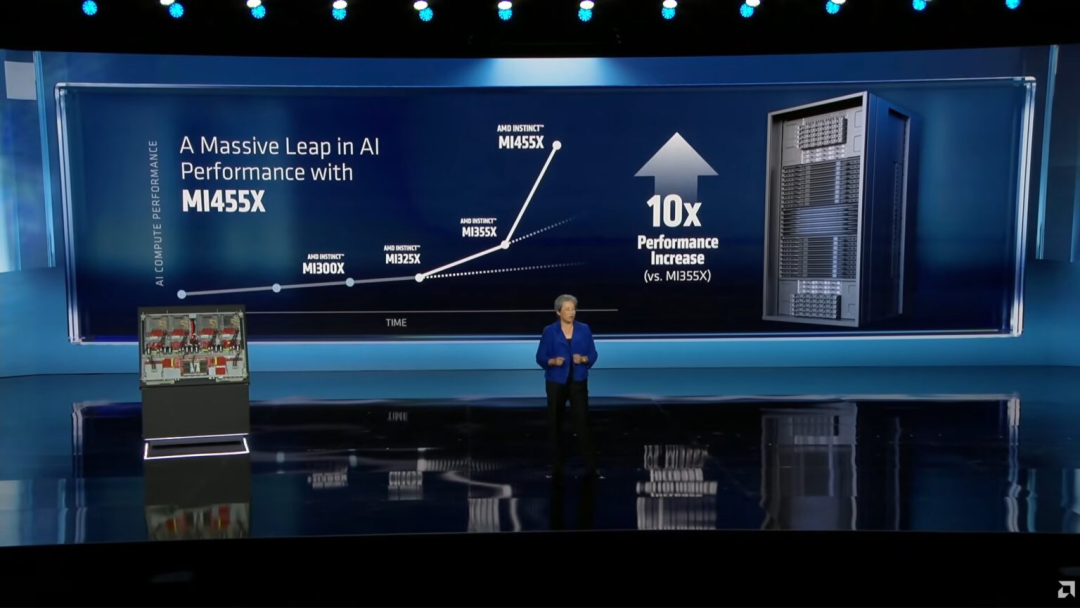

From the MI300 generation to the MI400 series, including the MI450, AMD emphasizes not only single-point performance leadership but also total cost of ownership (TCO), deployment density, and sustainable scalability. This speaks directly to the central pain point in AI infrastructure today: whether computing power can remain affordable and stable over the long term.

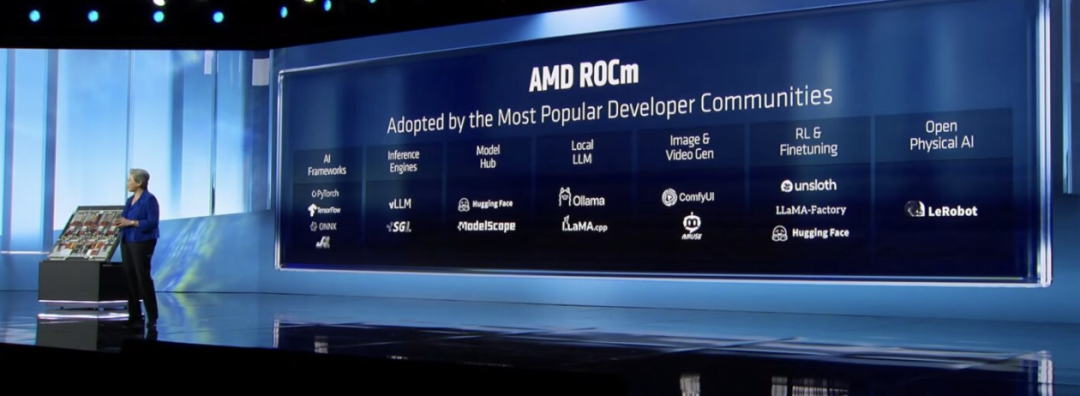

ROCm was also repeatedly emphasized in the speech as AMD's core differentiator in terms of 'openness' compared to its competitors.

Whether it is Luma AI running 60% of its inference workload on AMD GPUs or Liquid AI running models locally on Ryzen AI platforms, AMD aims to become the 'universal foundation' for AI workloads through broad hardware coverage and software adaptability. This path more closely resembles how long-lived industrial infrastructure is built.

AI PC

Turning to AI PCs, AMD adopts a similar strategy:

The Ryzen AI 400 series is not a major architectural leap but a mid-cycle refresh within the Zen 5 generation, one that systematically strengthens how the NPU, CPU, and GPU work together.

Whether it is Liquid AI's local models or the developer community drawn to Ryzen AI Max, the focus is on 'which workloads should move from the cloud to the edge'. Future AI cost structures will push some inference and interaction tasks onto endpoint devices, and AMD aims to secure this transition point early.

The launch of Ryzen AI Halo further clarifies this positioning.

As a reference platform for local AI development, it serves as AMD's 'low-barrier computing toolkit' for developers, directly competing with NVIDIA DGX Spark.

This is not a consumer product but an ecosystem-level offering designed to enable more AI applications to originate natively on AMD platforms rather than being migrated later.

The latter half of the speech devoted significant attention to healthcare, robotics, space, and scientific computing, highlighting AMD's focus on 'physical AI' and 'mission-critical computing'.

From Generative Bionics' bionic robots to Blue Origin's radiation-resistant embedded systems, many of the highest-value AI applications operate in the physical world, where computing requirements are far more stringent than in internet services.

In closing, Lisa Su repeatedly stressed that 'AI is different': it is not merely a tool for efficiency gains but a foundational variable reshaping scientific research, healthcare, manufacturing, and exploration.

The key question, then, is not which company leads in a single GPU generation, but whether it has laid a sufficiently broad foundation for the moment computing power becomes social infrastructure.

Summary

2026 is shaping up to be the year AMD solidifies its strategic outline. By assembling a complete picture that spans cloud to edge, software to systems, and commercial applications to national-level projects, it aims to demonstrate its long-term position in the AI era.