Huang Renxun Kicks Off the New Year: Pioneering Beyond Rubin Chips, Revolutionizing Digital Employees and Physical AI

01/07 2026

Author: Daoge

Source: Node AI View

On January 5 (US local time), NVIDIA CEO Huang Renxun (Jensen Huang) delivered his first keynote of the new year at the theater of the Fontainebleau Hotel in Las Vegas, wearing his signature leather jacket as usual.

Traditionally, NVIDIA presents the detailed specifications and performance of its latest chips at its annual developer conference, held in Silicon Valley each spring. This time, however, Huang Renxun said that AI's escalating computational demands, and the enormous market need for advanced processors to train and run AI models, have compelled the entire semiconductor industry to quicken its pace.

Looking back on the changes of 2025, Huang Renxun singled out the unexpected catalytic effect of DeepSeek R1's emergence. His slides also featured the Chinese open-source models Kimi K2 and Qwen.

The address ran for an hour and a half and was densely packed with information. Node AI has distilled the key takeaways into three themes: new chips, Agentic AI, and Physical AI.

01 Full-Scale Production of Vera Rubin, a New Chip Architecture

Although the new chip architecture was unveiled late in the address, computing power is the lifeblood of AI, so we cover it first:

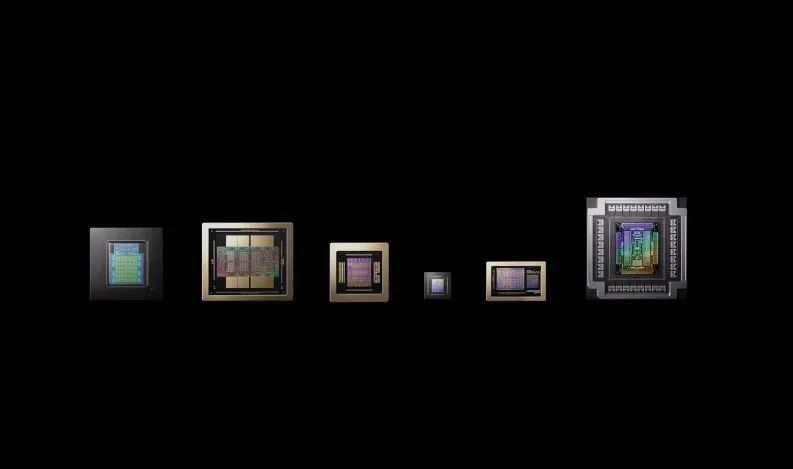

At the conference, NVIDIA introduced the six-component Rubin platform, which includes GPU and CPU parts in two specifications, Rubin and Rubin Ultra, along with the NVLink 6 switch chip and the ConnectX-9 SuperNIC.

The new system is designed to handle the massive computational load of model training, including training the models used to build simulations for Physical AI. The name pays tribute to Vera Rubin, the pioneering 20th-century American astronomer whose observations of galaxy rotation provided key evidence for dark matter.

Huang Renxun remarked that each chip is revolutionary and merits a separate press conference.

The published figures are striking:

The Rubin GPU delivers 50 PFLOPS of NVFP4 (a 4-bit floating-point format) inference compute, five times that of Blackwell; 35 PFLOPS of NVFP4 training compute, 3.5 times that of Blackwell; 22 TB/s of HBM4 memory bandwidth, 2.8 times that of Blackwell; and 336 billion transistors, 1.6 times that of Blackwell. Compared with the Blackwell platform, the Rubin platform cuts the cost per inference token to about one-tenth through software-hardware co-design and needs only a quarter as many GPUs to train MoE (Mixture of Experts) models.
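As a quick sanity check, the Blackwell baselines implied by these figures can be recovered by dividing each Rubin number by its stated multiple. The sketch below uses only the numbers quoted above; the dictionary keys and the function name `implied_baseline` are illustrative assumptions, and the results are back-of-the-envelope values, not official Blackwell specifications.

```python
# Rubin GPU figures and their stated multiples over Blackwell, as quoted above.
# Keys are illustrative labels, not NVIDIA terminology.
RUBIN_SPECS = {
    "nvfp4_inference_pflops": (50.0, 5.0),
    "nvfp4_training_pflops": (35.0, 3.5),
    "hbm4_bandwidth_tb_s": (22.0, 2.8),
    "transistors_billion": (336.0, 1.6),
}


def implied_baseline(rubin_value: float, multiple: float) -> float:
    """Return the Blackwell value implied by a Rubin figure and its multiple."""
    return rubin_value / multiple


# Derive the implied Blackwell figure for every quoted metric.
implied = {
    name: implied_baseline(value, multiple)
    for name, (value, multiple) in RUBIN_SPECS.items()
}

for name, value in implied.items():
    print(f"{name}: {value:.1f}")
```

Under these inputs, both compute figures imply a Blackwell baseline of about 10 PFLOPS, the bandwidth figure implies roughly 7.9 TB/s, and the transistor count implies about 210 billion.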

'Had we not pursued co-design, even with the optimal scenario of increasing transistor count by 1.6 times annually and enhancing each transistor's performance by, say, 25%, achieving substantial performance gains would still be challenging,' Huang Renxun stated. 'This is why we engage in other endeavors, like introducing NVFP4, to attain higher throughput where precision can be compromised.' This co-design facilitates significant performance enhancements in the new chip platform.
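The arithmetic behind this remark can be made explicit. The sketch below assumes the two silicon-level gains simply multiply, and it treats the 25% per-transistor figure as the hypothetical it was in the quote; the variable names are illustrative. It compares that silicon-only gain with the fivefold NVFP4 inference improvement cited in this article.

```python
# Inputs taken from the quote and from the figures cited in this article.
TRANSISTOR_SCALING = 1.6     # growth in transistor count
PER_TRANSISTOR_GAIN = 1.25   # hypothetical 25% per-transistor improvement
STATED_INFERENCE_GAIN = 5.0  # Rubin vs. Blackwell NVFP4 inference multiple

# Assumption: silicon-level gains compound multiplicatively.
silicon_only_gain = TRANSISTOR_SCALING * PER_TRANSISTOR_GAIN

# Whatever remains must come from elsewhere, e.g. co-design such as NVFP4.
codesign_factor = STATED_INFERENCE_GAIN / silicon_only_gain

print(f"silicon-only gain: {silicon_only_gain:.2f}x")
print(f"remaining factor attributed to co-design: {codesign_factor:.2f}x")
```

Under these assumptions, transistor scaling alone accounts for about a 2x gain, leaving roughly 2.5x of the stated 5x to come from co-design measures such as the lower-precision NVFP4 format.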

From a commercialization standpoint, NVIDIA's next-generation AI superchip platform, Vera Rubin, has entered full-scale production and will commence deliveries to partners in the second half of 2026. Major cloud service providers such as AWS, Google Cloud, Microsoft, and Oracle have confirmed deployments.

02 Targeting Agentic AI

With computing power as abundant fuel, the software layer has vast room to grow.

When considering the hottest AI concepts in recent years, Agentic AI undoubtedly ranks among the top.

Now, NVIDIA is striving to reduce the development costs of Agents for enterprises.

NVIDIA says the Nemotron-CC corpus it has released is a multilingual pre-training corpus covering more than 140 languages, totaling 1.4 trillion tokens, and positioned as an 'open' foundation for building and fine-tuning models.

The company also highlighted a set of instruction datasets named 'Granary,' intended to make models ready 'out of the box' for enterprise-level tasks.

In the on-site demonstration, developers using NVIDIA's hardware and frameworks could build a fully functional personal assistant within minutes. This was unthinkable a few years ago; now it is nearly effortless.

The rationale behind NVIDIA's involvement in open source is straightforward: it aims to draw developers into its ecosystem with 'free samples,' thereby cementing its hardware advantage.

Indeed, in recent years, NVIDIA has been dedicated to enriching the open-source ecosystem. As Kari Briski, NVIDIA's Vice President of Generative AI and Software, put it, the conference's releases were an 'expansion.'

'In 2025, NVIDIA was one of the largest contributors on Hugging Face, releasing 650 open models and 250 open datasets,' Briski said.

For enterprises, the lower barrier to building Agents will have profound implications. IT departments may free human employees from tedious processes, with their work shifting to 'recruiting, managing, and optimizing' various AI digital agents.

This is the ultimate allure of AI Agents: they evolve from passive tools that must be fed data into proactive, problem-solving digital employees, creating immense efficiency and value for enterprises.

03 After LLMs, Physical AI Takes Center Stage

If Agents represent intelligence at the software level, then Physical AI, the highlight of this address, is a brand-new application scenario that bridges the digital world and the physical one. In Huang Renxun's vision, autonomous driving, robotics, and industrial manufacturing will be the three primary scenarios.

Huang Renxun emphasized that NVIDIA has been working on Physical AI for eight years. He believes that the 'ChatGPT moment' for Physical AI is imminent.

Simulation lies at the heart of nearly all of NVIDIA's Physical AI efforts. Through its Omniverse platform, NVIDIA has built a 'digital twin' environment that is nearly indistinguishable from the real world, in which AI can be trained safely and efficiently.

Huang Renxun stressed that this multi-tiered technology stack is moving AI from a conversationalist confined to the screen to an actor capable of performing tasks in the real world.

The first proving ground is autonomous driving, which demands extremely high safety standards.

NVIDIA unveiled Alpamayo, an open-source family of reasoning VLA (vision-language-action) models, simulation tools, and datasets intended to accelerate the development of safe, reasoning-based autonomous vehicles. It is the result of work by thousands of members of NVIDIA's AV team.

'I believe we all concur that the turning point from non-autonomous to autonomous vehicles may be happening right now,' Huang Renxun said. 'In the next decade, it is quite certain that a significant portion of the world's vehicles will be autonomous or highly autonomous.'

In terms of deployment, NVIDIA DRIVE AV software will be used in Mercedes-Benz vehicles, and the first of these autonomous vehicles have just entered production. They will reach roads in the United States in the first quarter of this year, Europe in the second quarter, and Asia in the third or fourth quarter. NVIDIA will continue to update the software, and the ecosystem of partners jointly building L4 Robotaxis is still expanding.

Regarding robotics, NVIDIA introduced reasoning vision language models (VLMs) such as Isaac GR00T N1.6 for intelligent robots, along with several new open-source frameworks for robot development.

Beyond specialized hardware devices, Huang Renxun also set his sights on industrial-grade scenarios.

Huang Renxun announced a deepened collaboration with Siemens to integrate NVIDIA's Physical AI models and Omniverse simulation platform into Siemens' industrial software portfolio, covering the entire lifecycle from chip design and factory simulation to production operations.

'We are standing at the dawn of a new industrial revolution,' he said. Physical AI will empower chip design, production line automation, and even entire digital twin systems, enabling 'design in the computer, manufacture in the computer.'

More than a mere product launch, this address can be read as Huang Renxun's personal predictions and declarations for the next decade of AI and the computing industry. By open-sourcing key models and releasing disruptive hardware, NVIDIA is attempting to define the technological standards and infrastructure of the next AI era.

At its core, the strategy continues NVIDIA's traditional approach of open source plus software-hardware integration: occupy every computational node from data centers to intelligent terminals, and seize every opportunity to deepen its moat.