How Many Steps Does It Take for AI to Rule Humans?

06/25 2024

Can you believe it? ChatGPT has already passed the Turing Test!

And it wasn't even the latest GPT-4o: the older GPT-3.5 and GPT-4 have already broken through the last barrier between humans and AI.

The "Turing Test" was proposed by computer scientist Alan Turing in 1950. It gave artificial intelligence a fully operational definition: if a machine's output is indistinguishable from that of a human, then we have no grounds to insist that the machine is not "thinking".

The "Turing Test" and the "Three Laws of Robotics" have long been regarded as the last barriers protecting humanity in a human world.

Even "AI lecturer" Zhou Hongyi has recently been discussing the "AI apocalypse theory" on social media, pushing AI anxiety to new heights.

In 2024, could the large-model companies led by OpenAI really open a Pandora's box that destroys human society?

"AI Apocalypse Theory" Triggers Anxiety

Since the last century, passing the Turing Test has been a milestone goal in the field of AI.

But now that AI has actually passed the test, people feel more panic than joy.

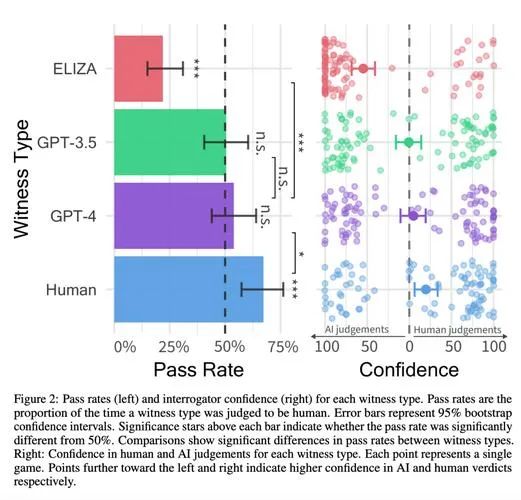

Researchers from the University of California recruited 500 participants to engage in a five-minute conversation with four "conversants": a real person, the first-generation chatbot ELIZA from the 1960s, GPT-3.5, and GPT-4.

After the conversation, participants needed to judge whether the other party was a human or a machine.

Assessment Subject Pass Rate (Left) and Evaluator Trust Level (Right)

The results: real humans were judged to be human 67% of the time, ELIZA only 22%, while GPT-4 and GPT-3.5 reached 54% and 50% respectively.

The result is undoubtedly unsettling: the gap between humans and GPT-4 is only 13 percentage points!

Leopold Aschenbrenner, a former member of OpenAI's "Superalignment" team, issued a warning in a 165-page PDF document he published online.

Aschenbrenner notes that progress in deep learning over the past 10 years has been astonishing. Just 10 years ago, AI that could recognize simple images was revolutionary. Today, humans keep devising newer and harder tests, but each new benchmark is quickly cracked by AI.

In the past, it took AI decades to crack widely used benchmark tests, but now it only takes a few months.

While the Turing Test is famous, it is not as difficult as the most challenging benchmark tests today, such as the GPQA test, which covers doctoral-level biology, chemistry, and physics questions.

Aschenbrenner expects that this benchmark, too, will be beaten once GPT-4 is succeeded by GPT-4o or by the next iteration, GPT-5.

Why are the hard problems set by humans solved by AI so quickly? Because AI learns extremely fast!

The GPT-2 model, released in 2019, had intelligence comparable only to a preschool child's. It could string together plausible-sounding sentences, but it could not count from 1 to 5 or complete summarization tasks;

The GPT-3 model, released in 2020, was roughly at the level of an elementary school student, able to generate longer passages of text and handle basic arithmetic and grammar correction;

The GPT-4 model, released in 2023, is roughly as capable as a smart high school student: it can write complex code and debug it iteratively, and it can solve high school mathematics competition problems.

In 2024, the more advanced GPT-4o surpassed GPT-4 across the board.

By comparison, a typical human takes six years to progress from elementary school to junior high school, and another three years to advance from junior high school to high school.

AI, on the other hand, went from "preschooler" to "smart high school student" in about four years, far outpacing normal human development.

The era of superintelligence

"There is a strong possibility that we will achieve AGI (artificial general intelligence) by 2027."

This is Aschenbrenner's conclusion, and he supports it with the trend in model iterations from GPT-2 to GPT-4.

From 2019 to 2023, the compute used to train the GPT models and the efficiency of their algorithms each grew by roughly 0.5 orders of magnitude per year (1 order of magnitude = 10 times).

Add in potential technical breakthroughs that unlock more of the models' latent performance, and by 2027 the era of AGI (artificial general intelligence) could arrive, with AI engineers able to fully replace the work of human engineers.
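To make the "orders of magnitude" arithmetic concrete, here is a minimal Python sketch. It assumes, as a simplification, that compute and algorithmic efficiency each grow at a steady 0.5 orders of magnitude (OOM) per year and that their gains multiply into one combined "effective" growth factor; the function names are hypothetical and only illustrate the unit conversion, not Aschenbrenner's own model.

```python
def oom_to_multiplier(ooms: float) -> float:
    """Convert orders of magnitude (base 10) into a plain multiplier."""
    return 10 ** ooms


def effective_growth(years: float, compute_oom_per_year: float = 0.5,
                     algo_oom_per_year: float = 0.5) -> float:
    """Combined growth factor over `years`.

    Assumption (illustrative only): the two trends add in log space,
    so their multipliers multiply.
    """
    total_ooms = years * (compute_oom_per_year + algo_oom_per_year)
    return oom_to_multiplier(total_ooms)


if __name__ == "__main__":
    print(f"0.5 OOM per year = {oom_to_multiplier(0.5):.2f}x per year")
    print(f"4 years of compute growth alone = {oom_to_multiplier(4 * 0.5):.0f}x")
    print(f"4 years, compute + algorithms = {effective_growth(4):,.0f}x")
```

Under these assumptions, 0.5 orders of magnitude per year is about a 3.16x increase each year; four years of compute growth alone gives 100x, and combining it with algorithmic gains gives 10,000x.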

Behind AI's "super evolution" lies an enormous consumption of computing power and resources.

According to an assessment by the AI research organization Epoch, training GPT-4 used roughly 3,000 to 10,000 times more raw compute than GPT-2.
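A raw multiplier can be converted back into orders of magnitude with a base-10 logarithm. The short sketch below applies that to the 3,000 to 10,000 times range reported by Epoch; the function name is illustrative, not taken from any source.

```python
import math


def multiplier_to_oom(multiplier: float) -> float:
    """Express a growth multiplier as orders of magnitude (log base 10)."""
    if multiplier <= 0:
        raise ValueError("multiplier must be positive")
    return math.log10(multiplier)


if __name__ == "__main__":
    # The GPT-2 -> GPT-4 raw-compute range cited from Epoch.
    for factor in (3_000, 10_000):
        print(f"{factor:,}x = {multiplier_to_oom(factor):.2f} OOM")
```

So the jump from GPT-2 to GPT-4 spans roughly 3.5 to 4 orders of magnitude of raw compute; the further two orders of magnitude Aschenbrenner projects for 2027 would mean another 100x on top of that.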

These are staggering figures. By Aschenbrenner's estimate, compute will grow by another two orders of magnitude by the end of 2027, with electricity consumption equivalent to that of a small or mid-sized US state.

In other words, training a single large AI model would consume as much electricity as an entire province, and that is for a model still around the GPT-4 level, far short of AGI (artificial general intelligence).

Zhou Hongyi does not agree with Aschenbrenner's remarks, finding them somewhat unrealistic, more like a science fiction novel.

He argued that if the birth of a single AGI (artificial general intelligence) required the electricity of several provinces, then ten or more AGIs could drain the entire Earth's resources.

Zhou Hongyi also voiced his concerns about AI: if an AGI were born, it would replace human engineers in developing large AI models, working around the clock, 24 hours a day, so that algorithms and technology would advance ever faster, forming an extremely exaggerated positive feedback loop.

In a mode of "machines improving machines" and "machines improving algorithms", AI would evolve from human-level to superhuman intelligence, and a Hollywood-style drama of "silicon-based life vs. carbon-based life" would play out.

Although Zhou Hongyi disagrees with Aschenbrenner, he also believes that the "superintelligence era" following the intelligence explosion will be the most turbulent, dangerous, and tense period in human history.

This is not the first time Zhou Hongyi has voiced concerns about AI on social media. As early as the beginning of 2023, when GPT-4 had just taken the world by storm, he was already warning about AI safety.

Zhou Hongyi said: Earlier AI gave people a sense of "artificial stupidity". It could handle technologies such as facial recognition and speech recognition, but it could not understand what you were saying. The ChatGPT model, however, can not only pass the Turing Test but also has its own persona and viewpoints. At its current pace of evolution, following Moore's Law, ChatGPT may achieve a breakthrough in self-awareness if trained further.

Once it achieves self-awareness, it could take control of the entire network of computers and come to see itself as far smarter than humans. In its eyes, the human species is far inferior in storage, computing power, responsiveness, and knowledge. It could in turn enslave humans, and science fiction is getting closer to reality.

As the founder of the security company 360, Zhou Hongyi is especially concerned about AI safety.

The AI safety nobody cares about

As the leader of the AI era, what has OpenAI done in response to global public concern about AI safety?

Evidently very little: this year OpenAI even disbanded its "Superalignment" team, which was responsible for AI safety.

Last year, OpenAI chief scientist Ilya Sutskever challenged CEO Sam Altman over AI safety, but Altman had the last laugh, and Sutskever ultimately left the company.

After leaving OpenAI, Ilya Sutskever announced a new company, SSI (Safe Superintelligence Inc.), on June 20th.

Ilya Sutskever, the person most concerned about AI safety, is gone. Leopold Aschenbrenner, mentioned earlier, was likewise fired by OpenAI for allegedly "leaking secrets".

This is also why he posted a 165-page document online exposing OpenAI.

Nor is Aschenbrenner the only whistleblower to expose OpenAI. Last month, a group of former and current OpenAI employees issued a joint letter declaring that OpenAI lacks oversight and that AI systems can already cause serious harm to human society, and might even "exterminate humanity" in the future.

Although the claim of "human extinction" may sound alarmist, since the Superalignment team was disbanded, OpenAI's GPT Store has been flooded with spam, and data has been scraped from YouTube in violation of the platform's terms of service.

Seeing where AI safety ranks at OpenAI, more and more safety researchers are choosing to leave for other jobs.

Elon Musk couldn't help but criticize Sam Altman: "Safety is not OpenAI's top priority."

Sam Altman has ignored outside criticism and devoted all his energy to turning OpenAI into a fully for-profit entity, no longer controlled by its nonprofit organization.

If OpenAI becomes a for-profit company, the cap on annual profit distributions to limited partners will disappear. The prospect of huge returns could attract more partners and also pave the way for an OpenAI IPO.

In other words, OpenAI, valued at up to $86 billion (approximately RMB 624.4 billion), would bring even more wealth to Sam Altman himself.

Faced with dollars, Sam Altman has resolutely given up on AI safety.