A Discussion on the Challenges and Innovations in Autonomous Driving Testing Technology

12/02 2024

With the rapid development of autonomous driving technology, testing has become increasingly important. Autonomous driving testing must verify the independent performance of the vehicle's perception, decision-making, and control modules and also confirm the overall reliability of the system in complex scenarios. However, it faces many technical challenges, including the diversity and accuracy of scenario generation, the verification of multi-sensor data fusion accuracy, efficient time synchronization mechanisms, and the gap between simulation platforms and real-world scenarios.

Necessity and Current Status of Autonomous Driving Testing

1.1 The Complexity of Autonomous Driving Technology Drives Testing Changes

The complexity of autonomous driving systems is unmatched by traditional vehicle systems. Taking Level 3 (L3) and above autonomous driving systems as examples, their operation relies on the coordination of multi-level modules, including multi-source data acquisition and fusion in the perception module, dynamic environment prediction and planning in the decision-making module, and path execution and adjustment in the control module. This complex technical requirement directly determines the necessity and difficulty of testing.

The perception module is the 'eyes' of the entire autonomous driving system, collecting environmental information through various sensors such as LiDAR, cameras, and millimeter-wave radars. These sensors not only have their own technical limitations (such as LiDAR performance degradation in rain and snow conditions and limited imaging capability of cameras under strong backlight), but their data also needs to be fused through algorithms to achieve consistent environmental perception. This multi-source data fusion technology places extremely high demands on time synchronization and spatial alignment, making testing tasks extremely complex.

The decision-making module needs to perform real-time analysis on the perceived environment, predict the behavior of other traffic participants, and plan safe paths. For example, at complex urban intersections, the system needs to consider factors such as traffic light status, surrounding pedestrian dynamics, and other vehicle behaviors simultaneously. The core of decision-making module testing is to verify the rationality and robustness of its planning strategies, especially its emergency response capabilities during unexpected events.

The control module translates decisions into specific vehicle operations, such as steering, acceleration, or braking. Its performance directly affects the accuracy and response delay of vehicle execution. Testing must verify both the accuracy of instruction transmission and vehicle stability under extreme conditions (such as wet roads and emergency braking).

1.2 Current Status and Deficiencies of the Testing System

Currently, autonomous driving testing mainly adopts three methods: closed-field testing, open-road testing, and simulation testing, but each has its limitations and cannot fully cover the testing needs of autonomous driving systems.

1. Closed-field Testing: Typically used to simulate standardized scenarios, such as urban intersections, roundabouts, and highway lane changes. This method effectively verifies vehicle performance in typical scenarios but insufficiently covers long-tail scenarios (low-probability but high-risk scenarios).

2. Open-road Testing: Running autonomous vehicles on real roads can reflect system performance in actual traffic environments. However, this method is costly and inefficient, and it is constrained by laws and regulations, making it difficult to test high-risk scenarios.

3. Simulation Testing: Simulating massive driving scenarios through virtual environments is currently a key area of exploration in the industry. However, there are differences between simulation environments and real-world scenarios, especially in dynamic traffic flow simulation and extreme weather reproduction, and the reliability of simulation testing still needs to be improved.

Challenges and Breakthroughs in Scenario Generation Technology

2.1 The Importance and Complexity of Scenario Generation

The operating environment of autonomous vehicles is extremely complex, including different terrains, weather conditions, traffic rules, and driving habits. For an autonomous driving system to adapt to all possible scenarios, its test coverage would in theory have to span an effectively unbounded scenario space. The diversity of real-world scenarios far exceeds testing capabilities, and generating 'long-tail scenarios' is especially challenging. For example, a pedestrian suddenly crossing the road at night may have only a one-in-a-thousand probability of occurring, yet it is crucial for system performance verification.

The core task of scenario generation is to accurately model complex real-world environments, including:

1. Dynamic objects: Motion patterns and behavior predictions of pedestrians, vehicles, bicycles, etc.

2. Static environment: Width of roads, lane markings, diversity of roadside facilities, etc.

3. Weather and lighting: Rain, snow, haze, and changes in lighting during sunrise and sunset.

Differences in traffic regulations and infrastructure across different countries and regions also increase the complexity of scenario generation. For example, the design of roundabouts in Europe is significantly different from intersections in the United States, posing regional adaptation requirements for test scenario modeling.

2.2 Technical Approaches to Scenario Generation

Scenario generation technology has evolved from manually designed rules to data-driven generation and currently primarily includes the following methods:

1. Rule-based Scenario Modeling

This method defines scenarios by manually writing rules and logic. For example, when defining a 'rainy day intersection' scenario, variables such as rainfall, vehicle speed, and pedestrian behavior can be adjusted through parameters. The advantage of this method is that it has a high degree of controllability over the generated scenarios, but its scalability is poor, making it difficult to adapt to the needs of massive scenarios.

2. Data-driven Scenario Generation

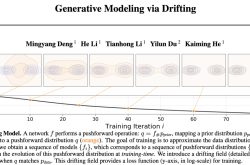

With the accumulation of road test data, it becomes possible to extract scenario features from real data using machine learning techniques and generate representative scenarios. For example, Generative Adversarial Networks (GANs) can be used to synthesize highly realistic dynamic traffic flow scenarios, which is especially useful when simulating complex urban traffic.

3. Adaptive Scenario Generation

This method dynamically adjusts generation parameters based on test results to improve coverage of long-tail scenarios. For example, when the system exhibits a high error rate in a certain type of scenario, similar scenarios can be prioritized for focused testing.
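The adaptive idea can be sketched as failure-weighted sampling: scenario categories with a higher observed error rate are drawn more often in the next test round. The following is a minimal Python sketch, not a description of any particular tool; the category names, the tallies, and the `floor` parameter (which keeps categories without failures from dropping out entirely) are all assumptions made for illustration.

```python
import random


def sampling_weights(failure_counts, run_counts, floor=0.05):
    """Turn per-category failure rates into normalized sampling weights.

    `floor` is an assumed minimum rate so that every category stays
    reachable even when it has produced no failures yet.
    """
    raw = {}
    for category, runs in run_counts.items():
        failures = failure_counts.get(category, 0)
        rate = failures / runs if runs else 0.0
        raw[category] = max(rate, floor)
    total = sum(raw.values())
    return {category: w / total for category, w in raw.items()}


def pick_next_scenarios(weights, k, seed=0):
    """Draw k scenario categories, favoring those with higher weight."""
    rng = random.Random(seed)
    categories = list(weights)
    return rng.choices(categories, weights=[weights[c] for c in categories], k=k)


# Hypothetical tallies from an earlier test round.
run_counts = {"night_pedestrian": 200, "rainy_intersection": 200, "highway_merge": 200}
failure_counts = {"night_pedestrian": 30, "rainy_intersection": 10, "highway_merge": 0}

weights = sampling_weights(failure_counts, run_counts)
batch = pick_next_scenarios(weights, k=10)
```

With these made-up numbers, the night pedestrian category receives a weight of about 0.6 and the other two about 0.2 each, so the next batch concentrates on the weakest area without abandoning the rest.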

2.3 Current Bottlenecks and Outlook

Although significant progress has been made in scenario generation technology, there are still several main bottlenecks:

1. Balancing Realism and Diversity: Generated scenarios need to strike a balance between realism and diversity, such as accurately reflecting real-world environments while covering extreme conditions.

2. Improving Generation Efficiency: The current high-dimensional scenario generation has high computational costs, limiting the implementation of large-scale testing.

3. Cross-regional Scenario Adaptation: Scenario generation needs better regional adaptability to handle the unique road structures and traffic behaviors of different regions.

By combining big data technology and reinforcement learning algorithms, scenario generation technology is expected to achieve more efficient and precise automated upgrades, providing more comprehensive support for testing efforts.

Key Challenges and Solutions in Multi-sensor Fusion Testing Technology

3.1 The Necessity of Multi-sensor Fusion

In autonomous driving systems, sensor fusion is the core task of the perception module, aiming to integrate data from different sensors (such as LiDAR, cameras, millimeter-wave radars, ultrasonic radars, etc.) to generate a unified and high-precision environmental model. The advantage of this multi-modal data integration lies in compensating for the deficiencies of a single sensor, such as:

• LiDAR excels in accuracy but performs poorly in rain and snow conditions and at long distances;

• Cameras provide rich visual information but have limited depth perception capabilities;

• Millimeter-wave radars can penetrate rain, snow, and fog but have lower accuracy and resolution.

By fusing data from different types of sensors, autonomous driving systems can obtain a more comprehensive understanding of the environment, especially in dynamic scenarios such as pedestrians crossing and vehicles merging. However, this data fusion testing faces many technical challenges, particularly in terms of real-time performance, accuracy, and robustness.

3.2 Testing Challenges in Fusion Technology

1. Time Synchronization:

The sampling frequencies and response times of different sensors may vary. For example, LiDAR typically operates at 10 Hz or higher, while cameras may operate at 30 Hz or even higher. Unaligned timestamps lead to inconsistencies in fusion results, especially in high-speed scenarios: a vehicle traveling at 120 km/h covers about 33 m per second, so even a 100 ms time offset displaces a target by roughly 3.3 m. Testing needs to verify the performance of time synchronization algorithms (such as timestamp alignment).

2. Spatial Calibration:

Due to differences in installation positions and angles between sensors, extrinsic calibration is required to unify their coordinate systems under the same reference frame. Testing tasks include evaluating the tolerance of calibration errors and monitoring calibration deviations caused by vibrations or environmental changes during long-term operation.

3. Data Quality Differences:

The output data accuracy and noise characteristics of different sensors vary greatly. Testing needs to focus on evaluating how fusion algorithms handle the impact of high-noise data on overall perception accuracy. For example, cameras may produce overexposed images under strong light, while millimeter-wave radars may generate multipath effects around metallic objects.

4. Redundancy and Fault Detection:

To improve system robustness, multi-sensor fusion systems usually include redundancy mechanisms to handle single-sensor failures. Testing needs to verify whether the system can compensate using data from other sensors when some sensors fail or perform poorly.
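The scale of the time synchronization problem listed above can be checked with simple arithmetic: the position error caused by a time offset is the speed multiplied by the offset. The short Python sketch below assumes ideal periodic sampling without jitter; the function names are illustrative only.

```python
def displacement_m(speed_kmh, time_offset_s):
    """Distance a target moving at speed_kmh covers during time_offset_s."""
    return speed_kmh / 3.6 * time_offset_s


def worst_case_nearest_offset_s(rate_hz):
    """Largest gap to the nearest frame of a sensor sampling at rate_hz.

    Assumes ideal periodic sampling with no jitter: an arbitrary instant
    is never more than half a period away from the closest frame.
    """
    return 0.5 / rate_hz


# 100 ms of uncompensated delay at 120 km/h.
error_100ms = displacement_m(120, 0.100)

# Pairing each 10 Hz LiDAR sweep with the nearest 30 Hz camera frame.
camera_gap = worst_case_nearest_offset_s(30)
error_nearest = displacement_m(120, camera_gap)

print(f"{error_100ms:.2f} m at 100 ms offset")                        # 3.33 m
print(f"{error_nearest:.2f} m at {camera_gap * 1000:.1f} ms offset")  # 0.56 m at 16.7 ms
```

Even under these ideal assumptions, nearest-frame pairing alone leaves about half a meter of error at highway speed, which is why the alignment algorithm itself has to be part of the test scope.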

3.3 Innovative Methods for Fusion Technology Testing

1. Multi-scenario Dynamic Testing:

By setting up dynamic test scenarios (such as movable pedestrian dummies and vehicle targets), the synchronization and fusion performance of sensors under dynamic conditions can be tested. For example, changing pedestrian speeds or vehicle spacing can assess the system's recognition accuracy for targets at different distances.

2. Hardware-in-the-Loop (HIL) Testing:

In an HIL environment, sensor inputs are provided by simulation data rather than relying on the real environment. This method allows rapid verification of the correctness of fusion algorithms, especially the robustness of time synchronization and spatial calibration.

3. Combined Simulation and Real-world Environment Testing:

The simulation environment is used to cover extreme scenarios, while the real environment is used to verify the generalization ability of the model. By comparing the consistency between simulation and actual test data, the adaptability of the fusion algorithm to different scenarios can be evaluated.

4. Online Fault Injection Testing:

Simulating the failure (such as LiDAR data loss) or sudden noise increase of a certain sensor can evaluate the effectiveness of redundancy mechanisms. For example, by injecting random noise or offsets, the system's ability to maintain perception stability can be tested.
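Fault injection of this kind can be prototyped offline on recorded data before it is wired into a test bench. The Python sketch below is a toy illustration under stated assumptions: each sensor is reduced to a single range value per frame, and the `fuse` function is a deliberately simple stand-in for a real fusion algorithm, which would be far more involved.

```python
import random


def inject_faults(readings, drop_prob=0.0, noise_std=0.0, offset=0.0, seed=0):
    """Return a copy of `readings` with dropped (None), noisy, or biased samples."""
    rng = random.Random(seed)
    corrupted = []
    for value in readings:
        if rng.random() < drop_prob:
            corrupted.append(None)  # simulated data loss
        else:
            corrupted.append(value + offset + rng.gauss(0.0, noise_std))
    return corrupted


def fuse(lidar, radar):
    """Toy fusion: average the readings that are available in each frame."""
    fused = []
    for lidar_value, radar_value in zip(lidar, radar):
        available = [v for v in (lidar_value, radar_value) if v is not None]
        fused.append(sum(available) / len(available) if available else None)
    return fused


# Hypothetical ground truth: a target closing from 50 m in 0.5 m steps.
truth = [50.0 - 0.5 * i for i in range(20)]

lidar = inject_faults(truth, drop_prob=1.0)          # total LiDAR data loss
radar = inject_faults(truth, noise_std=0.2, seed=1)  # radar still alive, but noisy
fused = fuse(lidar, radar)

worst_error = max(abs(f - t) for f, t in zip(fused, truth))
```

The check of interest is that `fused` never becomes `None` while one sensor survives and that `worst_error` stays within whatever bound the test plan defines for degraded operation.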

3.4 Outlook: Future Directions of Fusion Technology

At the forefront of intelligent driving, the future focus of multi-sensor fusion testing technology will be on the following aspects:

• Testing of Adaptive Fusion Algorithms: With the application of machine learning and deep learning in fusion algorithms, verifying their real-time performance and interpretability will become a priority.

• Testing of Distributed Sensor Networks: With V2X (Vehicle-to-Everything) technology support, sensors will not be limited to a single vehicle but will also include data from roadside infrastructure. Testing needs to cover cross-device data transmission and synchronization performance.

• Optimization of Scenario Diversity Coverage: Future testing will increasingly rely on automated scenario generation technology to improve the efficiency and quality of long-tail scenario coverage.

High-precision Time Synchronization Verification Technology

4.1 Technical Principles of Time Synchronization

In autonomous driving systems, time synchronization is essential for accurate multi-sensor data fusion. High-precision time synchronization not only relies on the stability of hardware clocks but also requires algorithm optimization to achieve time alignment between different sensors.

• Hardware Timestamp: Attach precise time information to each frame of data through GPS clock signals or in-vehicle hardware timestamp mechanisms.

• Software Alignment Algorithm: Adjust the time data of different sensors at the software level through delay estimation or interpolation algorithms to align them logically.
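As an illustration of software alignment by interpolation, the Python sketch below resamples one sensor's signal at the timestamp of another sensor's frame. It assumes a scalar signal, sorted timestamps that already share a common clock, and linear motion between samples; timestamps are given in integer milliseconds to keep the arithmetic exact.

```python
from bisect import bisect_left


def interpolate_at(timestamps, values, t_query):
    """Linearly interpolate a scalar signal at t_query.

    `timestamps` must be sorted ascending. Returns None outside the
    recorded range instead of extrapolating.
    """
    if not timestamps or t_query < timestamps[0] or t_query > timestamps[-1]:
        return None
    i = bisect_left(timestamps, t_query)
    if timestamps[i] == t_query:
        return values[i]
    t0, t1 = timestamps[i - 1], timestamps[i]
    alpha = (t_query - t0) / (t1 - t0)
    return values[i - 1] + alpha * (values[i] - values[i - 1])


# Hypothetical radar range samples (ms, m) and a LiDAR sweep stamped at 75 ms.
radar_ts_ms = [0, 50, 100]
radar_range_m = [30.0, 29.0, 28.0]

aligned = interpolate_at(radar_ts_ms, radar_range_m, 75)
print(aligned)  # 28.5
```

Refusing to extrapolate is a design choice made for this sketch: a test harness usually benefits from seeing a missing value rather than a guessed one.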

4.2 Key Technical Challenges in Time Synchronization Testing

1. Delay Assessment and Compensation:

Testing needs to assess the transmission delay of each sensor and verify the accuracy of compensation algorithms. For example, LiDAR data typically has higher latency, and testing needs to ensure the real-time nature of data after compensation.

2. Clock Drift Detection:

Internal clocks of different sensors may drift due to hardware differences. Testing needs to verify whether the system can maintain high-precision alignment through clock synchronization protocols (such as PTP) during long-term operation.

3. Impact of Multipath Transmission:

In V2X communication scenarios, multipath transmission of signals may increase time synchronization errors. Testing needs to verify the system's robustness in complex communication environments.
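Clock drift can be quantified from logged timestamps without touching the synchronization protocol itself. The Python sketch below fits a straight line to the offset between a sensor clock and a reference clock by ordinary least squares; the slope is the drift. The synthetic log (a 2 ms initial offset and 50 ppm drift) is invented purely for illustration, and the sketch makes no claim about how PTP works internally.

```python
def estimate_drift(reference_s, sensor_s):
    """Fit offset = initial_offset + drift * reference_time by least squares.

    Returns (initial_offset_s, drift), where drift is dimensionless
    (seconds gained per second); multiply by 1e6 for ppm.
    """
    n = len(reference_s)
    offsets = [s - r for r, s in zip(reference_s, sensor_s)]
    mean_t = sum(reference_s) / n
    mean_o = sum(offsets) / n
    var_t = sum((t - mean_t) ** 2 for t in reference_s)
    cov = sum((t - mean_t) * (o - mean_o) for t, o in zip(reference_s, offsets))
    drift = cov / var_t
    return mean_o - drift * mean_t, drift


# Synthetic log: one sample per minute for ten minutes.
reference = [float(t) for t in range(0, 601, 60)]
sensor = [t + 0.002 + 50e-6 * t for t in reference]

offset0, drift = estimate_drift(reference, sensor)
drift_ppm = drift * 1e6
seconds_to_1ms = 0.001 / drift  # time until drift alone adds 1 ms of error
```

For this synthetic log the fit recovers roughly 50 ppm, meaning an uncorrected clock would accumulate an extra millisecond about every 20 seconds, a useful yardstick when deciding how often resynchronization must occur.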

4.3 Technical Path for Time Synchronization Verification

1. High-frequency Precision Comparison Testing:

Using high-frequency data recording systems, timestamp deviations among different sensor data streams can be compared in real time to determine the error range of the synchronization mechanism.

2. Dynamic Scenario Injection Testing:

Simulating rapidly changing scenarios (such as sharp turns and emergency braking) can verify the performance of time synchronization mechanisms under dynamic conditions.

3. Standardized Verification Tools:

Using standardized time synchronization evaluation tools (such as dedicated clock precision analyzers) can verify the overall time synchronization performance of the system.
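A timestamp comparison of the kind described in the first method above can be sketched in a few lines of Python. The example pairs each frame of one sensor with the nearest frame of another and summarizes the deviations; the timestamps (in microseconds) and the 5 ms tolerance are hypothetical values chosen for illustration, not recommended thresholds.

```python
from bisect import bisect_left


def nearest_deviation(sorted_ts, t):
    """Signed gap (other - t) to the entry of sorted_ts closest to t."""
    i = bisect_left(sorted_ts, t)
    candidates = sorted_ts[max(i - 1, 0): i + 1]
    return min((c - t for c in candidates), key=abs)


def deviation_report(ts_a, ts_b, tolerance):
    """Summarize how far each stamp in ts_a is from its nearest stamp in ts_b."""
    abs_devs = [abs(nearest_deviation(ts_b, t)) for t in ts_a]
    return {
        "mean_abs": sum(abs_devs) / len(abs_devs),
        "max_abs": max(abs_devs),
        "violations": sum(d > tolerance for d in abs_devs),
    }


# Hypothetical recordings: 10 Hz LiDAR and roughly 30 Hz camera, in microseconds.
lidar_us = [0, 100_000, 200_000, 300_000]
camera_us = [1_000, 34_333, 67_667, 101_000, 134_333,
             167_667, 201_000, 234_333, 267_667, 306_000]

report = deviation_report(lidar_us, camera_us, tolerance=5_000)
print(report)  # {'mean_abs': 2250.0, 'max_abs': 6000, 'violations': 1}
```

The last camera frame arrives 6 ms late in this made-up log, so it shows up as the single tolerance violation.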

Scenario Coverage Testing Technology

5.1 The Importance of Scenario Coverage Testing

Autonomous driving technology needs to cope with ever-changing road environments, making scenario coverage testing a crucial aspect. Traditional vehicle testing typically focuses on structured environments such as highways or standardized roads, while autonomous driving requires coverage of more unstructured scenarios, such as complex urban intersections, mountainous roads, and severe weather conditions. The goal of scenario coverage testing is to simulate as many driving scenarios as possible in the real world to ensure the functional stability and safety of autonomous driving systems under different conditions.

5.2 Main Technical Difficulties in Scenario Coverage Testing

1. Scenario Complexity and Diversity:

The complexity of scenarios directly affects the comprehensiveness of testing. A typical urban road scenario may include stationary obstacles (such as parked cars), dynamic targets (such as pedestrians crossing the road), complex traffic signals, and situations where road markings are blurred or missing.

Testing requires evaluating the system's decision-making and response capabilities in multi-target, multi-interference environments. Efficiently constructing and covering these complex scenarios is a technical challenge.

2. Reproducibility of Scenarios:

In autonomous driving testing, if a problem or failure point is discovered, test engineers need to be able to accurately reproduce the scenario for issue localization and algorithm optimization. However, many real-world scenarios are random and unpredictable, such as the degree of road slipperiness in rainy or snowy weather. This places high demands on scenario data recording and reproduction technology.

3. Testing Coverage of Extreme Scenarios:

Long-tail scenarios (edge cases) are often where autonomous driving systems fail. These scenarios may include rare but potentially dangerous conditions, such as an animal suddenly appearing on a highway or a vehicle in front making an emergency U-turn at an unsignalized intersection. Covering these scenarios requires significant effort and resources.

5.3 Technical Solutions for Scenario Coverage Testing

1. Simulation-Based Scenario Generation:

Using advanced simulation platforms (such as CARLA, LGSVL), a large number of complex scenarios can be quickly generated. These platforms allow users to define scenario parameters (such as weather, time, road layout, traffic flow) and simulate different target behaviors. For example, by setting vehicle brake delays, the emergency response capability of autonomous driving systems can be evaluated.

By integrating with real-world data, simulation scenarios can be made more realistic. For example, real-world scene data recorded by sensors can be imported into the simulation platform to build high-fidelity test scenarios.

2. Automated Scenario Expansion Technology:

Utilize generative artificial intelligence or augmented reality technology to automatically expand existing scenario libraries. For example, generate multiple variations from a single urban intersection scenario, including irregular pedestrian behavior, abnormal vehicle driving paths, etc. This technology can significantly improve test scenario coverage.

3. Data-Driven Scenario Analysis:

Collect large amounts of driving data in the real world and use data mining techniques to identify high-risk or high-complexity scenarios. For example, analyzing driving recorder data can reveal common characteristics of accident-prone locations (such as complex intersections or blind spots). Then, targeted testing can be conducted for these key scenarios.

4. Reproducible Scenario Reconstruction Technology:

Use LiDAR mapping, video analysis, and scene modeling tools to transform real-world scenarios into test environments. For example, if a test reveals that the system cannot identify low obstacles behind a truck, the scenario can be reconstructed to study deficiencies in the system's perception algorithm.
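The simplest form of scenario expansion is a parameter sweep over a base scenario. The Python sketch below generates every combination of the varied parameters; the `Scenario` fields and their values are hypothetical, and loading the result into a simulator is deliberately left out because that step depends on the platform in use.

```python
from dataclasses import dataclass, replace
from itertools import product


@dataclass(frozen=True)
class Scenario:
    """Hypothetical scenario description; field names are illustrative."""
    weather: str
    time_of_day: str
    pedestrian_speed_mps: float
    lead_vehicle_brake_delay_s: float


def expand(base, **variations):
    """Yield one Scenario per combination of the varied fields.

    Fields not named in `variations` keep the value from `base`.
    """
    names = list(variations)
    for combo in product(*(variations[name] for name in names)):
        yield replace(base, **dict(zip(names, combo)))


base = Scenario("clear", "day", 1.4, 0.5)
variants = list(expand(
    base,
    weather=["clear", "rain", "fog"],
    time_of_day=["day", "night"],
    pedestrian_speed_mps=[1.4, 3.0],
))
print(len(variants))  # 12
```

A full sweep grows multiplicatively with each added parameter, which is one reason the generative and data-driven approaches described above are used to pick variations worth running rather than enumerating all of them.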

5.4 Evaluation Metrics for Scenario Coverage

When conducting scenario coverage testing, it is important to assess the completeness and effectiveness of the testing.

• Scenario Diversity: Whether the test scenarios include various typical environments (such as urban roads, highways, rural roads).

• Environmental Condition Coverage: Whether scenarios under different weather conditions (sunny, rainy, foggy) and lighting conditions (daytime, nighttime, dawn) have been tested.

• Behavioral Richness: Whether various traffic participant behaviors are covered (such as vehicle overtaking, pedestrians crossing lanes, bicycles suddenly turning).

• Proportion of Tail Scenarios: Whether sufficient coverage has been provided for rare scenarios that may cause system errors.
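These metrics can be made concrete by treating coverage as the share of parameter combinations that have been exercised at least once. The following Python sketch assumes three discrete dimensions and a run log tagged as "typical" or "tail"; both the dimension values and the tagging scheme are assumptions for illustration, and real coverage models are usually richer.

```python
from itertools import product


def coverage(tested, dimensions):
    """Share of all dimension-value combinations that appear in `tested`.

    `tested` is an iterable of tuples ordered like the keys of `dimensions`.
    Returns (ratio, sorted list of combinations never tested).
    """
    universe = set(product(*dimensions.values()))
    seen = set(tested) & universe
    return len(seen) / len(universe), sorted(universe - seen)


def tail_share(tags):
    """Fraction of executed runs tagged as long-tail (rare, high-risk)."""
    return sum(tag == "tail" for tag in tags) / len(tags)


dimensions = {
    "road": ["urban", "highway", "rural"],
    "weather": ["sunny", "rainy", "foggy"],
    "light": ["day", "night"],
}

# Hypothetical run log; the first combination was executed twice.
tested = [
    ("urban", "sunny", "day"), ("urban", "rainy", "day"),
    ("urban", "rainy", "night"), ("highway", "sunny", "day"),
    ("highway", "foggy", "night"), ("rural", "sunny", "day"),
    ("urban", "sunny", "day"),
]

ratio, missing = coverage(tested, dimensions)
share = tail_share(["typical", "typical", "tail", "typical"])
```

Here 6 of the 18 combinations are covered, and the `missing` list points directly at the gaps, for example that rural roads have never been tested in fog.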

5.5 Future Trends in Scenario Coverage Testing

1. Dynamic Scenario Adaptive Generation:

With the development of deep learning technology, future test systems can dynamically generate challenging scenarios based on the performance of autonomous driving algorithms. For example, when the system performs poorly in a certain type of scenario, similar but more complex scenarios can be automatically generated.

2. Distributed Testing Environment:

Utilize cloud computing technology to build a shared test scenario library globally. Multiple enterprises or research institutions can conduct joint testing on the same platform, thereby sharing resources and test results.

3. Scenario Perception-Driven Real-Time Evaluation:

In the future, scenario coverage testing will further integrate with real-road testing, with the on-board system sensing the environment in real-time and judging whether it is an untested scenario. Uncovered scenarios will be recorded and uploaded to the test platform, forming a closed-loop optimization.

Standardization of Autonomous Driving Testing Technology and Toolchain Development

6.1 Importance of Standardizing Testing Technology

Currently, there is a lack of unified testing specifications and evaluation criteria in the development and testing of autonomous driving technology. For example, there are significant differences in regulations and testing requirements for autonomous driving in different regions of the world. This non-standardization limits the cross-regional deployment of autonomous driving systems and increases development costs for enterprises. Standardizing testing technology can not only improve testing efficiency but also provide a foundation for data sharing and technical integration between different vendors.

6.2 Key Components of the Testing Toolchain

1. Data Collection and Processing Module: Supports synchronous collection, annotation, and analysis of multi-sensor data. For example, provides efficient time synchronization and spatial calibration tools for LiDAR point clouds and camera images.

2. Simulation Testing Module: Supports scenario construction and execution and provides comprehensive evaluation functions for autonomous driving system performance.

3. Performance Analysis Module: Provides separate test evaluation tools for each module of perception, planning, decision-making, and control.

4. Fault Injection and Debugging Module: Supports simulating sensor failures, data delays, and other scenarios and analyzing system robustness.

6.3 Directions for Standardization

• Unified Data Format: Establish unified multi-sensor data storage formats and interface protocols to facilitate data sharing and interoperability between different systems.

• Unified Performance Evaluation Metrics: Clarify the calculation methods and evaluation criteria for the performance indicators of each module, such as object detection rate and false positive rate for the perception module, path optimality and smoothness for the planning module, and following error and lateral offset for the control module.

• Standardized Testing Process: Form a complete and universal testing process framework from scenario design, data collection to performance analysis.
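To show why the calculation method itself needs to be standardized, the Python sketch below writes out three such indicators explicitly. The definitions are assumptions, not an established standard: detection rate is taken as recall, false positives are normalized per frame (true negatives are not well defined for object detection), and lateral offset is summarized as a root-mean-square value. The counts are made up.

```python
import math


def detection_rate(true_positives, false_negatives):
    """Share of ground-truth objects that were detected (recall)."""
    return true_positives / (true_positives + false_negatives)


def false_positives_per_frame(false_positives, frames):
    """Average number of spurious detections per frame."""
    return false_positives / frames


def rms(values):
    """Root-mean-square of a sequence, e.g. lateral offsets in meters."""
    return math.sqrt(sum(v * v for v in values) / len(values))


# Hypothetical counts from one test run.
recall = detection_rate(true_positives=180, false_negatives=20)
fp_rate = false_positives_per_frame(false_positives=15, frames=300)
lateral_rms = rms([0.3, -0.4, 0.0, 0.5])

print(f"detection rate {recall:.2f}, {fp_rate:.2f} FP/frame, "
      f"lateral RMS {lateral_rms:.3f} m")
```

Two vendors reporting a "false positive rate" with different normalizations would produce numbers that cannot be compared, which is the practical argument for fixing these formulas in a shared specification.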

Future Development Directions of Autonomous Driving Testing Technology

7.1 AI-Based Test Automation

With the rapid development of autonomous driving technology, AI is gradually being integrated into the testing field, enabling the automation and intelligence of the testing process. This not only improves testing efficiency but also demonstrates unique advantages in many complex scenarios.

1. Intelligent Generation of Test Cases:

AI-based scenario generation algorithms can automatically create new test cases covering various potential issues. For example, Generative Adversarial Networks (GANs) are used to generate anomalous scenarios such as obstacles on the road or non-standard traffic signs. By combining with traditional rule-based generation methods, the breadth and depth of test cases can be significantly expanded.

2. Fault Prediction and Root Cause Analysis:

AI systems can discover hidden patterns and predict potential issues in large-scale test data. For example, by analyzing abnormal patterns in sensor data streams, potential failures in the perception module can be detected in advance. Additionally, AI can assist test engineers in root cause analysis to optimize algorithm and hardware performance.

3. Intelligent Filtering and Annotation of Test Data:

Processing and annotation of massive test data have always been a bottleneck. AI algorithms can automatically classify and filter data, quickly identify key problematic data, and perform efficient annotation. For example, for tail scenarios, AI can prioritize segments with potentially dangerous features in unlabeled raw data.

4. Real-Time Test Feedback:

AI technology can analyze the performance of autonomous driving systems in real-time and dynamically adjust test parameters. For example, during simulation testing, when the system detects a high failure rate in a certain type of scenario, it automatically adjusts the scenario complexity and generates more similar scenarios for further testing.

7.2 Application of Digital Twin Technology

Digital Twin technology is becoming an important trend in autonomous driving testing. By accurately reproducing the physical world digitally, testing and optimization can be performed more efficiently.

1. High-Precision Scenario Reconstruction:

Digital Twin technology combines high-precision maps, sensor data, and 3D modeling tools to achieve a high degree of reproduction of real-world road environments. For example, all roads, buildings, traffic signals, and vehicle traffic in an urban area can be reconstructed in a digital environment for simulation testing.

2. Real-Time Data Synchronization and Dynamic Updates:

As city infrastructure data changes, the Digital Twin system can dynamically update test scenarios. For example, when a new traffic sign is added to a road or a traffic light setting is changed, the testing platform can automatically update and rerun relevant test cases.

3. Combined Virtual-Real Testing:

On the Digital Twin platform, real and virtual vehicles can participate in testing together. The behavior of real vehicles is fed back into the virtual scenario, and the dynamic data of virtual vehicles can be used to interfere with the decision-making of real vehicles. This combined virtual-real testing can more comprehensively validate system performance in real-world environments.

7.3 Development of Testing Cloud Platforms

With the globalization of autonomous driving development, test data sharing and collaboration have become particularly important, and cloud-based testing platforms are gradually becoming a trend.

1. Distributed Scenario Library Management:

Cloud platforms can store global test scenario libraries, and users can select scenarios from specific regions for testing as needed, for example complex highway scenarios in the US, narrow rural roads in Europe, or congested urban traffic in East Asia.

2. Collaboration in Testing Processes:

Development teams can share test results in real-time and collaboratively optimize algorithms through the cloud platform. For example, issues discovered during road testing in China can be quickly shared with the US team through the cloud platform for joint optimization.

3. Sharing of Computing Resources:

Simulation testing for autonomous driving has extremely high computational resource requirements. Through the cloud platform, enterprises can rent high-performance computing resources on demand, avoiding the need to build expensive computing clusters themselves.

7.4 Testing of New Sensors and V2X Technology

With the continuous advancement of sensors and Vehicle-to-Everything (V2X) technology, autonomous driving testing needs to conduct more research on new technologies.

1. Collaborative Testing of Multi-Modal Sensors:

New sensors (such as spectral sensors or quantum sensors) are gradually being applied in autonomous driving systems. Testing needs to evaluate the collaborative performance of multi-modal sensors under different environmental conditions, for example how well thermal imaging and LiDAR data fuse at night.

2. V2X Scenario Testing:

V2X technology relies on communication between vehicles and infrastructure, and its performance directly affects the decision-making efficiency of autonomous driving. Testing needs to verify the low latency and high reliability of V2X communication, especially under conditions of signal congestion or insufficient coverage.

3. Adaptability Testing in Dynamic Environments:

The performance of V2X devices in dynamic scenarios needs special verification. For example, testing should check whether a vehicle passing through an area at high speed can receive signals and make the corresponding decisions within the short time available.
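Latency and reliability checks for V2X messages can be expressed with a few summary statistics over a message log. The Python sketch below computes a delivery ratio, nearest-rank latency percentiles, and the distance a vehicle travels while a message is still in flight; the message IDs and latency values are invented for illustration.

```python
import math


def delivery_ratio(sent_ids, received_ids):
    """Fraction of sent message ids that arrived at least once."""
    sent = set(sent_ids)
    return len(sent & set(received_ids)) / len(sent)


def percentile(values, q):
    """Nearest-rank percentile, q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def distance_during(latency_ms, speed_kmh):
    """Meters traveled while a message is still in flight."""
    return speed_kmh / 3.6 * latency_ms / 1000


# Hypothetical log: ten messages sent, message 7 lost.
sent_ids = list(range(1, 11))
received_ids = [1, 2, 3, 4, 5, 6, 8, 9, 10]
latencies_ms = [12, 15, 11, 40, 14, 13, 95, 16, 12, 14]

ratio = delivery_ratio(sent_ids, received_ids)
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
travel_m = distance_during(p95, 120)
```

In this made-up log the median latency is 14 ms but the 95th percentile is 95 ms, during which a vehicle at 120 km/h moves a little over 3 m, so tail latency rather than the average is the figure worth constraining in high-speed scenarios.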

7.5 Ethical and Privacy Testing of Autonomous Driving Technology

Autonomous driving technology must not only pass technical performance tests but also undergo strict scrutiny in terms of ethics and privacy protection.

1. Ethical Decision-Making Testing:

Autonomous driving systems may face ethical choices in complex situations, such as scenarios where avoiding pedestrians may cause injury to passengers. Testing needs to verify whether the system's decision-making logic meets ethical requirements and minimizes the risk of harm.

2. Privacy Protection Testing:

Autonomous vehicles collect a large amount of environmental and passenger data, which may raise privacy concerns. Testing needs to verify whether the system's data encryption, storage, and access control mechanisms comply with international privacy protection regulations (such as GDPR). Additionally, the effectiveness of data anonymization techniques also needs to be rigorously evaluated.

7.6 Integration of Testing Technology and Policy Regulations

As autonomous driving policies and regulations improve, testing technology needs to closely align with relevant legal requirements. For example, different countries have different regulations on testing environments and data recording, and the testing platform needs to have the ability to flexibly adjust. At the same time, standardized test report output functions required by regulations also need to be integrated into testing tools.

Conclusion

Autonomous driving testing technology is crucial for ensuring system safety and reliability. With increasing scenario complexity, technological development, and regulatory requirements, testing technology is evolving towards diversity, intelligence, and standardization. In the future, innovations based on AI and Digital Twin technology, as well as globally collaborative testing platforms, will further promote the implementation and popularization of autonomous driving technology. At the same time, the introduction of ethical and privacy testing will lay a solid foundation for the social acceptance of autonomous driving systems. Driven by both technology and policy, autonomous driving testing technology is bound to usher in broader development prospects.
