What role does end-to-end play in autonomous driving?

10/23 2025

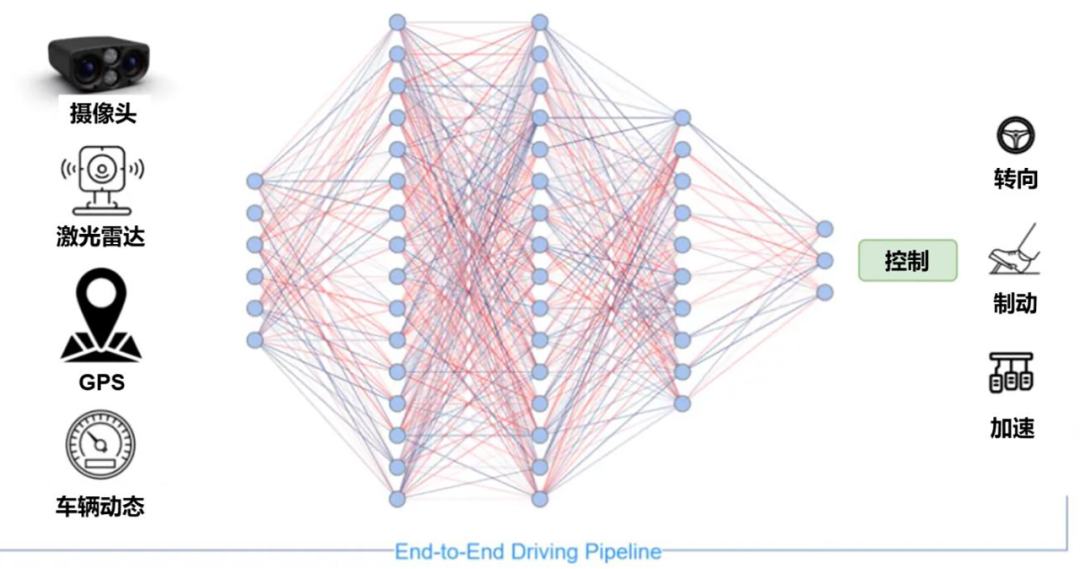

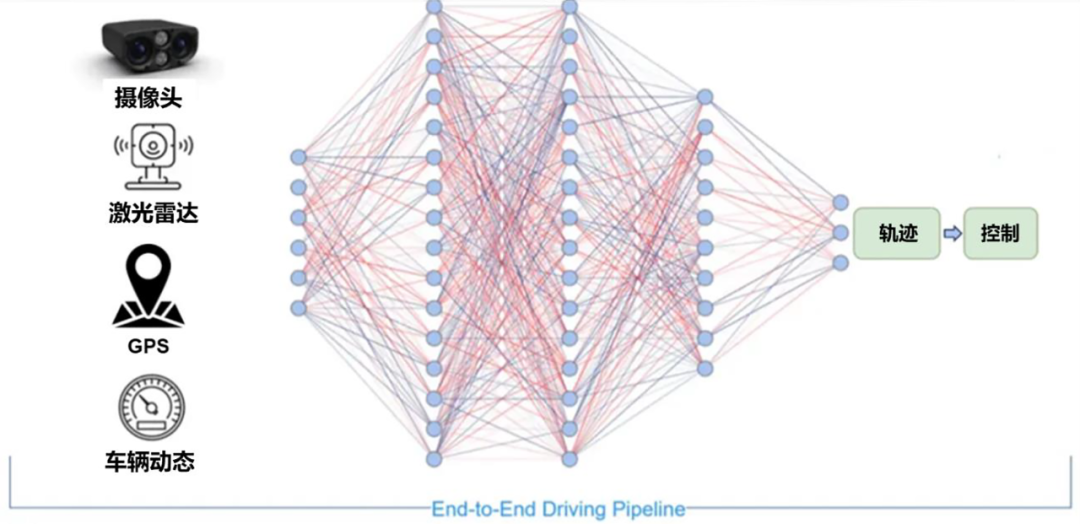

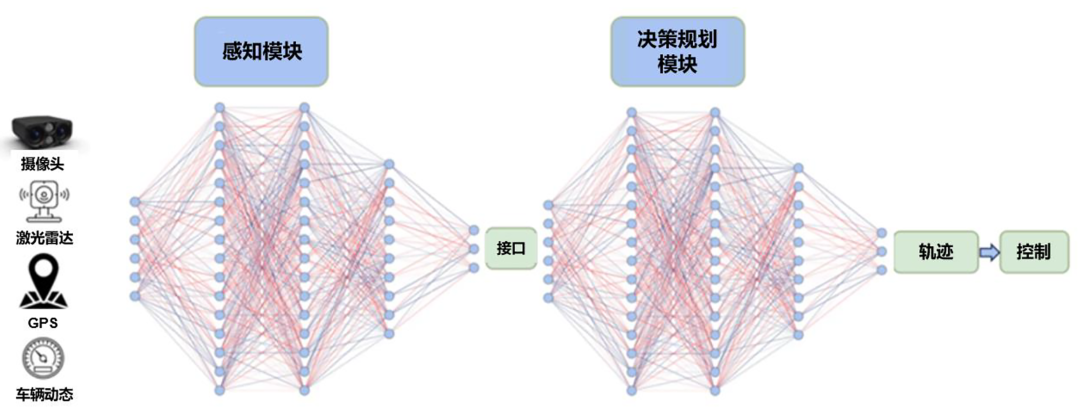

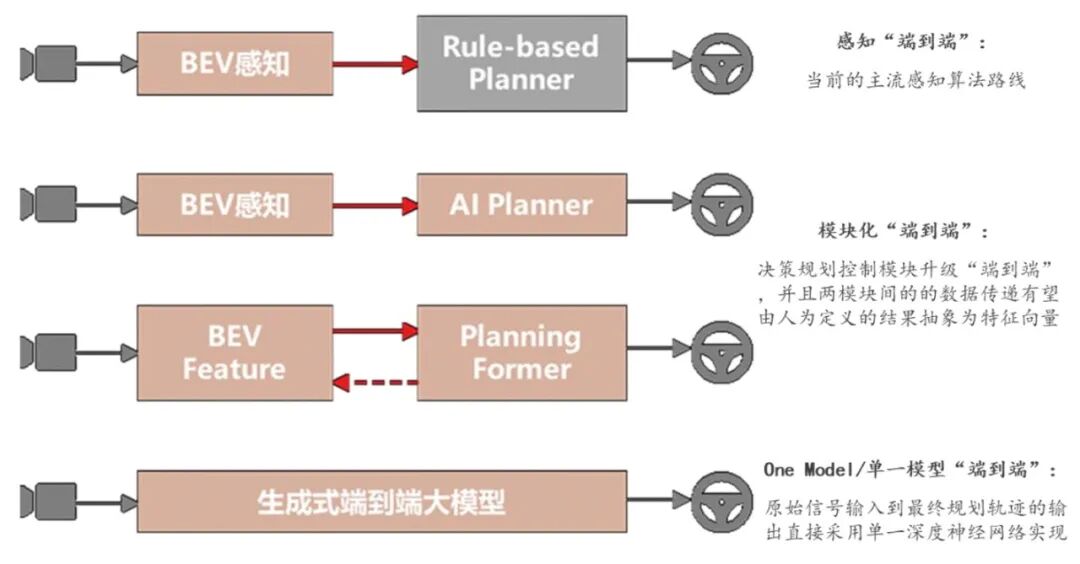

As autonomous driving technology develops, companies increasingly favor end-to-end systems. End-to-end learning hands the entire chain, from sensors (e.g., cameras, radar) to vehicle actions (steering, throttle, brakes), to a learned model that optimizes it as a whole, rather than decomposing the problem into many submodules governed by hand-written rules. The term has a narrow and a broad interpretation. Narrow end-to-end means mapping raw signals directly to control commands with a single neural network. Broad end-to-end emphasizes preserving raw information, minimizing hand-designed compression, and pursuing the overall goal through data-driven methods, even if some engineered interfaces remain.

Narrow end-to-end autonomous driving architecture (single neural network model for perception, decision-making, planning, and control)

Broad end-to-end autonomous driving architecture (neural network model for perception and decision-making, excluding control module)

Broad end-to-end autonomous driving architecture (neural networks for perception and decision-making, with manually designed data interfaces between modules)

Put more simply, traditional autonomous driving divides the driving task into perception, localization, prediction, planning, and control modules, each optimized separately and then integrated. The end-to-end approach trains one large network to learn the overall mapping from input to output, letting data define what counts as “good” driving instead of relying on engineers to specify rules for each step.
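The contrast can be sketched in a few lines of Python. This is a toy illustration only: the sensor keys (`lead_gap_m`, `speed_mps`), the thresholds, and the linear model standing in for a neural network are all assumptions made for the example, not anything a production stack uses.

```python
from typing import Callable, Dict, List

Sensors = Dict[str, float]   # hypothetical keys: "lead_gap_m", "speed_mps"
Controls = Dict[str, float]  # hypothetical keys: "throttle", "brake"


def clamp01(value: float) -> float:
    """Limit a command to the range [0, 1]."""
    return max(0.0, min(1.0, value))


def modular_stack(sensors: Sensors) -> Controls:
    """Traditional pipeline: hand-written stages pass human-readable
    results to one another."""
    # Perception: reduce the raw input to a labeled fact.
    obstacle_ahead = sensors["lead_gap_m"] < 20.0
    # Planning: a rule chosen by an engineer.
    target_speed = 0.0 if obstacle_ahead else 15.0
    # Control: proportional tracking of the planned speed.
    error = target_speed - sensors["speed_mps"]
    return {"throttle": clamp01(0.1 * error), "brake": clamp01(-0.1 * error)}


def make_end_to_end(weights: List[float], bias: float) -> Callable[[Sensors], Controls]:
    """End-to-end: one learned function from sensors to controls.
    A linear model stands in for the neural network; in practice the
    weights would come from training on driving data."""
    def policy(sensors: Sensors) -> Controls:
        u = (weights[0] * sensors["lead_gap_m"]
             + weights[1] * sensors["speed_mps"]
             + bias)
        return {"throttle": clamp01(u), "brake": clamp01(-u)}
    return policy
```

In the modular version every intermediate value can be inspected and every rule edited by hand. In the end-to-end version the behavior lives entirely in `weights` and `bias`, which only change through training.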

What exactly has changed in perception?

In early autonomous driving systems, perception tasks primarily focused on 2D or 3D detection, aiming to identify objects in images (e.g., vehicles, pedestrians, lane lines) and provide labeled bounding boxes to downstream modules. However, this “box”-centric data format created a semantic gap with subsequent path planning modules.

A recent mainstream trend is to project data from multiple cameras and sensors into a unified Bird’s Eye View (BEV) space. BEV integrates information from different viewpoints into one spatially consistent coordinate system, which makes it much easier to combine path planning with information about moving objects. The widespread adoption of BEV has thus reshaped the interface between perception and planning, making it easier for end-to-end learning models to understand and use.
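As a rough sketch of the idea, the snippet below drops points from several sensors onto one shared top-down grid. It assumes the points have already been lifted into a common ego-vehicle frame (the hard part, which real systems learn); the function name `to_bev`, the grid extent, and the cell size are illustrative choices.

```python
from typing import Dict, List, Tuple

# (x forward, y left, z up) in metres, already expressed in the ego frame.
Point = Tuple[float, float, float]


def to_bev(points_by_sensor: Dict[str, List[Point]],
           extent_m: float = 20.0,
           cell_m: float = 1.0) -> List[List[int]]:
    """Count how many points from all sensors fall into each cell of a
    square top-down grid centred on the ego vehicle."""
    size = int(2 * extent_m / cell_m)
    grid = [[0] * size for _ in range(size)]
    for points in points_by_sensor.values():
        for x, y, _z in points:  # height is discarded: the grid is 2D
            if -extent_m <= x < extent_m and -extent_m <= y < extent_m:
                row = int((x + extent_m) / cell_m)
                col = int((y + extent_m) / cell_m)
                grid[row][col] += 1
    return grid
```

Two cameras that see the same object from different angles end up incrementing the same cell, which is what makes the representation convenient for planning. Note that `_z` is thrown away, so the grid carries no height information.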

However, BEV remains 2D and lacks height information. Proposed solutions extend the representation to 3D with dense spatiotemporal fields such as “occupancy networks” (Occupancy, or OCC). An occupancy network answers questions such as “what occupies a given point in space over future frames, and with what probability,” incorporating time, space, and uncertainty to better model dynamic interactions.
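The data structure behind such a field can be illustrated with a small sketch. A real occupancy network learns its predictions from sensor data; here a constant-velocity rule with a confidence decay stands in for the learned model, and the names `predict_occupancy` and `query` are made up for the example.

```python
from typing import Dict, Tuple

Voxel = Tuple[int, int, int]           # (x, y, z) cell index
Key = Tuple[int, int, int, int]        # (future frame t, x, y, z)


def predict_occupancy(occupied: Dict[Voxel, float],
                      velocity: Voxel,
                      horizon: int,
                      decay: float = 0.8) -> Dict[Key, float]:
    """Build a sparse field: probability that a voxel is occupied at
    each future frame, assuming constant velocity (cells per frame) and
    confidence that shrinks by `decay` every frame."""
    field: Dict[Key, float] = {}
    for (x, y, z), prob in occupied.items():
        for t in range(horizon + 1):
            key = (t, x + velocity[0] * t, y + velocity[1] * t, z + velocity[2] * t)
            # Keep the highest probability if two objects reach the same voxel.
            field[key] = max(field.get(key, 0.0), prob * decay ** t)
    return field


def query(field: Dict[Key, float], t: int, voxel: Voxel) -> float:
    """Occupancy probability of `voxel` at frame `t` (0.0 if unknown)."""
    return field.get((t, *voxel), 0.0)
```

Unlike a BEV grid, each entry carries a height index, a time index, and a probability, so a planner can ask whether a specific volume is likely to be free two frames from now.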

Currently, the concept of “world models” is gaining traction. The core idea is to build a model capable of reconstructing and simulating world dynamics, enabling the system to “see the present” and “imagine future events.” World models can generate training data (addressing real-world long-tail sample shortages) and serve as internal simulators during decision-making to evaluate the consequences of different actions. World models are not only tools for upgrading perception/cognitive abilities but also crucial supplements for end-to-end training and validation. However, if the data generated by world models deviates significantly from real-world distributions, it can mislead training.

Diagram of end-to-end autonomous driving architecture evolution

How does the decision-making layer learn?

Once environmental perception is complete, there are several ways to hand decision-making and planning to learned models. One is imitation learning, which quickly acquires basic capabilities by fitting human driving data but generalizes poorly and degrades when the vehicle drifts away from the demonstrated situations. Another is reinforcement learning, which learns robust policies through trial and error but relies on simulation environments to avoid real-world risk. Combining the two is also common: the model is initialized with imitation learning and then optimized for long-term reward in simulation via reinforcement learning. All of these are candidate solutions for end-to-end decision-making.
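Imitation learning in its simplest form (behavior cloning) is plain supervised regression on logged human actions. The sketch below fits a one-parameter steering policy by gradient descent; the linear policy, the learning rate, and the synthetic demonstrations are assumptions chosen to keep the example short.

```python
from typing import List, Tuple

# Each demonstration pairs a state (lateral offset from lane centre, in
# metres) with the steering command the human driver applied.
Demo = Tuple[float, float]


def behavior_cloning(demos: List[Demo], lr: float = 0.05, epochs: int = 200) -> float:
    """Fit steer = w * offset to the demonstrations by minimising the
    mean squared error with gradient descent. Returns the learned w."""
    w = 0.0
    for _ in range(epochs):
        # d/dw of mean((w*s - a)^2) is mean(2 * (w*s - a) * s).
        grad = sum(2.0 * (w * s - a) * s for s, a in demos) / len(demos)
        w -= lr * grad
    return w


# Synthetic "human" who steers back toward the centre at half the offset.
demos = [(s, -0.5 * s) for s in (-1.0, -0.5, 0.5, 1.0)]
learned_w = behavior_cloning(demos)
```

The fit only sees offsets between -1 m and 1 m. Nothing in the training signal says what to do at 3 m, which is the generalization weakness described above and the reason reinforcement learning in simulation is often layered on top.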

World models play a pivotal role in the decision-making layer: given the current state, they generate multiple plausible futures, helping the decision module “think ahead.” The system therefore does not need to learn by repeated trial and error in the real world. It can evaluate the likely consequences of different actions inside its internal simulation and select the safer, more effective one. This mechanism is valuable for handling long-tail and extreme scenarios, but it introduces decision risk if the generated scenarios deviate significantly from real-world distributions, so generated data must be used cautiously.
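The “imagine, then choose” loop can be sketched as follows. A learned world model would predict rich future scenes; here a hand-written one-dimensional kinematic step stands in for it, and the state layout, candidate accelerations, and cost function are illustrative assumptions.

```python
from typing import Sequence, Tuple

State = Tuple[float, float]  # (gap to obstacle in metres, ego speed in m/s)


def world_model_step(state: State, accel: float, dt: float = 0.5) -> State:
    """Stand-in for a learned world model: predict the next state."""
    gap, speed = state
    new_speed = max(0.0, speed + accel * dt)
    return (gap - new_speed * dt, new_speed)


def rollout_cost(state: State, accel: float, steps: int = 6) -> float:
    """Imagine holding `accel` for `steps` frames and score the outcome.
    Lower is better; an imagined collision costs infinity."""
    cost = 0.0
    for _ in range(steps):
        state = world_model_step(state, accel)
        gap, speed = state
        if gap <= 0.0:
            return float("inf")
        cost -= speed  # reward forward progress
    return cost


def choose_action(state: State,
                  candidates: Sequence[float] = (-3.0, 0.0, 1.0)) -> float:
    """Pick the candidate acceleration whose imagined future is cheapest."""
    return min(candidates, key=lambda accel: rollout_cost(state, accel))
```

With a 100 m gap, `choose_action((100.0, 5.0))` picks the accelerating candidate; with a 10 m gap, `choose_action((10.0, 5.0))` picks braking because the other two imagined futures end in a collision. The choice is only as good as `world_model_step`: if the model's predictions drift from reality, so do the decisions.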

A compromise approach is “modular end-to-end.” This scheme uses neural networks on the perception side to output rich intermediate representations (e.g., BEV features or spatiotemporal occupancy fields) while retaining, or running in parallel, a relatively lightweight and interpretable model at the decision-making and control layers. Modules exchange feature vectors rather than human-readable labels. This approach is easier to validate and debug in engineering practice, making it a feasible transitional solution for many domestic (Chinese) manufacturers moving toward fully end-to-end systems.

What are the hard engineering problems that must be addressed?

To transition end-to-end autonomous driving from theoretical derivation to mass production, several practical bottlenecks must be overcome, including data, computational power, validation, interpretability, and continuous learning. These challenges collectively form the main barriers to current technological implementation and determine the focus and pace of industry competition.

End-to-end models place extremely high demands on data scale, data quality, and coverage of long-tail scenarios. Unlike language models, which can draw on vast amounts of publicly available text, autonomous driving requires extensive real-world driving video, vehicle state data, and the corresponding human driving behavior, covering rare scenarios such as nighttime, rain, snow, construction zones, and temporary obstacles. Tesla currently leads in data scale, having built an efficient closed-loop data system through shadow mode, automatic labeling, and replay training.
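One common way to describe shadow mode is that the model runs silently alongside the human driver and frames where the two disagree are flagged for upload. The sketch below illustrates that selection step only; it is an assumption about the general idea, not a description of any manufacturer's actual pipeline, and the threshold and tuple layout are invented for the example.

```python
from typing import List, Tuple

# (frame id, steering applied by the human, steering proposed by the model)
Frame = Tuple[int, float, float]


def shadow_mode_triggers(frames: List[Frame], threshold: float = 0.2) -> List[int]:
    """Return the ids of frames where the silently running model
    disagrees with the human driver by more than `threshold`."""
    return [frame_id
            for frame_id, human_steer, model_steer in frames
            if abs(human_steer - model_steer) > threshold]


frames = [(0, 0.0, 0.05), (1, 0.1, 0.5), (2, -0.3, -0.25)]
flagged = shadow_mode_triggers(frames)
```

Only the flagged frames need to be labeled and fed back into training, which is how a large fleet can surface rare scenarios without uploading every second of driving.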

End-to-end training also follows a “scaling law”: larger models, more data, and longer training typically yield better performance, which drives investment in large-scale cloud GPU clusters. Vehicle-side and cloud-side computational power are both key competitive factors in the autonomous driving industry. Vehicle-side systems must meet low-latency and high-reliability requirements, while the cloud handles large-scale training. Currently, most teams rely on training resources at the thousand-GPU level.

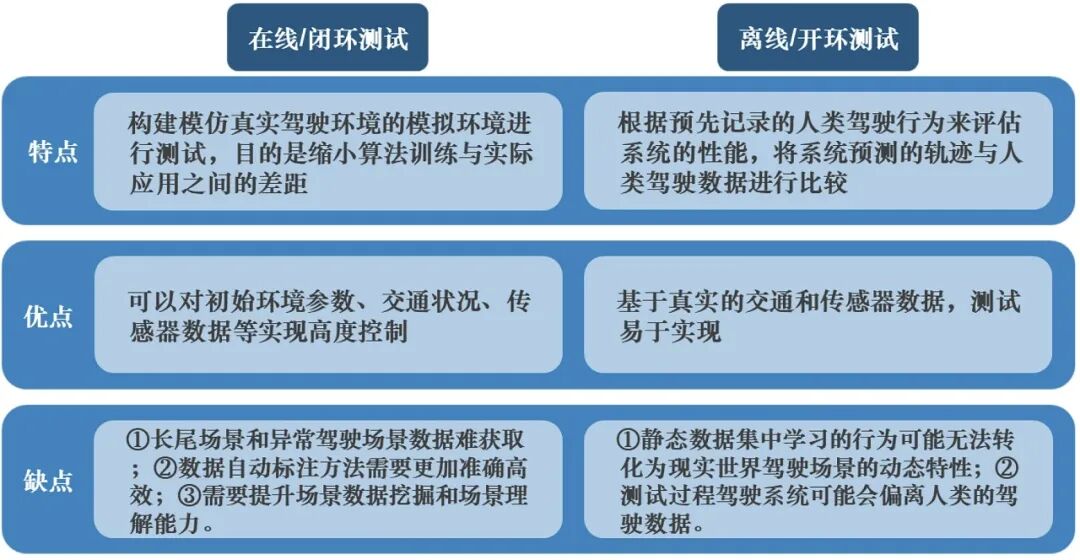

Validation is another major challenge for end-to-end systems. Traditional offline metrics alone cannot adequately assess real-world performance. Open-loop (offline) evaluation compares model outputs with human trajectories, which is simple but lacks interactivity testing. Closed-loop (online) simulation can test system interaction and recovery capabilities, but constructing high-fidelity simulation environments covering long-tail scenarios is itself challenging. A validation system integrating offline evaluation, closed-loop simulation, and real-world shadow testing/progressive deployment can be constructed. While world models partially address long-tail data gaps, the validation risks introduced by their generation biases cannot be ignored.
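The gap between the two evaluation styles shows up even in a toy lane-keeping setup. In the sketch below, two policies match the human log perfectly in open-loop evaluation, yet only one of them recovers when closed-loop simulation feeds its own errors back in. The dynamics, the drift term, and both policies are invented for illustration.

```python
from typing import Callable, List, Tuple

Policy = Callable[[float], float]  # lateral offset (m) -> steering correction (m/step)


def open_loop_error(policy: Policy, log: List[Tuple[float, float]]) -> float:
    """Offline metric: mean absolute gap between the policy's action and
    the human's action on logged states. The policy never drives."""
    return sum(abs(policy(state) - action) for state, action in log) / len(log)


def closed_loop_max_offset(policy: Policy, start_offset: float,
                           steps: int = 20, drift: float = 0.05) -> float:
    """Online metric: let the policy drive in a toy simulator with a
    constant sideways drift and report the worst offset it reaches."""
    offset = start_offset
    worst = abs(offset)
    for _ in range(steps):
        offset = offset + policy(offset) + drift
        worst = max(worst, abs(offset))
    return worst


def recovering(offset: float) -> float:
    """Steers back toward the lane centre from any offset."""
    return -0.5 * offset


def brittle(offset: float) -> float:
    """Behaves like the human near the centre, does nothing elsewhere."""
    return -0.5 * offset if abs(offset) <= 0.1 else 0.0


# Human log that only covers states close to the lane centre.
log = [(s, -0.5 * s) for s in (-0.1, -0.05, 0.0, 0.05, 0.1)]
```

Both `open_loop_error(recovering, log)` and `open_loop_error(brittle, log)` are zero, so the offline metric cannot tell the policies apart. Starting the simulation at 0.5 m, `recovering` pulls back toward the centre while `brittle` keeps drifting, a difference that only the closed-loop test exposes.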

Key characteristics, advantages, and disadvantages of online/closed-loop testing vs. offline/open-loop testing

Interpretability and catastrophic forgetting are two unavoidable issues for end-to-end systems. End-to-end models are inherently “black boxes,” yet engineering deployment and regulatory compliance require interpretability, especially the ability to trace decisions after accidents or abnormal situations. Strategies include running a vision-language model in parallel (to convert intermediate representations into readable descriptions), designing modular checkpoints, and incorporating rule constraints during training. As for catastrophic forgetting, fine-tuning a model on new data to improve specific complex scenarios can weaken its original capabilities, and version rollbacks have already occurred in practice for this reason. Mitigation strategies include replaying old samples, freezing weights, and other techniques.
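Old-sample replay can be as simple as reserving part of every fine-tuning batch for data the model already handles well. The sketch below shows one way to build such a batch; the function name, the 25% replay ratio, and the sample labels are illustrative assumptions.

```python
import random
from typing import List, Sequence, Tuple

Sample = Tuple[str, int]  # (scenario label, sample id)


def build_finetune_batch(new_data: Sequence[Sample],
                         replay_buffer: Sequence[Sample],
                         batch_size: int = 8,
                         replay_ratio: float = 0.25,
                         seed: int = 0) -> List[Sample]:
    """Mix old samples into a fine-tuning batch so the model keeps
    seeing scenarios it has already learned."""
    rng = random.Random(seed)
    n_old = min(len(replay_buffer), int(batch_size * replay_ratio))
    n_new = batch_size - n_old
    # Old samples are drawn without replacement, new ones with replacement.
    batch = rng.sample(list(replay_buffer), n_old)
    batch += [rng.choice(list(new_data)) for _ in range(n_new)]
    rng.shuffle(batch)
    return batch


new_data = [("construction_zone", i) for i in range(3)]
replay_buffer = [("highway", i) for i in range(10)]
batch = build_finetune_batch(new_data, replay_buffer)
```

With the defaults, two of the eight samples in `batch` come from the highway replay buffer. Raising `replay_ratio` protects old behavior more strongly at the cost of slower improvement on the new scenario.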

Differences in technological directions

In terms of technological path selection, the industry exhibits different evolutionary strategies. Tesla adheres to a pure vision-based approach, constructing a single end-to-end model using BEV+Transformer+occupancy networks, enabling rapid iteration through massive shadow mode data and replay training mechanisms. In contrast, mainstream domestic manufacturers such as Huawei, XPeng, and Li Auto generally adopt “modular end-to-end” or “dual-system” architectures, pursuing performance upper limits while retaining system interpretability and emergency handling capabilities.

It is important to stress that deploying this technology requires not only cutting-edge concepts but also solid engineering foundations. The efficiency of the closed-loop data system, the scale of deployed computational power, and the completeness of the validation system together determine whether an end-to-end system can be deployed stably and keep evolving. “Data + computational power” is therefore the core of end-to-end competition, which explains why leading companies with a resource advantage hold a clear first-mover advantage in bringing end-to-end systems to production.

Final remarks

Realizing end-to-end autonomous driving depends on a complete technology chain. At the perception level, the evolution from traditional detection to BEV and spatiotemporal occupancy networks provides richer environmental representations for decision-making. At the decision-making level, combining imitation learning, reinforcement learning, and world-model simulation gives the system predictive and planning capabilities. Engineering deployment is supported by closed-loop data systems, compute clusters, and multi-layered validation, and must also address practical challenges such as model interpretability and catastrophic forgetting. Current progress remains heavily constrained by data quality and the scale of computational power, which together determine how quickly end-to-end systems move from proof of concept to mass production.