Decoding IROS 2025: Six Definitive Trends Shaping China's Robotics Industry

10/24 2025

On October 19, 2025, the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2025), one of the two flagship academic conferences in robotics, officially opened at the Hangzhou International Expo Center. With 'Frontiers of Human-Robot Collaboration' as its theme, the event drew more than 7,000 experts, scholars, and industry representatives from 69 countries and regions, along with nearly 200 leading robotics companies, to explore the deep integration of artificial intelligence and robotics.

This marks IROS's return to mainland China nearly two decades after its 2006 Beijing edition, a reflection of how rapidly China's robotics research has advanced. As host, China's robotics industry answered the conference theme with a panoramic display of embodied AI: from agile, bionic humanoid robots to industrial solutions spreading across sectors and the core components that set performance limits. Together, Chinese companies outlined a distinct industrial landscape characterized by 'software-hardware integration, open-source collaboration, and deep vertical specialization.'

Key Highlights

· Open-source ecosystems accelerate the adoption of embodied AI

· Cutting-edge technology and commercialization advance in tandem

· Industry giants empower sectors with scenarios and data

· End-to-end ('full-link') data solutions form the cornerstone of physical-world understanding

· Full-stack self-research and vertical integration build core competitive barriers

· 'Perception-decision-control' closed loops keep evolving robot capabilities

01 Open-Source Ecosystems Accelerate Embodied AI Popularization

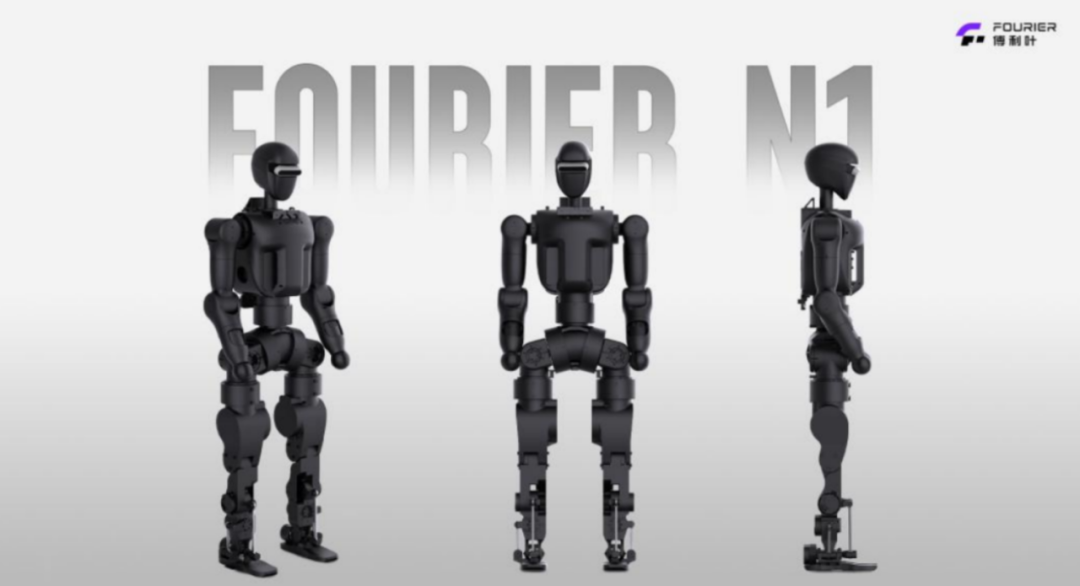

Case Study: Fourier N1 Open-Source Humanoid Robot, Fourier Nexus Open-Source Platform

In an era of rapid embodied AI development, Fourier continues to advance its open-source strategy of 'hardware openness + algorithm sharing + data interoperability,' building a global collaboration system through its Fourier Nexus open-source platform. The platform has so far opened key modules covering robot structural design, actuator control, motion planning algorithms, and multimodal perception interfaces, providing a complete experimental and R&D foundation for research, education, and developer communities.

In line with its 'fully open-source' philosophy, Fourier's research results also feed into global collaboration: multiple research papers built on Fourier's GR series platform have been published in authoritative journals, demonstrating that its open-source technology stack supports both academic research and engineering applications.

Core Technology: The N1's openness goes beyond philosophy. The release includes a complete bill of materials (BOM), manufacturing-ready structural drawings, detailed assembly guides, and basic operational software code. This deep openness aims to let developers worldwide fully replicate the N1 in the shortest possible time, significantly lowering the barrier to hardware R&D.

Embodied Applications: During R&D, the N1 underwent long-term, high-intensity testing, including more than 1,000 hours on complex outdoor terrain. This ensures the robot can stably traverse 15°-20° slopes and climb 20 cm stairs, demonstrating its motion adaptability in unstructured environments.
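
For reference, a slope angle converts to the more familiar grade (rise over run) through the tangent; this is plain trigonometry, not an additional figure reported by Fourier:

```latex
\text{grade} = \tan\theta, \qquad
\tan 15^\circ \approx 0.268 \;(\approx 27\%), \qquad
\tan 20^\circ \approx 0.364 \;(\approx 36\%)
```

So the quoted slope range corresponds to roughly a 27%-36% grade.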

02 Dual Breakthroughs in Technology and Commercialization

Case Study: Stardust Intelligence Half-Body Robot Astribot S1-U, AI System DuoCore

Stardust Intelligence showcased upgrades across its full-stack 'body-teleoperation-model' technology, highlighted by cable-driven transmission, an ultra-remote teleoperation system, and the AI system DuoCore.

As the world's first team to mass-produce cable-driven transmission in humanoid robots, Stardust Intelligence follows the philosophy of 'not adapting AI to robots, but making robots natively compatible with AI,' and built the AI system DuoCore to create robots suited to future needs. Its mass-produced cable-driven AI robots give it a differentiated advantage over traditional rigid-transmission robots.

Core Technology: The cable-driven transmission passes motor force to the end-effector with high transparency, enabling precise force feedback. This gives the robot a fine sense of force, akin to 'a blind person opening a door,' while passive compliance makes human-robot interaction safer, so the robot resists damage even during actions such as 'table knocking' or 'drumming.'
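
Why does a cable drive give force sensing? A common simplified model treats a pull-pull cable transmission as two pretensioned linear springs: the mismatch between motor-side and joint-side encoder angles gives the cable stretch, and the tension difference times the joint pulley radius gives the joint torque. The sketch below assumes that model; `CableJoint`, `contact_detected`, and all parameter values are hypothetical illustrations, not Astribot's design. In this same model, the finite cable stiffness is also a source of passive compliance, since an impact first stretches the cable.

```python
from dataclasses import dataclass


@dataclass
class CableJoint:
    """Hypothetical pull-pull cable transmission, each cable a pretensioned linear spring.

    Parameter values are illustrative assumptions, not Astribot specifications.
    """
    r_motor: float     # motor pulley radius [m]
    r_joint: float     # joint pulley radius [m]
    stiffness: float   # effective stiffness of each cable [N/m]
    pretension: float  # static tension in both cables at rest [N]

    def joint_torque(self, theta_motor: float, theta_joint: float) -> float:
        """Joint torque [N*m] inferred from motor- and joint-side encoder angles [rad]."""
        # Stretch = cable wound in by the motor minus cable taken up by the joint.
        stretch = self.r_motor * theta_motor - self.r_joint * theta_joint
        t_pull = self.pretension + self.stiffness * stretch     # tightening side
        t_release = self.pretension - self.stiffness * stretch  # loosening side
        if min(t_pull, t_release) < 0:
            raise ValueError("a cable went slack; the linear model no longer applies")
        # Net torque is the tension difference acting at the joint pulley radius.
        return self.r_joint * (t_pull - t_release)


def contact_detected(joint: CableJoint, theta_motor: float, theta_joint: float,
                     expected_torque: float, threshold: float) -> bool:
    """Flag contact when measured torque departs from the torque the motion plan expects."""
    measured = joint.joint_torque(theta_motor, theta_joint)
    return abs(measured - expected_torque) > threshold
```

For example, `CableJoint(r_motor=0.01, r_joint=0.02, stiffness=20000.0, pretension=50.0).joint_torque(0.2, 0.095)` corresponds to 0.1 mm of cable stretch and evaluates to about 0.08 N·m.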

Data Engine: At the venue, Stardust Intelligence offered hands-on teleoperation sessions that demonstrated the system's robustness and safety. However, the core value of its teleoperation system (RUI) lies in serving as a data engine for AI training: it efficiently collects high-quality, multi-dimensional operational data from a first-person perspective, reportedly far exceeding typical industry data-collection efficiency and greatly accelerating AI model iteration.
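
To make the 'data engine' idea concrete: teleoperation turns each demonstration into a time series of what the robot observed and what the operator commanded, which is the raw material for imitation learning. The schema below is a hypothetical sketch; `Step`, `Episode`, and their fields are illustrative assumptions, not Stardust Intelligence's data format.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    t: float                      # timestamp [s]
    ego_image: str                # key/path of the first-person camera frame (hypothetical)
    joint_positions: list[float]  # robot state [rad]
    action: list[float]           # operator command mapped onto robot joints


@dataclass
class Episode:
    task: str
    steps: list[Step] = field(default_factory=list)

    def record(self, step: Step) -> None:
        # Reject out-of-order samples so the episode stays a valid time series.
        if self.steps and step.t <= self.steps[-1].t:
            raise ValueError("timestamps must be strictly increasing")
        self.steps.append(step)

    def duration(self) -> float:
        """Seconds between the first and last recorded step."""
        return self.steps[-1].t - self.steps[0].t if len(self.steps) > 1 else 0.0

    def to_training_pairs(self):
        """(observation, action) pairs, the usual input format for imitation learning."""
        return [((s.ego_image, s.joint_positions), s.action) for s in self.steps]
```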

03 Strong Entry from Scenario-Driven Players

Case Study: Meituan Robotics 'Full Suite'

At IROS 2025 in Hangzhou, the Meituan Robotics Research Institute used its annual academic conference to showcase its latest progress in low-altitude logistics and embodied AI, from technological breakthroughs to commercial validation.

According to a representative of the Meituan Robotics Research Institute, since its founding in 2022 the institute has focused on building an open research platform that connects industry and academia, using real-world scenarios to feed frontier research and speed up the application of scientific results. Over the past year, it has accelerated innovation in core areas such as low-altitude logistics and embodied AI, steadily converting frontier academic exploration into practical industrial value.

Core Strategy: By upgrading its cloud-based large vision models, Meituan has improved recognition of complex small targets such as kites, balloons, and cranes, which are obstacles in low-altitude flight. The company's strategic positioning has evolved to 'retail + technology': retail is the stage, and technology is the tool for expanding and optimizing it. Through its strategic investment arm, it has also invested in a group of robotics companies, including Unitree Robotics, Autovariable Robotics, and Xinghaitu, building an 'alliance circle.'

Deployment: After extensive testing and validation, these results have been applied in complex delivery scenarios such as parks. In addition, the 3rd Low-Altitude Economy Intelligent Flight Management Challenge attracted 120 university teams from around the world, including, for the first time, teams made up entirely of overseas members, reflecting China's global influence in the low-altitude economy.

04 'Full-Modality' Embodied Data Full-Link Solution

Case Study: ZeroPower Wheel-Arm Humanoid Robot ZERITH H1

In late May this year, ZeroPower launched the wheel-arm humanoid robot ZERITH H1. It adopts an 'anthropomorphic' structure paired with a 7-axis high-precision robotic arm with a 1.8 m reach, able to reach down to the ground and up to a height of 2 m, which gives it a large working space around its whole body.

ZeroPower is clearly focused on commercial service scenarios such as hotels and restaurants, aiming for a 'single-point breakthrough' in these vertical scenarios and then transferring those capabilities across a network of sites. The company has reportedly secured orders worth tens of millions of yuan and plans to deliver 500 robots in 2025 as it moves into mass production.

Core Technology: To address missing data modalities, a common problem in training embodied AI models, ZeroPower introduced a 'full-modality' end-to-end (full-link) embodied data solution built on its self-developed embodied data management platform. A 'guided' data acquisition workflow helps users quickly learn to capture data for complex tasks, ensuring data quality and improving acquisition efficiency.
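
A minimal sketch of how a guided workflow might catch missing modalities at capture time: check each recorded episode against a required set and report the gaps before the data reaches training. The modality names in `REQUIRED_MODALITIES` and the dict-based episode format are assumptions for illustration, not ZeroPower's platform API.

```python
# Hypothetical set of modalities every episode must contain.
REQUIRED_MODALITIES = {"rgb", "depth", "joint_state", "tactile", "language"}


def missing_modalities(episode: dict) -> set[str]:
    """Required modalities that are absent or empty in one episode."""
    present = {name for name, data in episode.items() if data}
    return REQUIRED_MODALITIES - present


def audit(episodes: dict[str, dict]) -> dict[str, set[str]]:
    """Map episode id -> missing modalities, listing only incomplete episodes."""
    report = {}
    for ep_id, ep in episodes.items():
        gaps = missing_modalities(ep)
        if gaps:
            report[ep_id] = gaps
    return report
```

Because `audit` returns an empty dict when every episode is complete, it could gate a capture session so that only the flagged episodes need to be re-recorded.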

Model Iteration: The solution also integrates training visualization tools that record, monitor, and analyze data throughout model training, helping developers tune parameters systematically and iterate on their embodied AI models efficiently.
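
The record-and-monitor idea can be sketched with a small, framework-free recorder. `TrainingMonitor` is hypothetical: it logs arbitrary per-step metrics and flags a plateau when the mean loss over the latest window improves on the previous window by less than `min_improvement`. Real tooling would add plots and persistent storage.

```python
from collections import deque


class TrainingMonitor:
    """Hypothetical minimal recorder: logs per-step metrics and flags loss plateaus."""

    def __init__(self, window: int = 5, min_improvement: float = 1e-3):
        self.history = []  # (step, metrics dict) tuples for later analysis or plotting
        self.window = window
        self.min_improvement = min_improvement
        self._recent = deque(maxlen=2 * window)  # last two windows of loss values

    def log(self, step: int, **metrics: float) -> None:
        self.history.append((step, metrics))
        if "loss" in metrics:
            self._recent.append(metrics["loss"])

    def plateaued(self) -> bool:
        """True if the latest window's mean loss barely improves on the window before it."""
        if len(self._recent) < 2 * self.window:
            return False  # not enough data yet
        values = list(self._recent)
        older = sum(values[: self.window]) / self.window
        newer = sum(values[self.window:]) / self.window
        return older - newer < self.min_improvement
```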

05 Full-Stack Self-Research Constructs Technological Closed Loops

Case Study: Magic Atom with its 'Full Family' (Bipedal Humanoid Robot MagicBot Z1, Wheeled MagicDog-W, Industrial-Grade MagicDog Y1)

Magic Atom has reached an in-house hardware development rate of up to 90%, covering key components such as full joint modules, dexterous hands, and reducers. It has also developed its own general-purpose embodied AI large model, achieving breakthroughs on both the hardware and software sides.

In terms of ecosystem construction and commercialization, Magic Atom launched the 'Thousand Scenarios Co-Creation Plan' in March 2025, aiming to collaborate with 1,000 partners to jointly create 1,000 humanoid robot application scenarios.

Core Technology: Its core is a 'full-stack self-research' R&D system that combines this largely in-house hardware with an independently developed general-purpose embodied AI system. The approach aims to reduce supply chain risks and manufacturing costs, laying the foundation for mass production and commercialization.

Data-Driven: Magic Atom works with strategic partners to continuously collect real-world data from production environments and has reportedly accumulated millions of high-value training samples. These data are used to optimize its self-developed 'Atom Vientiane' large model, which follows a fast-slow dual-system, end-to-end technical route to improve robots' understanding and task execution in complex scenarios.
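
A 'fast-slow dual system' generally pairs a slow, large model that reasons about the task at low frequency with a fast policy that produces control actions at a high rate. The toy loop below illustrates only that scheduling pattern, under stated assumptions: `SlowPlanner` and `FastController` are hypothetical stand-ins (a lookup and a proportional controller), not components of the 'Atom Vientiane' model, and nothing here is trained end to end.

```python
class SlowPlanner:
    """Hypothetical stand-in for a large model that reasons slowly."""

    def plan(self, observation: float, instruction: str) -> float:
        # Toy "reasoning": map the instruction to a target position.
        return 1.0 if instruction == "reach shelf" else 0.0


class FastController:
    """Hypothetical stand-in for a high-rate policy tracking the latest subgoal."""

    def __init__(self, gain: float = 0.5):
        self.gain = gain

    def act(self, observation: float, subgoal: float) -> float:
        return self.gain * (subgoal - observation)  # proportional step toward subgoal


def run(instruction: str, steps: int, slow_every: int) -> list[float]:
    planner, controller = SlowPlanner(), FastController()
    position, subgoal = 0.0, 0.0
    trajectory = []
    for t in range(steps):
        if t % slow_every == 0:  # slow system refreshes the plan at low frequency
            subgoal = planner.plan(position, instruction)
        position += controller.act(position, subgoal)  # fast system acts every tick
        trajectory.append(position)
    return trajectory
```

With `slow_every=10`, the planner runs once per ten control ticks while the controller acts on every tick; that is the point of the split, since expensive reasoning does not throttle the control rate.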

06 Fusion Evolution of Vision and Touch

On the IROS 2025 exhibition floor, as robots dexterously grasped objects or autonomously navigated complex environments, a silent revolution in perception unfolded. Robots are evolving from 'seeing' to 'understanding' and from 'touching' to 'touching accurately,' with sensor technology companies leading this perception revolution by equipping robots with 'super senses.'

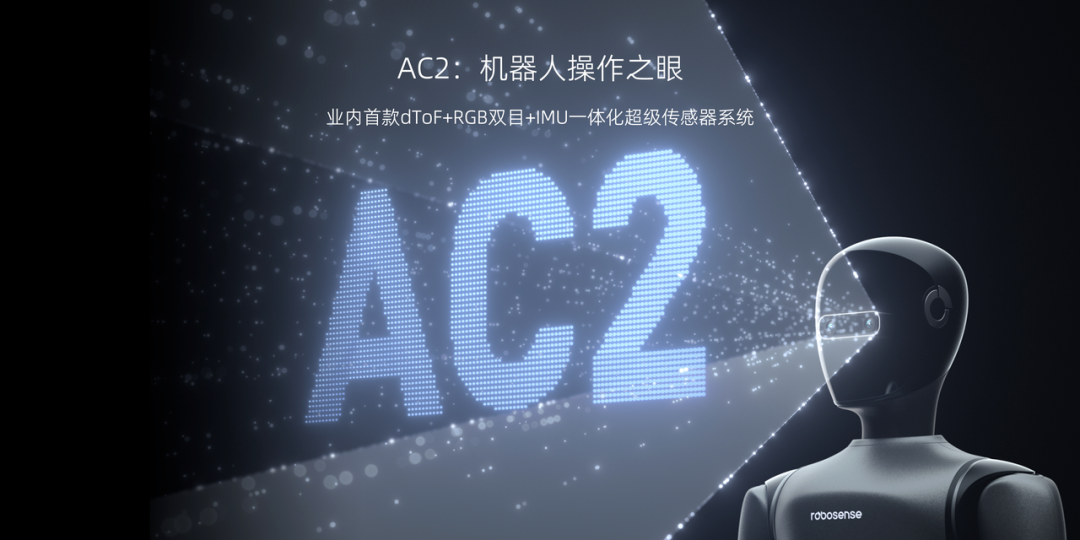

RoboSense: Released the AC2 vision system, positioned as the 'eyes for robotic operations.' Rather than a simple stack of sensors, it deeply fuses solid-state area-array dToF (direct time-of-flight) sensors, RGB stereo cameras, and IMUs (Inertial Measurement Units). This integrated design lets it output depth, image, and motion data either fused or independently, forming a coherent perception field.
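
Fusing a depth sensor with an RGB camera depends on calibration: each depth point is moved into the camera frame with an extrinsic rotation and translation, then projected to a pixel with the pinhole intrinsics (fx, fy, cx, cy). The pure-Python sketch below assumes that standard model; the function names and calibration values are hypothetical, and lens distortion and time synchronization are deliberately omitted. None of it describes the AC2's actual SDK.

```python
def transform(point, rotation, translation):
    """Apply a rigid transform: 3x3 row-major rotation, then a 3-vector translation."""
    return [sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
            for i in range(3)]


def project(point_cam, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point to pixel coordinates (u, v)."""
    x, y, z = point_cam
    if z <= 0:
        return None  # point is behind the camera
    return (fx * x / z + cx, fy * y / z + cy)


def colorize_depth_points(points_tof, rotation, translation, intrinsics, width, height):
    """Pair each depth point with the RGB pixel it lands on (hypothetical calibration)."""
    fused = []
    for p in points_tof:
        uv = project(transform(p, rotation, translation), *intrinsics)
        if uv is not None and 0 <= uv[0] < width and 0 <= uv[1] < height:
            fused.append((p, (int(uv[0]), int(uv[1]))))
    return fused
```

With an identity rotation, a 5 cm translation along x, and intrinsics (500, 500, 320, 240), a point 1 m straight ahead of the depth sensor lands at pixel (345, 240) of a 640x480 image.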

DynamiVision: Its core technology is bionic, with an event camera that mimics the biological retina. This mechanism offers significant advantages: microsecond-level latency, extremely high temporal resolution, and a dynamic range above 130 dB. In extreme scenes with strong light, weak light, or fast motion, robots therefore avoid the blurring or overexposure that affects conventional cameras, keeping 'quick eyes and fast hands.'
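
The retina-like behavior is commonly described by an idealized event-generation model: each pixel works independently and fires an event, carrying a timestamp and a polarity, whenever its log intensity has changed by a contrast threshold since its last event. The single-pixel sketch below assumes that model; the function name and threshold value are illustrative, and real sensors add noise, per-pixel threshold mismatch, and refractory periods.

```python
import math


def generate_events(samples, threshold=0.2):
    """Idealized event-camera pixel.

    `samples` is a time-ordered list of (t, intensity > 0) readings for ONE pixel.
    Returns (t, polarity) events: +1 for brighter, -1 for darker.
    """
    events = []
    _, first_intensity = samples[0]
    ref = math.log(first_intensity)  # log level at the last event
    for t, intensity in samples[1:]:
        level = math.log(intensity)
        # A large change can cross the threshold several times -> several events.
        while level - ref >= threshold:
            ref += threshold
            events.append((t, +1))
        while ref - level >= threshold:
            ref -= threshold
            events.append((t, -1))
    return events
```

Because the model reacts to changes in log intensity rather than absolute brightness, the same relative change produces the same events in dim and bright light. For scale, using the 20·log10 convention common for image sensors, 130 dB corresponds to an intensity ratio of 10^6.5, roughly 3.2 million to one.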
