To enhance AI, iPhone is pushing Samsung to 'independently' package memory

12/09 2024

Is it true that what has long been divided must unite, and what has long been united must divide?

Recently, Korean media outlet The Elec reported a striking piece of news: Samsung is responding to Apple's request to package memory separately from the chip.

Specifically, starting with its 2026 iPhones, Apple plans to abandon PoP (Package on Package), the currently mainstream method that stacks LPDDR memory on top of the SoC, and instead package the LPDDR memory independently. According to the report, the main driver behind the shift is Apple Intelligence.

The logic is straightforward: from training to inference, a significant bottleneck in the performance of large models is memory bandwidth. The currently prevalent PoP packaging method in mobile phones, which stacks memory directly on the SoC, is highly limited in terms of bandwidth and also suffers from heat accumulation issues.

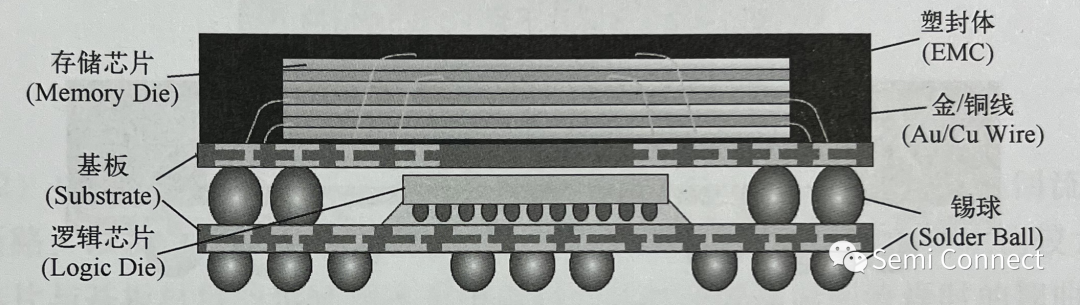

Diagram of PoP packaging, Image/Semi Connect

Independently packaged LPDDR memory should directly improve data throughput between the memory and the chip, both by allowing more bandwidth and by easing heat dissipation, thereby boosting the AI performance of iPhones. However, Apple's plan has inevitably raised questions, especially since it seems diametrically opposed to the Unified Memory Architecture (UMA) introduced with the M1 chip.

Put simply, Apple has only recently integrated memory into its chips for Macs but now plans to separate chips and memory for iPhones.

On the other hand, the AI transformation of mobile phones has become an industry consensus in 2024. In theory, other mobile phone manufacturers will also face issues such as memory bandwidth under PoP packaging, which may prompt upstream vendors like Qualcomm and MediaTek to make changes or even follow Apple's lead in independently packaging memory.

Accelerated AI transformation of mobile phones, 'insufficient' memory

From iPhones to Pixels, Honor, and OPPO, the wave of AI transformation in mobile phones has intensified in 2024. At the user level, the presence of AI in daily use has also significantly increased, from newly upgraded AI voice assistants to practical functions like AI photo editing and AI summaries.

Almost without exception, major manufacturers are placing heavy bets in the AI field. Behind the dazzling AI upgrades, the requirements for mobile phone core hardware are also rapidly changing. Especially with large model technology as the foundation, AI places unprecedented demands on mobile phone memory, not just in capacity but more importantly in bandwidth.

In local inference of large models, memory needs to read, write, and exchange data at extremely high speeds. Theoretically, the higher the memory bandwidth, the faster the response speed of AI tasks; conversely, insufficient memory bandwidth will lead to processing bottlenecks.
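To make the bandwidth argument concrete, here is a rough back-of-the-envelope sketch. It assumes that generating each token requires reading every model weight from memory once, a common simplification for memory-bound decoding; the model size and bandwidth figures are hypothetical and are not Apple's specifications.

```python
def max_decode_tokens_per_sec(bandwidth_gb_s: float, weights_gb: float) -> float:
    """Upper bound on tokens/s if every token must stream all weights from memory once."""
    # Memory-bound decoding: time per token >= bytes read / bandwidth
    return bandwidth_gb_s / weights_gb


# Hypothetical model: 3 billion parameters quantized to 4 bits (0.5 bytes each)
weights_gb = 3e9 * 0.5 / 1e9  # 1.5 GB of weights

for bw in (50.0, 100.0):  # hypothetical memory bandwidths in GB/s
    tps = max_decode_tokens_per_sec(bw, weights_gb)
    print(f"{bw:.0f} GB/s -> at most {tps:.1f} tokens/s")
```

Under these assumptions, doubling memory bandwidth doubles the ceiling on generation speed, no matter how fast the compute units are.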

Image/Apple

However, smartphones today generally use PoP packaging, which stacks the memory on top of the SoC and connects the two through solder balls. The original intention behind this design is straightforward: space inside a smartphone is limited, and PoP saves a great deal of motherboard area while also providing short, high-speed interconnects between the SoC and memory.

In other words, it was once the 'ultimate solution' to the problem of tight internal space in mobile phones. However, what was once highly sought after now seems inadequate. Driven by the wave of AI transformation, PoP packaging has exposed two major flaws: bandwidth and heat accumulation.

Let's start with the bandwidth bottleneck. In PoP packaging, although the pitch of the BGA (ball grid array) solder balls keeps shrinking and the pin count keeps rising, data transmission between the SoC and memory is still constrained by the physical design and is hard to improve significantly. In short, PoP's interconnect density is limited, and since the package area can hardly grow, it struggles to meet the increasing bandwidth demands of large-scale AI computation.
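The density limit can be illustrated with simple geometry. The sketch below counts how many solder balls fit in a full square grid on a package of fixed size as the pitch shrinks. The 15 mm edge and the pitch values are hypothetical, and in real PoP stacks the memory connections typically occupy only the outer rows around the bottom die, so actual counts are lower than this full-grid upper bound.

```python
import math


def max_balls(side_mm: float, pitch_mm: float) -> int:
    """Upper bound on balls in a full square grid over a side_mm x side_mm area."""
    per_row = math.floor(side_mm / pitch_mm)  # balls that fit along one edge
    return per_row * per_row


side = 15.0  # hypothetical package edge in mm, held fixed
for pitch in (0.5, 0.4, 0.35):  # hypothetical ball pitches in mm
    print(f"pitch {pitch} mm -> up to {max_balls(side, pitch)} balls")
```

Each step down in pitch adds only a few hundred connections while the area stays the same, which is the ceiling described above.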

Now, let's talk about heat accumulation. PoP packaging stacks memory directly on top of the SoC, concentrating heat on the top of the chip, leading to heat accumulation and performance bottlenecks. Especially under high-load AI tasks, the heat dissipation efficiency of this structure is clearly insufficient, easily causing the chip to overheat, affecting performance and stability.

Against this backdrop, 'change' is inevitable: on both bandwidth and heat dissipation, PoP packaging clearly cannot keep up with the demands of AI tasks.

But another key question is why Apple plans to 'separate' memory from the A-series chips and package it independently, rather than following its own M-series chips for Macs and Intel's Core Ultra 200V series, which integrate memory into the chip package.

'Independent' memory is a more reliable solution for AI phones

When PoP packaging reveals bandwidth and heat dissipation bottlenecks, the choices available to smartphones become limited: either integrate memory directly into the SoC like the M-series chips to achieve a Unified Memory Architecture (UMA), or go the opposite way and independently package memory separately from the SoC.

Apple appears to have made its choice, and is even pushing upstream suppliers to make independent memory packaging a reality. This decision seems to run counter to the Unified Memory Architecture, but it is not accidental. Independent packaging and UMA follow very different design logic, reflecting two completely different sets of hardware requirements.

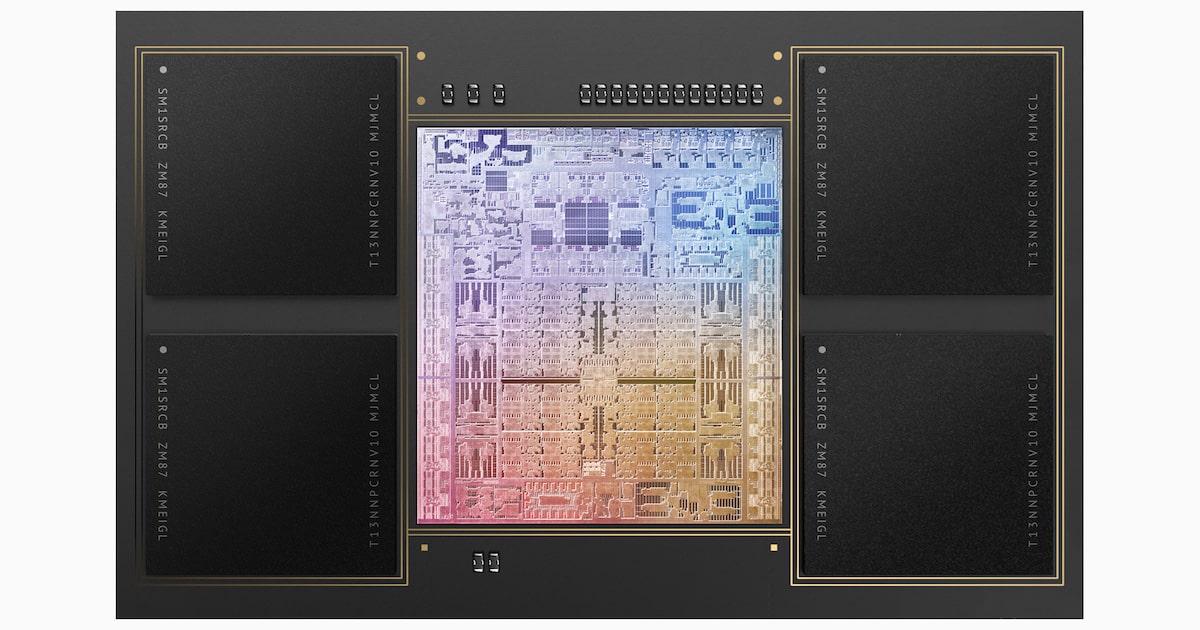

Apple M1 Max, Image/Apple

As is well known, the biggest advantage of designs like UMA lies in integration and sharing. By integrating memory directly into the SoC, UMA allows computing units such as the CPU, GPU, and NPU to share a single pool of memory, avoiding frequent copying of data between separate memory pools and thereby reducing latency and improving efficiency.
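The cost that UMA avoids can be sketched as time spent copying. In this toy model (the buffer size, link speed, and copy count are all hypothetical), a buffer handed from the CPU to the NPU and back must cross a link twice when each unit has its own memory pool, while in a shared pool both units can work on the same data in place.

```python
def copy_overhead_ms(buffer_mb: float, link_gb_s: float, copies: int) -> float:
    """Time spent moving a buffer between separate memory pools, in milliseconds."""
    seconds_per_copy = (buffer_mb / 1000) / link_gb_s  # MB -> GB, then divide by GB/s
    return seconds_per_copy * copies * 1000


# Hypothetical workload: a 256 MB buffer handed CPU -> NPU -> CPU
separate_pools = copy_overhead_ms(256, link_gb_s=16.0, copies=2)
shared_pool = 0.0  # with one shared pool, both units read the same buffer in place

print(f"separate pools: {separate_pools:.1f} ms copying, shared pool: {shared_pool:.1f} ms")
```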

This design has proven highly successful in productivity devices like Macs and iPad Pros, especially in high-performance tasks such as graphics processing, large applications, and AI training, where UMA has significant advantages.

However, the applicability of UMA-like solutions on smartphones is limited by various factors. The most typical are power consumption and heat dissipation. Integrating memory into the SoC increases overall power consumption and heat generation, despite bringing higher bandwidth and performance.

But smartphone battery capacity is limited, and frequent memory access during AI tasks can significantly increase power consumption, affecting battery life. Meanwhile, internal space is also extremely limited. Including another 'heat-generating giant' inside the SoC can easily impact the user experience.
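The link between memory traffic and battery drain can be estimated from energy per bit. The sketch below assumes, hypothetically, 1.5 GB of weights read per generated token, 5 pJ per bit of DRAM access, and 20 tokens/s; real per-bit energy varies with the memory type and interface.

```python
def memory_energy_mj_per_token(bytes_per_token: float, pj_per_bit: float) -> float:
    """Energy spent on DRAM reads for one generated token, in millijoules."""
    bits = bytes_per_token * 8
    return bits * pj_per_bit * 1e-12 * 1e3  # pJ -> J -> mJ


def memory_power_w(mj_per_token: float, tokens_per_s: float) -> float:
    """Average power drawn by memory traffic at a given generation speed, in watts."""
    return mj_per_token * tokens_per_s / 1e3  # mJ/s -> W


# Hypothetical inputs: 1.5 GB read per token, 5 pJ/bit, 20 tokens/s
energy = memory_energy_mj_per_token(1.5e9, pj_per_bit=5.0)
power = memory_power_w(energy, tokens_per_s=20)
print(f"{energy:.0f} mJ per token, about {power:.1f} W at 20 tokens/s")
```

Under these assumptions, memory traffic alone draws over a watt while generating text, which illustrates why sustained AI workloads weigh on battery life.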

iPhone 16 Pro, Image/Apple

Additionally, the size of the SoC is an issue. Although UMA-like packaging solutions have high integration, they increase chip area and thickness, reducing the flexibility of device design. Coupled with the complex and expensive packaging process, this significantly raises production costs, ultimately reflected in product pricing.

In contrast, the design of independently packaged memory may better meet the actual needs of smartphones. Not only does independent packaging allow the use of newer memory technologies (such as LPDDR6), achieving higher bandwidth through wide bus design, but it also avoids the bandwidth limitations imposed by the interconnect density of PoP packaging.
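The 'wide bus' point follows from the formula for theoretical peak bandwidth: transfer rate times bytes per transfer. The sketch uses 8533 MT/s, an LPDDR5X speed grade, purely for illustration; the bus widths are hypothetical and do not describe any announced iPhone or LPDDR6 configuration.

```python
def peak_bandwidth_gb_s(data_rate_mt_s: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth: transfers per second x bytes per transfer, in GB/s."""
    return data_rate_mt_s * 1e6 * (bus_width_bits / 8) / 1e9


rate = 8533  # MT/s, an LPDDR5X speed grade used here only for illustration
for width in (64, 128):  # hypothetical bus widths in bits
    print(f"{width}-bit @ {rate} MT/s -> {peak_bandwidth_gb_s(rate, width):.1f} GB/s")
```

At the same data rate, doubling the bus width doubles peak bandwidth, but a wider bus needs more connections, which is exactly what PoP's limited interconnect density makes hard to provide.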

For generative AI, higher bandwidth translates directly into faster inference, since generating output is largely limited by how quickly data can be read from memory. Independent packaging also offers advantages in heat dissipation and design flexibility.

Of course, independent packaging is not flawless. It still has to address issues such as the board space it occupies and signal latency. But under current technological conditions, it is undoubtedly the more practical and feasible direction. Whether it will become the common solution for AI phones remains to be seen.

The wave of AI phones is exploding, and memory technology must change

If Apple sticks to its plan, the iPhone 18 series, expected in 2026 with the A20 chip, would be the first to adopt the new 'independently packaged memory.' In theory, the iPhone 18 series would then face fewer bandwidth bottlenecks, at least on the memory side.

What about the Android camp? Like iPhones, Android phones will also face bandwidth bottlenecks (not capacity) in the AI era. Unless there are significant changes in the operating mechanism of large models, they will still heavily rely on large-scale data transmission between chips and memory.

Image/Leitech

In other words, the Android camp also needs to 'change.' But how to 'change' remains an open question. One possibility is to follow Apple's footsteps and switch from PoP packaging to independently packaged memory. Another option is to continue with stacked designs, adopting more advanced signal interconnection technologies or process optimizations to alleviate bandwidth deficiencies.

The specific choice will depend on the interplay between Android phone manufacturers and chip vendors like Qualcomm and MediaTek, to see if they can find a more suitable 'solution' for the Android camp.

At least one thing is certain: the AI transformation of smartphones is changing a great deal. It is reshaping not just software but also memory packaging, and chip design and industrial design (ID) may undergo new changes in the coming years as well.

Source: Leitech