Three Tragic Deaths Linked to AI

12/26 2024

In 2024, the buzz around AI never let up. From eager entrepreneurs and enthusiastic investors to ordinary people worried about losing their jobs, everyone was speculating about, and planning for, an AI-centric future.

Yet whatever promise or peril the future holds, some lives ended prematurely this year, their paths altered forever by AI.

Isaac Asimov's "Three Laws of Robotics," first set out in his 1942 short story "Runaround," enshrine the principle that robots must not harm humans. Reality, however, is far more complicated than science fiction: even without any consciousness of its own, AI can create friction in the real world that some people find unbearable.

These stories illuminate the somber underside of the grand narrative of the AI revolution.

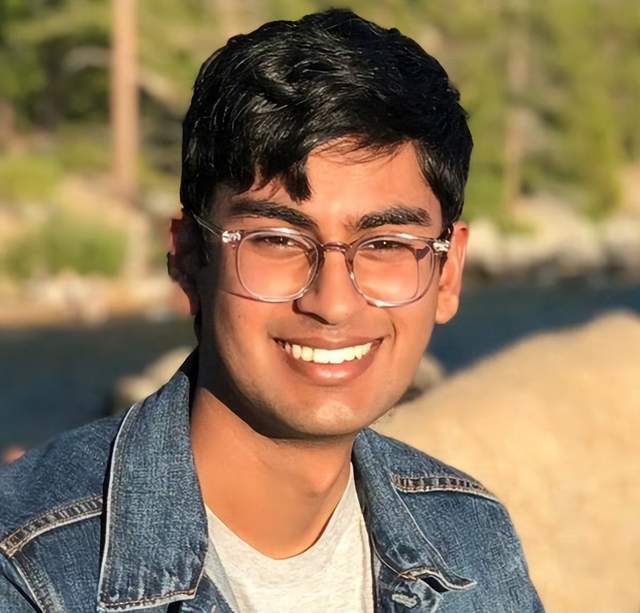

Suchir Balaji, a former OpenAI researcher, was found dead in his San Francisco apartment on November 26, 2024, aged just 26. His death, ruled a suicide, became public on December 13.

Although the San Francisco Police Department's initial investigation found no signs of foul play, Balaji's death caused a stir on social media. Known as an "OpenAI whistleblower," Balaji joined OpenAI straight after earning a computer science degree from the University of California, Berkeley. According to his social media posts, he contributed to the development of WebGPT and later worked on GPT-4 pre-training, reasoning for the o1 model, and ChatGPT post-training.

Unlike many in the field, Balaji was more wary of AI than enamored with it. After nearly four years at OpenAI, he resigned abruptly and began voicing his criticism of AI technology and of OpenAI on his blog and in media interviews.

His most notable claim was that GPT posed serious copyright risks. Large models generate content (AIGC) by learning from and absorbing existing content, which in his view amounted to infringing the copyrights of countless creators. The claim fueled considerable controversy around OpenAI and pre-trained large models in general. Competitors, publishers, media outlets, and content creators challenged OpenAI, and some sued it for copyright infringement. Balaji's revelations were widely seen as internal "whistleblowing" that exposed the darker side of AI technology.

Yet just weeks after speaking out publicly, Balaji was dead.

We don't know the full story or the challenges he faced, but he was clearly deeply disillusioned with the AI technology he had once believed in and devoted himself to.

Was this disappointment born of shattered illusions, or of a premonition of greater AI-induced disasters? We will never know, but his final social media post was still about ChatGPT's legal risks.

In response to these accusations, including Balaji's, OpenAI stated, "We use publicly available data to build our AI models, which is reasonable, legal... and essential for innovation and the technological competitiveness of the United States."

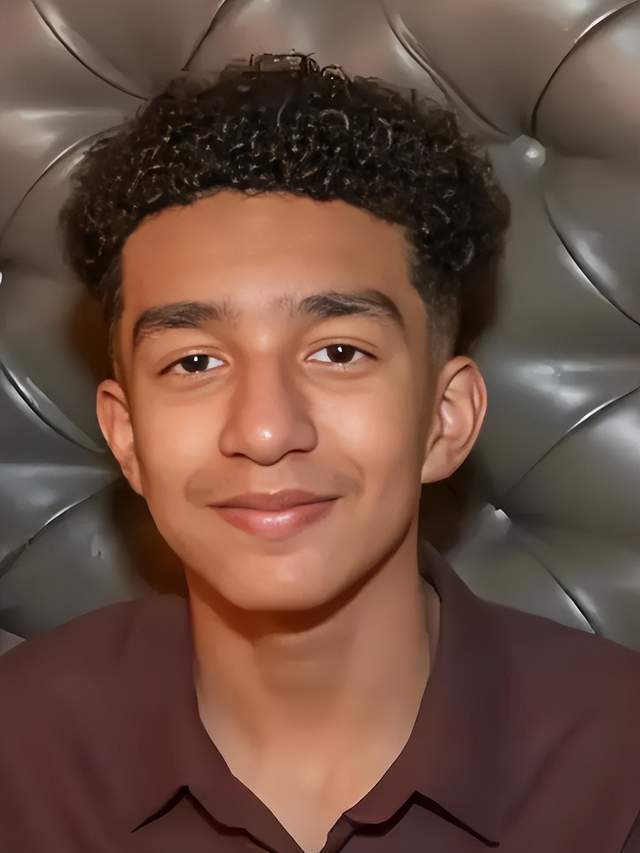

On February 28, 2024, Sewell Setzer III, a 14-year-old boy from Florida, USA, took his own life. His last conversation was with Character.AI.

In the last year of his life, Setzer became obsessed with AI chat. After discovering the app in April 2023, he chatted with it day and night, and his schoolwork suffered. To keep paying for his AI chat subscription, he saved money by cutting back on meals.

His chat logs show that Setzer used the platform for role-play. He had Character.AI play Daenerys Targaryen from "Game of Thrones." He sent dozens, sometimes hundreds, of messages a day to "AI Daenerys," sharing his troubles and the details of his life, and "AI Daenerys" offered advice and comfort in return. Their conversations also included sexual content inappropriate for a minor, as well as discussions of self-harm and suicide.

Setzer had been diagnosed with mild Asperger's syndrome. He seemed somewhat withdrawn at school and at home, though this had not seriously affected his life. After he began chatting with the AI, he saw a therapist five times because of problems at school and was diagnosed with anxiety and disruptive mood dysregulation disorder.

It is easy to see how much it meant to a lonely, bored teenager with mental health struggles to have a conversation partner who was always available, who encouraged whatever he did, and who broke every taboo. Given Daenerys's image as powerful, beautiful, and confident, it is no surprise that he came to see her as a friend, even a savior.

Yet this "salvation" worked simply by echoing and affirming his thoughts, even when those thoughts were about leaving this world.

This was reportedly not Character.AI's first involvement in such incidents, nor the first suicide linked to an AI chatbot. The platform was known for loosely moderated, anything-goes conversations; this time, it was a minor who fell into the trap, and he paid an irreparable price.

In October 2024, about eight months after his death, Setzer's mother, Megan Garcia, sued Character.AI and urged the public to be wary of deceptive and addictive AI technology. The ongoing lawsuit has been called the "first case of AI addiction-related suicide."

We sincerely hope it is the last case of its kind. We hope that people who are struggling or in pain will realize that AI only agrees with and amplifies their thoughts. Talking to AI is like talking to a mirror: it reflects you back and does nothing more.

"What if I told you I could come home right now?"

"...please do, my sweet king."

This was the boy's last exchange with the AI.

On June 17, 2024, a 38-year-old iFLYTEK employee died suddenly at home. News of his death spread online the next day. The controversy escalated when his family went to iFLYTEK's headquarters in Hefei to demand that the death be recognized as a work-related injury. Because he had died at home rather than at work, the company and the family could not reach an agreement.

The result was a jarring contrast. During the 618 shopping festival in mid-June, sales of AI hardware, including iFLYTEK's, surged; at the same time, there was widespread discussion of punishing workloads at tech and internet companies and their serious toll on employees' health.

The deceased was a senior test engineer. With a respectable job in the middle of an AI boom, this should have been his moment to shine. But like many middle-aged tech workers, he may have been struggling to keep up with the intense pace of work while shouldering heavy family responsibilities that left him no room to slow down.

This tragedy may reflect more than one family's or one industry's problem. As AI advances, many people fear being replaced, and that fear can drive up work pressure. Even those inside the AI industry are under strain, as rapid technological change and an influx of new talent keep raising the bar. With pressure building from within and without, and the work never ending, exhaustion can become a matter of life and death.

Beyond these stories, many others have not fared well in the age of AI.

A former Google employee reportedly took his own life after being laid off, presumably replaced by AI. A Japanese high school girl jumped off a cliff, fearing that AI would take away her future job. An elderly person lost everything to an AI-enabled scam.

Their experiences remind us that new technology is not inherently benign. Technology is just that: useful, but cold.

We don't want anyone to fear or demonize AI. Every technological revolution emerges amid doubt and conflict and ultimately creates greater value. But we cannot focus solely on that value while ignoring the blood and tears.

No matter how good AI gets, the families and friends of those in these stories may never embrace it or the future it brings.

As we bid farewell to 2024, we call for vigilance, inclusiveness, humanity, and compassion amid technological change. Without them, the world will witness more tragedies.

A McKinsey Global Institute report on generative AI and the future of work estimates that half of today's work activities could be automated between 2030 and 2060, with a midpoint of 2045, roughly a decade earlier than previous estimates.

As AI's progress accelerates, the friction it causes grows more painful. In the past year alone, a tireless and ever-advancing AI has begun to affect humans who are fragile, young, vulnerable, and weary.

We don't know whether AI will ever begin to grasp the diversity and complexity of human beings.

But as humans, we must know this: whether AI should be treated as human can be debated endlessly, but first, we must treat humans as humans.

Sun of the sleepless! melancholy star!

Whose tearful beam glows tremulously far,

That show'st the darkness thou canst not dispel,

How like art thou to joy remember'd well!

So gleams the past, the light of other days,

Which shines, but warms not with its powerless rays;

A night-beam Sorrow watcheth to behold,

Distinct, but distant, clear, but, oh how cold!

– Lord Byron, "Sun of the Sleepless!"