What Is the Difference Between VLA and World Models in Autonomous Driving?

10/14 2025

VLA: Linking 'Perception', 'Comprehension', and 'Action'

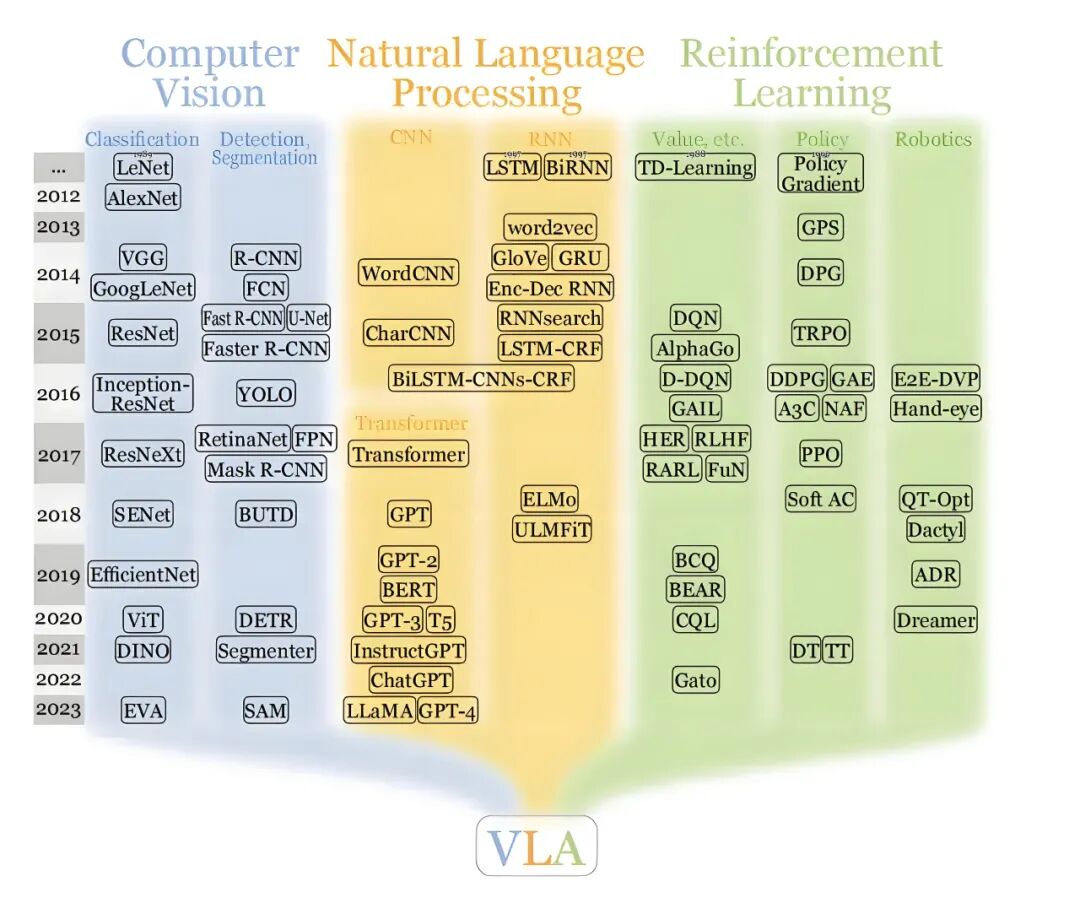

VLA, a term that comes up often in autonomous driving, stands for Vision-Language-Action. The idea is to combine three capabilities in a single model: visual input from cameras and other sensors, the language understanding and reasoning of a large language model, and the control actions the vehicle finally executes. Such a model converts the road scene into semantic information (for instance, pedestrians, lane lines, and traffic signs), reasons about it internally in a language-like way (for instance, judging whether a pedestrian intends to cross), and then outputs control commands or a suggested trajectory. Together, these steps form a closed loop from perception to decision-making to action.

In a typical design, a vision encoder turns camera images into a set of feature vectors. These vectors are fed into a language model, which handles high-level reasoning and contextual understanding. An action-generation module then translates the model's conclusions into executable control commands. Because language serves as the intermediate representation, VLA is naturally suited to stating why it made a decision in terms a human can follow. This is valuable for accident investigation, manual review, and human-machine interaction.
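The chain of vision encoder, language model, and action module can be sketched as a toy pipeline to show the data flow and how a textual rationale travels alongside the control command. This is a sketch under loud assumptions: `Detection`, `encode_vision`, `reason`, and `action_head` are hypothetical names, the language model is replaced by a few hand-written rules, and the distances and braking value are arbitrary.

```python
from dataclasses import dataclass

# HYPOTHETICAL toy pipeline: hand-written rules stand in for the language model.

@dataclass
class Detection:
    kind: str                   # "pedestrian", "vehicle", or "red_light"
    distance_m: float           # distance ahead of the ego vehicle
    toward_road: bool = False   # pedestrian heading toward the roadway

@dataclass
class Command:
    accel_mps2: float           # negative means braking
    rationale: str              # human-readable reason, kept for auditing

KINDS = ["pedestrian", "vehicle", "red_light"]

def encode_vision(detections):
    """Stand-in for the vision encoder: one feature vector per detection."""
    return [[1.0 if d.kind == k else 0.0 for k in KINDS]
            + [d.distance_m, float(d.toward_road)] for d in detections]

def reason(features):
    """Stand-in for the language model: returns a textual conclusion."""
    for is_ped, _is_veh, is_red, dist, toward in features:
        if is_ped and toward and dist < 30.0:
            return "pedestrian likely to cross within 30 m; yield"
        if is_red and dist < 50.0:
            return "red light ahead; stop at line"
    return "road clear; keep speed"

def action_head(rationale):
    """Stand-in for the action module: maps the conclusion to a command."""
    must_stop = rationale.endswith(("yield", "stop at line"))
    return Command(accel_mps2=-3.0 if must_stop else 0.0, rationale=rationale)

def vla_step(detections):
    return action_head(reason(encode_vision(detections)))

cmd = vla_step([Detection("vehicle", 60.0),
                Detection("pedestrian", 18.0, toward_road=True)])
print(cmd.accel_mps2, "|", cmd.rationale)
```

In a real VLA each stage is a learned network and the rationale is generated text. The point of the sketch is only that the explanation is produced by the same chain that produces the command, so it can be logged with it.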

World Model: Simulating the Future 'Mentally'

The core function of a world model is to let the system predict how its environment will evolve. Given the current observations and a sequence of actions, a world model forecasts future observations or how the scene will evolve over a short horizon: how surrounding vehicles will move, how pedestrians will behave, and how road occupancy will change. In effect, it is an internal simulator in which the system can run repeated 'trials'.
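At its simplest, a world model used as an internal simulator is a transition function from (state, action) to the next state, unrolled over a sequence of actions. The sketch below assumes a hand-written kinematic model in place of a learned network. `Scene`, `step`, `rollout`, and the 0.1 s step size are hypothetical choices made for illustration.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Scene:
    ego_x: float   # ego position along its lane (m)
    ego_v: float   # ego speed (m/s)
    ped_x: float   # pedestrian position along the lane axis (m)
    ped_y: float   # pedestrian lateral offset from the lane centre (m)
    ped_vy: float  # pedestrian speed toward the lane centre (m/s)

DT = 0.1  # prediction step (s), an illustrative choice

def step(scene: Scene, accel: float) -> Scene:
    """One transition of a hand-written model (placeholder for a learned one)."""
    v = max(0.0, scene.ego_v + accel * DT)  # the ego vehicle does not reverse
    return replace(scene,
                   ego_x=scene.ego_x + v * DT,
                   ego_v=v,
                   ped_y=scene.ped_y - scene.ped_vy * DT)  # walks toward y = 0

def rollout(scene: Scene, actions):
    """Predict the sequence of future scenes for a sequence of ego accelerations."""
    states = [scene]
    for a in actions:
        states.append(step(states[-1], a))
    return states

start = Scene(ego_x=0.0, ego_v=10.0, ped_x=25.0, ped_y=3.0, ped_vy=1.5)
future = rollout(start, [0.0] * 20)  # "what if I keep speed for 2 s?"
final = future[-1]
print(f"ego at {final.ego_x:.1f} m, pedestrian offset {final.ped_y:.2f} m")
```

With these toy numbers, keeping speed for 2 s brings the pedestrian to the lane centre while the ego vehicle is still 5 m short of the crossing point. Running the same start state through a different action sequence is the repeated 'trial' the text describes.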

World models can work either at the pixel level or in an abstract latent space. Pixel-level models generate future image frames directly, whereas latent models predict object states and dynamics in a more compact encoding. In autonomous driving, world models serve two main purposes. The first is online short-term prediction, which helps the planner evaluate the consequences of its current actions. The second is offline large-scale simulation, which generates challenging scenarios for policy evaluation and safety verification. Their strength is modeling dynamics and cause and effect, answering the question 'What will happen to the environment if I take this action?' This capability is crucial for safety assessment.
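The online use, where the planner asks 'what happens if I take this action?', amounts to rolling the model forward once per candidate action and scoring the predicted outcomes. This sketch assumes a one-dimensional car-following model, a 5 s horizon, and a 5 m minimum gap. These values, and the function names, are illustrative and not taken from any particular system.

```python
# Hypothetical 1-D car-following world model: ego follows a lead vehicle.
DT = 0.1        # prediction step (s)
HORIZON = 50    # 5 s look-ahead
MIN_GAP = 5.0   # required bumper-to-bumper margin (m), illustrative

def predict_min_gap(gap, ego_v, lead_v, lead_accel, ego_accel):
    """Roll the scene forward and return the smallest predicted gap (m)."""
    min_gap = gap
    for _ in range(HORIZON):
        ego_v = max(0.0, ego_v + ego_accel * DT)
        lead_v = max(0.0, lead_v + lead_accel * DT)
        gap += (lead_v - ego_v) * DT
        min_gap = min(min_gap, gap)
    return min_gap

def choose_action(gap, ego_v, lead_v, lead_accel, candidates):
    """Return the gentlest candidate acceleration whose predicted outcome
    keeps MIN_GAP; if none does, fall back to the hardest braking."""
    for accel in sorted(candidates, key=abs):
        if predict_min_gap(gap, ego_v, lead_v, lead_accel, accel) >= MIN_GAP:
            return accel
    return min(candidates)

# Lead vehicle 25 m ahead starts braking at 4 m/s^2; both travel at 15 m/s.
choice = choose_action(gap=25.0, ego_v=15.0, lead_v=15.0, lead_accel=-4.0,
                       candidates=[0.0, -2.0, -4.0, -6.0])
print(choice)
```

Here the planner settles on -4.0 m/s^2, because keeping speed or braking at only -2.0 m/s^2 is predicted to shrink the gap below 5 m within the horizon. A learned world model would replace `predict_min_gap` while the selection loop stays the same.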

Core Differences and Strengths of VLA and World Models

VLA and world models differ in emphasis. VLA brings semantic understanding and reasoning directly into the decision-making chain. Its strengths are interpretability and the ability to fold human semantic knowledge, such as traffic rules and common sense, into behavioral decisions. World models focus on predicting dynamics and future states. Their strengths are evaluating the consequences of actions and generating extreme scenarios for training.

The two also have different data needs. Because language-based reasoning is learned from text corpora and scene annotations, VLA requires large amounts of multimodal data that is annotated or otherwise aligned with human semantics. World models rely more on continuous time-series data with accurate dynamics feedback, or on high-fidelity simulators that make up for the shortage of real-world data.

For the autonomous driving industry, VLA lets the system 'explain its reasoning', which helps with regulatory compliance and user trust. World models can expose long-horizon risks in advance, supporting safety verification and policy robustness. The two also differ in compute and real-time requirements. Deploying an end-to-end VLA on a vehicle means trading multimodal reasoning capacity against latency. Using a high-fidelity world model for online prediction requires predictions that are fast and stable enough; otherwise real-time control suffers.

How Can Both Approaches Be Used Effectively?

A common practice in the industry is to run world models in the cloud or on simulation platforms. There they generate extreme and rare scenarios at scale, augment training data, and evaluate policies. VLA or another decision-making model then runs on the vehicle and maps perception to reasoning to action in real time, while its interpretable intermediate outputs (such as why it braked) are logged for auditing. Another approach keeps a lightweight world model on the vehicle for short-term trajectory prediction and redundancy checks, serving as a safety net for the primary decision-maker.
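An onboard safety net can be as simple as a time-to-collision (TTC) check. It vets the primary decision-maker's proposal and records why it accepted or overrode that proposal, which also covers the "why braking" audit trail. The sketch is hypothetical throughout. The 2 s TTC limit and -6 m/s^2 fallback braking are illustrative values, and a constant-velocity TTC stands in for a lightweight learned predictor.

```python
from dataclasses import dataclass, field

TTC_LIMIT_S = 2.0      # illustrative threshold, not a standard value
FALLBACK_ACCEL = -6.0  # illustrative emergency braking (m/s^2)

@dataclass
class SafetyNet:
    """Hypothetical redundancy layer between the primary planner and actuators."""
    audit_log: list = field(default_factory=list)

    @staticmethod
    def time_to_collision(gap_m, ego_v, obj_v):
        # Constant-velocity TTC; infinite if the gap is not closing.
        closing = ego_v - obj_v
        return float("inf") if closing <= 0 else gap_m / closing

    def check(self, proposed_accel, rationale, gap_m, ego_v, obj_v):
        ttc = self.time_to_collision(gap_m, ego_v, obj_v)
        if ttc < TTC_LIMIT_S and proposed_accel > FALLBACK_ACCEL:
            self.audit_log.append(
                f"override: TTC {ttc:.1f}s < {TTC_LIMIT_S}s; primary said '{rationale}'")
            return FALLBACK_ACCEL
        self.audit_log.append(f"accept: '{rationale}' (TTC {ttc:.1f}s)")
        return proposed_accel

net = SafetyNet()
a1 = net.check(0.0, "road clear; keep speed", gap_m=15.0, ego_v=20.0, obj_v=5.0)
a2 = net.check(0.0, "road clear; keep speed", gap_m=60.0, ego_v=20.0, obj_v=15.0)
print(a1, a2)
print(*net.audit_log, sep="\n")
```

The first call is overridden (TTC of 1 s) and the second is accepted (TTC of 12 s). In both cases the log keeps the primary model's own rationale next to the safety layer's decision.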

Choosing a technical route involves several practical considerations: the target scenario (complex urban roads or long-distance highway driving), how feasible extensive real-world testing is, and how much interpretability and regulatory compliance are required. Consumer driver-assistance systems may prioritize user experience and natural interaction, where VLA can improve semantic-level performance. Commercial fleets and other settings with strict safety and compliance requirements need a more robust world model for simulation and verification.

Whichever path is chosen, it is essential to establish a rigorous sim-to-real calibration process, redundancy strategies, and continuous online and offline evaluation. These ensure that decisions do not rest solely on overfitted language reasoning or low-fidelity simulation, either of which would undermine 'road-readiness'.
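One concrete piece of a sim-to-real calibration process is checking whether a metric logged in simulation follows the same distribution as the same metric logged on the road. This minimal sketch assumes the two-sample Kolmogorov-Smirnov statistic as the comparison and an arbitrary 0.2 tolerance. A real pipeline would more likely use a proper significance test or a domain-specific threshold. The deceleration values are toy numbers, not measurements.

```python
def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest vertical gap
    between the empirical CDFs of the two samples."""
    a, b = sorted(sample_a), sorted(sample_b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Advance past every value <= x so i/len(a) and j/len(b) are the CDFs at x.
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d

def calibration_ok(sim_metric, real_metric, tolerance=0.2):
    """Hypothetical gate: trust the simulator for this metric only if the
    two distributions are close. The 0.2 tolerance is arbitrary."""
    return ks_statistic(sim_metric, real_metric) <= tolerance

# Toy peak decelerations (m/s^2) per braking event; NOT real measurements.
sim_decel = [2.1, 2.4, 2.8, 3.0, 3.3, 3.5]
real_decel = [2.0, 2.5, 2.9, 3.6, 4.2, 5.1]
print(ks_statistic(sim_decel, real_decel), calibration_ok(sim_decel, real_decel))
```

For these toy samples the statistic is 0.5, so the check fails. The simulated events lack the hardest decelerations seen in the real log, which is the kind of gap calibration is meant to surface before simulated data is trusted.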

For autonomous driving companies, world models can generate extreme scenarios to supplement training datasets, but real-world data is still needed to calibrate them. Interpretable outputs and anomaly detection on the vehicle make regulatory compliance and post-incident analysis easier. When designing system boundaries, it is crucial to define when a human must intervene and when the system should restrict its own capabilities, so that it does not overreact in uncertain situations. A rigorously validated hybrid of VLA and world models lets an autonomous driving system both 'think through the consequences' and 'explain its reasoning', which makes it the more robust route.
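System boundaries of this kind are often expressed as a small mode-selection policy. The sketch below assumes a hypothetical normalized uncertainty score in [0, 1], for example the disagreement between the world model's prediction and what was then observed. It also assumes a flag for whether the vehicle is inside its operational design domain, plus illustrative thresholds, speed caps, and a takeover timeout. None of these values come from a standard.

```python
from enum import Enum

class Mode(Enum):
    NOMINAL = "nominal"
    LIMITED = "limited"            # reduced speed, no aggressive manoeuvres
    REQUEST_TAKEOVER = "takeover"  # ask the human driver to intervene
    MINIMAL_RISK = "minimal_risk"  # e.g. a controlled stop if nobody takes over

# Illustrative thresholds; a real system would derive these from validation data.
UNCERTAINTY_LIMIT = 0.3
UNCERTAINTY_TAKEOVER = 0.6
TAKEOVER_TIMEOUT_S = 10.0
SPEED_FACTOR = {Mode.NOMINAL: 1.0, Mode.LIMITED: 0.6,
                Mode.REQUEST_TAKEOVER: 0.6, Mode.MINIMAL_RISK: 0.0}

def select_mode(in_odd, uncertainty, takeover_requested_for_s=0.0):
    """Pick an operating mode from the ODD flag and a hypothetical uncertainty score."""
    if not in_odd or uncertainty >= UNCERTAINTY_TAKEOVER:
        if takeover_requested_for_s >= TAKEOVER_TIMEOUT_S:
            return Mode.MINIMAL_RISK
        return Mode.REQUEST_TAKEOVER
    if uncertainty >= UNCERTAINTY_LIMIT:
        return Mode.LIMITED
    return Mode.NOMINAL

def speed_cap(mode, nominal_cap_mps):
    """Limit capability by scaling the allowed speed for the current mode."""
    return nominal_cap_mps * SPEED_FACTOR[mode]

mode = select_mode(in_odd=True, uncertainty=0.45)
print(mode, speed_cap(mode, 30.0))
```

Keeping the boundary logic this explicit, separate from both the VLA and the world model, makes it easy to audit and to validate on its own.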

Final Thoughts

VLA and world models are complementary tools, not mutually exclusive ones. VLA brings language-based reasoning into decision-making, which improves how the system handles semantically complex scenarios and how well it can explain itself. World models let the system simulate the future 'mentally', which improves its assessment of risks and consequences. For the autonomous driving industry, the more practical approach is to combine their strengths: world models for data supplementation and verification, VLA for semantic understanding and interaction, all within clear safety boundaries and multiple layers of redundancy. This approach improves capability while keeping safety and auditability first.
