OpenAI Unveils Its First Agent: ChatGPT Revolutionizing AI, Ushering in the Era of AGI?

01/26 2025

Rumors about OpenAI launching an "Agent" have been circulating for some time. Since last November, leaks have consistently indicated that OpenAI would introduce its first agent in January 2025, with overseas tech media The Information narrowing the timeframe to the end of this month.

Now, OpenAI has finally initiated the era of agents by announcing its first agent, Operator. On the morning of January 24, Beijing time, OpenAI's live event captivated the global tech community, with Operator stealing the show.

As a genuine agent, Operator simulates how a person operates a computer, interacting directly with webpages through clicks, scrolls, and text input to accomplish tasks. In essence, Operator functions like a digital employee with "autonomous consciousness," capable of browsing webpages, filling out forms, ordering products, making restaurant reservations, and more, taking over operations that range from mundane to fairly complex.

Operator start page, Image/ OpenAI

Prior to this, OpenAI had already taken a step in this direction by introducing the "Tasks" feature, transforming ChatGPT from a purely passive AI chatbot into an AI digital assistant capable of actively executing tasks. The emergence of Operator marks OpenAI's official entry into the age of agents, an evolution from "passive information processing" to "active task completion" and a significant step towards AGI.

When ChatGPT Learns to 'Surf the Web'

It's important to note that Operator is currently in the research preview stage, available exclusively to ChatGPT Pro users in the US ($200/month); Plus users cannot access it. Unlike Claude's Computer Use and Zhipu's GLM-PC agents, which operate the user's computer directly, Operator works through a browser running in the cloud.

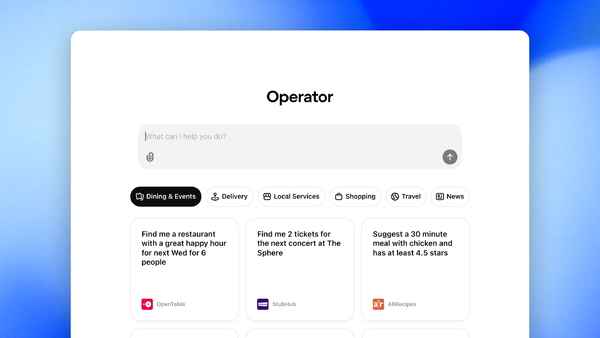

Left: Conversation, Right: Cloud browser, Image/ OpenAI

To grasp the significance of Operator, let's look at the practical scenarios from OpenAI's live demonstration and observe how the AI, like an experienced "web surfing veteran," navigates the digital world to complete various tasks.

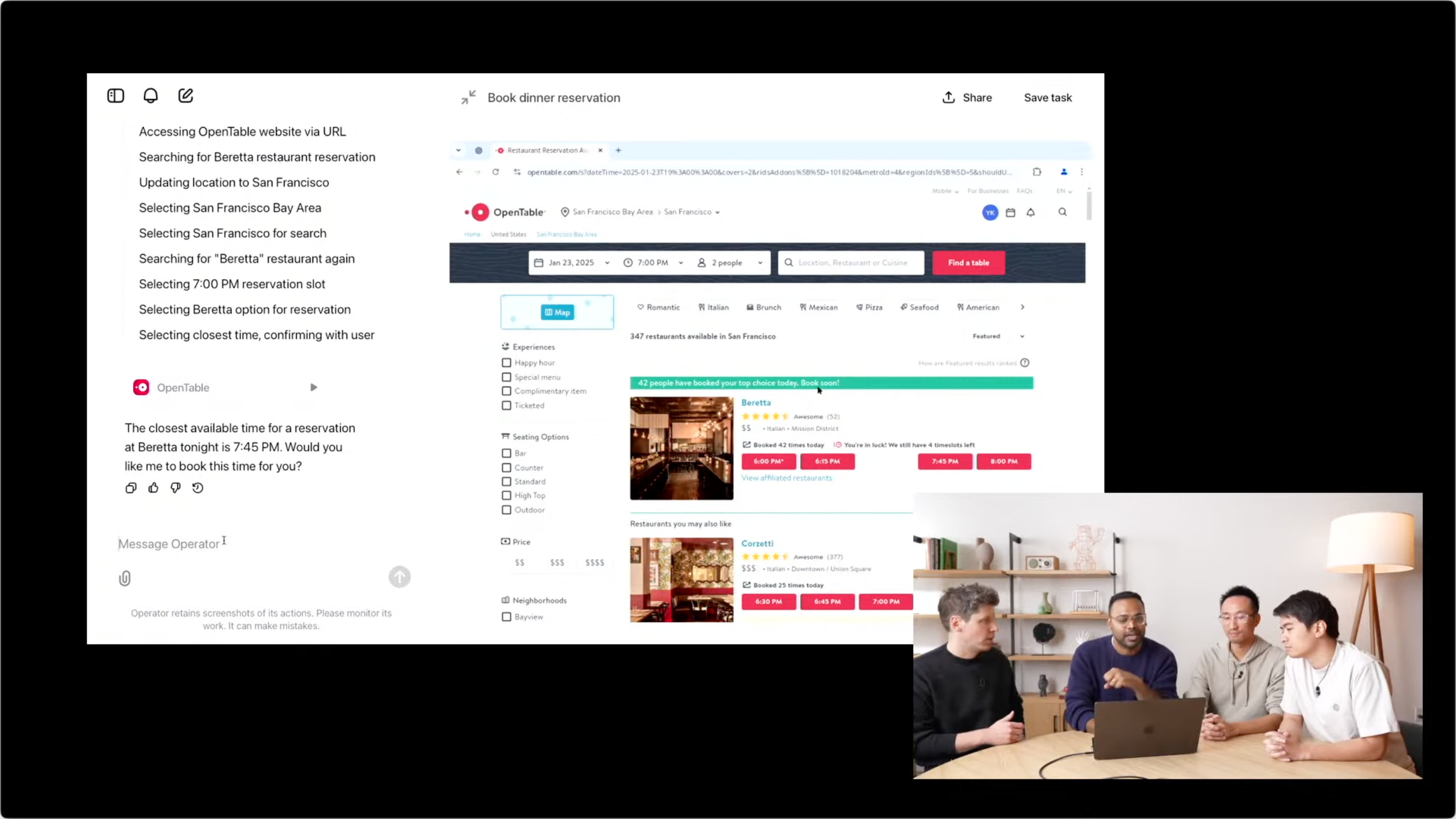

For instance, in OpenAI's live demonstration, Operator was asked to book a table for two at the restaurant Beretta for 7 PM that evening. For a human, this takes only a few searches and filters on a reservation website, but for an AI it is a significant challenge.

Upon receiving the reservation request, Operator first analyzes it, then opens a cloud browser in the background and works step by step: searching for the restaurant, reviewing options, and starting the reservation process. Users can watch each click, scroll, and input through the window, much as if a person were operating the browser.

Snapshots allow users to review each step of AI's thinking and operation, Image/ OpenAI

Operator's performance is indeed impressive. It swiftly launches the built-in browser and begins "observing" the screen content, locating search boxes and various filter options by analyzing the webpage's structure and elements. The entire process flows seamlessly, as if a real person is handling it.

Notably, when Operator found no availability at Beretta for 7 PM that evening, it searched again for a reservation close to the user's request, then reported back and proactively asked whether the user would prefer to book for "7:45 PM tonight" instead.

AI recommends a nearby time after searching, Image/ OpenAI

Similarly, when the "7:45 PM tonight" slot was taken, Operator presented two alternative reservation times, "6:15 PM tonight" and "8:15 PM tonight," for the user to choose from.

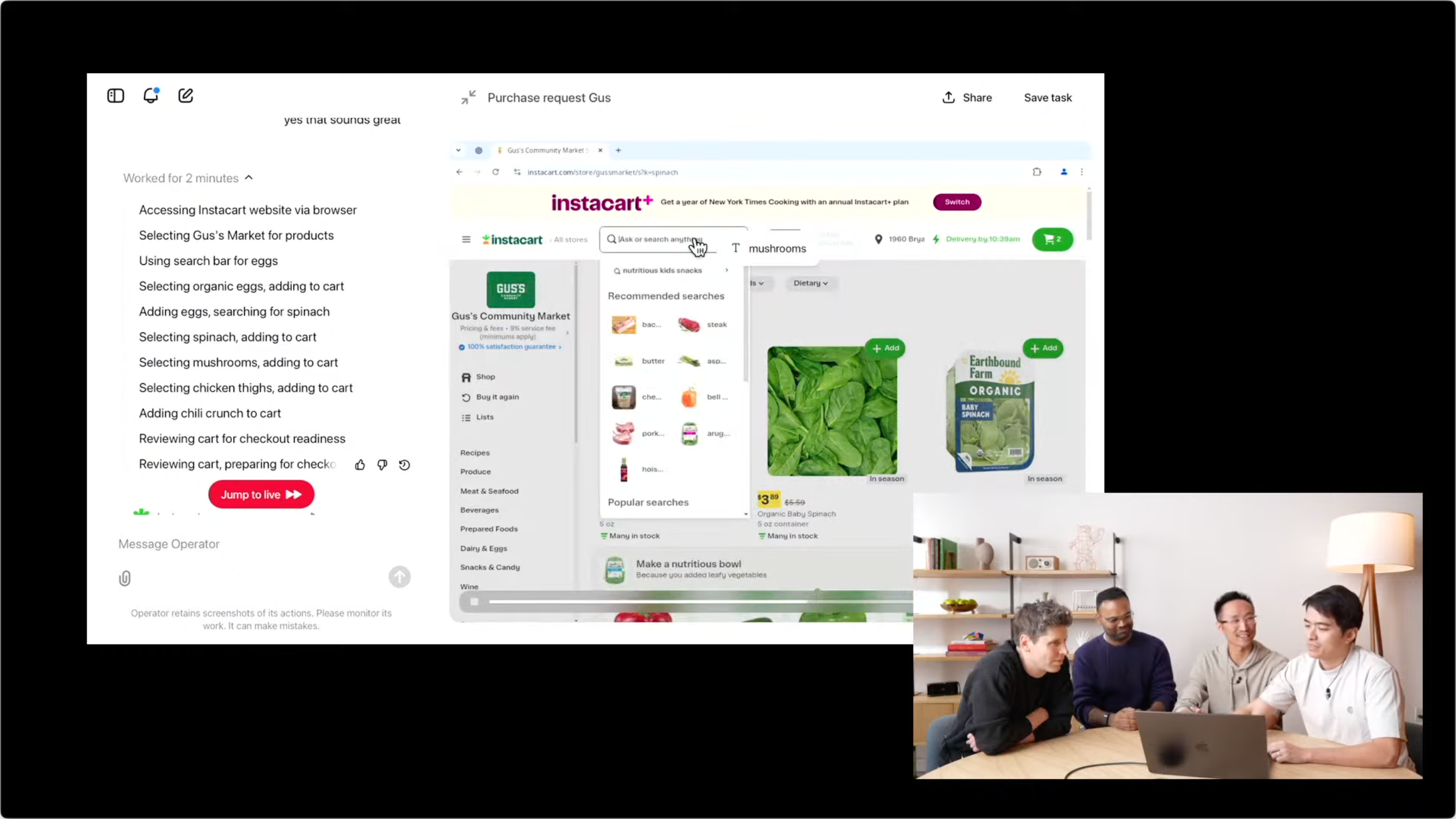

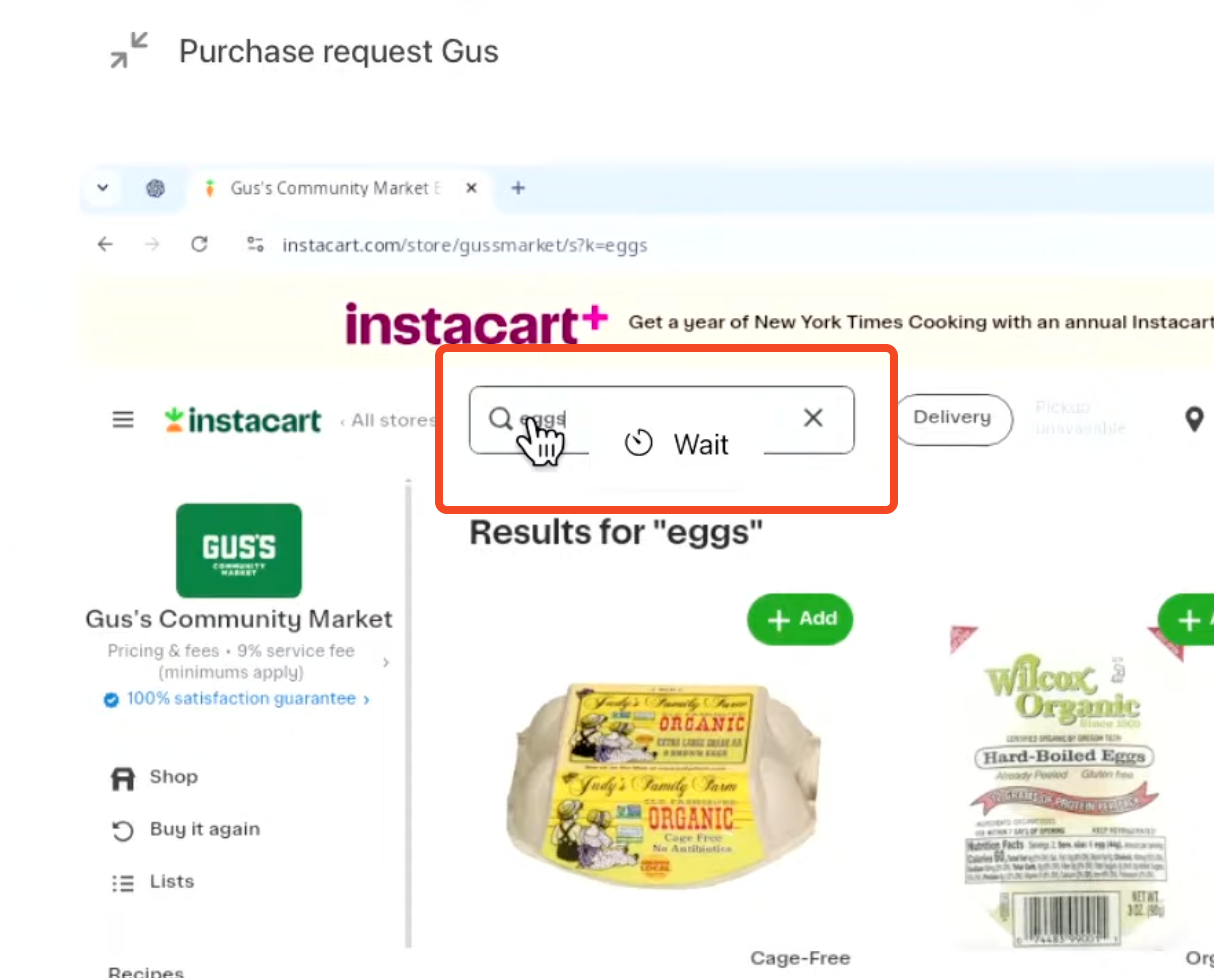

Moreover, in a task to purchase a set of groceries, Operator shows that it can chain steps together, repeatedly searching for items and adding them to the shopping cart. Before final checkout, Operator asks the user to take over for final confirmation and payment (users can log in and stay logged in), which also leaves room for last-minute additions or changes.

Grocery shopping (2x speed), Image/ OpenAI

Combined with OpenAI's previously launched "Tasks" feature, Operator makes it easy to envision a future in which household items are restocked on a regular schedule.

Based on official demonstrations and tests shared by a few users, Operator has demonstrated robust adaptability and versatility in shopping, ticket booking, and other scenarios, excelling in completing diverse tasks.

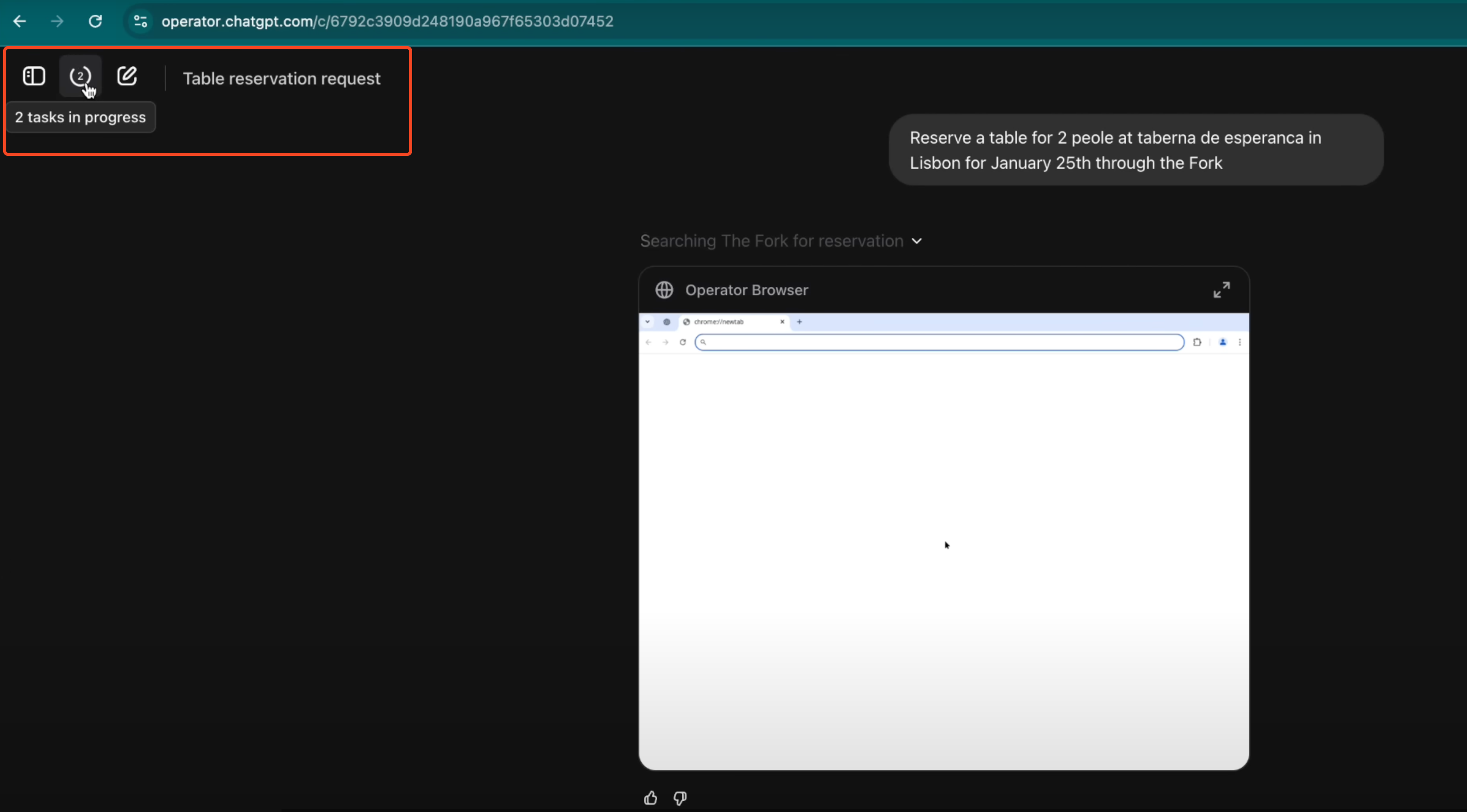

Additionally, as mentioned earlier, users can watch each step of Operator's operation, or they can choose not to: they can have Operator run another task at the same time or simply attend to their own work, confirming actions only when Operator sends a reminder.

Running multiple tasks simultaneously, Image/ YouTube

Both official demonstrations and tests by YouTube bloggers underscore this point. But how does Operator achieve all this?

The Key is GPT-4o-Based "CUA"

Operator's ability to operate a computer like a human is inseparable from the "Computer-Using Agent (CUA)" model developed by OpenAI. Built on GPT-4o's visual capabilities and advanced reasoning techniques, CUA enables AI to "understand" and "operate" computer interfaces, effectively giving it the ability to interact with graphical user interfaces (GUIs) as humans do.

CUA's primary task is to "understand" the screen content. By analyzing screenshots, it comprehends various information such as images and text, identifying elements on webpages like buttons, links, text boxes, etc. This process mirrors how humans observe the world with their eyes.

Even knows to wait, Image/ OpenAI

Subsequently, based on the user's instructions and what it has observed, CUA reasons about what to do next. For example, when a user requests a restaurant reservation, CUA deduces that it needs to visit a restaurant reservation website and enter keywords into the search box. This process mirrors human thinking.

CUA then executes the corresponding operations, such as mouse movements, clicks, and keyboard input. These operations are highly precise, similar to how we operate computers with a mouse and keyboard. Thanks to this general-purpose way of interacting, Operator doesn't require websites to provide APIs and can be used on almost any webpage.

To ensure operations are intelligent and coherent, CUA operates through an iterative loop, continuously "observing," "thinking," and "acting" until the task is completed. When encountering challenges or making mistakes, Operator can self-correct using its reasoning abilities. When difficulties arise or user input is required, Operator hands control back to the user.
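The observe, think, act loop described above can be illustrated with a minimal Python sketch. This is not OpenAI's implementation: `FakeBrowser`, `toy_policy`, `run_agent`, and the action names are all hypothetical stand-ins, with simple rules in place of the model's reasoning and a page label in place of a real screenshot.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Action:
    kind: str          # hypothetical kinds: "click", "type", "scroll", "ask_user", "done"
    target: str = ""   # hypothetical label for the on-screen element
    text: str = ""     # text to type, if any


@dataclass
class FakeBrowser:
    """Toy stand-in for a cloud browser; a real one would return screenshots."""
    page: str = "search"
    log: List[str] = field(default_factory=list)

    def observe(self) -> str:
        # "Observe": here just the current page label instead of pixels.
        return self.page

    def act(self, action: Action) -> None:
        # "Act": record the action and move the toy page state forward.
        self.log.append(f"{action.kind}:{action.target}")
        if action.kind == "type" and self.page == "search":
            self.page = "results"
        elif action.kind == "click" and self.page == "results":
            self.page = "checkout"


def toy_policy(goal: str, observation: str) -> Action:
    """'Think': hand-written rules standing in for model reasoning (assumption)."""
    if observation == "search":
        return Action("type", "search_box", goal)
    if observation == "results":
        return Action("click", "first_result")
    if observation == "checkout":
        # Sensitive step: hand control back to the user.
        return Action("ask_user", "confirm_payment")
    return Action("done")


def run_agent(
    goal: str,
    browser: FakeBrowser,
    policy: Callable[[str, str], Action],
    max_steps: int = 10,
) -> str:
    """Repeat observe -> think -> act until done, handed off, or out of steps."""
    for _ in range(max_steps):
        observation = browser.observe()
        action = policy(goal, observation)
        if action.kind == "done":
            return "done"
        if action.kind == "ask_user":
            return "handoff"
        browser.act(action)
    return "step_limit"


if __name__ == "__main__":
    browser = FakeBrowser()
    print(run_agent("table for two at 7 PM", browser, toy_policy))
    print(browser.log)
```

In this toy run the loop types into the search box, clicks the first result, and then stops with a handoff at the checkout page, which loosely mirrors how the demos pause for user confirmation before payment. The `max_steps` cap is a design choice of the sketch, added so that a policy that never finishes cannot loop forever.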

Moreover, OpenAI wisely chose to run the browser in the cloud rather than operating the user's computer directly, as Claude Computer Use and Zhipu's GLM-PC do. Direct operation could lead to issues of "occupation," "privacy," and "environment."

Claude Computer Use, Image/ Anthropic

The first two issues are straightforward. "Occupation" means that when the agent operates the computer, the user cannot perform other operations simultaneously and must wait. The "privacy" issue is self-evident, as users' computers often store sensitive files and information.

"Environment" refers to the complexity of a user's actual computer. An agent may run into system and software bugs, or even permission problems when simply launching an application, whether on Windows, macOS, or Linux.

In contrast, OpenAI appears to avoid "overreaching" by limiting the usage scenario to the most common "browser" and ensuring a unified, private, and background-runnable operating environment through cloud operation.

OpenAI isn't the first large model vendor to create a true agent. Still, this combination of technology and product design achieves the leap from "passive information processing" to "active task completion," enabling Operator to complete diverse tasks. It also puts Operator ahead of Claude Computer Use and Zhipu's GLM-PC to a certain extent, making it more suitable for mainstream use.

AI Changes the World, Agents Change AI

Over the past year, the importance of agents has become a near-consensus in the AI industry. However, many of the "agents" vendors promote are merely simple context customizations. For example, role-playing "agents" just preset a character description and then break questions down and answer them.

Essentially, they are still software modules rather than truly autonomous agents.

As an application or interaction window in the era of large models, a genuine agent should be capable of acting like a human, such as operating computers and executing tasks, and of taking over the operations that humans do not need to perform themselves.

Image/ Zhipu

This distinction is crucial, separating conceptual hype from genuine technological breakthroughs, and also highlighting the value of Claude Computer Use, Honor YOYO Agent, and today's OpenAI Operator.

However, it's essential to understand that Operator and other similar "true agents" are still in their early exploratory stages. The core challenge remains "versatility." Even with today's OpenAI Operator, agents haven't yet achieved truly human-like universal interaction capabilities and don't support arbitrary websites and programs.

Coffee automatically ordered by YOYO Agent, Image/ Leitech

The internet is a dynamic world with countless websites and interaction designs. Keeping agents adaptable to it is a long-term challenge that needs addressing.

Nevertheless, the immense value of agents as "AI applications" is undeniable: they free us from tedious and repetitive operations and allow us to dedicate more time and energy to more creative and meaningful work. More importantly, they significantly improve interaction efficiency and lower the barrier to interaction.

Take a practical example. Recently, videos of college students returning home for Chinese New Year and helping the elderly uninstall malicious apps and turn off advertisement settings have been popular on short video and social platforms. A key reason is that smartphones have a high barrier to interaction for the older generation.

In contrast, large models have brought unprecedented natural language interaction capabilities, while agents are attempting to further liberate human hands and brains.