Insight: Why Are Specialized Chips and Edge Computing the Linchpin for Overcoming XR Experience Barriers?

02/02 2026

As the global semiconductor market approaches the trillion-dollar mark in 2026, edge AI is moving from a peripheral role to a central engine of growth. Projections from the World Semiconductor Trade Statistics (WSTS) indicate growth of more than 25% in 2026, bringing the global semiconductor market to nearly $975 billion. This expansion is primarily fueled by the deep integration of edge computing and specialized chips, which are reshaping the industry landscape.

In the XR (Extended Reality) sector, the synergy between specialized chips and edge computing is pivotal in overcoming critical experience barriers. The stringent requirements of XR devices—low latency, high computational power, and minimal power consumption—cannot be met by cloud computing alone, nor can general-purpose chips strike an optimal balance between energy efficiency and performance. The mutual enhancement of specialized chips and edge computing directly addresses this challenge, facilitating the shift of XR from conceptual prototypes to widespread commercial deployment.

Amid escalating competition for cloud-based computational resources, intelligent restructuring at the device level has emerged as a crucial factor for industry breakthroughs. The bidirectional empowerment of specialized chips and edge computing is redefining the rules across the entire value chain, from technical architecture to commercial ecosystems.

01 The Underlying Logic of Co-Evolution: A Two-Way Interaction Driven by Demand and Technical Adaptation

The synergy between specialized chips and edge computing fundamentally represents a precise alignment of 'scenario demands' and 'technical supply.' The core driving force arises from three major contradictions that traditional architectures have failed to resolve: the conflict between real-time demands and cloud latency, the contradiction between device power constraints and computational needs, and the tension between data privacy protection and centralized computing. The scenario-based optimization capabilities of specialized chips provide the key to unlocking edge computing bottlenecks.

From the demand perspective, the fragmented nature of edge scenarios imposes extreme energy efficiency requirements on chips. The XR industry is a prime example: consumer-grade VR/MR headsets operate within a power budget of 4W-8W per device. To render at more than 90 frames per second while keeping latency below 20 milliseconds to prevent dizziness, chips must deliver high performance within a very tight energy budget.
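The figures above can be turned into a rough per-frame budget. The sketch below is only back-of-envelope arithmetic: the 90 fps, 20 ms, and 4W-8W values come from the text, while the function name `frame_budget` and the simplification of charging the whole device power budget to rendering are assumptions made for illustration.

```python
# Back-of-envelope frame budget for a standalone headset.
# Inputs (90 fps, 20 ms, 4-8 W) are taken from the article; treating the
# entire device power budget as per-frame energy is a simplification.

def frame_budget(fps: float, latency_ms: float, power_w: float) -> dict:
    frame_time_ms = 1000.0 / fps                     # time available per frame
    energy_per_frame_mj = power_w / fps * 1000.0     # joules per frame, in millijoules
    frame_intervals = latency_ms / frame_time_ms     # frame intervals inside the latency budget
    return {
        "frame_time_ms": frame_time_ms,
        "energy_per_frame_mj": energy_per_frame_mj,
        "frame_intervals_in_latency": frame_intervals,
    }

for power_w in (4.0, 8.0):
    b = frame_budget(fps=90.0, latency_ms=20.0, power_w=power_w)
    print(
        f"{power_w:.0f} W: {b['frame_time_ms']:.1f} ms/frame, "
        f"{b['energy_per_frame_mj']:.1f} mJ/frame, "
        f"{b['frame_intervals_in_latency']:.1f} frame intervals in 20 ms"
    )
```

At 90 fps each frame has about 11.1 ms, so a 20 ms latency target spans fewer than two frame intervals, and a 4W device has roughly 44 mJ to spend per frame across sensing, compute, and display.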

According to Imagination, in the edge device market, where annual shipments run into the billions, power efficiency directly determines commercial viability. Industrial quality inspection terminals must limit power consumption to 3W-5W, while autonomous driving chips, though they can tolerate 45W-60W, demand millisecond-level latency and high computational density.

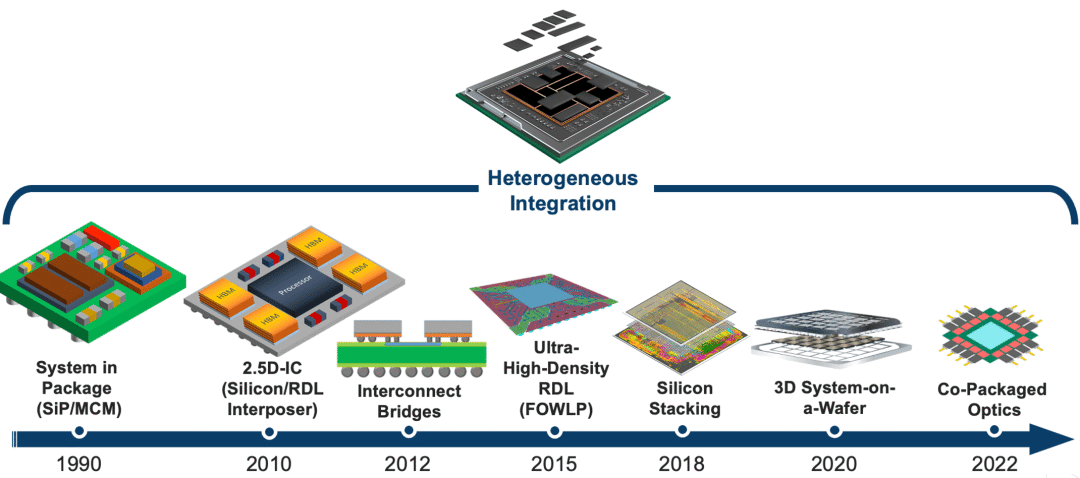

Specialized chips achieve significant improvements in energy efficiency through algorithm-hardware co-design, eliminating redundant modules from general-purpose computing. Architectures like compute-in-memory enhance energy efficiency by over 30% compared to traditional designs, while Chiplet technology increases computational density by 40% and reduces costs by 35%—capabilities beyond the reach of general-purpose chips.

From the technical side, the widespread deployment of edge computing drives iterative advancements in specialized chip architectures. The trend of migrating AI models to the edge, exemplified by 7-billion-parameter large language models and generative AI, necessitates efficient inference within limited hardware resources.

This requires specialized chips to evolve from single-purpose units to heterogeneous integration. Current mainstream architectures widely integrate CPU, GPU, NPU, and DSP modules, as seen in chips like NVIDIA Orin and Horizon Journey 5. These chips enable collaborative scheduling of tasks such as LiDAR point cloud processing, multi-object tracking, and path planning, perfectly aligning with the complex demands of edge scenarios.
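To see why a 7-billion-parameter model strains edge hardware, it helps to estimate the memory needed for its weights alone. The sketch below is plain arithmetic; the function name `weight_footprint_gb` and the chosen bit widths are illustrative assumptions, and the estimate ignores activations, KV cache, and runtime overhead.

```python
# Weight-only memory footprint of a model at different numeric precisions.
# 1 GB is taken as 1e9 bytes; activations and caches are not included.

def weight_footprint_gb(params: float, bits_per_weight: int) -> float:
    return params * bits_per_weight / 8 / 1e9

for bits in (16, 8, 4):
    print(f"7B parameters at {bits}-bit: {weight_footprint_gb(7e9, bits):.1f} GB")
```

At 16-bit precision the weights occupy 14 GB, which falls to 7 GB at 8-bit and 3.5 GB at 4-bit, which is why quantization and memory bandwidth matter so much for edge inference.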

02 Technological Path Iteration: A Paradigm Shift from 'Isolated Optimization' to 'Systemic Collaboration'

The co-evolution of specialized chips and edge computing transcends mere hardware upgrades, forming a virtuous cycle of 'architectural innovation—energy efficiency breakthroughs—scenario deployment—feedback optimization.' Its technological evolution exhibits three key traits.

Architectural Heterogenization—The Core Path to Overcoming Edge Computational Bottlenecks

Traditional monolithic architectures struggle to balance the diverse demands of edge scenarios, making heterogeneous integration the mainstream direction for specialized chips. This approach integrates computational modules with distinct functions—such as CPUs for basic operations, GPUs for graphics, NPUs for AI inference, and DSPs for signal processing—onto a single chip. This enables each module to specialize and avoid energy waste from over-reliance on a single unit.

Under this design philosophy, the precise embedding of specialized computational units has become standard. For instance, ARM extended its Cortex-A series CPUs with dedicated matrix multiplication instructions, reducing data movement across modules via an 8x32 brick architecture to enhance efficiency. Balancing 'specialization' for specific tasks with 'programmability' for multi-scenario adaptability remains the core challenge in chip design.

Qualcomm's Hexagon DSP offers a robust solution, integrating scalar, vector, and tensor processing units with asynchronous multithreading and low-power island technology. This enables efficient execution of XR perception and localization tasks while maintaining power control, achieving an optimal performance-energy balance.

Over the next 3-5 years, heterogeneous architectures will evolve from 'simple module assembly' to 'intelligent dynamic scheduling.' Adaptive scheduling engines with real-time awareness will become widespread, dynamically allocating hardware resources via reinforcement learning algorithms. This is expected to reduce edge task latency by over 40%, further unlocking synergistic value.
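The idea of a scheduler that learns where to place work can be illustrated with a toy example. The sketch below uses an epsilon-greedy multi-armed bandit, one of the simplest forms of reinforcement learning, to learn which unit finishes each task type fastest. It is not how any vendor's scheduler works: the class `EpsilonGreedyScheduler`, the task names, and every latency value in `SIM_LATENCY_MS` are invented for illustration.

```python
import random
from collections import defaultdict

UNITS = ["CPU", "GPU", "NPU", "DSP"]

# Hypothetical mean latencies in ms per (task, unit); made up for this sketch.
SIM_LATENCY_MS = {
    "render":    {"CPU": 30.0, "GPU": 8.0,  "NPU": 25.0, "DSP": 28.0},
    "inference": {"CPU": 40.0, "GPU": 15.0, "NPU": 5.0,  "DSP": 18.0},
    "audio":     {"CPU": 6.0,  "GPU": 9.0,  "NPU": 10.0, "DSP": 2.0},
}

class EpsilonGreedyScheduler:
    def __init__(self, epsilon: float = 0.1, seed: int = 0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.count = defaultdict(int)    # (task, unit) -> observations so far
        self.mean = defaultdict(float)   # (task, unit) -> running mean latency

    def choose(self, task: str) -> str:
        untried = [u for u in UNITS if self.count[(task, u)] == 0]
        if untried:
            return untried[0]                    # try every unit once first
        if self.rng.random() < self.epsilon:
            return self.rng.choice(UNITS)        # explore occasionally
        return self.best_unit(task)              # otherwise exploit

    def observe(self, task: str, unit: str, latency_ms: float) -> None:
        key = (task, unit)
        self.count[key] += 1
        self.mean[key] += (latency_ms - self.mean[key]) / self.count[key]

    def best_unit(self, task: str) -> str:
        tried = [u for u in UNITS if self.count[(task, u)] > 0]
        return min(tried, key=lambda u: self.mean[(task, u)])

def simulate(steps: int = 600, seed: int = 0) -> dict:
    sched = EpsilonGreedyScheduler(seed=seed)
    noise = random.Random(seed + 1)
    tasks = list(SIM_LATENCY_MS)
    for i in range(steps):
        task = tasks[i % len(tasks)]
        unit = sched.choose(task)
        # Simulated measurement: true mean latency with +/-10% jitter.
        latency = SIM_LATENCY_MS[task][unit] * noise.uniform(0.9, 1.1)
        sched.observe(task, unit, latency)
    return {t: sched.best_unit(t) for t in tasks}

print(simulate())
```

A production scheduler would also have to account for power state, thermal headroom, and contention between tasks, none of which this toy model captures.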

Development of heterogeneous integration. Source: Semiconductor Engineering

Scenario Customization—From 'General Adaptation' to 'Precision Empowerment' in Niche Markets

The fragmented nature of edge scenarios drives specialized chips toward 'tiered computational power and scenario-specific customization.' Based on computational capacity, three clear segments emerge: low-computational chips (≤1TOPS) dominate smart homes and wearables, accounting for 52% of shipments by 2025 due to cost and power efficiency. Mid-computational chips (1-10TOPS) serve smart cameras and industrial controllers, balancing performance and cost with a 31% market share. High-computational chips (≥10TOPS) focus on premium sectors like XR devices and robots, with products exceeding 100TOPS growing rapidly and expected to capture 57% of the XR high-end headset market by 2027.
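The three tiers can be expressed as a small classifier. This is a minimal sketch: the function name `compute_tier` is hypothetical, and because the stated ranges overlap at their edges, assigning exactly 1TOPS to the low tier and exactly 10TOPS to the high tier is an assumption.

```python
# Map a chip's peak compute (in TOPS) to the tiers described in the text.
# Boundary handling (1 -> low, 10 -> high) is an assumption of this sketch.

def compute_tier(tops: float) -> str:
    if tops <= 0:
        raise ValueError("TOPS must be positive")
    if tops <= 1:
        return "low"    # smart homes, wearables
    if tops < 10:
        return "mid"    # smart cameras, industrial controllers
    return "high"       # XR devices, robots

for tops in (0.5, 4, 10, 100):
    print(f"{tops} TOPS -> {compute_tier(tops)}")
```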

In smart manufacturing, defect detection systems powered by domestic specialized chips achieve response times under 15 milliseconds with over 99.7% accuracy. For smart transportation, edge-specific chips enable real-time traffic scheduling without cloud interaction, cutting bandwidth costs by 60%.

This customization model, rooted in specific scenarios and aligned with their demands, builds a core competitive moat for specialized chips that general-purpose alternatives struggle to match.

Ecosystem Integration—The Key to Overcoming 'Hardware Silos'

The ultimate form of co-evolution is ecosystem restructuring, with competition escalating from isolated chip battles to full-stack ecosystem duels.

International giants leverage hardware-software synergy to build barriers: NVIDIA has opened up six dedicated AI model families and covers scenarios from cloud to edge through its CUDA ecosystem; AMD integrates its ROCm software platform with AI tools and is seeking a breakthrough with a unified 'Zen5+RDNA3.5+XDNA2' architecture.

In contrast, domestic firms are accelerating ecosystem development by building on scenario advantages: China's RISC-V ecosystem, which brings together over 100 enterprises, launched standardized solutions in 2025 covering computational expansion and communication optimization, aiming to disrupt x86/ARM dominance.

However, ecosystem fragmentation remains a pain point. Edge application scenarios are 10 times more fragmented than cloud environments, with 65% of edge AI projects failing to scale beyond proof-of-concept (POC) due to immature software toolchains and inconsistent interface standards across vendors. Thus, ecosystem collaboration hinges on establishing a 'chip-algorithm-application' closed loop, lowering developer adaptation costs and technical barriers through open-source operator libraries and unified standardized interfaces.

03 Industrial Landscape and Challenges: Structural Competition Amid Opportunities

The global edge AI chip market exhibits an 'international giants dominate high-end, domestic firms capture mid-to-low end' dynamic. By 2025, the market will exceed $35 billion, growing at a CAGR of over 45%, with Asia-Pacific accounting for 58% and China reaching RMB 153 billion.

Internationally, NVIDIA, Qualcomm, Intel, and AMD control the high-end market, with Qualcomm's IoT revenue reaching $1.549 billion in Q1 2025, up 36.1% year-over-year.

Among domestic firms, leading companies have launched XR-dedicated chips deployed in multiple consumer AR glasses, supporting 4K rendering and low-latency spatial positioning. This drove domestic chip market share in XR terminals from 8.2% in 2023 to 19.5%.

Yet, the industry faces structural challenges, particularly the conflict between cost and scalability. Advanced SoC chip development costs $500 million to $1 billion, while niche XR segments in edge markets ship only millions of units annually, making it difficult to amortize R&D costs and constraining innovation for smaller players.
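The amortization problem becomes concrete with a quick calculation. In the sketch below, the $500 million to $1 billion development cost comes from the text, while the annual volume of 2 million units and the three-year product lifetime are hypothetical values chosen only to illustrate the arithmetic.

```python
# R&D cost that each shipped unit must carry, before any manufacturing cost.
# Volume and lifetime are illustrative assumptions, not figures from the article.

def rd_cost_per_unit(rd_cost_usd: float, units_per_year: float, years: float) -> float:
    return rd_cost_usd / (units_per_year * years)

for rd_cost in (500e6, 1e9):
    per_unit = rd_cost_per_unit(rd_cost, units_per_year=2e6, years=3)
    print(f"${rd_cost / 1e6:.0f}M R&D over 6M units: ${per_unit:.2f} per unit")
```

Under these assumptions each chip carries roughly $83 to $167 of R&D cost, a heavy burden for a component in a consumer device and a much smaller one when the same design ships in the hundreds of millions.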

Technical bottlenecks also loom large. As Moore's Law slows, annual transistor performance gains drop below 10%. Data movement between memory and computational units consumes over 60% of total energy, severely impacting chip efficiency.
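The weight of data movement in the energy budget bounds what any compute-side optimization can achieve. The sketch below applies an Amdahl-style estimate: it takes the 60% share from the text as the data-movement fraction and asks what happens to total energy if only that part is reduced. The function name `relative_energy` and the reduction factors are assumptions for illustration.

```python
# Amdahl-style estimate: total energy relative to baseline when only the
# data-movement share is reduced by a given factor.

def relative_energy(movement_fraction: float, reduction_factor: float) -> float:
    compute_part = 1.0 - movement_fraction
    movement_part = movement_fraction / reduction_factor
    return compute_part + movement_part

for factor in (2, 4, 10):
    rel = relative_energy(0.6, factor)
    print(f"Data movement cut {factor}x: total energy {rel:.2f}x of baseline")
```

Halving data-movement energy cuts total energy by about 30%, whereas even a perfect compute unit could save at most 40% if data movement were left untouched, which is why architectures that keep data close to the compute units attract so much attention.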

Moreover, the trade-off between compliance and security intensifies. Embedding hardware-level security modules for data protection often requires 5-10% of silicon area and causes over 15% performance degradation, posing a universal challenge of balancing security, performance, and cost.

04 Conclusion

The co-evolution of specialized chips and edge computing represents a fundamental restructuring of terminal intelligence ecosystems, not merely a technical superposition. By 2030, global edge AI chips are projected to handle over 70% of AI inference workloads, with China's self-controlled chip share potentially exceeding 45%. A low-power, high-responsiveness, scalable distributed intelligent infrastructure is taking shape.

For enterprises, future competitiveness hinges on 'collaborative capabilities.' Hardware firms must deepen their understanding of scenario demands while improving heterogeneous architectures and energy efficiency. Software firms should align algorithms with chip characteristics, building lightweight models and toolchains. Terminal manufacturers ought to drive cross-sector collaboration and participate in ecosystem standardization.

Only by breaking boundaries across technology, scenarios, and ecosystems can firms seize opportunities in this co-evolutionary race, jointly ushering in a new era of terminal intelligence.

By R Star

(All uncredited images are sourced from the internet)