Musk Officially Announces Grok-2 Beta! Will xAI Continue to Embrace Open Source?

08/13 2024

After his AI company xAI officially open-sourced the large model Grok-1, Tesla CEO Elon Musk has dropped another bombshell on the large model market.

On the evening of August 11, local time, Musk revealed on Platform X that the beta version of the AI model Grok-2 would be released soon. In fact, Musk had already confirmed on Platform X in July that Grok-2 would be released in August, and in response to a user's question about training data, he stated that the model would make "significant improvements" in this area.

Source: X

In March of this year, Musk stated that Grok-2 would surpass the current generation of AI models in "all metrics."

Grok, a mixture-of-experts (MoE) model that xAI trained from scratch, has iterated at a remarkable pace since its first version launched in November 2023: the Grok-1.5 large language model followed in March of this year, and xAI's first multimodal model, Grok-1.5 Vision, arrived in April.

However, surpassing all current AI large models may not be as straightforward as it seems for Grok-2.

Surpassing all current AI large models in all metrics: real or hype?

When xAI launched its first-generation large language model, Grok, in November 2023, it said Grok's design was inspired by "The Hitchhiker's Guide to the Galaxy." Initially, Grok mainly powered the Grok chatbot on Platform X, handling natural language processing tasks such as question answering, information retrieval, creative writing, and coding assistance.

The initial version, Grok-0, had only 33 billion parameters. After several rounds of improvement, Grok-1 reached 314 billion parameters, making it the world's largest open-source large language model by parameter count at the time.

Even though only about a quarter of these parameters are active for any given token, Grok-1 still uses roughly 86 billion active parameters per token, more than the 70 billion parameters of the largest Llama-2 model. This gives Grok-1 substantial capacity for language tasks.
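The gap between "about a quarter" and 86 billion can be reconciled with simple arithmetic. The sketch below assumes an idealized MoE in which every parameter is either shared (attention, embeddings and similar layers that run for every token) or belongs to one of 8 experts, of which 2 run per token. Under that assumption, the reported 314B total and 86B active figures imply roughly 10B shared parameters; this split is a back-of-the-envelope inference, not a breakdown published by xAI. The helper names `active_params` and `implied_shared` are hypothetical.

```python
def active_params(total: float, shared: float, num_experts: int, top_k: int) -> float:
    """Parameters used per token in an idealized MoE (assumption):
    all shared weights plus top_k of num_experts equally sized expert blocks."""
    expert_params = total - shared
    return shared + expert_params * top_k / num_experts


def implied_shared(total: float, active: float, num_experts: int, top_k: int) -> float:
    """Solve active = shared + (total - shared) * top_k / num_experts for shared."""
    ratio = top_k / num_experts
    return (active - total * ratio) / (1 - ratio)


TOTAL_B = 314.0   # Grok-1 total parameters in billions (from the article)
ACTIVE_B = 86.0   # reported active parameters per token in billions (from the article)

# With no shared weights, 2 of 8 experts means exactly 25% of parameters run.
naive = active_params(TOTAL_B, shared=0.0, num_experts=8, top_k=2)
print(f"no shared weights: {naive:.1f}B active ({naive / TOTAL_B:.0%})")

# Shared (always-on) weights implied by the reported 86B active figure.
shared = implied_shared(TOTAL_B, ACTIVE_B, num_experts=8, top_k=2)
print(f"implied shared weights: {shared:.1f}B")

# Plugging the implied split back in recovers the reported active count.
check = active_params(TOTAL_B, shared, num_experts=8, top_k=2)
print(f"check: {check:.1f}B active ({check / TOTAL_B:.1%})")
```

With no shared weights, top-2-of-8 routing activates exactly 25% of the parameters; a small always-on core pushes the active share to roughly 27%, in line with the two figures reported for Grok-1.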

Grok-1 uses a mixture-of-experts design in which each token is routed to two of eight available experts. A router chooses the experts based on the content of each token, so only the selected expert submodules run during inference. Because most of the network sits idle for any given token, each token needs far less compute than a dense model of the same total size would. This gives Grok-1 faster generation and lower inference costs, which translate into a better user experience and better cost-effectiveness.
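A minimal top-2 routing sketch in plain Python illustrates the mechanism described above. It is not Grok-1's code: the toy experts simply scale their input (real experts are feed-forward networks), the router is a random linear layer, and the gating (a softmax over the two selected experts' scores, as used in Mixtral) is an assumed, common choice rather than xAI's confirmed scheme. The names `moe_forward` and `make_expert` are hypothetical.

```python
import math
import random

NUM_EXPERTS = 8  # Grok-1 has 8 experts (from the article)
TOP_K = 2        # 2 experts per token (from the article)
DIM = 4          # toy hidden size, purely illustrative


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale, call_log, idx):
    """Toy 'expert' that scales its input; logs each call so we can see which experts ran."""
    def expert(v):
        call_log.append(idx)
        return [scale * x for x in v]
    return expert


def moe_forward(token, router, experts, k=TOP_K):
    # Router: one linear score per expert.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router]
    # Keep only the k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Gate weights: softmax over the selected experts' scores only (assumed gating).
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(token)
    for i, g in zip(chosen, gates):
        y = experts[i](token)  # unselected experts are never evaluated
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, chosen


rng = random.Random(0)
router = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
calls = []
experts = [make_expert(i + 1.0, calls, i) for i in range(NUM_EXPERTS)]

token = [0.5, -1.0, 0.25, 2.0]
output, chosen = moe_forward(token, router, experts)
print("experts chosen:", chosen)
print("experts evaluated:", len(calls), "of", NUM_EXPERTS)
print("output:", [round(x, 3) for x in output])
```

The call log shows that only the two selected experts execute; the other six cost nothing for this token, which is where the per-token savings of an MoE come from.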

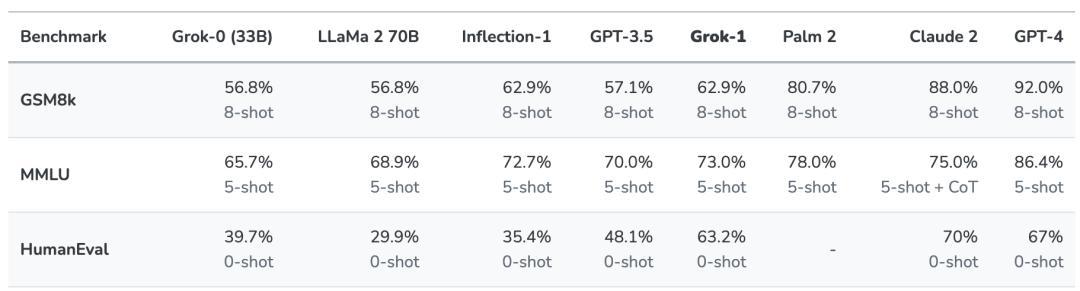

According to data published by xAI, Grok-1 outperformed Llama-2-70B and GPT-3.5 in a series of benchmarks such as GSM8K, HumanEval, and MMLU, although the gap with the top-tier GPT-4 is still evident.

Source: xAI

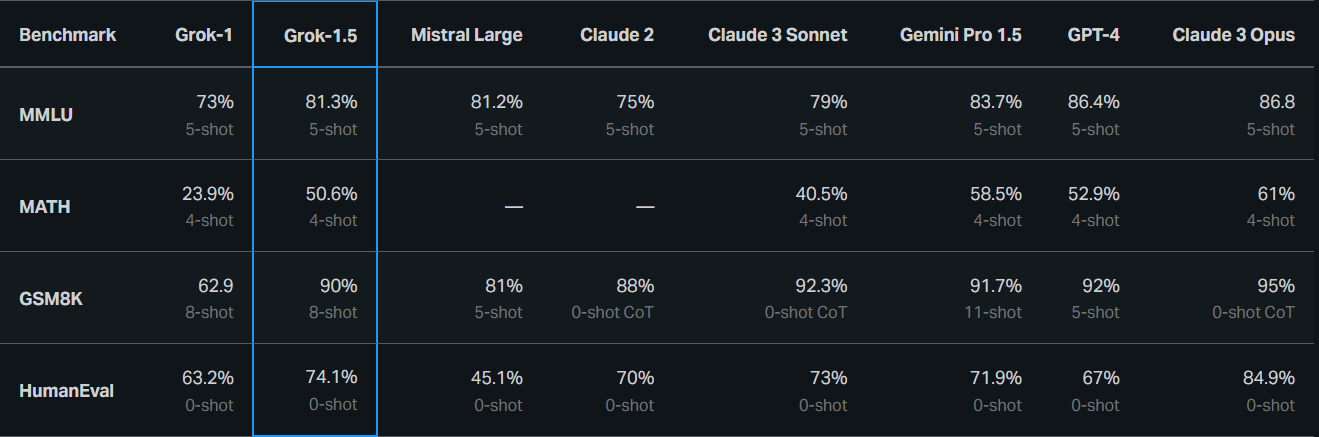

Grok-1.5 changed the picture considerably. It brings improved reasoning and a context length of 128K tokens, and its performance on coding and math tasks is significantly stronger.

In xAI's official tests, Grok-1.5 scored 50.6% on the MATH benchmark and 90% on GSM8K, two math benchmarks that together cover a wide range of problems from grade-school level to high-school competitions. It also scored 74.1% on HumanEval, which evaluates code generation and problem-solving ability.

Source: xAI

Overall, its benchmark results are very close to GPT-4's, and on HumanEval Grok-1.5 even surpassed GPT-4.

Shortly afterward, xAI released the multimodal model Grok-1.5V, touted as bridging the digital and physical worlds. It performs comparably to top multimodal models such as GPT-4V, Claude 3 Sonnet, and Claude 3 Opus across various benchmarks, and it can process many kinds of visual information, including documents, charts, screenshots, and photos. It can also understand memes and write Python code.

Although Musk and xAI have not yet disclosed details about Grok-2, the model family's iteration trend suggests that Musk's claim that Grok-2 will surpass the current generation of AI models in "all metrics" is not unfounded.

Larger parameter counts, stronger performance, and faster speeds are almost certain upgrades. What I'm most looking forward to, however, is the release of Grok-3 around the end of the year. After all, Musk has stated that this model will "match or exceed" the yet-to-be-released OpenAI GPT-5, which is considered the next major breakthrough in the field of large language models.

Source: Weibo

If Grok-3 really reaches that level, the impact on Musk's companies could be significant, notably Platform X, whose user growth has stagnated, and Tesla, which is focused on FSD. Platform X could use the large model to offer users a smarter chatbot and differentiate itself from other social platforms. Tesla could use the model's language capabilities for "chain of thought" reasoning, helping an end-to-end driving system break complex visual scenes into manageable steps and address some limitations of current autonomous driving systems. Rumors that Grok-1.5 would be used in Tesla's FSD V13 circulated as soon as Grok-1.5 was released.

Regardless, it is highly likely that Grok will change the way large models iterate and are applied. It is also certain that the competition between open-source large models, represented by Grok, and closed-source large models is intensifying.

Open Source vs. Closed Source: The Battle for Large Model Strategies

Musk is a staunch supporter of open source and has publicly criticized OpenAI's closed-source business model, even suing OpenAI and its CEO Sam Altman for alleged breach of contract and demanding that the company return to open-sourcing its technology.

xAI was created largely to prevent a monopoly in artificial intelligence. Ironically, OpenAI is now less "open" than xAI: Musk kept his promise and open-sourced the 314-billion-parameter Grok-1 under the Apache 2.0 license, which allows anyone to freely use, modify, and distribute the software for both personal and commercial purposes.

OpenAI is a leader in AI, and it is unrealistic to expect it to open-source the models behind ChatGPT unless it chooses to. Still, open-sourcing large models has undeniably become a major trend both in China and internationally.

Internationally, after Meta made Llama-2 free for commercial use in July 2023, it quickly became the go-to open-source large model for developers worldwide. Soon after, Google entered the open-source race with Gemma, whose 7-billion-parameter version outperformed Llama-2-13B (13 billion parameters). In China, Alibaba open-sourced Tongyi Qianwen's Qwen-72B, a 72-billion-parameter large language model that outperforms Llama-2-70B on benchmarks and is touted as the strongest open-source Chinese model.

The debate between open-source and closed-source large models has long been a hot topic, and many industry leaders have weighed in. Baidu CEO Robin Li favors closed source: he argues that at the same parameter scale, open-source models are less capable than closed-source ones, so matching a closed model requires a larger open model, which means higher inference costs and slower responses.
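Li's cost argument can be made concrete with a widely used rule of thumb (a general approximation, not a figure from this article): a transformer's forward pass costs roughly two floating-point operations per active parameter per generated token, ignoring the attention-over-context term. Applied to the parameter counts mentioned earlier:

```latex
\begin{aligned}
C_{\text{fwd}} &\approx 2\,N_{\text{active}} \quad \text{FLOPs per generated token} \\
\text{Llama-2-70B (dense)}:\; C &\approx 2 \times 70 \times 10^{9} = 1.4 \times 10^{11} \\
\text{Grok-1 (MoE, 86B active)}:\; C &\approx 2 \times 86 \times 10^{9} \approx 1.7 \times 10^{11} \\
\text{hypothetical dense 314B model}:\; C &\approx 2 \times 314 \times 10^{9} \approx 6.3 \times 10^{11}
\end{aligned}
```

Under this approximation, an open model that needs k times the parameters to match a closed one pays roughly k times the compute per token. Mixture-of-experts designs such as Grok-1 soften that penalty because cost tracks active rather than total parameters, although all weights must still be held in memory, and memory bandwidth often dominates real-world latency, so this is only a first-order estimate.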

Source: World AI Conference 2024

Wang Xiaochuan, CEO of Baichuan Intelligence, one of China's so-called "Big Five" large model companies, supports open source. He believes open source and closed source are complementary rather than mutually exclusive, and that their coexistence may be the best outcome. He predicts that 80% of enterprises will eventually use open-source large models, because closed-source models may adapt poorly to their products or cost too much.

There is no right or wrong in the views of Robin Li and Wang Xiaochuan; they are simply different choices. The choice between open-source and closed-source large models is essentially determined by the business model.

Closed-source large models have advantages in protecting intellectual property and ensuring data security and compliance, but they can be less flexible and harder to customize. Open-source large models, on the other hand, follow a mature internet business model. Although the end goal is still monetization, open-source models bring in many participants and work more like an ecosystem that advances collectively through rapid iteration, experimentation, and shared responsibility.

I find the remark by Luke Sernau, a senior software engineer at Google, quite accurate: the iterative progress of open-source models threatens the survival of some closed-source models, because open-source projects essentially tap into the free labor of the entire planet.

This is precisely the root of the debate between open-source and closed-source large models: Both developers and users tend to prefer the best open-source projects, resulting in a clustering effect that may be more pronounced than that of closed-source models.

Final Thoughts

In line with Musk's philosophy, Grok-2 is likely to be open-sourced as well. As the open-source large model war intensifies, xAI, Google, Meta, Alibaba, Mistral AI, Databricks, and other open-source vendors keep iterating to improve performance and efficiency. After all, no one can be sure of holding or extending their lead in such a fast-moving technological revolution.

Source: Tesla

The attention Musk's name brings to xAI is short-lived; xAI's future ultimately depends on how Grok actually performs. Grok could be integrated with Platform X and Tesla's businesses to create a groundbreaking large model application, or it could remain impressive only on paper, or even become "tech futures" like Sora, announced long before anyone can use it. The definitive answer will only become clear on the day Grok-2 is released.

Source: Leitech