Will the AI industry fulfill the vision of OpenAI's Sora?

08/14 2024

In the field of AI-generated video, Sora is practically the only product that remains a promise rather than something users can try.

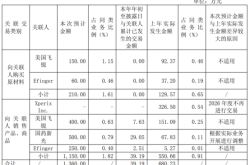

In the past two months, AI-generated video applications have continued to explode both domestically and internationally, with Kuaishou, ByteDance, Zhipu AI, Shengshu Technology, and Aishi Technology leading the charge in China, and Google, Luma, and Runway making waves overseas. It's like the Eight Immortals Crossing the Sea, each with their unique approach.

While the platforms still differ in capability, their overall usability has greatly improved, and they offer a comprehensive range of stylistic features. The main drawback is that AI video tools still fall short of integrating seamlessly into production workflows. It now falls to these up-and-coming players to fulfill Sora's vision.

Beyond the promise: the explosion of AI-generated video applications

Both the industry and the public view video as a key area for deploying AI applications. At SIGGRAPH 2024, NVIDIA CEO Jensen Huang invited Meta CEO Mark Zuckerberg for a dialogue, and both agreed that video capabilities will be the evolutionary direction of large AI models.

Song Jiaming, Chief Scientist of Luma AI and formerly of NVIDIA's research group, said in a conversation with a16z partner Anjney Midha that video is connected to the 3D world. From a learning perspective, video data enables models to better understand and reason about the 3D world. As such, real-time, high-quality video generation will ultimately drive the development of embodied AI.

Video serves as this 'bridge,' and numerous AI companies are now racing to cross it first, especially since OpenAI's Sora remains a promise unavailable to the outside world, which leaves room for other platforms to develop further.

(Compiled from public information)

(Image source: Tianyancha)

Behind these extended battle lines lie the companies' experiments. Some concern business models; others concern the prospects for applying the technology.

Products such as Kling, Jimeng, and Vidu have all introduced subscription plans in an attempt to popularize their applications among consumers. Wang Changhu, founder of Aishi Technology, previously told Caixin that Aishi's current strategy focuses primarily on the 2C (consumer-facing) market, collecting feedback broadly from users at home and abroad so that it can iterate on its underlying models based on user experience. As for longer-term applications, he considers it too early to discuss them, primarily because consumer subscription revenue cannot yet cover costs.

Luma AI has also adopted a consumer-facing product, but it originally focused on the 3D field. Its entry into video generation is an exploration of further possibilities in 3D generation and reconstruction, using video to drive 3D development. This holds significant promise for industry applications, such as mass-producing 3D assets for films.

Crucially, Luma AI's aspiration goes beyond simply selling technology or materials; it aims to establish a platform akin to TikTok, essentially a 3D-based ecosystem. Wang Changhu also expressed in a conversation with Zhang Peng, founder of GeekPark, that Aishi Technology is targeting “platform opportunities in the AIGC era,” but the specific form of this platform remains unpredictable since the AI industry will not grow by replicating existing platforms.

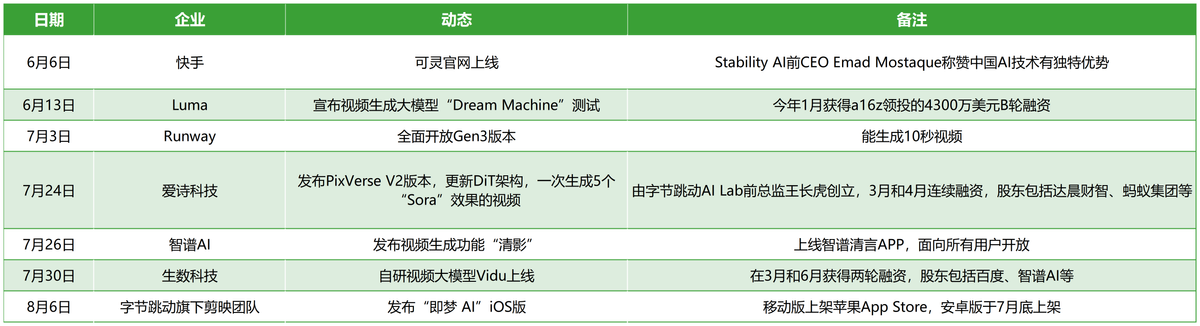

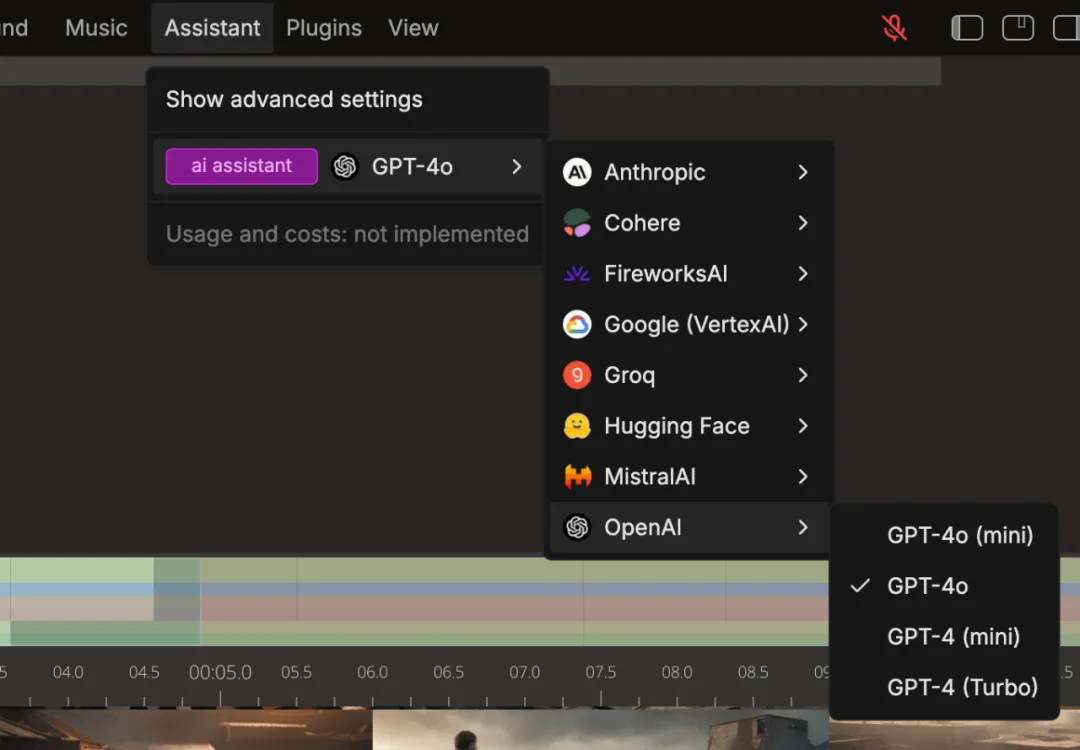

Moreover, applications that fully integrate AI-generated videos into workflows are already taking shape. Open-source video editing tool Clapper has recently gained popularity for its ability to harness various AI technologies and prompt AI agents to generate and iterate on stories, bypassing the manual editing process altogether.

(Image source: Synced)

This underscores that AI-generated video is evolving far faster than we might imagine. For now, the industry's focus undoubtedly lies in generation speed and efficiency. However, large models do not point to any single, clear business model; the direction largely depends on each team's choices. Along the way, beyond commercialization, AI companies must also work out how to avoid compliance and cost dilemmas. Maturing text-to-video generation is therefore no easy feat; the field is at a stage akin to the earliest days of ChatGPT.

The 'Achilles' heel' and breakthrough point of AI-generated videos

a16z has previously opined that giants tend to be slower in converting scientific research into commercial products due to heightened concerns over legal security and copyright issues. Regardless of whether this explains Sora's continued absence, the logic holds true for the issues the industry as a whole faces.

1. The 'gap' in commercialization: Current AI-generated videos struggle to meet clients' demands

Bloomberg reported that OpenAI has been trying to pitch Sora to Hollywood with limited success. The first commercial ad created with Sora, a Toys 'R' Us ad released in June, not only incorporated old footage, but its accompanying press release also failed to make clear that it was not fully AI-generated.

Director Nik Kleverov even mentioned in a since-deleted post that Native Foreign, the creative agency behind the ad, contributed around a dozen staff members, with Sora supporting 80% to 85% of the production process. This is hardly encouraging news for AI-generated videos aiming for efficiency and cost-effectiveness.

2. Challenges in training costs and accessing high-quality datasets

Video can be seen as a series of images, but while publicly available image datasets are plentiful, the same cannot be said for video. OpenAI has faced accusations of illegally using YouTube videos for training, and NVIDIA was recently reported by the media to have collected vast amounts of data from Netflix and YouTube to train its Cosmos project, which supports the extension of its AI products into the real world. According to the report, the project could download the equivalent of 80 years' worth of footage every day.

This highlights two key points. First, in line with the views of Huang and Luma, the development of AI-generated video is indeed crucial for AI's entry into the 3D world, as NVIDIA's approach shows: text → image → video → 3D model → real world. Second, the lack of video datasets poses a significant challenge. Apart from copyright issues, video data also lacks labels. Stefano Ermon, a professor at Stanford University, noted that there is currently no good method for filtering and selecting high-quality videos, and that labeling and describing the selected videos is a further hurdle.

3. The bubble of AI assets: For AI to be valuable, it must solve important and complex problems for users. However, its current progress pales in comparison to the nascent stages of technologies like the internet.

In a recent interview, Benchmark partner Michael Eisenberg quoted his friend Gavin Baker, founder of Atreides Management, on the development of large models: 'Foundation models are the fastest-depreciating assets in history.'

His example comes from the founder of Seeking Alpha, who operates in the high-frequency trading space where business and data are constantly updated. The trained models can only handle routine tasks like writing reports but fail to keep up with the rapid pace of data updates, let alone meet the demands of financial forecasting.

Moreover, the development paths of other technologies were relatively deterministic: the early internet, despite its significant bubble, already showed clear routes to application. AI, in contrast, is fraught with uncertainty. The marginal cost of growth on the internet is almost zero (or largely borne by operators and users), whereas the marginal cost of AI growth involves substantial fixed assets, currently shouldered by the entrepreneurs themselves. The more they invest, the smaller the marginal improvement becomes, and heavy early investment may prove to be a trap.

Technological revolutions must be accompanied by industrial revolutions, which in turn need breakout products to lead the way. AI desperately needs a successful use case. At present, AI-generated video has yet to deliver one.

Aravind Srinivas, co-founder and CEO of Perplexity, offers another perspective: the value of a foundation model essentially reflects the value of the team behind it, much as Sora represents OpenAI and Wenxin represents Baidu. It is not that Sora itself will revolutionize video; rather, the outside world believes that Sora, backed by OpenAI, has the potential to do so. If Sora fails to deliver the breakthroughs we anticipate, who will step up to shoulder that responsibility in this field?

From this perspective, the key may lie in who can first truly integrate AI-generated video into the workflows of a commercial system, much as Clapper is exploring in video production. This poses an even greater challenge, as it involves integration with other domains such as meteorology, urban planning, film and television, automobiles, and manufacturing. Perhaps Sora will unveil a more concrete achievement sometime this year, or perhaps another startup will upend our understanding of AI video.

Source: Songguo Finance