Domestic AI video players take their seats and enter the business-model competition

08/29 2024

Preface:

After six months of sustained effort, China's work on large AI models has reached a critical juncture.

From AI-driven dance clips and stick-figure animations to high-quality videos lasting 5 to 16 seconds, AI video generation as a whole has made a qualitative leap.

The main players in China's AI video market are now largely settled and have moved into the business-model competition stage.

Author | Fang Wensan

Image source | Internet

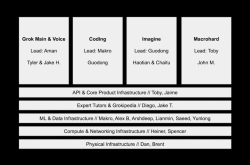

The DiT architecture has become mainstream in the AI video field

Previously, AI video generation followed two main technical paths. One was the diffusion-model path, taken by players such as Runway and Pika Labs.

The other generated video with large language models built on the Transformer architecture.

In late December 2023, Google released VideoPoet, a generative video model based on a large language model, which was widely seen as an alternative to diffusion models for video generation.

Diffusion models progressively add noise to images until they become pure noise, resembling a mosaic, and then train a neural network to reverse that process.

For example, a U-Net built on convolutional neural networks (CNNs) predicts the noise present in an image at a given timestep; subtracting the predicted noise step by step recovers a clean image, which is the final generated output.
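The noising and denoising arithmetic described above can be sketched in a few lines. This is a minimal illustration assuming the standard DDPM-style formulation with a linear noise schedule; the schedule values and the function names `make_alpha_bars`, `add_noise`, and `estimate_x0` are hypothetical choices for this sketch, not code from any product in this article. In a real model, a trained network such as a U-Net supplies the noise prediction; here the true noise is passed in to show that subtracting it recovers the original.

```python
import math
import random

def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule (assumed values)."""
    alpha_bars = []
    prod = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars

def add_noise(x0, noise, alpha_bar):
    """Forward process: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * x + b * n for x, n in zip(x0, noise)]

def estimate_x0(xt, predicted_noise, alpha_bar):
    """Subtract the (predicted) noise and rescale to estimate the clean image."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(x - b * n) / a for x, n in zip(xt, predicted_noise)]

random.seed(0)
x0 = [0.5, -0.2, 0.9, 0.0]  # tiny stand-in for an image's pixel values
noise = [random.gauss(0.0, 1.0) for _ in x0]
alpha_bars = make_alpha_bars(1000)
xt = add_noise(x0, noise, alpha_bars[500])
# A perfect noise predictor (here, the true noise) recovers x0 up to
# floating-point error; a trained U-Net only approximates this noise.
recovered = estimate_x0(xt, noise, alpha_bars[500])
print([round(v, 6) for v in recovered])
```

By the last step, `alpha_bars[-1]` is close to zero, so the image is almost pure noise. This is why generation can begin from random noise and denoise step by step.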

Each path has strengths and weaknesses, and a single model of either kind struggles to achieve a fundamental breakthrough in both video duration and image quality.

Sora adopted an architecture that combines the two: DiT (Diffusion + Transformer).

Specifically, Sora replaced the diffusion model's U-Net backbone with a Transformer.
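A U-Net works on convolutional feature maps, but a Transformer consumes a sequence of tokens. A common way DiT-style models build that sequence is to cut the (often latent) video into small spacetime patches and flatten each patch into one token. The sketch below shows only this patchify step. It assumes a single-channel video stored as nested lists and patch sizes that divide each dimension evenly; the `patchify` function is hypothetical and is not Sora's actual code.

```python
def patchify(video, pt, ph, pw):
    """Split a T x H x W video into non-overlapping pt x ph x pw patches.

    Each patch is flattened into one token (a list of pt*ph*pw values);
    the resulting token sequence is what a Transformer backbone attends over.
    """
    T, H, W = len(video), len(video[0]), len(video[0][0])
    if T % pt or H % ph or W % pw:
        raise ValueError("patch sizes must divide the video dimensions")
    tokens = []
    for t0 in range(0, T, pt):
        for y0 in range(0, H, ph):
            for x0 in range(0, W, pw):
                token = [
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ]
                tokens.append(token)
    return tokens

# 4 frames of 8x8 pixels; each value encodes its own (t, y, x) position.
video = [[[100 * t + 10 * y + x for x in range(8)] for y in range(8)] for t in range(4)]
tokens = patchify(video, pt=2, ph=4, pw=4)
print(len(tokens), len(tokens[0]))  # 8 32
```

Sequence length grows with duration and resolution (here 2 × 2 × 2 = 8 tokens of 32 values each). This helps explain why longer, sharper clips cost so much more compute.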

Since Sora's release, DiT has therefore become the mainstream architecture among AI video players.

Data, algorithms, and computing power determine the cost of AI video

Data is expensive. Adobe, which has long placed great weight on copyright, initially planned to buy training videos from photographers and artists and pay by length, at $2.60 to $7.25 per minute (roughly RMB 20 to 50 per minute).
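To see how quickly per-minute licensing adds up, here is a back-of-the-envelope sketch using the $2.60 to $7.25 per-minute range above. The 1,000-hour dataset size is a hypothetical figure chosen for illustration, not one reported for Adobe.

```python
def licensing_cost_usd(hours_of_footage, usd_per_minute):
    """Cost of licensing footage that is priced per minute of video."""
    return hours_of_footage * 60 * usd_per_minute

LOW, HIGH = 2.60, 7.25  # per-minute price range reported for Adobe's purchases
hours = 1_000           # hypothetical dataset size, for illustration only
low = licensing_cost_usd(hours, LOW)
high = licensing_cost_usd(hours, HIGH)
print(f"{hours:,} hours: ${low:,.0f} to ${high:,.0f}")  # 1,000 hours: $156,000 to $435,000
```

Cost scales linearly with footage. Because video models need far more data than language models, this per-minute price quickly becomes a major budget line.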

Large language models have billions of parameters and need vast amounts of training data, and video models need even more data.

Running AI video applications also requires significant computing power. Unlike a bridge, where each additional user adds almost no cost once it is built, AI video does not enjoy near-zero marginal cost.

Every user consumes computing power, so the more people use the service, the more compute it needs.

Squeezed by both data and compute costs, AI video players cannot afford the generous price cuts seen among large-model providers.

A hasty price cut to "grab land" risks burning through cash on compute while degrading the user experience, leaving the company in a bind.

Most AI video players are therefore cautious and focus on value-added features instead.

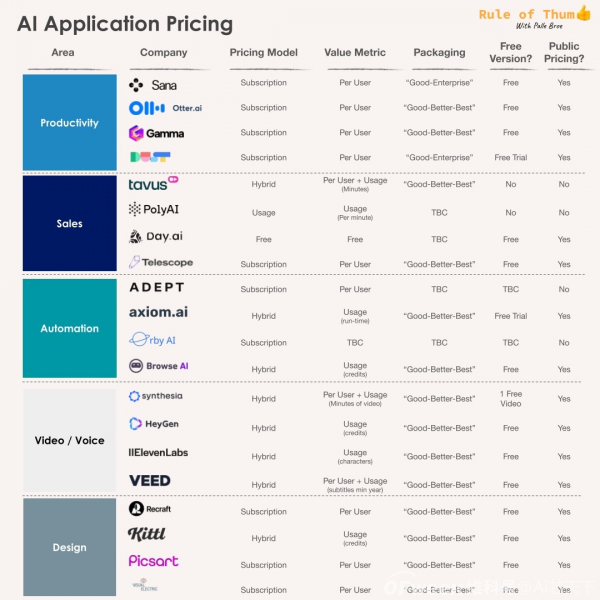

A report on AI application pricing strategies indicates that only 26% of AI companies adopt a Freemium hybrid model, while the vast majority (71%) still use the traditional SaaS subscription pricing model.

Zhipu Qingying uses an "acceleration" model. Regular users can use Qingying for free without limit, but to cut queue times they can pay RMB 5 for a full day of accelerated generation.

The most creative pricing model is undoubtedly that of Kimi, the large model from Moonshot AI (literally "Dark Side of the Moon").

Since March 2024, Kimi has ranked consistently among China's top five AI products, and traffic surges have even crashed the service.

To balance user experience against computing power demands, Kimi introduced a tipping feature.

By tipping between RMB 5.20 and RMB 399, users receive "peak-time priority access" for varying lengths of time.

Clearly, Chinese AI products are open to experimenting with business models.

Each company's strengths shape its product

Kuaishou's Keling excels at natural, fluid human motion, while Zhipu Qingying and Aishi Technology's PixVerse render color more vividly.

Drawing on Kuaishou's vast video library, Keling in particular can quickly generate videos that fit Chinese contexts and aesthetic tastes. It focuses on closely simulating real-world physics and handles "eating" scenes, which trip up many AI models, convincingly.

Shengshu Technology's Vidu stands out for large, fast movements, while ByteDance's Jimeng excels at animation styles and can precisely control how fast objects move.

Zhipu Qingying and Shengshu Technology's Vidu are especially fast, finishing a generation in about 30 seconds, whereas mainstream products in China and abroad take around 5 minutes.

ByteDance's Jianying launched Jimeng AI, which adds a story mode built on video generation, taking users quickly from idea to finished product and from prompt to characters and scenes.

Alibaba DAMO Academy's AI video product Xunguang and Baidu's AI video model UniVG are still in beta, but according to official information both show strong potential in controllable editing and semantic consistency.

Domestic products still need upgrading to generate high-quality content

AI video generation still struggles with accuracy, consistency, and richness, and the actual experience falls well short of the promotional videos companies release.

Many hurdles remain before the technology is ready for commercial use.

Most AI video generators, in China and abroad, output 480p or 720p video; relatively few support 1080p HD.

The quality of training data and the available computing power directly affect the quality of generated videos.

Yet even high-quality data and ample computing power do not guarantee good output.

Models trained on low-resolution data may produce corrupted or repetitive output when pushed to high resolutions, such as extra hands or feet.

Upscaling, restoration, and redrawing can mitigate these issues, but the results and fine details are frequently unsatisfactory.

In China, most AI video generators produce clips of 2 to 3 seconds, and those reaching 5 to 10 seconds count as relatively advanced.

A few standouts exist, such as Jimeng Technology, which can generate videos up to 12 seconds long.

That still trails Sora, which claims it can generate 60-second videos, although Sora is not yet publicly available, so its actual performance cannot be verified.

The rationality of generated content is equally important.

In theory, AI can output video continuously, even for an hour, but users do not want surveillance-style footage or looping landscapes. They want short films with polished visuals and real storytelling.

Jimeng Technology has pushed video length further, but quality suffers: for example, the protagonist, a little girl, becomes deformed later in the clip.

Vega AI has similar issues, while PixVerse produces lower-quality visuals.

Morph, by contrast, is accurate in content but produces videos only 2 seconds long.

Yiying Technology produces excellent visuals but struggles to understand prompts, omitting key elements such as rabbits, and its output leans toward a comic style that lacks realism.

Many short films billed as fully AI-produced actually rely on image-to-video or video-to-video techniques.

Coherence is crucial. Many AI video tools generate motion by predicting subsequent frames from a single frame, but the accuracy of those predictions still depends heavily on luck.

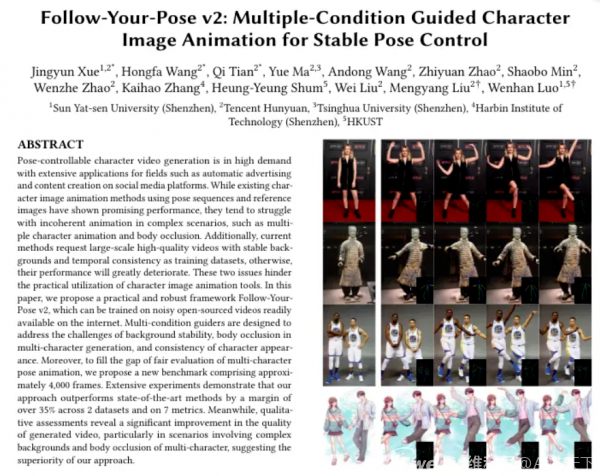

To keep the protagonist consistent, these tools do not rely on generation alone but combine several techniques.

However, these approaches are still exploratory, and even stacking multiple techniques has not fully solved character consistency.

Current tools differ little in generation length and quality

Mainstream AI video tools on the market mainly generate clips of about 4 to 10 seconds.

Shengshu Technology's newly launched Vidu offers two durations, 4 and 8 seconds, at up to 1080p resolution.

It generates a 4-second clip in just 30 seconds.

Aishi Technology's PixVerse V2 generates individual 8-second clips and can produce 1 to 5 consecutive segments in one click, keeping the main subject, visual style, and scene elements consistent across segments.

Zhipu AI's Zhipu Qingying can produce a 6-second video in about 30 seconds, at a resolution of up to 1440×960 (3:2).

Kuaishou's Keling generates 5-second videos and offers an extension function that lengthens them to 10 seconds. It is relatively slow, however, typically taking 2 to 3 minutes per video.

Technically, although Chinese AI video companies have all adopted the DiT architecture, they are still catching up with Sora in video length and quality.

Domestic AI video models have begun exploring commercialization

Compared with chatbots like ChatGPT, AI video generation is seen as a golden track for commercializing large-model technology.

There are two main reasons. First, AI video generation tools are clearly easier to charge for.

Most AI video generation tools currently charge consumers through memberships.

Taking Keling as an example, its membership is divided into three levels: Gold, Platinum, and Diamond.

After discounts, the monthly prices for the three tiers are RMB 33, 133, and 333, providing 660, 3,000, and 8,000 "Inspiration Points" respectively. That is enough for roughly 66, 300, and 800 standard videos.
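The tier figures above imply a per-video price. The short calculation below uses only the numbers reported for Keling's discounted monthly tiers. The `per_video_costs` helper is a hypothetical name for this sketch.

```python
# Keling tiers as reported: (name, monthly price in RMB, Inspiration Points, standard videos)
TIERS = [
    ("Gold", 33, 660, 66),
    ("Platinum", 133, 3000, 300),
    ("Diamond", 333, 8000, 800),
]

def per_video_costs(tiers):
    """Map each tier to (points per standard video, RMB per standard video)."""
    return {
        name: (points / videos, price / videos)
        for name, price, points, videos in tiers
    }

costs = per_video_costs(TIERS)
for name, (points_per_video, rmb_per_video) in costs.items():
    print(f"{name}: {points_per_video:.0f} points/video, RMB {rmb_per_video:.2f}/video")
```

Every tier works out to 10 points per standard video. The effective price falls from about RMB 0.50 (Gold) to RMB 0.44 (Platinum) and RMB 0.42 (Diamond), a standard volume discount.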

Zhipu Qingying's pricing works as follows: during the initial test period, all users can try it for free;

Paying RMB 5 grants one day (24 hours) of high-speed access;

Paying RMB 199 unlocks one year of high-speed access.

However, commercialization in the AI video generation field is still in its infancy.
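Qingying's two paid options lend themselves to a quick break-even check. This sketch assumes that day passes are bought one at a time and that all the high-speed days fall within a single year. The `cheaper_option` helper is hypothetical.

```python
import math

DAY_PASS_RMB = 5       # one day (24 hours) of high-speed access
ANNUAL_PASS_RMB = 199  # one year of high-speed access

def cheaper_option(days_needed):
    """Return the cheaper Qingying paid option for a number of high-speed days in one year."""
    return "day passes" if days_needed * DAY_PASS_RMB < ANNUAL_PASS_RMB else "annual pass"

break_even_days = math.ceil(ANNUAL_PASS_RMB / DAY_PASS_RMB)
print(break_even_days)  # 40
print(cheaper_option(39), "|", cheaper_option(40))  # day passes | annual pass
```

Anyone who needs high-speed access on 40 or more days a year pays less with the annual pass.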

For enterprise users, these tools charge per API call.

Zhipu AI, for example, offers paid API access on its open platform alongside its membership plans.

Second, AI video generation tools blur the line between creators and consumers, especially on platforms like Kuaishou and Douyin, where video bloggers both consume content and use AI video tools to create it.

This "large C, small B" group, individual users who operate like small businesses, is extremely important and may even be the most critical segment as the boundary between ToB and ToC keeps blurring.

From a commercial-ecosystem perspective, however, large enterprises and startups monetize differently.

Industry-leading video platforms like Douyin and Kuaishou can leverage their vast user bases to encourage users to create relevant content by providing AI video generation tools, thereby enriching their video ecosystems.

These large platforms do not need to sell the tools directly. They can monetize through the users who adopt them.

For startups, selling tools directly to consumers in China is unrealistic; in the future, only industry giants with large user bases may have that opportunity.

Large-model startups in China can sell tools only to businesses (ToB), not to consumers (ToC).

Targeting enterprise clients is the feasible path to commercialization.

Businesses are willing to pay because the videos they deliver generate revenue that covers the cost.

Therefore, in the process of AI video commercialization, success on the consumer side largely belongs to industry giants, while opportunities for entrepreneurs lie on the enterprise side.

At present, consumers have no clear idea of what to build with AI video model platforms, and the platforms themselves struggle to predict how consumers will use the videos.

Internet giants are likely to play a leading role

The core competitive elements are data, scenarios, and users. Data is crucial for training high-quality models, and scenarios determine a product's market adaptability and commercial potential. Internet giants excel in these three dimensions.

Currently, monthly active user growth in mobile internet is slowing, while monthly active users of AIGC apps are growing rapidly, with a penetration rate of 5% in June 2024 and room for further growth.

Future traffic distribution will be largely dominated by AI, with users naturally flowing towards platforms that offer more user-friendly, entertaining, and accessible content consumption.

This explains why ByteDance and Kuaishou attach such importance to video generation. ByteDance treats Jianying as a P0 (top-priority) project led by former Douyin CEO Nan Zhang;

Kuaishou treats Keling as a strategic project led by technical expert Pengfei Wan and backed by Cheng Yixiao, pooling the company's data, computing power, and financial resources.

Professional platforms covering the full film and television production process, on the other hand, still enjoy strong barriers built on their users.

Conclusion:

According to data from Toubao Research Institute, China's AI video generation industry had a market size of RMB 8 million in 2021 and is expected to grow to RMB 9.279 billion by 2026.
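The two Toubao figures imply an annual growth rate. The sketch below computes the compound annual growth rate (CAGR), assuming five compounding periods from 2021 to 2026. It only restates the forecast arithmetically and does not validate it.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1

start_2021 = 8e6    # RMB 8 million (2021 market size)
end_2026 = 9.279e9  # RMB 9.279 billion (2026 forecast)
rate = cagr(start_2021, end_2026, years=2026 - 2021)
print(f"Implied CAGR: {rate:.0%}")  # Implied CAGR: 310%
```

An implied CAGR of about 310% means the market would roughly quadruple every year. This shows how aggressive the forecast is.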

According to Qiming Venture Partners, AI investment in the primary market reached $22.4 billion in 2023, exceeding the cumulative total of investments in the previous decade.

Many industry experts predict that 2024 will be a major turning point for AI video generation, its "Midjourney moment".
