Suddenly taken down: where is the open-source model hailed as “the strongest on the Chinese server” headed?

09/18 2024


The removal from GitHub of QwenLM, the open-source code of Alibaba Cloud's Tongyi Qianwen large model, has sparked a crisis of trust. Despite its proactive stance on open sourcing, Alibaba Cloud still faces challenges in monetizing its efforts. The outbreak of price wars among large models, combined with a mismatch between technology and market demand, makes commercialization uncertain.

@TechNews Original

In the second half of 2024, domestic AI large models have faced significant challenges.

Recently, the AI coding application Cursor was released, and its powerful programming capabilities garnered significant attention. Shortly thereafter, on September 12, OpenAI released its latest model, o1, which raised logical reasoning and programming capabilities to a new level and was seen as demonstrating genuinely general reasoning. Competition in the international AI arena has intensified.

Also in September, the entire series of open-source code for Tongyi Qianwen QwenLM, hailed as the "strongest in the Chinese server," was removed from GitHub (one of the world's largest open-source code hosting platforms). Access to projects, including the new open-source king Qwen2.0, returned 404 errors. This not only triggered a crisis of trust among practitioners regarding the stability of open-source models but also exposed the difficulties faced by domestic large models on the path to commercialization.

Part.1

Removal Controversy, Perfunctory Responses

"The team hasn't disappeared; our GitHub organization was flagged for no apparent reason, so you can't see the content. We're already in contact with the platform, but we don't know the reason yet," said Lin Junyang, Alibaba's senior algorithm expert and head of the Tongyi Qianwen team, in a swift denial on social media following the incident.

However, this response failed to satisfy AI practitioners. Prior to this, some of them had just experienced the fallout from Runway's sudden removal of their open-source models from Hugging Face. Runway, known for its Stable Diffusion series, abruptly cleared its open-source models overnight, leaving countless developers who relied on these models stranded.

While the QwenLM model code has since been restored on GitHub, the Tongyi Qianwen team has not provided any further explanation for the incident. Practitioners are left feeling more uncertain and pessimistic about the potential for similar incidents to affect open-source model projects in the future.

Open sourcing is a key strategy for Alibaba's Tongyi large model.

Alibaba Cloud CTO Zhou Jingren once stated in a public forum, "Feedback from developers and support from the open-source community are crucial drivers of technological progress for Tongyi's large model."

The training and iteration costs of large models are extremely high, making them unaffordable for most AI developers and small-to-medium enterprises. From this perspective, Tongyi's "omni-modal, full-scale" open-source strategy, coupled with its long-standing reputation, has won it a dedicated following among developers.

Developers eagerly await every open-source release. As of October 2023, Alibaba Cloud's open-source community ModelScope ("Mota") hosted over 2,300 models, with more than 2.8 million developers and over 100 million model downloads. Alibaba's latest open-source model, Qwen2, gained global popularity: Qwen2-72B topped the Hugging Face open-source large model rankings just two hours after its release and has since held the top spot on the most authoritative open-source model leaderboard. Alibaba's latest quarterly earnings revealed that downloads of Tongyi Qianwen's open-source models have surpassed 20 million.

Tongyi's open-sourcing of its large model broke the monopoly held by overseas closed-source large models over domestic developers. As Alibaba Cloud CTO Zhou Jingren put it, "Alibaba Cloud's original intention is not to commercialize the model by keeping it in our hands but to help developers. Our open-source strategy is fully aligned with this goal." In his view, open sourcing is "the best and only way" to innovate in AI technology and models.

Part.2

Open Source vs. Closed Source: Who Wins?

Since the dawn of the large model era, the debate between open source and closed source has raged on.

Baidu founder Robin Li declared at the Baidu AI Developer Conference in April this year that "open-source models will increasingly lag behind." Later, in an internal speech, Li spelled out what he sees as the limitations of open-source models: while they are convenient to access and use, in commercial applications they often run into problems such as low GPU utilization and high inference costs. In contrast, another internet mogul, Zhou Hongyi, stated, "I have always believed in the power of open source."

Setting aside the verbal sparring between industry leaders, open source and closed source, two distinct development approaches, each have their merits in the current large model landscape.

The field has split into two camps. Open-source models such as Meta's Llama series, Stanford's Alpaca, and Alibaba's Tongyi large model demonstrate rapid, community-driven progress and innovation. Meanwhile, models such as OpenAI's GPT series, Anthropic's Claude, Baidu's Wenxin, and Huawei's Pangu remain closed source, maintaining technological leadership and advantages in commercial applications.

The open-source model fosters technology sharing and innovation, while the closed-source model safeguards commercial interests and technological advantages, supporting the commercialization of large models.

Some practitioners argue that from a model perspective, open-source models currently lag slightly behind closed-source models. However, as more open-source models iterate, their capabilities are quickly catching up. For instance, Tongyi's Qwen2.5 model has surpassed GPT-4 Turbo in overall performance.

"In terms of model quality, open-source models, due to their open code, are more easily tested and improved by the community. In contrast, closed-source models leverage high-quality data corpora, abundant data, computational power, advanced algorithmic capabilities, and robust financial support during development, ensuring high-quality outputs," one practitioner said.

The same practitioner also noted that data security is crucial for large models, as training involves sensitive user data, and data scraping may involve malicious content. Open-source models prioritize security and privacy since they are accessible to a wider audience. Security experts can review the code to identify and fix potential security risks. Conversely, closed-source models, with their proprietary code, rely on dedicated security teams for protection and vulnerability patching, mitigating external attack risks. In the eyes of industry insiders, open source and closed source are not mutually exclusive.

Part.3

Tongyi Qianwen: Where to Go From Here?

Beyond the debate between open source and closed source, achieving commercialization is the pressing challenge facing large models.

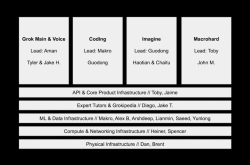

Alibaba's Tongyi large model ecosystem can be broadly divided into two layers: the large model base and application-end product models. In September 2022, DAMO Academy launched the "Tongyi" large model series, establishing the industry's first AI base. After over a year of technological advancements, Tongyi has evolved from its initial version to version 2.5. To cater to diverse computing resource demands and application scenarios, Tongyi has introduced eight large language models ranging from 500 million to 110 billion parameters, along with models tailored for specific applications like Qwen-VL (for visual understanding) and Qwen-Audio (for audio understanding).

In addition to developing the underlying large model, the Tongyi team has also invested heavily in application-end products. At last year's Alibaba Cloud Computing Conference, CTO Zhou Jingren unveiled eight product models, including Tongyi Lingma (intelligent coding assistant), Tongyi Zhiwen (AI reading assistant), Tongyi Tingwu (AI work and learning assistant), Tongyi Xingchen (personalized character creation platform), Tongyi Dianjin (intelligent investment research assistant), Tongyi Xiaomi (intelligent customer service assistant), Tongyi Renxin (personal health assistant), and Tongyi Farei (AI legal advisor). Meanwhile, the Tongyi Qianwen app was officially launched, allowing all users to experience the latest model capabilities directly through the app. Developers can integrate these models into their AI applications and services via web embedding, API/SDK calls, etc.

Given Tongyi's open-source nature, commercialization poses a complex challenge.

Upon analysis, "TechNews" groups current approaches to large model commercialization into four broad types. On the consumer side: first, offering API interfaces and charging users based on usage; second, using large models to empower products, which raises demand and pricing, as seen in paid subscriptions for ChatGPT and Midjourney. On the business side: first, generating additional traffic through AI functionalities and then monetizing it through advertising; second, using AI internally to enhance efficiency and reduce costs, as Baidu's Wenxin model does for its products.

At present, Alibaba appears to be exploring both ToB and ToC commercialization paths simultaneously. In April 2023, Alibaba announced that all its products would integrate the "Tongyi Qianwen" large model for comprehensive transformation. In terms of enterprise empowerment, Alibaba Cloud is opening up its AI infrastructure, including its Feitian cloud operating system, chips, and intelligent computing platforms, along with Tongyi's capabilities, to all enterprises. In the future, each enterprise can either leverage Tongyi Qianwen's full capabilities or train its own enterprise-specific large models by combining its industry knowledge and application scenarios. Meanwhile, the eight product models, including Tongyi Lingma, Tongyi Zhiwen, and Tongyi Tingwu, have garnered recognition from many consumer-end users.

While the path to monetization remains unclear, the price war among AI large models has already commenced. Since May this year, several domestic large model vendors, including ByteDance, Alibaba, Baidu, and Zhipu AI, have adjusted their pricing strategies. Tongyi Qianwen's flagship model, Qwen-long, has seen its API input price plummet by 97%, from 0.02 yuan per thousand tokens to 0.0005 yuan per thousand tokens.
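The scale of that cut is easy to check with a few lines of arithmetic. The sketch below uses only the two Qwen-long input prices quoted above; the one-billion-token workload is a hypothetical figure chosen purely for illustration, and the function names are our own.

```python
# Prices quoted in the article, in yuan per thousand input tokens.
OLD_PRICE_PER_1K = 0.02
NEW_PRICE_PER_1K = 0.0005


def percent_drop(old: float, new: float) -> float:
    """Return the relative price reduction as a percentage."""
    return (old - new) / old * 100


def input_cost(tokens: int, price_per_1k: float) -> float:
    """Return the input cost in yuan for a given number of tokens."""
    return tokens / 1000 * price_per_1k


drop = percent_drop(OLD_PRICE_PER_1K, NEW_PRICE_PER_1K)

# Hypothetical workload (an assumption, not a figure from the article).
monthly_tokens = 1_000_000_000
before = input_cost(monthly_tokens, OLD_PRICE_PER_1K)
after = input_cost(monthly_tokens, NEW_PRICE_PER_1K)

print(f"price drop: {drop:.1f}%")
print(f"1B input tokens: {before:,.0f} yuan -> {after:,.0f} yuan")
```

The computed reduction is 97.5%, consistent with the roughly 97% figure cited. Under the assumed workload, the input bill falls from about 20,000 yuan to about 500 yuan, which shows how little revenue per token remains after the cut.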

This profoundly reflects the competition among large model vendors in technology, market, and strategy. From a commercialization perspective, pure price wars can attract users in the short term, creating a head-start effect. However, in the long run, without technological innovation as a foundation, it's challenging to sustain a competitive edge.

Large models offer immense potential, but deploying them is difficult. Applying a general-purpose large model directly to a specialized scenario such as healthcare or legal consulting, for instance, quickly runs into practical obstacles.

Amid the broader push for cost reduction and efficiency enhancement, B2B customers are increasingly focused on cost-benefit analysis when selecting large models. Accurately identifying market demands and providing tailored solutions for niche market enterprises is crucial for Tongyi's commercialization strategy.

In the consumer market, AI technology is not yet indispensable for most consumers, and the functionalities of various applications are not yet irreplaceable.

This dilemma for Tongyi's commercialization mirrors the challenges faced by most AI large model enterprises.