A Flop Right After Launch? iPhone 17 Camera Glitches, a Problem That Is Becoming the Industry Norm

09/22 2025

The flaws do not overshadow the merits.

One benefit of buying an iPhone is that, because Apple is a global brand, any issue its phones run into is closely watched and reported by people worldwide.

Sure enough, problems surfaced soon after the first retail units reached buyers...

It was launch day, after all. As soon as I woke up, I opened social media and, sure enough, was greeted by a flood of posts about the iPhone 17. Lei Technology also sent someone to queue early at the Tianhuan Apple Store to get the phone firsthand and produce content for our readers. If you're interested, stay tuned!

Interestingly, amid the joy over getting the new phone and the outrage over scalpers' markups, there were also some dissenting voices.

Perhaps Apple's Indian programmer buddies knew we were about to celebrate a festival. Before the review experts could even warm up their new devices, they discovered a rather amusing bug in the photography of their "flagship of the year," which they had purchased at great expense.

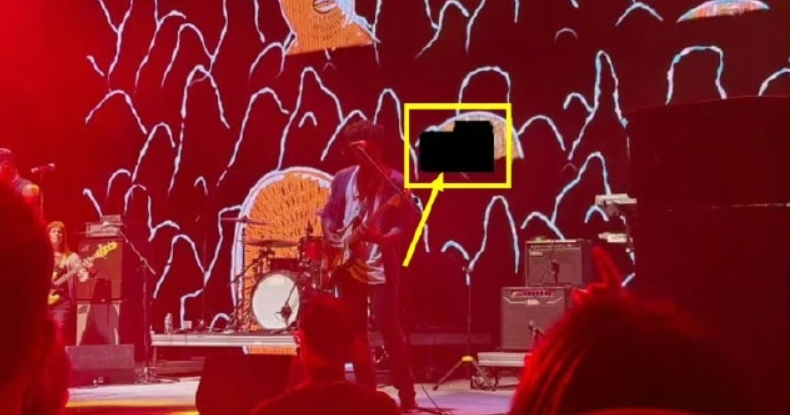

(Image Source: CNN)

You read that right: a black block surrounded by dozens of irregular white curves.

This isn't some concert stage setup. According to tech media outlet MacRumors, Apple has acknowledged camera malfunctions in the iPhone 17 series and iPhone Air. When exposed to direct, extremely bright LED lighting, photos may exhibit black squares and white curves. Currently, this issue can be reliably replicated, making it difficult to dismiss as a mere fluke.

Instantly, a wave of complaints arose.

Did the iPhone 17 just launch with a bug?

Here's what happened.

Recently, Henry Casey, a review editor from CNN Underscored, published an early review article on the iPhone Air. In addition to praising the product's performance and quality, he also highlighted his dissatisfaction with its speaker and imaging capabilities. The most crucial point he made was this:

"I also noticed a strange imaging issue during the concert. On both the iPhone Air and iPhone 17 Pro Max, approximately one out of every ten photos had small sections blacked out, including some white wavy lines on the box and the large LED board behind the band."

(Image Source: CNN)

Honestly, if he hadn't pointed it out, I might have mistaken the glitch for a quirky piece of stage design...

Of course, joking aside, I was actually quite curious about the cause of this bug. After all, for many users, concerts and live events are among the most critical shooting scenarios for smartphones. Being unable to capture live moments would be a significant issue.

Apple's explanation was rather vague, merely stating that direct exposure of the camera to extremely bright LED screens could induce this situation.

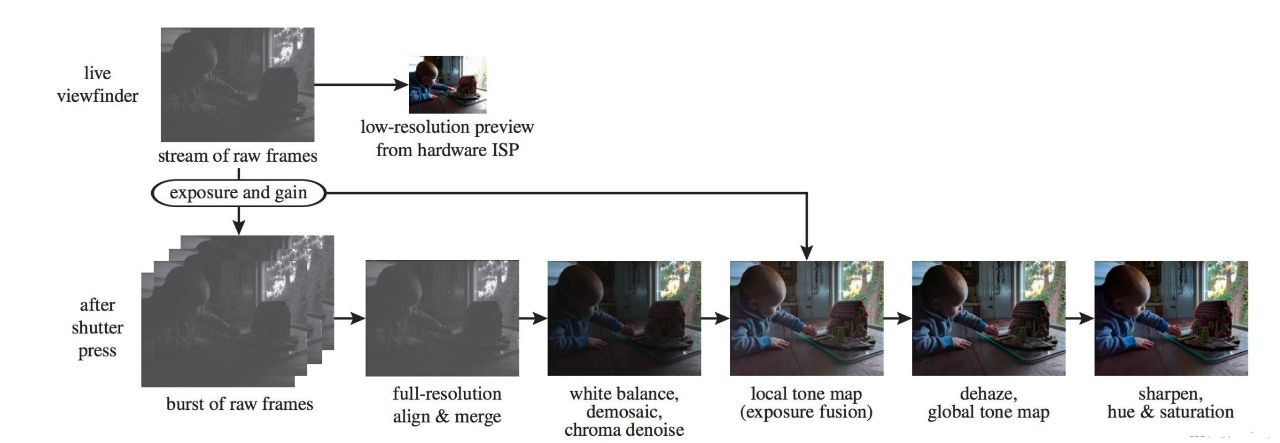

According to online discussions, modern smartphones emphasize "computational photography," with Apple being a leading proponent. Especially in high-contrast scenes like concerts, where half the area is pitch-black and the other half is as bright as day, the system automatically enables HDR mode to allow you to see details in the dark and outlines in the bright areas simultaneously.

With HDR active, the iPhone rapidly captures several frames at different exposure levels within a fraction of a second. For instance, one frame might be heavily underexposed so that the stage lights and screen details are not blown out; another might be normally exposed to capture mid-tones; and yet another might be heavily overexposed to reveal details in the darkest corners.

(Image Source: X)

Next, the image signal processor (ISP) and neural processing unit (NPU) "stitch" these frames together to create the final, detail-rich image you see.
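To make the "stitching" idea concrete, here is a minimal sketch of exposure fusion in Python. It is not Apple's pipeline, which is not public; it simply weights each pixel by how close it is to mid-gray, a common textbook approach. The function names, the `sigma` value, and the three 4-pixel frames are all made up for illustration.

```python
import math

def well_exposedness(v, sigma=0.2):
    """Weight is highest for mid-gray (0.5) and falls off toward 0.0 and 1.0."""
    return math.exp(-((v - 0.5) ** 2) / (2 * sigma ** 2))

def fuse(frames):
    """Blend same-sized grayscale frames (values in [0, 1]) pixel by pixel."""
    fused = []
    for pixels in zip(*frames):
        weights = [well_exposedness(p) for p in pixels]
        total = sum(weights)  # always > 0 because exp() is never zero
        fused.append(sum(w * p for w, p in zip(weights, pixels)) / total)
    return fused

# Hypothetical pixels: [dark corner, mid-tone, bright sign, LED screen]
under  = [0.00, 0.10, 0.45, 0.60]   # heavily underexposed frame
normal = [0.05, 0.50, 0.95, 1.00]   # normally exposed frame
over   = [0.40, 0.95, 1.00, 1.00]   # heavily overexposed frame

result = fuse([under, normal, over])
print([round(v, 2) for v in result])
```

In this toy version, the dark corner takes most of its value from the overexposed frame and the LED screen takes most of its value from the underexposed one, which is the behavior the paragraph above describes.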

The problem lies with the concert's LED screen. It does not stay continuously lit but flickers at a frequency too high for the human eye to perceive (a technique known as PWM dimming). When the iPhone captures frames in rapid succession, one frame might catch the LED at its brightest moment, resulting in overexposure; the next might catch it while it is off, so the screen area comes out black.
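The flicker argument can be checked with a little arithmetic. The sketch below computes what fraction of an exposure window a PWM-driven LED spends switched on. The 1 kHz frequency, 25% duty cycle, and shutter times are assumptions chosen for illustration, not measured values from any real stage screen or iPhone, and the model ignores sensor effects such as rolling shutter.

```python
def captured_light(start_us, exposure_us, period_us, on_us):
    """Fraction of the exposure window during which the LED is on.

    The LED is on for the first `on_us` microseconds of every PWM period
    and off for the rest. All arguments are integers in microseconds.
    """
    end_us = start_us + exposure_us
    lit = 0
    # Walk over every PWM cycle that overlaps the exposure window.
    cycle = start_us // period_us
    while cycle * period_us < end_us:
        on_start = cycle * period_us
        on_end = on_start + on_us
        lit += max(0, min(end_us, on_end) - max(start_us, on_start))
        cycle += 1
    return lit / exposure_us

PERIOD_US = 1000  # hypothetical 1 kHz PWM
ON_US = 250       # hypothetical 25% duty cycle

bright = captured_light(0, 125, PERIOD_US, ON_US)        # 1/8000 s shutter, LED on
dark = captured_light(500, 125, PERIOD_US, ON_US)        # same shutter, LED off
averaged = captured_light(500, 20000, PERIOD_US, ON_US)  # 1/50 s shutter
print(bright, dark, averaged)  # prints 1.0 0.0 0.25
```

Under these assumptions, two very short exposures taken half a millisecond apart see the same screen as fully lit and fully dark, while a long exposure averages the flicker out to the duty cycle.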

When the algorithm encounters two photos with such drastically conflicting information in the same location, it becomes confused and can only output a jumble of corrupted, overflowing, or undefined data.

Ultimately, what appears in the photo are those eerie black boxes, white lines, and noise.

Of course, this is just one explanation from netizens, but one thing is certain: this isn't due to your lens glass shattering or your CMOS sensor being burned by a laser. At worst, it's a minor software algorithm bug.

Apple, as usual, stated that it plans to fix the issue through a subsequent software update but hasn't announced a specific patch release time yet.

When Camera Flops Become the Norm in the Smartphone Industry

At this point, some readers might be fuming:

"This phone costs over ten thousand yuan, and it still has such seemingly basic software errors? Cook, you old geezer, all you care about is making money!"

My assessment is: don't rush to judgment.

Such computational photography mishaps in specific scenarios are not uncommon across the smartphone industry. Apple is not the first to run into this kind of issue, nor will it be the last.

Take the controversial "Photoshopped moon" mode as an example.

(Image Source: X)

A few years ago, didn't various manufacturers love promoting their "Super Moon" mode? Almost every manufacturer claimed their phone could capture detailed images of the moon with visible craters. And when photography novices tried it, sure enough, it worked, leading people to exclaim, "Wow, technology is amazing!"

However, some meticulous netizens conducted an experiment: they placed a blurry image of the moon on a computer screen and took a photo of it with their phone. The resulting photo was still a high-definition image of the moon. Some even discovered that as long as they found any round, glowing object at night, these phones could capture a clear image of the moon.

Ultimately, this issue was resolved by some manufacturers adding a feature toggle to their cameras.

Now, let's discuss "HDR synthesis ghosting," which nearly everyone has encountered.

(Image Source: Google)

This phenomenon is even more widespread than the moon-mode controversy and better illustrates the algorithm's limitations in handling dynamic scenes. When you enable HDR mode and capture a moving subject, such as a pet running around or a friend waving a hand, and then zoom in on the photo, you are likely to notice a semi-transparent "ghost" or double image along the subject's edges.

This "ghost" arises in much the same way as the iPhone 17 bug. As mentioned earlier, HDR requires capturing multiple frames in rapid succession for synthesis. If your subject moves during that fraction of a second, the frames no longer match, and the algorithm may produce ghosting when it tries to align and merge them.

To address this issue, Google designed a new spatial merging algorithm that decides, pixel by pixel, whether image content should be merged, and pushed it to Pixel series products through a subsequent update.
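The idea of a per-pixel merge decision can be illustrated with a toy example. This is not Google's algorithm, whose details are not covered here; it is a simple threshold test, with a hypothetical function name, threshold, and pixel values, that only averages an alternate frame into the reference where the two agree.

```python
def merge_with_rejection(reference, alternate, threshold=0.1):
    """Average two aligned grayscale frames, but keep the reference pixel
    wherever the frames disagree by more than `threshold`."""
    merged = []
    for ref, alt in zip(reference, alternate):
        if abs(ref - alt) <= threshold:
            merged.append((ref + alt) / 2)  # frames agree: merge to cut noise
        else:
            merged.append(ref)              # frames disagree: likely motion, skip
    return merged

# Hypothetical 4-pixel frames; a waving hand covers the third pixel
# in the reference frame but has moved away in the alternate frame.
reference = [0.20, 0.22, 0.80, 0.21]
alternate = [0.22, 0.20, 0.25, 0.19]

merged = merge_with_rejection(reference, alternate)
print([round(v, 2) for v in merged])
```

A plain average would turn the third pixel into a half-transparent blend of hand and background, which is exactly the ghost. Rejecting the mismatched pixel keeps the hand solid at the cost of slightly more noise in that spot.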

Yes, just like what Apple is doing now.

Summary: A Minor Issue, and the iPhone 17 Is Still Worth Looking Forward To

Has the iPhone 17 series flopped?

My assessment is that while there are indeed some bugs, they're not major issues.

Ultimately, this situation arises because physical laws firmly cap the upper limits of smartphone imaging, making the use of algorithms inevitable. While computational photography has become an ideal solution to overcome these physical bottlenecks, it also brings a series of unpredictable problems.

(Image Source: Lei Technology)

If you're among the first batch of iPhone 17 users and encounter this issue, don't panic or rush to after-sales service.

Firstly, based on our shooting tests with the iPhone 17 Pro Max against a MiniLED high-brightness screen, it's extremely difficult to replicate this issue in real life. We tried for five minutes without encountering it once. It likely requires the complex environment of a concert to manifest.

Secondly, Apple's OTA update speed is quite fast. There's a good chance the next update will fix this issue, as it's not a hardware design problem. The problem might already be resolved before you even encounter it.

As for getting worked up over patches, there is even less reason for that.

Such situations are common in the industry. When the Google Pixel 6 first launched, its under-display fingerprint recognition was as slow as a sloth, but it was quickly optimized through a software update. Chinese flagship models also frequently receive rapid updates after launch to fine-tune the camera's color science and focusing logic based on feedback from the first batch of media reviews and users.

The existence of "patches" proves that manufacturers are continuously striving to improve their products.

Of course, it would be even better if these optimizations could be completed before the product's official release.