AI audio "leader" just landed on Google V2A! The first fully automated AI tool for video + audio, completely open-source and free

![]() 06/20 2024

06/20 2024

![]() 563

563

The video generation AI released in recent days has received continuous praise. Whether it's the new Runaway model Gen-3 Alpha or the Dream Machine launched by Luma AI, they both have realistic visuals, diverse film narrative techniques, and a strong artistic atmosphere.

Currently, top tools like Sora generate videos without sound, and audio is an essential step to make AI videos more realistic. If AI can complete the workflow from script/image-video-dubbing, that would truly be perfect.

Early yesterday morning, Google DeepMind quietly released the V2A (Video-to-Audio) system. This system can directly dub videos based on the content of the visuals or manually entered prompts.

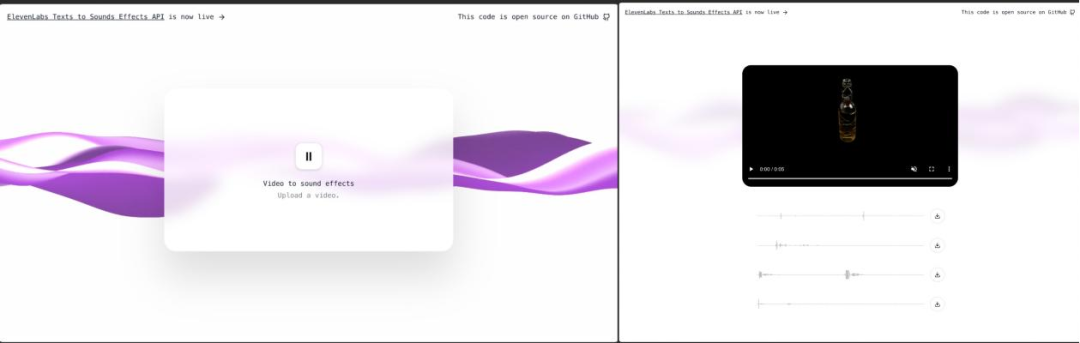

Within a few hours, another AI audio cloning "leader" ElevenLabs released an API for text-to-audio models and created a Demo application based on this API. This is currently the only fully automated AI tool that combines video and audio, and it's completely open-source and free to use online.

Two flowers bloom, each with its own beauty. Since Google does not intend to open the V2A system to the public, let's try out this version from ElevenLabs first~

/ 01 / Understanding + alignment, fully automated generation, but unable to comprehend complex visuals

AI videos are bidding farewell to silence, and ElevenLabs is adding the final touch to the AI workflow for creating blockbuster films "by hand." I can't wait to dub the AI-produced videos I made a few days ago.

▲ElevenLabs generates dubbed videos step1→step2

I fed ElevenLabs luma-generated fire meme videos, OpenAI member rage videos, "The Shining" movie clips, and Gen-3 example videos to see what sounds it would pair with these visuals.

The effects are pretty good! Among them, the dubbing for "a singer singing solo," "a woman running towards a launching rocket," and "a white-haired woman laughing" fit the scenes well, while the dubbing for "a woman breathing underwater" and "a man with a fire behind him" is realistic and detailed, giving a strong blockbuster feel.

After testing about 20 videos, ElevenLabs can automatically generate audio tracks synchronized with video content, and the generated dubbing basically covers all types of film dubbing:

- Environmental sounds, such as underwater breathing, burning sounds, rolling sounds, firecracker sounds, musical instrument playing, white noise, noisy voices, etc.;

- Human voices, including crying/laughing, dialogue/monologue, and singing, but cannot generate narration;

- Music, such as happy music for circus illustrations and horror music for the twins' scenes in "The Shining";

- Sound effects, such as gunshots, comedic scratching sounds, and mechanical breakdown sounds during "OpneAI members fighting".

Compared to other AI dubbing tools, ElevenLabs is the first to achieve fully automated generative dubbing for videos, able to dub videos without manual input of prompts and create 4 audio tracks using AI for selection, without manual alignment of audio and video.

ElevenLabs understands the visuals of the video, reads the elements within, knows what is happening in the visuals, and what sounds should appear, automatically matching environmental sounds, human voices, music, and sound effects, and performing well in lip synchronization.

From the perspective of sound itself, I found that ElevenLabs performs well in terms of sound fidelity, with very realistic sounds for underwater breathing, burning, rolling, firecrackers, and even white noise and noisy voices, with rich sound sources and decent sound quality.

The most frustrating point is that ElevenLabs has fewer audio track options (only 4). I fed the same video to ElevenLabs multiple times, but I could only get the same 4 audio tracks.

The lack of