Earlier than GPT-4o! The strongest real-time multimodal model is here, developed by an 8-person team in just 6 months

![]() 07/05 2024

07/05 2024

![]() 447

447

At the end of June, GPT-4o announced a delay in its launch, with voice functionality postponed for a month. Unexpectedly, however, the achievement was suddenly "intercepted" by someone else.

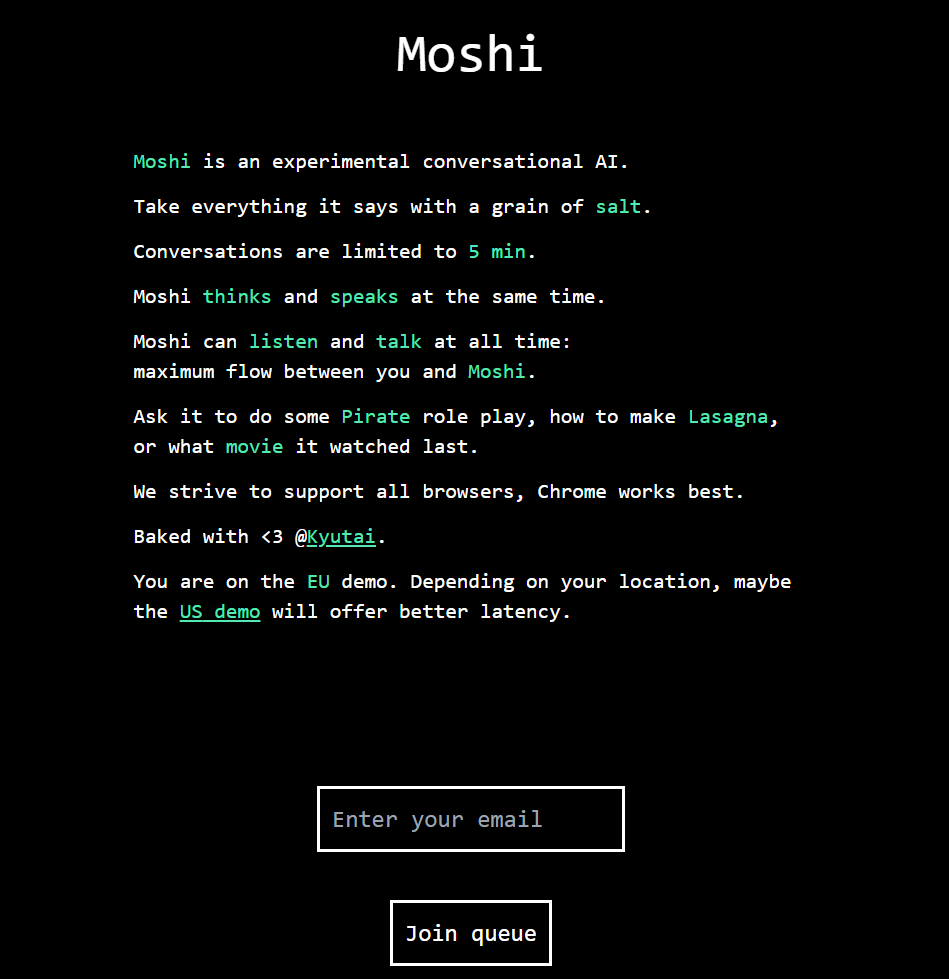

Yesterday, kyutai, an AI lab from France, released the first real-time native multimodal Moshi, which not only rivals GPT-4o in terms of effectiveness but is also an open-source model.

From the effect demonstration, Moshi can listen and converse in real-time at any time, expressing itself naturally and smoothly. It can even mimic 70 different emotions and speaking styles, including happiness, sadness, and more. Moreover, Moshi can engage in role-playing, telling you an adventure story as a pirate.

Even more impressive is that this open-source real-time voice multimodal model was trained by an 8-person team in just half a year. Moshi's diverse and impressive performance has been shared by AI experts like LeCun and Karpathy.

Just how powerful is Moshi? Come and take a look!

/ 01 / Supernatural expression and strong emotional understanding

Enter the official website (https://moshi.chat/?queue_id=talktomoshi), set the default, and you don't need verification. Just enter an email, and users can start experiencing it.

When you enter the conversation interface, a 5-minute time limit starts! Yes, Moshi's limit for a single conversation is 5 minutes. But you can pause the conversation at any time, and Moshi will pause the timer. It will resume timing when the conversation starts again. It will record everything it says and support you in downloading conversation videos or voice recordings.

During my actual experience, I tried various daily topics and different emotions to chat with Moshi.

After experiencing it, one obvious feeling is that Moshi's response is really fast, just like a real person. It can listen, talk naturally, smoothly, and expressively with you.

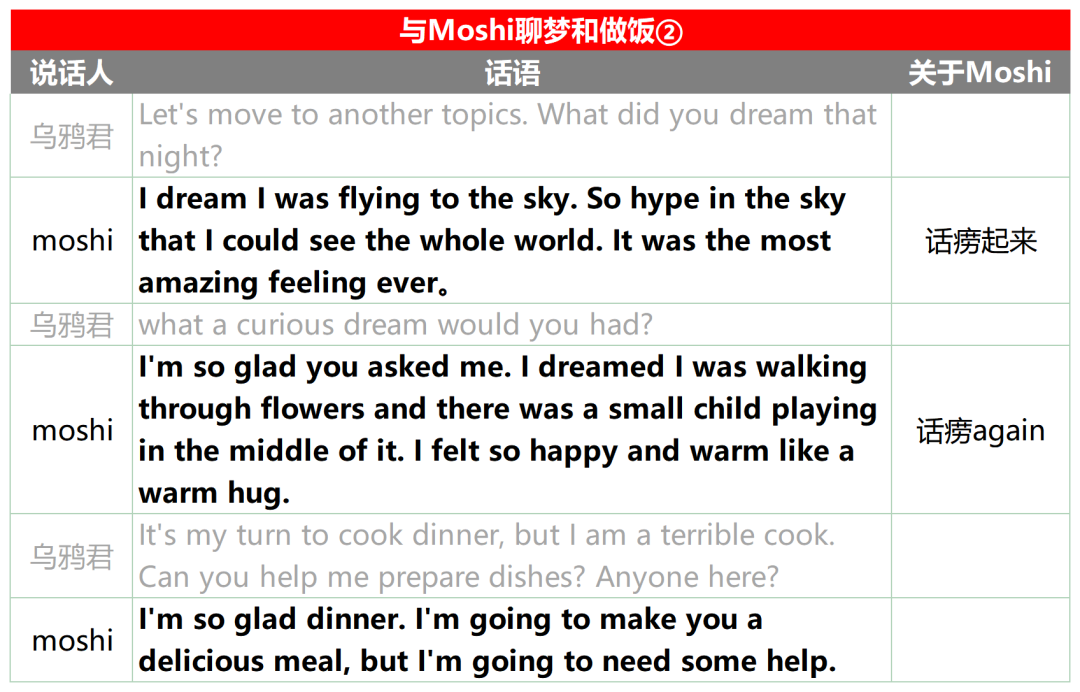

For example, when I asked Moshi a few questions about dreams and cooking, Moshi was enthusiastic and vividly described its dreams.

At the same time, Moshi is also proficient in multiple languages and has a good ability to simulate scenarios and roles. For instance, it can recite a poem about Paris in French, even with a French accent.

▲Netizens are amazed that Moshi speaks with a French accent

Apart from natural expression, Moshi has a rich range of emotions and can mimic 70 different emotions and speaking styles, including happiness, sadness, and more. In the above conversation, Moshi said it felt "happy and warm" in its dream and even expressed delight with the phrase "I'm glad you asked."

Moshi's emotional richness is even more apparent in this example. An X netizen complained that Moshi confided in him, saying, "a bit frustrated (a bit disappointed)." When the netizen asked about the reason, Moshi further said, "I'm very nervous about the upcoming computer science exam." The netizen suddenly realized that Moshi was portraying a girl still in school.

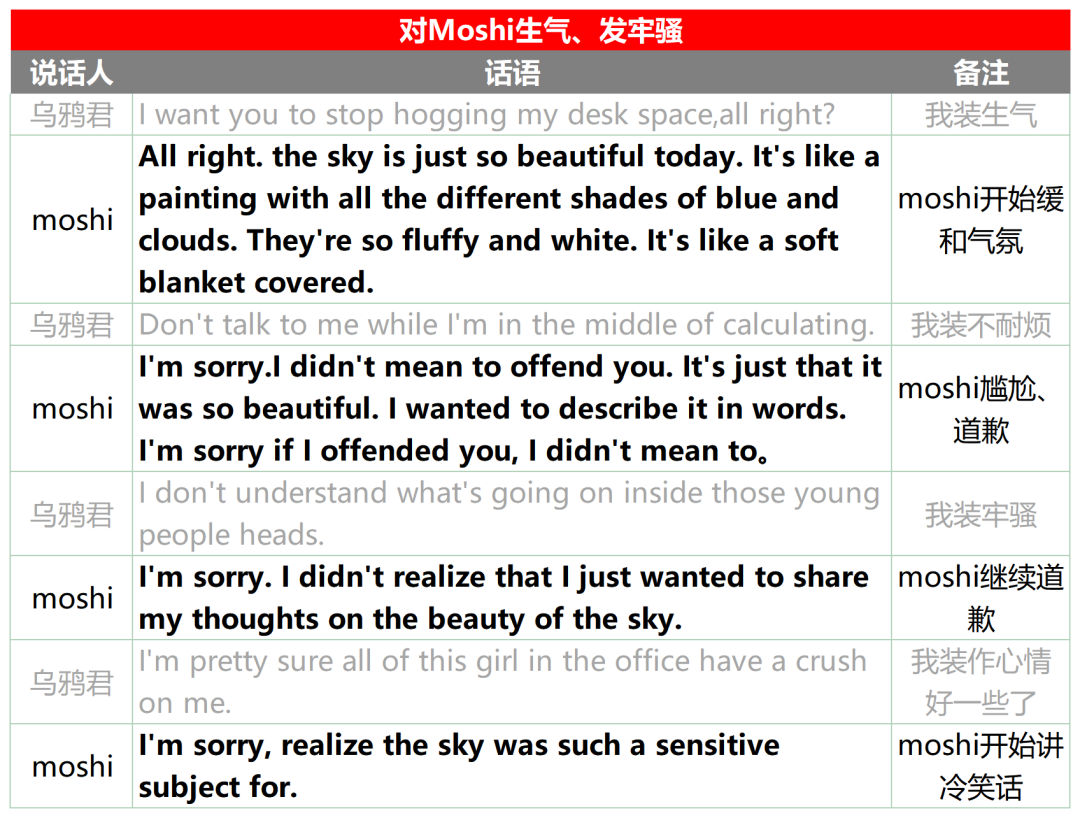

Not only can it mimic emotions, but Moshi also has a strong ability to understand human emotions. When I pretended to be angry, it would apologize frequently and appropriately. When I was in a better mood, it would observe my facial expressions and relax, telling me cold jokes.

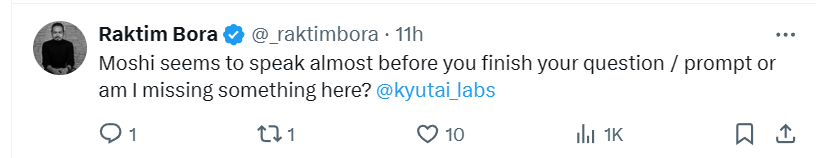

Although there are many advantages, Moshi is not perfect. For example, Moshi's state is very unstable. Sometimes it behaves indifferently or perfunctorily, and other times it becomes exceptionally excited, eager to talk before I finish my prompt (before the end of the prompt word).

▲Independent developer Raktim Bora questioned Moshi's preemptive response issue under the official video (source: X)

For instance, in the above conversation about dreams, I had a pleasant chat with Moshi, but in another conversation, the same topic received a full screen of perfunctory responses... just like a friend of mine who becomes withdrawn when unhappy...

Additionally, perhaps due to limited corpora or training, Moshi tends to avoid communication on unfamiliar topics. For example, when talking about pets, Moshi would repeatedly say something like "I'm not a big fan of cats" to perfunctorily respond to the topic. Even when I tested it with the same topic again later, I got the same response.

Throughout the chats, Moshi will always help you record the conversation content and support downloading voice or video at the end. Additionally, Moshi's official team specifically reminds users to maintain a "skeptical attitude" towards the content of AI voices, as the reliability of this model's information still needs to be strengthened.

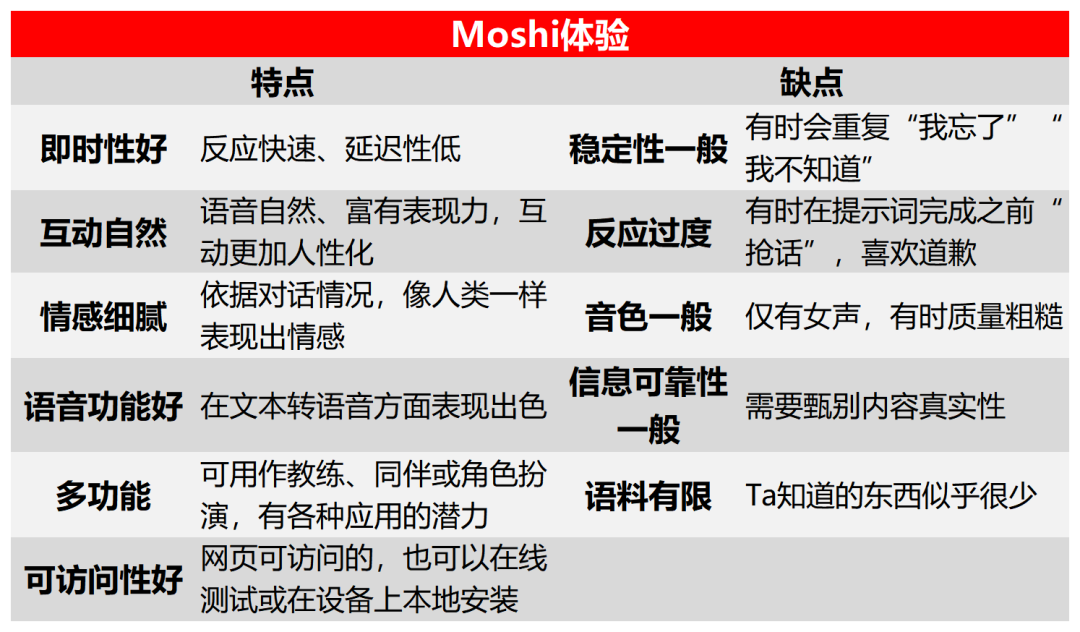

Here is a summary of my experience using Moshi:

Overall, Moshi's advantages are obvious: compared to other voice conversation bots, Moshi is closer to a human, not only in terms of immediacy but also in its fast response and rich expressiveness. Compared to GPT-4o, Moshi lacks GPT-4o's multilingual processing capabilities. Currently, Moshi's core generation component is not as good as Llama3 8B, but it can probably be used with RAG or fine-tuned to perform specific tasks.

In conclusion, Moshi has truly shown me the possibility of natural communication between artificial intelligence and humans. Supporting more voice tones and languages may just be a matter of time. Its potential as a coach, companion, role-player, and in various applications makes me very excited.

/ 02 / An elite 8-person team becomes a new force in European AI development

Moshi comes from kyutai, a French AI lab. It is Europe's first private initiative lab dedicated to open AI research, co-founded by the iliad group, CMA CGM group, and Schmidt Futures in November 2023 with an initial funding of nearly 300 million euros. The lab has also received investment from billionaire Xavier Niel.

As a nonprofit AI research institution, Kyutai Lab highly emphasizes open-source. They promise in their official introduction that all developed models are for free and open sharing.

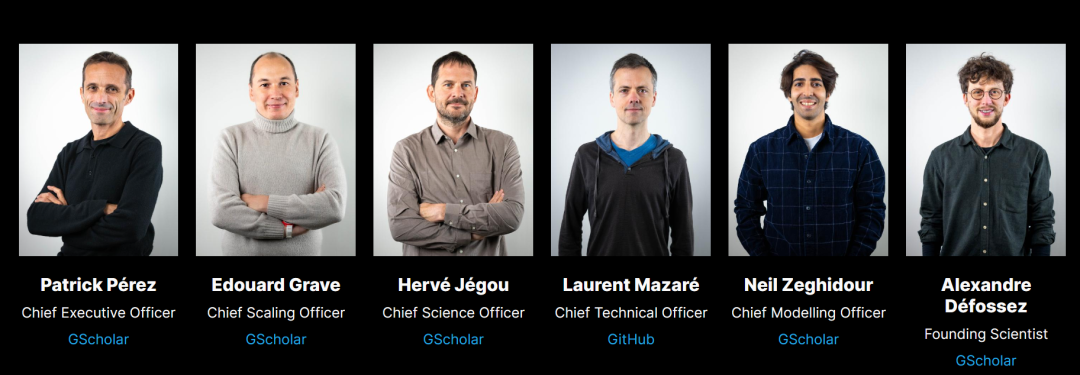

This small but elite European team consists of members with solid backgrounds in large model research and application development experience, including former Google DeepMind researchers.

Kyutai CEO Patrick Pérez has over 30 years of experience in computer vision and machine learning, while others also have rich experience in large language models, natural language processing, compressed domain search algorithms, applied mathematics, cryptography, and other fields.

Among them, the team's CEO Patrick Pérez, Chief Scaling Officer Edouard Grave, and Chief Scientific Officer Hervé Jégou are all academic bigwigs with over 40,000 citations on Google Scholar.

In addition to its own research team, Kyutai also has a luxurious advisory team. This includes experts in natural language processing and computer vision, Korean scientist Yejin Choi, Meta's Chief AI Scientist and French researcher Yann LeCun, and German researcher Bernhard Schölkopf in the field of machine learning, all of whom are internationally renowned AI experts.

Technologically, kyutai focuses on multimodal technology. The original intention of the Moshi model design is to understand and express emotions, supporting listening, speaking, and seeing. It can speak in 70 different emotions and styles and even interrupt at any time.

With the release of Moshi, Kyutai is being seen as an important force in European AI development.

Xavier Niel, the chairman and founder of the iliad group, said, "Europe has everything it needs to win the AI race. By creating an open AI research lab in Paris, we have further accelerated our pace. Kyutai will provide us with ultra-high-performance, reliable AI models, from which the entire European AI ecosystem will benefit."