Insights from Vinod Khosla, the first investor in OpenAI: AI deflation will be unstoppable

07/12 2024


This June, The Information invited renowned venture capitalist and early investor in OpenAI, Vinod Khosla, to discuss his insights on the development of AI technology and how AI is reshaping human-computer interaction, the entrepreneurial ecosystem, and the future of professional services.

Vinod Khosla is a prominent Indian-American entrepreneur and investor. He is not only the founder and chairman of Khosla Ventures, a venture capital firm, but also the first investor in OpenAI.

During this interview, Vinod Khosla shared many valuable perspectives. Below are some of the core viewpoints excerpted by Wuya Jun from Vinod Khosla's discussion:

1. The cost of large models will be one-fifth to one-tenth of today's cost in a year. I suggest all startups ignore computation costs because any money you spend optimizing software will become worthless within a year.

2. Investing in cloud computing infrastructure is not wise because companies that buy GPUs to build cloud computing are likely to lose out to giants like Amazon and Microsoft.

3. All these applications will rely on large language models optimized for specific domains. Almost all professional knowledge will be commoditized by AI, whether it's primary care physicians, teachers, structural engineers, or oncologists. Each professional field could become a successful startup project. However, during this process, specialized domain knowledge will eventually be integrated into broader fields like primary care and mental health treatment.

4. AI will bring about significant deflation as the cost of many things approaches zero due to the disappearance of labor content. If you reduce the number of doctors to just 200,000, with 80% of doctors disappearing, consumers will spend $250 billion less on healthcare, leading to deflation.

5. In an AI economy prone to deflation, people may pay more for experiences. I don't think Taylor Swift is just about music; it's a cultural phenomenon, an experience for young people.

/ 01 / Apple's Latest Moves

Jessica Lessin: I think there was significant AI news this week with Apple's AI announcement. Of course, a key part of it was Apple's collaboration with OpenAI. Vinod was an early investor. So I have to ask Vinod, what do you think of Apple's news?

Vinod Khosla: First, I haven't fully caught up with all the news yet, but I'll look into it this weekend. However, Apple did need to do something; Siri's reputation has been getting worse and worse.

But more importantly, they've demonstrated something very significant: computers interacting directly with you. You won't need to struggle to retrieve information from computers; computers will retrieve information for you. I wrote a blog post a few months ago discussing the idea that computers will learn from humans, not the other way around.

From OpenAI's perspective, this cements OpenAI's position as having the best large language model. Obviously, many companies wanted this business, and many options were evaluated. So it's great to see Apple recognize that the best AI is at OpenAI. I believe they also considered where the best AI would be in the next year or two. So in many ways, this is a validation for OpenAI and an important milestone in how humans interact with machines.

Jessica Lessin: I'd like to add that they did mention Gemini is coming soon, possibly in collaboration with Anthropic. I mention this not to detract from OpenAI's excellent model but because it raises a question for me: if Apple tries to rebuild an app store with this intelligent technology, aiming for broad collaboration and guiding users to different services, it reminds me of what they said when they first launched Siri, that they would guide you to OpenTable for bookings, etc. Vinod, what do you think about Apple's technical direction or, more broadly, the discovery of applications and services in AI, as it directly relates to the entrepreneurial ecosystem?

Vinod Khosla: First, it's wise for Apple to keep their options open, but they've made a clear decision to embed OpenAI and integrate it into their operating system, deeply enough to make Elon Musk angry and threaten to ban Apple devices. But I think the bigger thing is how we interact with computers. Siri was the beginning of the evolution of human-computer interfaces, which will eventually become agent-based interfaces. I think this is big news.

Sam Lessin: Vinod, can I ask you a question? You and I discussed the future of large vs. small models last year. An interesting point Apple demonstrated is that they can do a lot with small models on devices. So in the long run, what position will expensive and competitive large models occupy? Will they just become content? I'm curious about your thoughts on how all this will evolve, even though you haven't fully caught up.

Vinod Khosla: I think the things small models do on devices will be different from what large models do. If you want human-level intelligence, you'll need large models; small models can't replace them, but they do excel in certain aspects. If you want low-latency responses, you need a short path, which requires small models on devices. Actually, I think Intel even bundles a small language model with their new processors, but it's designed for responsive interfaces, not as a source of intelligence.

Sam Lessin: Can you imagine a future where you can talk to many people, some with an IQ of 50, some with 100, and some with 10,000? The question is, would you be willing to pay more to ask someone with an IQ of 10,000 or someone with 70, who might know your email content and daily questions? Right now, we essentially throw all our questions at a very expensive PhD student. In the future, I think we'll be smarter about what level of intelligence different questions require. What do you think about this competition?

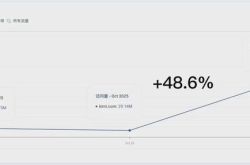

Vinod Khosla: I might disagree. I think "expensive large models" will become very cheap. I bet their cost will be one-fifth to one-tenth of today's within a year. I suggest all startups ignore computation costs because any assumptions you make and any money you spend optimizing software will become worthless within a year, so forget about it. Rely on market competition between cloud providers, Gemini, and OpenAI to drive costs down to insignificant levels, to the point where it doesn't matter. If you pay a certain monthly fee for iPhone services, and if this cost is 10% or less of that, it doesn't matter.
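As a rough illustration of the arithmetic behind this claim, the sketch below projects a compute bill one year out under the one-fifth to one-tenth range Khosla cites. The $10,000 monthly bill and the 30% optimization saving are hypothetical figures chosen only for illustration; neither comes from the interview.

```python
# Hypothetical inputs (assumptions, not from the interview).
MONTHLY_BILL_TODAY = 10_000      # dollars per month
OPTIMIZATION_SAVING_PCT = 30     # percent of the bill saved by tuning software

# Range quoted in the interview: cost falls to 1/5 to 1/10 within a year.
COST_DIVISORS = (5, 10)


def projected_bill(bill_today: int, divisor: int) -> int:
    """Monthly bill after one year if prices fall to 1/divisor of today's."""
    return bill_today // divisor


def optimization_value(bill: int, saving_pct: int) -> int:
    """Dollars per month saved by an optimization that trims saving_pct percent."""
    return bill * saving_pct // 100


if __name__ == "__main__":
    today = optimization_value(MONTHLY_BILL_TODAY, OPTIMIZATION_SAVING_PCT)
    print(f"saving today: ${today}/month")
    for divisor in COST_DIVISORS:
        bill = projected_bill(MONTHLY_BILL_TODAY, divisor)
        saving = optimization_value(bill, OPTIMIZATION_SAVING_PCT)
        print(f"1/{divisor} scenario: bill ${bill}/month, saving ${saving}/month")
```

Under these assumed numbers, an optimization worth $3,000 a month today is worth only $300 to $600 a month a year later, which is the sense in which the optimization effort loses most of its value.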

Jessica Lessin: Why do you think costs will drop so dramatically that companies should ignore them? What are you seeing?

Vinod Khosla: Because every large model owner is working to reduce costs.

Sam Lessin: Isn't the training cost of each large model increasing?

Vinod Khosla: The training cost of AI models is indeed rising, which is why I think open-source models will struggle to be sustainable. However, once a model is trained, we should pursue the widest possible usage for two main reasons. First, from a business perspective, we want to generate the most revenue. The model with the lowest cost usually gains the widest range of applications, leading to the highest revenue. Second, and more importantly, widespread usage generates massive amounts of data that are crucial for training the next generation of models. So, for both reasons, we should strive to maximize model usage.

If we look at long-term development, we'll find that AI model competition plays out over a five-year timescale, not one year. During this period, costs will drop year by year. Currently, Nvidia collects a considerable fee from every user, but in the future, each model will be able to run on various types of GPUs or computing platforms.

Developers will want to generate as much data as possible. Therefore, I'm quite confident that in the coming years, revenue won't be the most important metric. Of course, we don't want to lose money to an unsustainable degree, but the primary goal isn't to make huge profits but to gain extensive usage and data to improve models.

I firmly believe AI has significant room for improvement in intelligence, whether in reasoning abilities, probabilistic thinking, or pattern matching. I'm confident we'll see astonishing progress every year. While there are many rumors in the industry, the main difference between companies lies in execution. For example, OpenAI excels in execution, while Google has excellent technology and talent but slightly lacks precision in execution.

Brit Morin: Some believe AI will eventually be monopolized and commoditized by existing tech giants. Vinod, what's your five-year outlook on AI? Which areas do you think are less likely to be monopolized by giants? Is this way of thinking appropriate?

Vinod Khosla: I think if you want to compete with companies like OpenAI and Google in the foundation model space, it's not a good direction. Large language models are likely to be dominated by big players who can train on large-scale clusters, pay for proprietary content, collaborate with platforms like Reddit, and access scientific articles from publications like Nature. If you follow the traditional route, big players do have an advantage.

However, we recently invested in a company called Symbolica, which takes a completely different approach. This approach doesn't rely on massive data or computing resources but follows an orthogonal, high-risk, high-reward path. If successful, it will bring revolutionary changes. So even at the model level, there are different directions for innovation.

For example, my friend Josh Tenenbaum, a professor at MIT, believes probabilistic programming will be an essential contribution in the future. Human thinking is probabilistic, which differs from simple pattern matching. So I think we're far from done with fundamental technology research. Currently, we're mainly developing Transformer models, but there are other models worth exploring. It's just that few dare to invest in models other than Transformers.

I'm particularly interested in areas that seem esoteric. For instance, Symbolica uses a branch of mathematics called category theory, which most mathematicians are unfamiliar with. This was a bold investment we made 15-18 months ago.

Beyond models, there are many other areas worth paying attention to. I think investing in cloud computing infrastructure isn't wise because companies buying GPUs to build cloud computing are likely to lose out to giants like Amazon and Microsoft. These big companies are developing their custom chips to avoid paying Nvidia's high fees, and AMD might join the competition in a few years. But at the application level, opportunities are immense. I predict that almost all professional knowledge will be commoditized by AI. Whether it's primary care physicians, teachers, structural engineers, or oncologists, each professional field could become a successful startup project.

Of course, we've also invested in some very popular projects known to all, like Devin, an AI programmer. The team isn't building tools for programmers, like Copilot, but an AI programmer itself. We recently invested in an AI structural engineer project. While it may sound strange, consider this: traditionally, one version of a building's structural design might take two months. An AI structural engineer could provide five versions in five hours, significantly shortening construction timelines. This niche market could be worth billions of dollars.

Sam Lessin: But let me dig deeper. I agree that any professional knowledge that can be systematically described can be implemented by AI, faster and better. But the question is, can this really become a competitive business model, or will it eventually become a commodity accessible to everyone, changing how we work but not a good business opportunity?

Vinod Khosla: My view is that all these applications will rely on large language models optimized for specific domains. Each professional field has a different workflow. The idea that a general model can do everything is incorrect. Each field has its peculiarities, like which specific libraries or directories to use to determine available materials and prices, how to compose a more economical structure, etc. These details vary across fields. For example, an AI oncologist won't become an expert just by relying on GPT-6. It needs to master many details, like knowing which tests can determine which of 20 different chemotherapy drugs is most effective for a specific cancer. Currently, such extensive testing isn't often done due to high costs, and most oncologists don't have such extensive knowledge. But AI can change this, providing more precise treatment plans for patients.

Sam Lessin: I see, but where's the line? Obviously, there will be some lines, but in extreme cases, say, a general practitioner might not be a good market because summarizing and processing all a GP knows is relatively easy. While you might have a digital GP, it might be a hard market to profit from. Specialists might be different.

Vinod Khosla: I don't fully agree. I think this professional knowledge will eventually be integrated into broader fields like primary care and mental health treatment. I think these two areas could be larger markets because they involve more than just knowledge. In contrast, in oncology, there are few other factors beyond professional knowledge.

Jessica Lessin: Dave, what's your take on this? I know you've been closely following startups in this space. I'd also like to hear your thoughts on Apple. You were the first to mention on this podcast that you're interested in how Apple will operate with integrated chips and small language models. I feel many embedded features we're seeing in apps like Apple Mail are in line with your predictions.

Dave Morin: Yes, as you know, I've always believed Apple would be the company to bring small models to market and make them practically impactful for consumers and developers. A key focus I've been thinking about recently is that Apple has demonstrated excellence in three areas: technology building, platform building, and product building. Most large tech companies typically excel in one or two of these areas. So I think it's very impressive that Apple is truly applying its comprehensive technical strength in this field.

They're not just talking about technology, which we've seen many companies do over the past year. They're not just achieving this by bringing excellent products to consumers, though they've done that. What I find most impressive is the plethora of developer tools and platforms they launched yesterday. There's a lot of esoteric content here, but I think developers and entrepreneurs will be very excited about these new tools. These tools not only have a direct impact on the vertical industries Vinod mentioned but also provide a myriad of new possibilities for creating great new products.

However, what disappoints me a bit is that you can't directly access these local models, which many people haven't mentioned. They've set quite strict restrictions for developers. You can use their Swift programming language and a series of UI components to apply these models to your applications. But as a developer, you can't directly access the models yet. I think this is a very interesting but underreported detail in yesterday's announcement. Besides that, these are my initial thoughts.

/ 02 / Top Silicon Valley AI Investment Strategies

Sam Lessin: From my perspective, in the extreme case of seed investing, I think most seed investors entering the AI space are doing something foolish, because a million or two dollars really can't make a big difference in value creation in this field. That's just how the game is played. So, to me, this is clearly an area where big companies win. The middle ground might still be debatable, but at the seed stage, from a venture capital perspective, it's a disaster. You clearly have a different view, so I'm curious whether you think I'm wrong, and I'd love to hear your thoughts.

Vinod Khosla: Let me give you some specific examples. In January or February 2023, a company came to me with a seemingly absurd claim. I said, okay, I'll give you $2 million in seed funding, and if you can prove it, we can talk about more investment. They came back in March this year and showed the promised results. I said, don't look for other investors; I'll write you a $15 million check tomorrow, and we did just that. We didn't wait for external pricing or other people's opinions. I don't care what others think. We took a gamble. That's how small seed investments can turn into big ones.

By the way, we have many seed investments in the robotics space. Truly small investments that will grow. Take Hartwell, the structural engineer project I mentioned earlier, as an example. It's a seed investment. It might never need $50 million or $100 million. It will grow from a seed investment into a profitable business. So I do think there's room for seed investments, but blindly following others' strategies is terrible. Giving $2 million to anyone who leaves DeepMind to start a company is a bad strategy.

Sam Lessin: I'm actually curious about your pricing principles in your seed round investment practices. In my view, one reason the seed stage in AI is so terrible is that people coming out of DeepMind not only might fail after their seed rounds but also command absurdly high valuations, making it difficult for investors to make money. From an investor's mindset, how do you find reasonably priced, high-quality projects in seed round investments in the AI space?

Vinod Khosla: I think you have to spend a lot of time thinking about which areas will be disrupted by large models and which will become obsolete as a function within these models. We've had a lot of discussions in this regard. We have many internal technical experts and good connections in these areas. But I estimate 80% of our seed investments are below a $25 million valuation. Occasionally, we'll bet like we did with Devin, where despite no revenue, we'll place a bet based on certain belief systems. We will pass on 80% to 90%, or even more, of projects with billion-dollar valuations, but we will invest in 10% to 20% of them. We have indeed invested in Replit and Devin. Occasionally, we pay attention to high-valuation investments, and sometimes we make small, symbolic investments to maintain relationships.

Sam Lessin: When it comes to high-valuation investments, are you approaching them as an investor or from an ecosystem perspective?

Vinod Khosla: Devin and Replit are definitely investments. We also make some ecosystem and relationship-based investments. We know certain people very well, and when valuations go out of control, we might make symbolic investments, which is fine. We do quite a bit of that type of investing as well.

/ 03 / The Deflationary Effect of the AI Economy

Jessica Lessin: First, do you believe the way these models have been built has been legal so far? Second, not just for content creators but also for the professionals you mentioned, such as doctors and people with specialized knowledge, intellectual property, and education in many other fields, what will their business models look like in an AI-driven world?

Vinod Khosla: This morning, I did a podcast with The Wall Street Journal, and the entire discussion topic was whether AI will bring significant deflation. I said, of course it will. It will bring massive deflation in many aspects we've discussed because the cost of many things will approach zero, as the labor component in these things will vanish. Maybe there will still be some land rent and capital expenditures, but it will lead to a hugely deflationary economy. If you reduce the number of doctors to just 200,000, with 80% of doctors disappearing, you're talking about spending $250 billion less on healthcare, which is deflationary. So, if most content is AI-generated, most music is AI-generated, it's all deflationary. Even though consumption in many of these areas will increase significantly, it's hard to predict what this model will do. I think it's very difficult to foresee where these aspects are headed.
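The figures in this example can be unpacked with a little arithmetic. The sketch below only derives what the quoted numbers imply (a starting headcount and an average saving per doctor removed); the function and variable names are illustrative, and the interview itself does not state these derived values.

```python
# Figures quoted in the interview.
REMAINING_DOCTORS = 200_000
PCT_DISAPPEARING = 80
SPENDING_REDUCTION = 250_000_000_000  # dollars


def implied_starting_headcount(remaining: int, pct_removed: int) -> int:
    """Headcount before the cut, given how many remain and the percent removed."""
    return remaining * 100 // (100 - pct_removed)


def implied_saving_per_doctor(total_saving: int, doctors_removed: int) -> int:
    """Average spending reduction attributed to each doctor removed."""
    return total_saving // doctors_removed


if __name__ == "__main__":
    start = implied_starting_headcount(REMAINING_DOCTORS, PCT_DISAPPEARING)
    removed = start - REMAINING_DOCTORS
    per_doctor = implied_saving_per_doctor(SPENDING_REDUCTION, removed)
    print("implied starting headcount:", start)
    print("doctors removed:", removed)
    print("implied saving per doctor removed:", per_doctor)
```

Taken at face value, the quoted numbers imply roughly 1,000,000 doctors to start with, 800,000 removed, and about $312,500 of reduced spending per doctor removed. The interview does not say what period the $250 billion covers.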

Jessica Lessin: So, the content industry should be cautious.

Sam Lessin: There’s a question I’ve been pondering: instead of paying all these so-called copyright holders, why not hire, say, 10,000 smart individuals to talk to AI all day? It seems more efficient, maybe even just 1,000 people. Hire 1,000 smart individuals and say, "I won’t get permissions from anyone. I will just pay these 1,000 people full-time wages every day to talk about this." It’s essentially metadata.

Dave Morin: Isn’t that the Meta and YouTube model? Users provide content for free.

Sam Lessin: The content generation model of Meta has always been interesting. I do believe the answer to these questions reflects the interface these things represent; the medium is the message. I'm thinking, what would happen if you had a thousand people spending six hours a day talking to large language models about what they’ve learned? It’s almost like a modern oracle.

Vinod Khosla: Sam, in this potentially deflationary AI economy, we need to rethink what is valuable. I don't think Taylor Swift is just about the music; it’s a cultural phenomenon, a youth experience.

So, I do think human experience will lead us to reassess the value of things. Why have we shifted from genuinely well-manufactured goods to paying three times the price for handcrafted items that are less refined? Because it's the human experience we value, and different people value different things. So how all of this reconstitutes into a value system is hard to predict. All I can say is that there will be significant change. I don't think we can say how it will change.