How Do Autonomous Vehicles Tackle Edge Scenarios Such as the 'Ghost Probe'?

09/05 2025

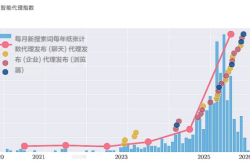

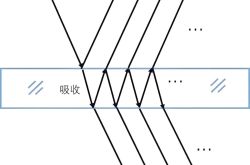

How should autonomous vehicles handle edge scenarios like the "ghost probe," in which a pedestrian, cyclist, or vehicle suddenly emerges from behind an obstruction into the vehicle's path? Whether a scenario is routine or an edge case, an autonomous vehicle typically works through the same sequence: perceive the environment, interpret it, predict how it will evolve, and finally select the safest feasible action under the given constraints. The chain looks simple, but every link requires robust technical support.

How Do Autonomous Vehicles Interpret the Road Environment?

To avoid an obstacle, an autonomous vehicle must first "see" it. The autonomous driving system relies on a suite of sensors to perceive its surroundings. Cameras provide rich visual information; millimeter-wave radar excels at measuring speed and keeps working in rain and fog; LiDAR provides precise distance and three-dimensional shape data; and ultrasonic sensors handle close-range detection. Each sensor type has its own blind spots and error patterns: cameras are sensitive to lighting changes, radar returns can be noisy, and LiDAR performance can degrade with strong reflections or in rain and snow. The system therefore does not rely on any single sensor. Instead it fuses data from multiple sources into a more robust "joint observation," reducing the chance of an isolated misjudgment. The sensor streams must also be strictly time-synchronized; otherwise an image and a speed measurement that describe different instants get combined, and the error propagates downstream. This is why well-engineered autonomous vehicles include strict time synchronization mechanisms and frequent sensor self-checks.
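
To make the idea of a "joint observation" concrete, here is a minimal sketch that assumes two independent sensors report the same object's range with known Gaussian noise variances. It uses inverse-variance weighting, a standard textbook fusion rule, not necessarily what any particular vehicle uses. Readings are fused only if their timestamps agree within a tolerance. The `RangeReading` type, `fuse_ranges` function, 20 ms tolerance, and variance values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    """One range measurement of a single object (hypothetical format)."""
    t: float         # timestamp, seconds
    range_m: float   # measured distance, meters
    var: float       # measurement noise variance, m^2


def fuse_ranges(a: RangeReading, b: RangeReading, max_skew_s: float = 0.02):
    """Inverse-variance weighted fusion of two independent range readings.

    Returns (fused_range, fused_variance), or None if the readings are too far
    apart in time to describe the same instant (assumed 20 ms tolerance).
    """
    if abs(a.t - b.t) > max_skew_s:
        return None  # not time-synchronized: refuse to fuse
    w_a, w_b = 1.0 / a.var, 1.0 / b.var  # more precise sensor gets more weight
    fused = (w_a * a.range_m + w_b * b.range_m) / (w_a + w_b)
    return fused, 1.0 / (w_a + w_b)


lidar = RangeReading(t=10.000, range_m=12.0, var=0.01)
radar = RangeReading(t=10.005, range_m=12.5, var=0.25)
stale = RangeReading(t=10.100, range_m=12.5, var=0.25)
print(fuse_ranges(lidar, radar))
print(fuse_ranges(lidar, stale))
```

The fused variance is always smaller than either input variance, which is the formal sense in which fusion reduces "occasional misjudgments." The timestamp check is the simplest possible guard against mixing observations from different instants.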

After "seeing" obstacles, the system must "understand" them. This covers three tasks. Obstacle detection classifies clusters of points in LiDAR point clouds, or regions in images, as pedestrians, bicycles, vehicles, or fallen cardboard boxes. Obstacle tracking associates the same target across consecutive frames to estimate its speed and acceleration. Semantic understanding judges whether the obstacle is likely to move, whether someone is pushing it, or whether it is a static object at the road edge. Modern systems commonly use deep learning models for object detection, combined with physics- and statistics-based tracking algorithms (such as Kalman filters and their extensions) to estimate each target's motion state. The core challenge at this stage is managing uncertainty. For instance, when a child's head suddenly appears from behind bushes ahead of the vehicle, the camera may capture only a brief partial silhouette, the radar return may be weak, and the LiDAR points may be sparse. With this very limited information, the system must decide whether there is a danger. It should neither dismiss the danger because the data is incomplete nor react drastically to a single noisy reading.
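
Kalman-filter tracking can be illustrated with a one-dimensional constant-velocity model. The state is [position, velocity], only position is measured, and velocity is inferred from how successive measurements change. This is a minimal sketch under assumed noise values and an assumed 0.1 s frame interval. Production trackers typically work in 2D or 3D, use extended or unscented variants, and add data association on top. The function name `kalman_cv_track` is hypothetical.

```python
def kalman_cv_track(measurements, dt=0.1, meas_var=0.25, accel_var=1.0):
    """Track one object's 1D position with a constant-velocity Kalman filter.

    State is [position, velocity]; only position is measured. Returns a list of
    (position, velocity) estimates, one per measurement.
    """
    x = [measurements[0], 0.0]            # start at first fix, velocity unknown
    P = [[meas_var, 0.0], [0.0, 100.0]]   # large initial velocity uncertainty
    # Process noise for a white-noise-acceleration model.
    Q = [[accel_var * dt**4 / 4, accel_var * dt**3 / 2],
         [accel_var * dt**3 / 2, accel_var * dt**2]]
    estimates = [tuple(x)]
    for z in measurements[1:]:
        # Predict with F = [[1, dt], [0, 1]]: x = F x, P = F P F^T + Q.
        x = [x[0] + dt * x[1], x[1]]
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1] + Q[0][0]
        p01 = P[0][1] + dt * P[1][1] + Q[0][1]
        p10 = P[1][0] + dt * P[1][1] + Q[1][0]
        p11 = P[1][1] + Q[1][1]
        # Update with a position-only measurement (H = [1, 0]).
        s = p00 + meas_var                # innovation variance
        k0, k1 = p00 / s, p10 / s         # Kalman gain
        y = z - x[0]                      # innovation: measured minus predicted
        x = [x[0] + k0 * y, x[1] + k1 * y]
        P = [[(1 - k0) * p00, (1 - k0) * p01],
             [p10 - k1 * p00, p11 - k1 * p01]]
        estimates.append(tuple(x))
    return estimates


# Usage: a pedestrian walking at 1.5 m/s, sampled every 0.1 s (noise-free for clarity).
zs = [5.0 + 1.5 * 0.1 * k for k in range(100)]
est = kalman_cv_track(zs)
print(est[-1])
```

The velocity is never measured directly. It emerges from the filter's gain acting on successive position innovations, which is how tracking turns single-frame detections into motion estimates.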

Once obstacles are understood, the next step is to predict their future trajectories. Prediction does not try to determine exactly what an obstacle will do. Instead it produces a probability distribution over outcomes: a pedestrian may keep walking straight, suddenly turn back, or run into the middle of the lane. Regular behaviors (other vehicles following their lanes, pedestrians walking on sidewalks) are relatively easy to predict, while sudden behaviors (a child chasing a ball into the road) are much harder. To cope with this uncertainty, the system generates several "possible futures" at once, organized as scenario trees or probability samples, and evaluates the consequences of its own responses under each of them. Current approaches combine deep models (such as sequence models or interaction-aware prediction networks) with physical models to improve prediction quality. Predictions can never be 100% accurate, however, so planning and control must be fault tolerant. They should respond optimally to the most likely scenarios while still protecting against low-probability but high-consequence events.
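
Weighing responses against several "possible futures" can be sketched as follows. The sketch assumes the predictor outputs a few weighted behavior hypotheses for a pedestrian, and that each candidate ego action has a known cost under each hypothesis. The decision rule is one plausible way to combine "optimal for the likely case" with "protected against the rare, severe case": minimize expected cost among actions whose worst-case cost stays below a limit. The hypotheses, probabilities, costs, limit, and `choose_action` function are illustrative assumptions, not a specific production method.

```python
from typing import Dict

# Hypothetical predictor output: probability of each pedestrian behavior.
hypotheses = {"stays_on_curb": 0.90, "steps_back": 0.095, "darts_into_lane": 0.005}

# Hypothetical cost of each ego action under each behavior (higher is worse).
# A collision is encoded as a very large cost.
costs = {
    "keep_speed": {"stays_on_curb": 0.0, "steps_back": 0.0, "darts_into_lane": 1000.0},
    "slow_down":  {"stays_on_curb": 5.0, "steps_back": 5.0, "darts_into_lane": 30.0},
    "hard_brake": {"stays_on_curb": 20.0, "steps_back": 20.0, "darts_into_lane": 20.0},
}


def choose_action(hyps: Dict[str, float], costs: Dict[str, Dict[str, float]],
                  worst_case_limit: float = 100.0) -> str:
    """Lowest expected cost among actions whose worst case is acceptable."""
    def worst(action: str) -> float:
        # Worst case over every behavior the predictor considers possible.
        return max(costs[action][h] for h, p in hyps.items() if p > 0)

    admissible = {
        action: sum(p * costs[action][h] for h, p in hyps.items())
        for action in costs
        if worst(action) <= worst_case_limit
    }
    if not admissible:
        # No action meets the bound: take the one with the least-bad worst case.
        return min(costs, key=worst)
    return min(admissible, key=admissible.get)


print(choose_action(hypotheses, costs))  # slow_down
```

Ranking by expected cost alone would pick `keep_speed` here (5.0 versus 5.125 for `slow_down`) because the dart-out is rare. The worst-case bound is what rules it out.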

With detection, tracking, and prediction of surrounding objects complete, the system enters the "decision-making and planning" phase. This step determines the vehicle's next action: emergency braking, steering left, gently steering right, or slowing down to observe. Decisions must balance safety, comfort, and legal compliance. Emergency braking alone is often the most conservative and reliable choice, but in some cases hard braking can cause a rear-end collision or a loss of control on icy roads. Avoiding the obstacle instead requires more complex trajectory planning. The planner must account for the available road space, the positions of surrounding vehicles, and dynamic feasibility (for example, how much steering angle is achievable at a given speed). Modern systems therefore use constrained optimization methods (such as model predictive control) to generate trajectories that respect the vehicle's dynamic constraints. The cost function includes safety terms (minimum distance to obstacles), comfort terms (limiting lateral acceleration), and regulatory terms (not crossing lane markings illegally). Whatever the planner proposes, a "safety layer" or "rule layer" must sit above it. If a suggested action would lead to an unacceptable collision risk, the safety layer intercepts it and substitutes a more conservative action.
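
The cost-function-plus-safety-layer structure can be sketched by scoring a few candidate trajectories with weighted safety, comfort, and regulatory terms. A separate hard check then vetoes any candidate whose minimum clearance falls below a limit. The `Candidate` fields, weights, 1.0 m clearance threshold, and fallback action are hypothetical. A real planner would optimize over continuous trajectories (for example with MPC) rather than pick from a fixed list.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    name: str
    min_clearance_m: float     # closest predicted distance to any obstacle
    max_lat_accel: float       # peak lateral acceleration, m/s^2
    crosses_solid_line: bool   # regulatory flag


def cost(c: Candidate, w_safe=10.0, w_comfort=1.0, w_rule=50.0) -> float:
    """Weighted sum of safety, comfort, and regulatory penalties (lower is better)."""
    safety = 1.0 / max(c.min_clearance_m, 0.01)   # grows as clearance shrinks
    comfort = c.max_lat_accel ** 2                 # penalize harsh lateral motion
    rule = 1.0 if c.crosses_solid_line else 0.0
    return w_safe * safety + w_comfort * comfort + w_rule * rule


def passes_safety_layer(c: Candidate, min_clearance_m=1.0) -> bool:
    """Independent hard check: veto any candidate with unacceptable clearance."""
    return c.min_clearance_m >= min_clearance_m


def select(cands: List[Candidate], fallback: Candidate) -> Candidate:
    """Cheapest candidate that the safety layer accepts, else the fallback."""
    safe = [c for c in cands if passes_safety_layer(c)]
    return min(safe, key=cost) if safe else fallback


candidates = [
    Candidate("swerve_left", min_clearance_m=0.9, max_lat_accel=0.5, crosses_solid_line=False),
    Candidate("nudge_right", min_clearance_m=1.4, max_lat_accel=3.0, crosses_solid_line=False),
]
emergency_brake = Candidate("emergency_brake", 2.0, 0.0, False)
print(select(candidates, emergency_brake).name)  # nudge_right
```

Here `swerve_left` has the lower cost (about 11.4 versus about 16.1), but the safety layer vetoes it. That is the point of keeping the hard check separate from the soft cost terms.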

Once a decision is made, the "controller" translates the trajectory into specific throttle, brake, and steering commands. This relies on accurate vehicle dynamics models, estimates of the tire-road friction coefficient, brake response delays, and other physical details. In a sudden "ghost probe" scenario, the ideal response is fast yet controlled. The vehicle should shed speed quickly enough to avoid a collision without braking so abruptly that it becomes unstable or passengers are injured. On low-adhesion surfaces (wet or icy roads), the vehicle must act earlier and more gently than on high-adhesion surfaces, so autonomous driving systems typically adapt their braking strategy to the estimated road condition. The controller also works together with lower-level stability systems such as ABS and ESC to keep the vehicle controllable.
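
Basic kinematics explains why low-adhesion surfaces call for earlier action. With friction coefficient μ, peak braking deceleration is at most roughly μg, so the braking distance grows as v²/(2μg), plus the distance covered during the actuation delay. The sketch assumes constant deceleration at a fixed fraction of the friction limit and a fixed brake delay. The μ values (about 0.8 dry, 0.5 wet, 0.1 icy) are rough, commonly cited ballpark figures, not measurements, and `stopping_distance` is a hypothetical helper.

```python
G = 9.81  # gravitational acceleration, m/s^2


def stopping_distance(v_mps: float, mu: float, brake_delay_s: float = 0.2,
                      usable_fraction: float = 0.9) -> float:
    """Distance (m) to stop from v_mps on a surface with friction coefficient mu.

    Assumes the vehicle travels at constant speed for brake_delay_s, then
    decelerates at a constant usable_fraction of the friction limit mu * g.
    """
    decel = usable_fraction * mu * G
    return v_mps * brake_delay_s + v_mps ** 2 / (2 * decel)


for surface, mu in [("dry", 0.8), ("wet", 0.5), ("ice", 0.1)]:
    print(f"{surface}: {stopping_distance(50 / 3.6, mu):.1f} m from 50 km/h")
```

Under these assumptions, the same 50 km/h stop takes roughly 16 m on the dry surface and over 100 m on ice. This is why the braking strategy has to start earlier when the road-condition estimate indicates low adhesion.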

What Considerations Are There in Obstacle Avoidance Design for Autonomous Driving Systems?

To carry out these steps effectively, time is the scarcest resource. The shorter the latency of the perception-decision-control chain, the more effective the system's response. Safety-critical computations should therefore run in real time on the vehicle. Less urgent, computationally expensive models can run in the cloud or on backend servers to support later learning and improvement. The system should also include warning and "preparatory action" mechanisms. When it detects a suspected obstacle but lacks enough information to confirm it, the vehicle can begin decelerating gently while preparing executable avoidance trajectories. It can then act immediately once the obstacle is confirmed, shortening the overall reaction time.
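
The benefit of shorter latency and of preparatory deceleration can be quantified with a simple two-phase kinematic model. An obstacle appears at a fixed distance ahead. During a confirmation window the vehicle may already pre-brake gently, and after the window it brakes fully. The sketch returns the speed at which the vehicle reaches the obstacle. The `impact_speed` helper, the 15 m gap, the 7 m/s² full-braking deceleration, and the 2 m/s² pre-braking level are all illustrative assumptions.

```python
import math


def impact_speed(v0, gap_m, latency_s, a_brake, a_pre=0.0):
    """Speed (m/s) at which the vehicle reaches an obstacle gap_m ahead; 0.0 if it stops.

    Phase 1 lasts latency_s: the obstacle is not yet confirmed, but a gentle
    preparatory deceleration a_pre may already be applied (0 means none).
    Phase 2: full braking at a_brake until the vehicle stops or reaches the gap.
    """
    # Phase 1 ends early if pre-braking alone brings the vehicle to rest.
    t1 = min(latency_s, v0 / a_pre) if a_pre > 0 else latency_s
    d1 = v0 * t1 - 0.5 * a_pre * t1 ** 2
    if d1 >= gap_m:
        # Obstacle reached during phase 1: v^2 = v0^2 - 2 * a_pre * gap.
        return math.sqrt(max(v0 ** 2 - 2 * a_pre * gap_m, 0.0))
    v1 = v0 - a_pre * t1
    v_sq = v1 ** 2 - 2 * a_brake * (gap_m - d1)
    return math.sqrt(v_sq) if v_sq > 0 else 0.0


v0 = 50 / 3.6  # 50 km/h in m/s
for latency, a_pre in [(0.5, 0.0), (0.2, 0.0), (0.5, 2.0)]:
    v = impact_speed(v0, gap_m=15.0, latency_s=latency, a_brake=7.0, a_pre=a_pre)
    print(f"latency {latency:.1f} s, pre-brake {a_pre} m/s^2 -> impact at {v:.1f} m/s")
```

With these illustrative numbers, cutting latency from 0.5 s to 0.2 s lowers the impact speed from roughly 9 m/s to under 5 m/s. Gentle 2 m/s² pre-braking during a 0.5 s confirmation window brings it down to about 7 m/s.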

Autonomous driving systems must also be designed with redundancy and diversity. Sensor redundancy prevents blind spots caused by the failure of a single sensor. Algorithm diversity (for example, running rule-based collision checks alongside learning-based prediction) lets one method compensate when another fails. Functional safety standards such as ISO 26262 require a systematic analysis of the failure modes that could lead to hazards. They call for covering those failure modes through hardware and software redundancy, fault detection, and safe degradation mechanisms. "Safe degradation" does not mean abruptly stopping the vehicle wherever it happens to be. It means bringing the vehicle to a safer state, such as decelerating and pulling over steadily, or issuing a clear takeover request to the human driver.
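
Redundancy and algorithm diversity can be combined in a simple arbitration rule. A rule-based time-to-collision check and a learned risk score are evaluated independently, and the more cautious verdict wins. A detected fault in either channel triggers a degraded mode instead of continued normal operation. This is a minimal sketch. The `Mode` names, the 2.0 s TTC limit, the 0.5 risk threshold, and the "any channel fault means degrade" policy are hypothetical choices, not requirements taken from ISO 26262.

```python
from enum import Enum
from typing import Optional


class Mode(Enum):
    NORMAL = "normal"
    CAUTION = "caution"              # slow down, prepare avoidance
    MINIMAL_RISK = "minimal_risk"    # decelerate and pull over / request takeover


def rule_based_hazard(ttc_s: Optional[float], ttc_limit_s: float = 2.0) -> Optional[bool]:
    """Rule channel: hazard if time-to-collision is below a limit; None = no valid input."""
    if ttc_s is None:
        return None
    return ttc_s < ttc_limit_s


def learned_hazard(risk_score: Optional[float], threshold: float = 0.5) -> Optional[bool]:
    """Stand-in for a learned channel: hazard if model risk exceeds a threshold."""
    if risk_score is None or not 0.0 <= risk_score <= 1.0:
        return None  # missing or out-of-range output is treated as a fault
    return risk_score > threshold


def arbitrate(ttc_s: Optional[float], risk_score: Optional[float]) -> Mode:
    a, b = rule_based_hazard(ttc_s), learned_hazard(risk_score)
    if a is None or b is None:
        return Mode.MINIMAL_RISK   # a channel failed: degrade safely
    if a or b:
        return Mode.CAUTION        # the more cautious verdict wins
    return Mode.NORMAL


print(arbitrate(5.0, 0.1), arbitrate(1.2, 0.1), arbitrate(None, 0.1))
```

Because the two channels fail in different ways, the rule channel still catches a hazard when the model under-reports risk, and vice versa.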

Another widely discussed, and often criticized, aspect is takeover design. For some Level 2 and Level 3 systems, handing control back to the human (handover) is a common strategy. However, transferring control suddenly raises many problems: the driver may not be paying attention, and a long takeover time can itself be dangerous. The system can therefore take emergency action itself (such as braking or steering around the obstacle) within a very short time window. At the same time it issues clear prompts and audible or vibration warnings so the driver can step in or confirm afterward. Higher levels of autonomy (up to full automation) require the system to handle emergencies on its own across a much wider range of scenarios with minimal reliance on humans, which places high demands on perception and prediction.
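
The "act first, then inform" pattern can be sketched as a staged response driven by time-to-collision (TTC = gap / closing speed). The system warns the driver at a longer horizon and brakes autonomously when TTC drops below what a distracted driver could plausibly handle. The thresholds (3.0 s warn, 1.5 s brake), the action and alert names, and both helper functions are illustrative assumptions, not values from any standard or product.

```python
import math


def time_to_collision(gap_m: float, ego_speed: float, obj_speed: float) -> float:
    """TTC in seconds along the lane (speeds in m/s); inf if the gap is not closing."""
    closing = ego_speed - obj_speed
    return gap_m / closing if closing > 0 else math.inf


def staged_response(ttc_s: float, warn_s: float = 3.0, brake_s: float = 1.5):
    """Return (vehicle_actions, driver_alerts) for the current TTC."""
    if ttc_s < brake_s:
        # Too little time to wait for the human: act now, then inform.
        return ["emergency_brake"], ["audible_alarm", "seat_vibration", "takeover_prompt"]
    if ttc_s < warn_s:
        # Prepare and slow down while alerting the driver.
        return ["pre_fill_brakes", "reduce_speed"], ["audible_alarm", "display_warning"]
    return [], []


ttc = time_to_collision(gap_m=12.0, ego_speed=10.0, obj_speed=0.0)
print(ttc, staged_response(ttc))
```

The key design choice is that the autonomous braking branch does not wait for driver confirmation. The alerts run in parallel so the human can participate afterward.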

Common strategies for sudden obstacles fall into two categories: active avoidance and passive deceleration. Active avoidance changes the vehicle's lateral position to steer around the obstacle without creating a conflict with other road users. Passive deceleration prioritizes reducing speed to avoid a collision. When choosing a strategy, the decision module must evaluate the worst-case outcome of each candidate trajectory. It should then select an action that avoids unacceptable consequences under all reasonable circumstances. Some companies use formalized rules such as "Responsibility-Sensitive Safety" (RSS), proposed by Mobileye, to define acceptable distances and speeds. These rules give clearly defined operational boundaries for unexpected behavior. The advantage of formalized rules is that they can be verified, but overly strict rules may make the vehicle needlessly conservative. Purely data-driven methods, on the other hand, may fail in extreme corner cases.
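
As an example of such a formalized rule, RSS defines a minimum safe longitudinal distance between a rear vehicle (speed v_r) and a front vehicle (speed v_f) in the same lane. The inputs are a response time ρ, the rear vehicle's maximum acceleration during ρ, its minimum guaranteed braking, and the front vehicle's maximum braking. The function below follows the published RSS longitudinal formula. The default parameter values and the function name `rss_min_longitudinal_gap` are assumptions chosen for illustration, not calibrated values.

```python
def rss_min_longitudinal_gap(v_r: float, v_f: float, rho: float = 0.5,
                             a_accel_max: float = 3.0, a_brake_min: float = 4.0,
                             a_brake_max: float = 8.0) -> float:
    """RSS minimum safe longitudinal distance (m) for same-direction traffic.

    d_min = [ v_r*rho + 0.5*a_accel_max*rho^2
              + (v_r + rho*a_accel_max)^2 / (2*a_brake_min)
              - v_f^2 / (2*a_brake_max) ]_+
    v_r: rear (ego) speed, v_f: front vehicle speed, both in m/s.
    """
    # Worst case for the rear vehicle: it keeps accelerating during the response time.
    v_after = v_r + rho * a_accel_max
    d = (v_r * rho + 0.5 * a_accel_max * rho ** 2
         + v_after ** 2 / (2 * a_brake_min)
         - v_f ** 2 / (2 * a_brake_max))
    return max(d, 0.0)  # [x]_+ : negative values mean any gap is safe


print(rss_min_longitudinal_gap(15.0, 15.0))  # 27.84375
```

This illustrates the verifiability that the rule-based approach offers: every term has a physical meaning that can be checked. It also shows the conservatism trade-off, since the rear vehicle must assume the front vehicle brakes as hard as possible.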

Reliable obstacle avoidance also requires substantial investment in testing. Simulation platforms can generate large numbers of "corner cases." Examples include small animals crossing, luggage suddenly appearing in the middle of the road, overturned cargo boxes, wind-blown advertising material confusing sensors, or reflective materials causing sensor errors at night. Simulation lets developers verify strategies and parameters repeatedly in a safe, controlled environment. Closed-course testing and gradually expanded public-road testing remain indispensable, however, because simulation cannot fully reproduce real sensor noise, real vehicle dynamics, or complex social behavior. Real-world testing should pay particular attention to how the system's redundancy and fault recovery perform under edge conditions.
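
A simulation campaign often starts as a systematic parameter sweep. The sketch enumerates a hypothetical "pedestrian emerges from occlusion" scenario over ego speed, emergence distance, and road friction. It runs each combination through a deliberately simple point-mass braking model and reports the combinations that end in a collision. The parameter grids, the 0.3 s latency, and the braking model are assumptions. Real platforms replay full sensor and vehicle-dynamics simulations against the actual planning stack.

```python
import itertools

G = 9.81  # m/s^2


def collides(speed_kmh: float, emerge_dist_m: float, mu: float,
             latency_s: float = 0.3) -> bool:
    """Point-mass check: does the ego fail to stop before the emergence point?"""
    v = speed_kmh / 3.6
    stop = v * latency_s + v ** 2 / (2 * mu * G)
    return stop > emerge_dist_m


def sweep():
    speeds = [20, 30, 40, 50]      # km/h
    distances = [5, 10, 15, 20]    # m, where the pedestrian appears
    frictions = [0.8, 0.5, 0.1]    # roughly dry, wet, icy
    return [
        (s, d, mu)
        for s, d, mu in itertools.product(speeds, distances, frictions)
        if collides(s, d, mu)
    ]


failures = sweep()
print(f"{len(failures)} of {4 * 4 * 3} scenarios end in a collision")
```

In practice, the failing combinations become regression cases. After each change to strategy or parameters, the sweep is rerun to confirm that previously failing scenarios now pass and that passing ones have not regressed.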

Many sudden situations stem from scenarios the system has never "seen" or from rare behaviors. Teams can collect extensive road-test data, near-miss events, and user-reported anomalies on a data platform. From there they continuously annotate the data and retrain models, so the system responds better to similar situations in the future. Data governance, consistent labeling, and scenario replay capabilities are key assets for improving how the system handles "sudden obstacles."
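
Automatically mining logs for near-misses is one concrete piece of such a data loop. The sketch scans a hypothetical per-frame log of (timestamp, object id, gap, closing speed) records. It flags objects whose minimum time-to-collision dropped below a threshold without an actual collision, and returns them as candidates for annotation and scenario replay. The log format, the 1.0 s threshold, and `mine_near_misses` are assumptions for illustration.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical per-frame log record: (time_s, object_id, gap_m, closing_speed_mps).
Frame = Tuple[float, str, float, float]


def mine_near_misses(frames: Iterable[Frame], ttc_threshold_s: float = 1.0) -> List[Dict]:
    """Flag objects whose minimum time-to-collision fell below the threshold
    without the gap ever closing to zero (near misses, not crashes)."""
    min_ttc: Dict[str, Tuple[float, float]] = {}   # object_id -> (ttc, time)
    collided = set()
    for t, obj, gap, closing in frames:
        if gap <= 0.0:
            collided.add(obj)
        elif closing > 0:
            ttc = gap / closing
            if obj not in min_ttc or ttc < min_ttc[obj][0]:
                min_ttc[obj] = (ttc, t)
    return [
        {"object_id": obj, "min_ttc_s": ttc, "t_s": t}
        for obj, (ttc, t) in sorted(min_ttc.items())
        if ttc < ttc_threshold_s and obj not in collided
    ]


log = [
    (0.0, "ped_1", 10.0, 5.0), (0.5, "ped_1", 4.0, 5.0), (1.0, "ped_1", 3.0, 0.0),
    (0.0, "car_7", 30.0, 2.0),
    (1.5, "ped_2", 2.0, 4.0), (2.0, "ped_2", 0.0, 4.0),
]
print(mine_near_misses(log))
```

Recording the timestamp of the minimum TTC lets a replay tool jump straight to the critical moment instead of scanning the whole drive.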

Final Thoughts

When it comes to "ghost probe" edge scenarios, many people believe that braking as hard as possible is always the safest option. Yet hard braking can be more dangerous with dense traffic behind or on wet roads. Others think "LiDAR can solve everything," but relying solely on LiDAR can also fail under certain optical reflection or occlusion conditions. The most reliable approach combines multiple technologies into a mutually complementary safety net. A mature autonomous driving system should be able to take the safest available action within a fraction of a second, often before a human driver could react. It should also manage control, information, and responsibility in an orderly way to minimize risk, rather than hastily pushing the decision onto an unprepared person.

-- END --