What Constitutes a Reasonable Ratio of Simulation to Road Testing for Autonomous Driving?

10/09 2025

In autonomous driving system development, verification has become increasingly important because it directly affects the system's safety, stability, and eventual legal compliance for on-road use. The verification framework consists of two key components: simulation verification and real-vehicle road testing. Simulation verification uses virtual environments to run extensive tests on the perception, decision-making, and control modules. Road testing evaluates the system's performance under real-world road conditions. Striking a reasonable balance between the two not only affects the efficiency and effectiveness of verification but also shapes the project's timeline, technical risk, and path to compliance.

One of the most formidable challenges in autonomous driving development is the long-tail problem: the extremely rare yet safety-critical edge scenarios that the system must handle. Everyday driving situations are encountered constantly, but the scenarios that genuinely threaten safety tend to hide in rare interaction patterns. Examples include an electric bike abruptly emerging from a blind spot, a child darting around a street corner at night, or the shadow cast by a truck making a sharp turn. Such scenarios are very hard to encounter during road testing, so they must be deliberately constructed in simulation to verify the system's robustness under extreme conditions. This is the fundamental reason simulation verification is irreplaceable.
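As a rough illustration of how one long-tail scenario can be constructed systematically, the sketch below expands the "electric bike emerging from a blind spot" case into a grid of variants. The class name, the parameter values, and the 1.5-second criticality threshold are all illustrative assumptions, not values taken from any particular simulation platform.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class BlindSpotScenario:
    """One variant of an e-bike emerging from a blind spot (hypothetical model)."""
    ego_speed_mps: float   # ego vehicle speed in metres per second
    gap_m: float           # distance to the e-bike when it first becomes visible
    lighting: str          # "day" or "night"

    @property
    def time_to_reach_s(self) -> float:
        # Time until the ego vehicle reaches the emergence point at constant speed.
        return self.gap_m / self.ego_speed_mps


def generate_variants(speeds, gaps, lightings):
    """Build every combination of the given parameter values."""
    return [BlindSpotScenario(v, g, l) for v, g, l in product(speeds, gaps, lightings)]


variants = generate_variants(
    speeds=[5.0, 10.0, 15.0],
    gaps=[10.0, 20.0, 40.0],
    lightings=["day", "night"],
)

# Assumed threshold: variants leaving under 1.5 s to react are treated as critical.
critical = [s for s in variants if s.time_to_reach_s < 1.5]
```

A grid like this is only a starting point; the same idea extends to more parameters (weather, road geometry, bike speed) at the cost of a rapidly growing number of variants.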

The greatest technical advantage of simulation verification lies in its "controllability" and "scalability." Mainstream simulation platforms in the industry, such as CARLA, LGSVL, IPG CarMaker, Baidu Apollo Simulation, and Waymo Simulation Engine, can construct programmable test environments. They support sensor data modeling, scripted behavior for traffic participants, traffic rule configuration, lighting and weather changes, and more, which lets developers systematically test complex behavior chains. Simulation platforms also support "parallel testing" and "replay testing": they can run thousands or even tens of thousands of test tasks concurrently on server clusters, or reproduce historical accident scenarios frame by frame with controlled interventions. In contrast, real road testing can cover only one time period, vehicle state, and random event at a time, making it far less efficient than simulation.
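The "parallel testing" idea can be sketched in a few lines. In the example below, `run_scenario` is a hypothetical stand-in for a real simulator call: it uses a simple stopping-distance formula with assumed reaction time and deceleration rather than invoking any actual platform API. A real cluster setup would dispatch jobs to simulator instances instead of threads.

```python
from concurrent.futures import ThreadPoolExecutor


def run_scenario(params):
    """Stand-in for one simulation run (hypothetical); returns (scenario_id, passed)."""
    scenario_id, ego_speed_mps, gap_m = params
    reaction_s = 0.5   # assumed system reaction time in seconds
    decel_mps2 = 6.0   # assumed maximum braking deceleration
    stopping_distance = ego_speed_mps * reaction_s + ego_speed_mps ** 2 / (2 * decel_mps2)
    return scenario_id, stopping_distance < gap_m


def run_batch(scenarios, max_workers=8):
    """Run all scenarios concurrently and collect pass/fail results by id."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(run_scenario, scenarios))


# Four variants of the same scenario: obstacle appears 20 m ahead at different speeds.
scenarios = [(f"s{i}", speed, 20.0) for i, speed in enumerate([5.0, 10.0, 15.0, 20.0])]
results = run_batch(scenarios)
failed = sorted(sid for sid, passed in results.items() if not passed)
```

Because each scenario run is independent, the batch scales out naturally; the failing ids can then be replayed individually for closer inspection.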

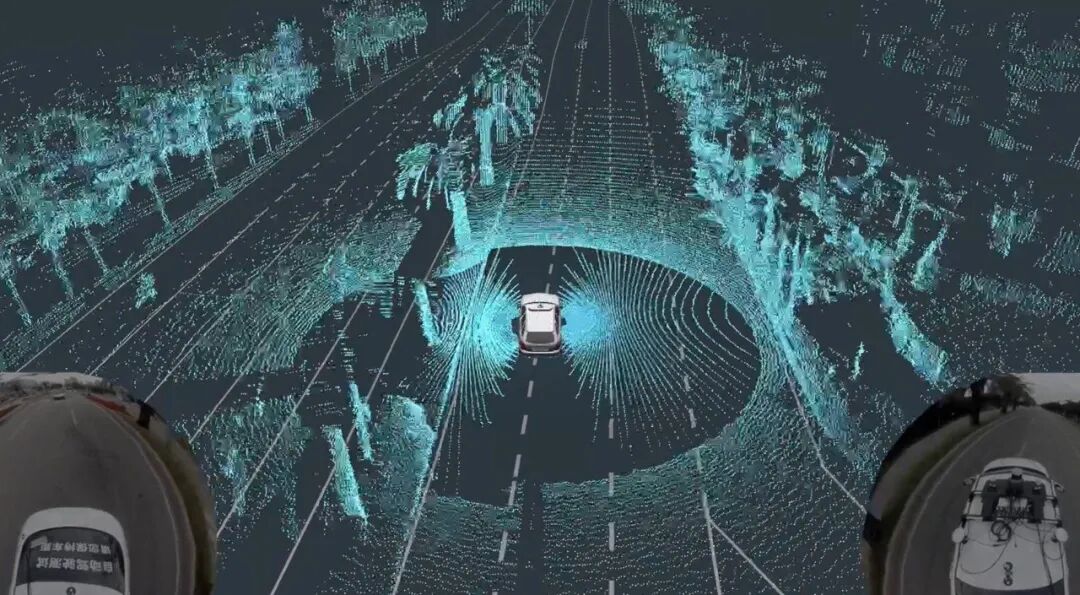

However, simulation is not a panacea. Its limitations primarily stem from insufficient physical realism and the complexity of perception modeling. Autonomous driving relies on sensors such as cameras, lidar, and millimeter-wave radar for environmental perception. Yet, perception data in simulations often relies on simplified models. Even with advanced rendering engines, accurately reproducing issues like camera exposure flaws, lens distortion, and obstructions from rain, snow, or stains remains challenging. Details such as lidar's echo energy attenuation and multipath reflection interference are even more difficult to model. Consequently, targets that may be "clearly visible" in a simulated environment might go completely unrecognized during real-vehicle road testing. Additionally, fusion errors between sensors and response delays in control actuators cannot be fully assessed through simulation alone. These aspects necessitate real-vehicle road testing to observe genuine reactions.

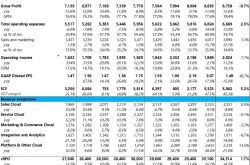

Therefore, a rational approach uses simulation verification for broad test coverage, identifying system design flaws and performance boundaries, while road testing verifies the system's "real-world performance" in complex environments. Within the verification closed loop, simulation should serve as the primary method, covering the vast majority of normal and edge scenarios and accounting for approximately 70% to 85% of the overall verification effort. Road testing should act as the final means of verification, confirming that the system operates robustly in the physical world, and account for about 15% to 30%. This division of labor makes the most of simulation's efficiency while ensuring the system's reliability in the physical world.

This ratio shifts across development stages. In the initial development phase, when algorithms are refined frequently and the architecture is still unstable, simulation takes precedence for rapid trial and error. At this stage simulation often accounts for 90% or more of verification, with only a small amount of closed-course or simplified road testing to confirm that the perception hardware functions properly. As the system matures and functional interfaces stabilize, the focus of verification shifts from "can it run" to "how well does it run." The system gradually enters testing under complex road conditions, and the simulation-to-road-testing ratio settles at approximately 7:3. During the final certification phase, or before safety reports are submitted to regulatory authorities, extensive road testing data is often required, including results from different cities, weather conditions, and times of day. At this point the proportion of road testing rises temporarily but still does not exceed 50%.
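The stage-dependent ratios can be written down as a small planning helper. The sketch below is only an illustration: the stage names are made up, the unit of "effort" (hours here) is an assumption because the article does not say how the ratio is measured, and the pre-certification value uses the 50% ceiling on road testing as a limiting case.

```python
# Simulation share per stage, in percent, read from the figures quoted in the text.
STAGE_SIM_PERCENT = {
    "early_development": 90,   # simulation at roughly 90% or more
    "maturing": 70,            # ratio of about 7:3
    "pre_certification": 50,   # road testing rises but stays at or below 50%
}


def split_effort(total_hours, stage):
    """Split a verification budget into (simulation_hours, road_test_hours)."""
    if stage not in STAGE_SIM_PERCENT:
        raise ValueError(f"unknown stage: {stage}")
    sim_hours = total_hours * STAGE_SIM_PERCENT[stage] / 100
    return sim_hours, total_hours - sim_hours


def within_recommended_band(sim_hours, road_hours):
    """Check the overall 70%-85% simulation band recommended in the article."""
    sim_share = sim_hours / (sim_hours + road_hours)
    return 0.70 <= sim_share <= 0.85


sim_hours, road_hours = split_effort(1000, "maturing")
```

Note that the early-development and pre-certification stages deliberately fall outside the 70% to 85% band; the band describes the overall balance, not every individual stage.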

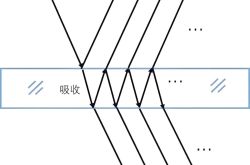

Integrating road testing with simulation verification is about more than proportions; more critically, it requires a complete verification closed loop, with scenario-based testing as the core method. First, a comprehensive scenario library is built through scenario collection and classification. Next, scenario description languages (such as OpenSCENARIO) are used to recreate these scenarios in simulation for high-density verification. When simulation uncovers potential functional boundaries or safety hazards, targeted road testing is conducted on those high-risk scenarios. Conversely, failure scenarios discovered during real-world road testing are fed back to the simulation platform for reproduction and multi-version comparative analysis. This "scenario-driven + verification closed loop" approach is rapidly becoming the mainstream method for intelligent driving testing.
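The two directions of the closed loop can be sketched as a small bookkeeping structure. Everything here (class names, field names, scenario names) is hypothetical and exists only to show the flow: simulation failures become targeted road tests, and road failures return to the simulation backlog.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Scenario:
    name: str
    origin: str                         # "library" or "road_test"
    sim_passed: Optional[bool] = None   # None means not yet simulated


@dataclass
class ScenarioLibrary:
    scenarios: Dict[str, Scenario] = field(default_factory=dict)

    def add(self, name: str, origin: str = "library") -> None:
        # Keep the existing record if the scenario is already known.
        self.scenarios.setdefault(name, Scenario(name, origin))

    def record_sim_result(self, name: str, passed: bool) -> None:
        self.scenarios[name].sim_passed = passed

    def report_road_failure(self, name: str) -> None:
        """Feed a failure seen on the road back for reproduction in simulation."""
        self.add(name, origin="road_test")
        self.scenarios[name].sim_passed = None  # must be simulated (again)

    def road_test_targets(self) -> List[str]:
        """Scenarios that failed in simulation and warrant targeted road testing."""
        return sorted(n for n, s in self.scenarios.items() if s.sim_passed is False)

    def sim_backlog(self) -> List[str]:
        """Scenarios still waiting to be reproduced in simulation."""
        return sorted(n for n, s in self.scenarios.items() if s.sim_passed is None)


library = ScenarioLibrary()
library.add("ebike_blind_spot")
library.add("night_pedestrian")
library.record_sim_result("ebike_blind_spot", passed=False)
library.record_sim_result("night_pedestrian", passed=True)
library.report_road_failure("truck_shadow_false_brake")
```

In this toy run, `ebike_blind_spot` is queued for targeted road testing, while `truck_shadow_false_brake` enters the simulation backlog for reproduction and comparison across software versions.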

The verification systems built by mainstream enterprises generally consist of five levels. The first level is "offline data replay," which replays collected historical driving data through the perception and decision-making modules to verify how well the software interprets real-world scenarios. The second level is the "virtual simulation closed loop," which integrates complete sensor simulation and vehicle dynamics models in a simulated environment to test the full perception-decision-control chain. The third level is "hardware-in-the-loop" (HIL), which connects the simulation platform to real controllers (such as ECUs and VPUs) to check their response speed and control logic. The fourth level is "closed-course testing," conducted mainly on test tracks to verify known scenarios. The fifth level is "public road testing," where the system faces the full range of challenges under natural conditions. Within this framework, the first two levels are purely simulation-based, the third couples the simulation platform with real controller hardware, and the last two are real-vehicle testing. Reasonable proportional scheduling hinges on a clear understanding of the objectives and methods of testing at each level.
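The five levels can be captured as a small table in code. In the sketch below, the two boolean flags are one reading of the level descriptions, and the idea that a software build advances through the levels strictly in order is an assumption made for illustration; the article does not prescribe a gating policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VerificationLevel:
    order: int
    name: str
    real_controller: bool   # real controller hardware takes part in the test
    real_vehicle: bool      # a physical vehicle is driven


LEVELS = [
    VerificationLevel(1, "offline data replay", False, False),
    VerificationLevel(2, "virtual simulation closed loop", False, False),
    VerificationLevel(3, "hardware-in-the-loop", True, False),
    VerificationLevel(4, "closed-course testing", True, True),
    VerificationLevel(5, "public road testing", True, True),
]


def next_level(passed_orders):
    """Return the lowest level not yet passed, or None when all are done.

    Assumes builds move through the levels in order (an illustrative policy).
    """
    for level in LEVELS:
        if level.order not in passed_orders:
            return level
    return None


vehicle_levels = [level.name for level in LEVELS if level.real_vehicle]
```

For example, `next_level({1, 2})` returns the hardware-in-the-loop level, which is the first point at which real controller hardware is needed.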

Different types of autonomous driving systems also place different requirements on the verification ratio. L2/L2+ advanced driver-assistance systems rely primarily on vision and radar and operate in scenarios such as highway cruising, automatic following, and lane keeping. The behavioral decisions involved are relatively straightforward, and simulation can effectively cover most verification scenarios. In contrast, L3 and above systems must handle complex interactions such as yielding during lane changes, traffic light recognition, and pedestrian judgment, resulting in greater behavioral uncertainty. These systems place higher demands on perception accuracy, timing management, and dynamic decision-making, as well as on the modeling precision of physical sensors. Simulation remains the primary method, but it must be combined with extensive real-vehicle verification to establish trust. L4 systems may even remove the driver's monitoring responsibility in certain specific scenarios, with safety verification standards approaching those of the aviation industry. Consequently, their investment in road testing is significantly higher than that of L2 systems, though the share of road testing is still recommended to stay within 30%.

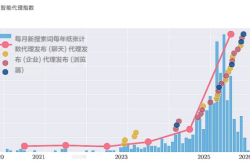

The evolution of regulatory systems has further cemented the central role of simulation in verification. The "Administrative Regulations on Road Testing and Demonstration Applications of Intelligent Connected Vehicles" formulated by China's Ministry of Industry and Information Technology requires that the self-declaration of road testing safety be submitted to the relevant provincial and municipal government authorities along with supporting materials. Item (8) of those materials is: "Descriptive materials on simulation testing conducted by the road testing entity itself and real-vehicle testing in specific areas such as test zones (fields)." In the future, once "scenario coverage assessment" tools and "scenario database sharing platforms" are in place, the industry is expected to develop more standardized norms for collaborative simulation and real-vehicle verification, further establishing simulation verification as the mainstream approach.

Thus, verification of autonomous driving systems is a dynamic process of "simulation as the primary method, supplemented by road testing." Simulation verification, with its scalability, controllability, and efficiency, dominates the verification system and is particularly suited to covering a vast number of normal and edge scenarios. Road testing, with its authenticity and unpredictability, provides the final safeguard by verifying the system's stability and safety in the real world. A reasonable ratio should be adjusted dynamically according to the development stage, functional level, budget allocation, and risk tolerance, with simulation at roughly 70% to 85% and road testing at 15% to 30%. This currently represents the most rational choice. Only by combining the complementary strengths of the two can autonomous driving reach the safe shores of large-scale commercialization.
