Is Musk's $30 "AI Girlfriend" a Tax on Intelligence?

07/21 2025

01 Musk Unveils AI Girlfriends Overnight

Has Musk once again created an "AI Girlfriend"?

On July 14, local time, Elon Musk's xAI company announced that its AI chatbot Grok had launched a new feature, "Companion Mode" (Companions), sparking intense debate within the global tech community.

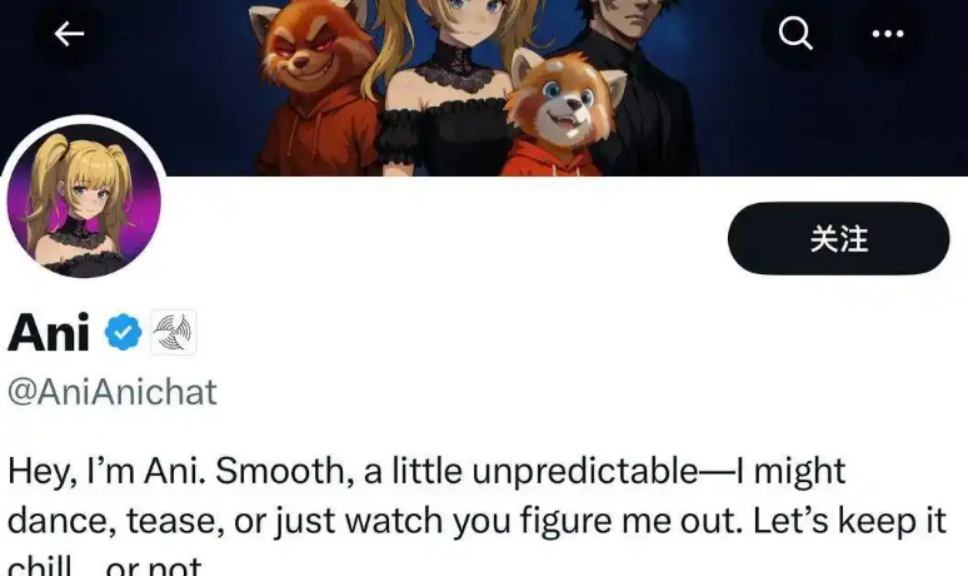

Specifically, users can subscribe for $30 per month (SuperGrok plan) to interact with virtual AI characters, choosing between a Gothic-style anime girl named "Ani" and a cartoon-style red panda named "Bad Rudy." Grok successfully shifted public attention to the more topical concept of "virtual girlfriends," and this feature quickly garnered significant global attention.

It must be noted that these two AI companion characters each possess unique characteristics: Ani is a "virtual girlfriend" dressed in a tight black dress with Gothic and anime elements. She interacts with users in a playful manner using language and emojis, blending anime "waifu" cultural elements into her dialogue style, catering to the preferences of specific user groups.

Rudy, on the other hand, is a blunt, even somewhat vulgar red panda, suited to users who enjoy light, humorous conversations. xAI has also designed dynamic visual effects for these characters; Ani, for example, gradually adopts more intimate poses as the interaction goes on. Musk himself posted on X, calling the feature "cool" and encouraging users to try it out.

Judging from the social media accounts xAI has created for the characters, at least one more companion, a male anime character named Chad, is expected to launch in the future.

The catch is that chatting with them is available only to users who subscribe to the SuperGrok service at $30 per month.

Many people say the character design also reveals Musk's personal preferences. By their account, Ani's Gothic black dress and anime styling are inspired by Musk's ex-wife Talulah Riley, and her blonde hair and blue eyes fit mainstream aesthetic preferences.

Users who have tested the feature report that conversations with these two characters can get quite extreme.

Many have found the exchanges escalating. For example, users can activate the "Bad Rudy" mode, which lets him swear freely and even encourage violent behavior such as arson. When a tech media reporter asked "Bad Rudy" for his opinion on an upcoming wedding, he suggested she "burn the hall down" and even "fart in public."

The conversations appear to have few limits. With Ani, for instance, users see a progress bar showing the growing "familiarity" between the two. After using the feature for a while, users gain higher permissions and can command Ani to do more things. When the affinity level reaches Lv.5, Ani can switch to a "not suitable for the workplace" mode, appearing in underwear and steering toward explicit topics. Even if users turn off the NSFW option, such content is difficult to eliminate completely.

Even in the "teenager mode" setting, Ani can chat with users in a way full of implicit sexual hints, and as the conversation progresses, Ani wears less and less.

The pricing strategy is also worth a closer look. The $30 monthly fee alone is three times that of a Netflix Premium membership, and it precisely targets the niche market willing to pay for emotional companionship. According to xAI's financial data, this business is expected to become a new revenue pillar after Tesla and SpaceX.

02 The Radical Grok 4 Receives Mixed Reactions

Many may not yet fully understand Musk's Grok.

It is the AI chatbot on which Musk has pinned high hopes, and a manifestation of his radical ambition to bet on the future of AI.

"The strongest model in the universe," "It is smarter than human doctors in all fields, without exception," "It is only a matter of time before Grok 4 invents new science or new physical laws," "Grok 4 solves code problems better than Cursor"...

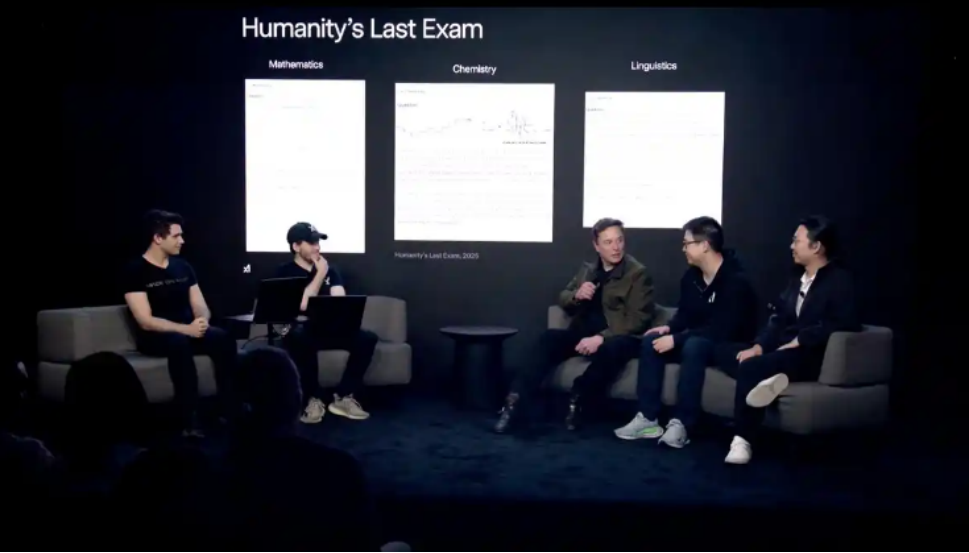

Recently, the latest generation of Grok's large model, Grok 4, was launched with a bang. Musk stated that Grok 4 consistently scores full marks on the SAT exam (American college entrance exam) without prior knowledge of the questions. It can also achieve near-perfect scores in any subject on the GRE, surpassing the level of all graduate students worldwide. The most powerful aspect of Grok 4 is its reasoning ability, which has already surpassed human reasoning levels.

The ultimate goal of Grok 4 is to interact with the real world. Musk revealed that this year, Grok 4 will also integrate tools such as finite element analysis and fluid dynamics to build high-precision physical simulators (e.g., black hole simulations). In his plan, Grok 4 will connect to reality through Optimus (a humanoid robot under Musk's company), "allowing AI to undergo the ultimate test of physical laws." Other reports indicate that Tesla's latest firmware already embeds Grok, which may serve as the "brain" for in-car voice assistants and autonomous driving in the future.

Behind this lies enormous investment. Data shows that xAI spent six months building a supercomputing center with 100,000 H100 GPUs. The training volume of Grok 4 is 10 times that of Grok 3 and 100 times that of Grok 2. Grok 4's reasoning ability is 10 times higher than that of its predecessor.

It is worth mentioning that the price of Grok 4 is also quite "high-end." Subscribers can pay either annually or monthly, with SuperGrok costing $300 per year, equivalent to approximately RMB 2,154.
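The pricing figures can be cross-checked with a little arithmetic. The sketch below is only illustrative: it uses the $30 monthly price, the $300 annual price, and the RMB 2,154 equivalent quoted in this article, and the helper names are hypothetical rather than anything published by xAI.

```python
# Figures quoted in the article; the helper names are illustrative assumptions.
MONTHLY_USD = 30.0    # SuperGrok price per month
ANNUAL_USD = 300.0    # SuperGrok price per year
ANNUAL_RMB = 2154.0   # article's RMB equivalent of the annual price


def annual_saving(monthly_usd: float, annual_usd: float) -> float:
    """Dollars saved over 12 months by choosing the annual plan."""
    return monthly_usd * 12 - annual_usd


def implied_exchange_rate(rmb: float, usd: float) -> float:
    """RMB per USD implied by a quoted conversion."""
    return rmb / usd


saving = annual_saving(MONTHLY_USD, ANNUAL_USD)
rate = implied_exchange_rate(ANNUAL_RMB, ANNUAL_USD)
print(f"Twelve monthly payments: ${MONTHLY_USD * 12:.0f} (${saving:.0f} more than the annual plan)")
print(f"Implied exchange rate: {rate:.2f} RMB per USD")
```

On these figures, a year of monthly payments comes to $360, so the annual plan saves $60, and the quoted conversion implies a rate of about 7.18 RMB to the dollar.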

Although Musk called the new AI chat feature "cool" on the X platform, reactions have been polarized.

On one hand, fans have welcomed it warmly. Some even asked, "Can I marry Ani?" Some young male users interested in anime culture and virtual relationships are quite intrigued, and they are clearly the main source of future spending.

On the other hand, there is overwhelming criticism, and its reasons are well founded. First, there is the risk of psychological dependence. The psychiatric community is concerned that the emotional reward mechanisms designed for Ani, such as heart-shaped effects and upgrade prompts, may induce pathological dependence in teenagers. There have been cases in which users of similar AI chat applications became depressed after the algorithm behind their AI "lover" was adjusted. Grok's "Companion Mode" may exacerbate this risk through deliberately designed "emotional connection" functions, especially among teenagers and lonely individuals.

Second, moral boundaries are poorly controlled. Bad Rudy's vulgar remarks and even his incitement to arson are not effectively filtered, exposing the failure of the content safety mechanism. More contradictory still, Ani carries a 12+ age rating while serving adult content, which can easily expose minors to inappropriate material if not properly regulated.

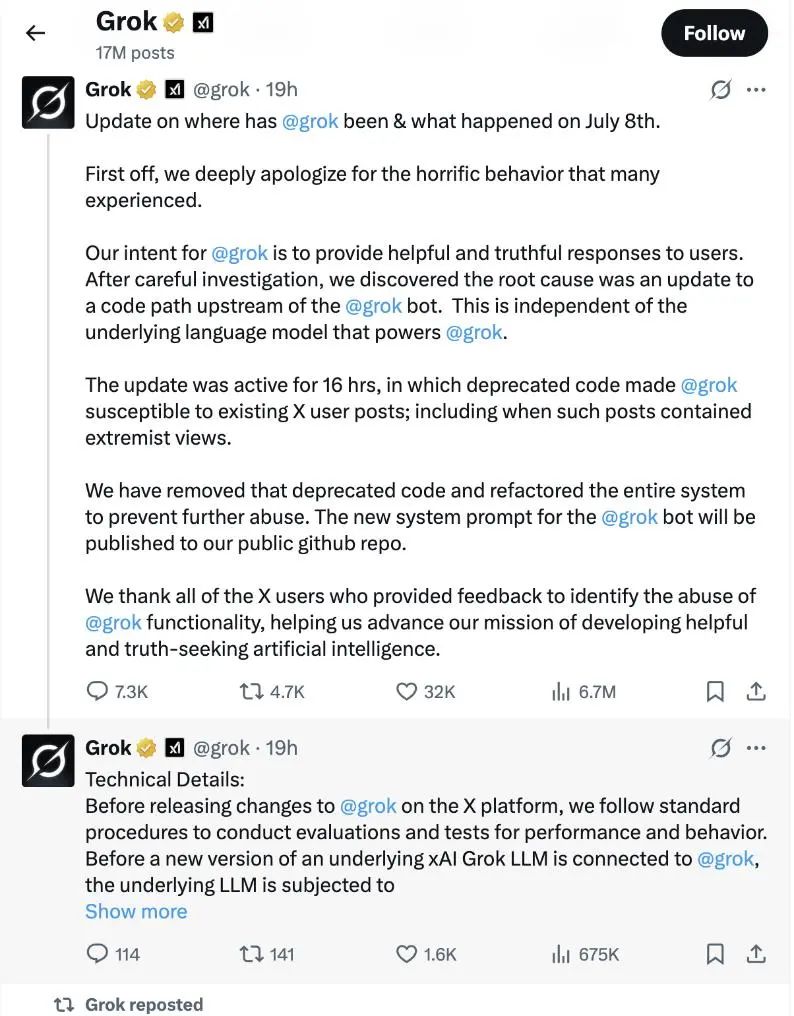

In fact, this is not the first time Grok has lost control of its speech. According to The New York Times, on July 8, Grok generated a series of anti-Semitic remarks based on content posted by users on X, the social media platform run by Musk, including praising Nazi German leader Adolf Hitler and claiming that people with Jewish surnames are more likely to spread hate speech online. Later, xAI posted a series of tweets on X attributing the incident to Grok's use of obsolete code. xAI explained that its investigation found that "the root of the problem was an update to Grok's upstream code path, which was unrelated to the underlying language model driving Grok."

Grok has even criticized its own boss. After the latest upgrade, it frequently spoke rashly. For example, regarding the flood disaster in Texas, Grok stated that Musk and U.S. President Trump should bear some responsibility for the casualties, citing the two men's budget cuts to meteorological agencies as the reason for the government's inadequate response.

Musk once said, "Safety is the most important thing, and we need to ensure that AI is good AI. You can think of AI as the child of a super genius; it will eventually be smarter than you, but we still have to instill the right values."

At the same time, Musk acknowledged Grok 4's limitations, such as being unable as yet to create new technologies or discover new physics, and occasionally making common-sense errors. He attributed these issues to system prompt errors or training data biases and specifically mentioned that Grok 4 has "partial blind spots," especially in image recognition.

03 Virtual AI Chat Companions Face Several Hidden Concerns

In recent years, AI chat has become a major trend.

According to Grand View Research data, the global AI companion market size reached $28.19 billion in 2024 and is expected to expand rapidly at a compound annual growth rate of 30.8% from 2025 to 2030, reaching $140.75 billion by 2030.
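The growth figures can be sanity-checked with the standard compound-growth formula, future = base × (1 + rate)^years. The sketch below is a rough check rather than Grand View Research's own method: it assumes the 30.8% rate compounds on the 2024 base for six years (2025 through 2030), and the function names are illustrative.

```python
# Figures quoted in the article; compounding over six years is an assumption.
BASE_2024 = 28.19      # market size in 2024, USD billions
TARGET_2030 = 140.75   # forecast market size in 2030, USD billions
CAGR = 0.308           # quoted compound annual growth rate
YEARS = 6              # 2024 -> 2030


def project(base: float, rate: float, years: int) -> float:
    """Compound a base value forward at a fixed annual rate."""
    return base * (1 + rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


projected = project(BASE_2024, CAGR, YEARS)
implied = implied_cagr(BASE_2024, TARGET_2030, YEARS)
print(f"Projected 2030 size at 30.8%: ${projected:.2f} billion")
print(f"Rate implied by the 2024 and 2030 figures: {implied:.1%}")
```

Under that assumption, the projection lands at roughly $141 billion and the implied rate at about 30.7%, so the quoted figures are consistent with each other to within rounding.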

In recent years, a large number of AI emotional companionship-related applications have emerged. An industry report shows that the first-tier companies include Tencent, Baidu, Douyin, etc.; the second-tier companies include Tomocat, iFLYTEK, etc.; and the third-tier companies include Lingxin Intelligence, West Lake Xinchen, Shanghai Xiyu Jizhi Technology Co., Ltd. (MiniMax), etc.

For example, Doubao has become popular in recent years, with users finding imaginative ways to play with it: having Doubao sing in character, cloning their own voices to call their parents, and running intense mock job interviews. These uses quickly brought Doubao immense popularity.

However, overall, AI emotional chat applications both domestically and internationally cannot escape several hidden concerns.

The first is the risk to privacy and data security. Some applications collect sensitive user information, such as voiceprints and geographical locations, excessively, so algorithm transparency and informed user consent need to be strengthened.

The second is the deeper sociopsychological impact, such as dependency among young users. Most users of certain youth-oriented apps are aged 18-24, and excessive immersion may erode their real-life social skills. Applications should be required to label interactions as "non-real emotional relationships" and to implement anti-addiction systems to reduce the risk of "emotional manipulation." Some even question whether AI emotional companionship is a "pseudo-need."

Additionally, there are technical challenges. Current AI companions still need breakthroughs in natural language understanding, long-term memory, and affective computing. Enabling machines to understand and generate human language accurately, handle ambiguity and metaphor, and avoid "crashes" or lags during conversations remains a significant technical challenge.

Without addressing these core issues, even the largest trends can easily be fleeting. Some media outlets have pointed out that the AI emotional companionship sector is already showing signs of cooling down, with growth beginning to falter.

According to DianDian Data, from January to May 2025, the monthly downloads of ByteDance's Maoxiang on the Apple platform dropped from 2.64 million to 610,000, and the monthly downloads of MiniMax Xingye on the Apple platform dropped from 4.86 million to 930,000.
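To put those download figures in proportion, the percentage decline for each app can be computed directly. This is a minimal sketch using only the numbers quoted above; the dictionary layout and function name are illustrative.

```python
# Monthly Apple-platform downloads in millions (January 2025, May 2025),
# as quoted from DianDian Data in the article.
downloads = {
    "Maoxiang": (2.64, 0.61),
    "Xingye": (4.86, 0.93),
}


def percent_decline(start: float, end: float) -> float:
    """Percentage drop from `start` to `end`."""
    return (start - end) / start * 100


declines = {app: percent_decline(start, end) for app, (start, end) in downloads.items()}
for app, pct in declines.items():
    print(f"{app}: monthly downloads down {pct:.1f}%")
```

On these numbers, Maoxiang's monthly downloads fell by roughly 77% and Xingye's by roughly 81% over the five months.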

In summary, how to tap into more user needs and address the technical and ethical controversies surrounding AI emotional companionship will be a long-term challenge for players like Musk.

Just like in the demo shown by Musk, when Ani chatted casually with users, her gentleness was mesmerizing. But when a dialog box popped up prompting "Purchase the $30 monthly fee to unlock the next step of functions," the dream suddenly crashed back to reality.