Meta's AI Journey: Why the Setbacks and What's Next?

07/23 2025

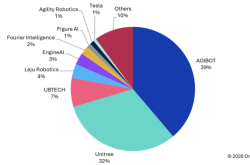

A week ago, news broke in Silicon Valley: Meta had lured Pang Ruoming, the leader of Apple's AI model team, with a lucrative four-year contract worth $200 million, as it formed a super AI lab and vowed to make a comeback in the AI race. Beneath this high-profile talent war, however, lies a harsh reality: Meta, the former social media giant, has suffered one setback after another on its AI journey.

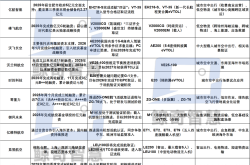

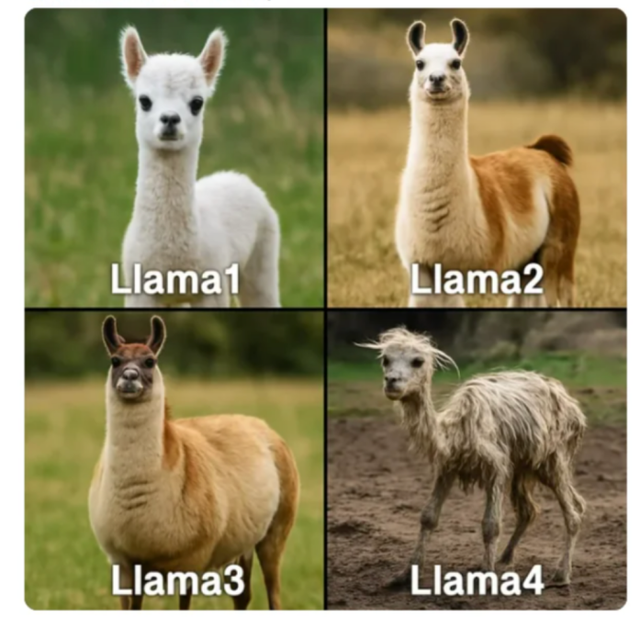

The Llama 4 model fell short of expectations and left developers questioning Meta's fine-tuning practices. The Behemoth large model was delayed, and its internal test results were disappointing. Moreover, the advertising business that funds AI research and development took a $7 billion hit, as major advertisers such as Temu and Shein cut their budgets sharply in response to Trump's tariff policy.

Why is Meta's path in AI narrowing? Is Zuckerberg's $10 billion bet a desperate counterattack or another failed transformation?

As the dominant force of the social media era, Meta once commanded some of the industry's best resources: top scientists such as Yann LeCun, backed by roughly $100 billion in annual cash flow from the advertising business.

So how did it end up having to poach talent at enormous cost? To answer that, we need to look back.

Meta was a leader in AI research in the 2010s and introduced mainstream tools such as PyTorch. But unlike Google with TensorFlow and Microsoft with Azure AI, Meta kept its research academically focused and missed the opportunity to commercialize its technology.

In 2022, on the eve of generative AI's rise, Meta launched a chatbot three months before OpenAI and could have been the first to carry the torch. Instead, BlenderBot 3 and Galactica were pulled because they frequently produced misinformation. Meanwhile, Yann LeCun's public skepticism about large language models deepened the company's strategic wavering, and Meta missed the ChatGPT wave.

From 2023 to 2024, while other companies sprinted toward large models, Zuckerberg's all-in metaverse strategy diverted resources, and Meta fell behind in building out computing capacity.

The problems that had built up over these earlier setbacks all came to a head in 2025. The underwhelming Llama 4 drew accusations of "fine-tuning cheating" and triggered the loss of core talent. The Behemoth large model was delayed after dismal internal test results and may be abandoned altogether. Commercially, Meta's AI application had only 450,000 daily active users, a stark contrast with the 2 billion daily active users of its social platforms and well short of ChatGPT. Worse, Trump's tariffs on China prompted major advertising clients to cut their budgets significantly, dealing a heavy blow to Meta's cash cow.

Faced with the crisis, Zuckerberg decided to "pave a way with money." On talent, Meta spared no expense, poaching seven core R&D personnel from OpenAI in just a month. On infrastructure, it invested lavishly in computing power, building the 1GW Prometheus and 5GW Hyperion superclusters and even constructing its own 200MW natural gas power plant to secure the power supply. On the commercial side, it considered abandoning the open-source Behemoth model and shifting to closed-source development for a clearer path to monetization.

From early technological leadership, to hesitation in the ChatGPT era, to today's frantic catch-up, Meta has stumbled at several critical junctures. It is now squeezed in the middle: unable to break past established giants like Google and Microsoft, and overtaken by latecomers like OpenAI and xAI.

With obstacles ahead and pursuers behind, the former giant is increasingly on the defensive in this AI battle.

Meta's plight in the AI competition did not appear overnight. It is a systemic problem rooted in strategic misjudgments, technical debt, and organizational culture. These factors reinforce one another, which is why Meta keeps repeating its mistakes.

In 2021, when other tech giants began positioning themselves in generative AI, Meta bet heavily on the metaverse, renaming itself and investing tens of billions of dollars to build a virtual world. This had two severe consequences:

First, it missed the golden period of generative AI development. Only in February 2023, after ChatGPT had taken off, did Meta belatedly establish a dedicated generative AI team, by which point OpenAI was a year ahead. Internal memos revealed that OpenAI adopted the H100 in 2022, while Meta did not begin large-scale deployment until 2024, which seriously slowed model development.

Second, resources were diverted. Reality Labs, the metaverse business, kept running up huge losses, reaching $4.2 billion in Q1 2025 and consuming cash flow that could have funded AI. When Meta finally turned to AI, it faced the dual pressure of "catching up on both basic research and commercialization," which blurred its strategic focus.

Recently, a major shakeup in the research team further undermined Meta's long-standing open-source stance, and its painstakingly built developer ecosystem began to erode. From social media to the metaverse and then abruptly to AI, Meta seems to have been constantly searching for the next growth point without firmly executing any long-term strategy.

This hesitation led directly to serious technical debt in the early stages.

On one hand, Meta treated AI as an add-on to its existing business rather than a transformative force. It never gave AI a commercial footing of its own and kept using it to optimize existing products such as advertising. This preference for short-term commercial returns brought in revenue but left technological R&D stagnant and infrastructure lagging. For instance, there is a significant computing power gap between Meta and its competitors. Even now, the 1.3 million GPUs going into the 1GW Prometheus cluster will take time to bring online and put to productive use. Meanwhile, competitors' clusters, such as xAI's in Memphis, are already producing results like Grok 4, opening up a generational gap.

On the other hand, the emphasis on academics over products hindered commercialization. Meta invested billions of dollars in research every year and produced hundreds of papers at top conferences, but failed to turn them into commercial products that users would pay for. It was burning money without making any, running the AI race with a weight on its back.

Beyond strategy and technology, a chaotic organizational culture has also made it difficult to form a stable technology roadmap.

Insiders described severe infighting within Meta, a fragmented technology roadmap, and widespread credit-grabbing. The result was a climate of fear in which employees' core motivation shifted from technological innovation to "avoiding layoffs," driving away many core researchers who were passionate about their work. After Meta's investment in Scale AI, friction arose between the incoming elites and the original team. Alexandr Wang was parachuted in to lead the AI lab and cut multiple academic projects, causing dissatisfaction among the old team. On the policy side, Meta's equity incentives for top talent were often tied to short-term stock prices, potentially encouraging risky behavior over solid research.

In sharp contrast to Silicon Valley's traditional mission-driven approach and OpenAI's AGI slogan, Meta's AI strategy appears utilitarian and short-sighted, more about responding to competition than leading innovation. To some extent, this stems from Zuckerberg's authoritarian leadership style.

Meta is clearly surrounded by crises. Even its increased investment will take time to pay off, and competitors are still accelerating. So does the troubled company have a way out? If so, where is it?

History shows that technological paradigm shifts often accompany reshuffling among tech giants. Meta, which successfully disrupted traditional media in the social media era, now faces the dilemma of being disrupted by new AI players.

However, its core problem is not a lack of resources but the damage done by constant wavering: it has lost both the first-mover advantage and the flexibility and focus of a latecomer.

Now, Meta is trying to turn things around in the bluntest way possible: spending money, poaching talent, and stacking up computing power. In the short term, it can still hold its position by relying on its scale. But in the long run, if it doesn't address the root causes, it is likely to repeat the metaverse fiasco, with huge investments coming to nothing.

To turn the situation around, Meta needs more than just a financial offensive; it requires several changes from within.

Change 1: Clarify the technology roadmap, abandon the wavering strategy of "wanting both," and stop chasing trends.

Meta's frequent scandals in the first half of the year stemmed partly from a loss of composure as it watched various large models iterate rapidly and AI newcomers like DeepSeek climb the rankings. The research team even resorted to gaming benchmark tests to impress the audience. Now, Meta hesitates between open source and closed source and may even abandon the Behemoth model. This ambiguous stance may cause even greater controversy. To make a comeback, Meta must clarify its technology roadmap. If it stays with open source, it should strengthen the Llama ecosystem, lock in PyTorch developers, and become an AI infrastructure provider (similar to the Red Hat model). If it shifts to closed source, it should focus on high-profit scenarios like enterprise AI services but accept the risk of community backlash.

Change 2: Focus on turning technology into value, shifting from a paper-oriented approach to shipping products.

Meta's AI research has long been academically focused, while competitors pay more attention to engineering capabilities. Meta needs to establish a joint "product-research" team to break down traditional barriers. During research, it can learn from the way Google Brain and DeepMind were merged, allowing researchers to participate in product design and engineers to contribute to model optimization, shortening the cycle from paper to product. After a product launches, Meta should use its vast user behavior data (for example, the social interactions of its 2 billion daily active users) to train models rather than relying solely on academic datasets. In the future, infrastructure such as supercomputing clusters should prioritize projects with established commercialization paths over purely academic needs.

Change 3: Adjust the organizational structure to reduce dependence on Zuckerberg's authoritarian leadership.

Meta's decision-making has been overly dependent on its founder, leading to frequent strategic shifts. Moving forward, the company should grant greater autonomy to the AI lab, similar to Google DeepMind, allowing the team to operate independently with less management intervention. At the same time, it should improve talent incentives and establish a long-term performance system that ties executive compensation to the commercialization of AI products rather than to short-term stock price fluctuations. The team must also learn from past mistakes and set clear priorities among AI, the metaverse, and hardware to avoid spreading resources too thin.

Whether Meta can survive the pain of transformation hinges on clarifying its technology roadmap, maintaining strategic focus, and rebuilding an engineering culture.

Of course, if Meta keeps undermining itself, the twilight of its AI journey may truly arrive.