Huang Renxun and Wang Jian's Dialogue: Three Overlooked Key Insights

07/23 2025

From the evolution of AI trends to business wars within the industrial chain.

This year, at the Chain Expo (the China International Supply Chain Expo), Alibaba Cloud founder Wang Jian and NVIDIA founder and CEO Huang Renxun (Jensen Huang) held a dialogue lasting nearly half an hour.

Their discussion ranged from the next stage of AI and the path of open-source models to the expanding boundaries of bioengineering and the underlying logic of the relationship between AI and humans. Though they did not directly discuss products or commercial competition, both pointed to several issues that will shape the future of AI technology.

The dialogue sends a signal to outside observers: as the generative AI boom gradually cools, industry discussion is shifting from parameters, data, and computing power to interfaces with the real world, ushering in a more physically grounded stage of AI.

Starting from this dialogue, this article attempts to dissect the following questions:

- As AI enters the era of "physical intelligence," how will the opportunities and challenges for hardware vendors, cloud providers, and even large model companies be reshaped?
- Regarding the key propositions of open source, bioengineering, and the relationship between humans and AI, what long-term judgments does this conversation reveal?
- From the statements of NVIDIA and Alibaba Cloud, what signals can be read about their strategic layouts for the next stage?

01

From Cognitive AI to Physical AI

Where lies the boundary of imagination for the next AI revolution?

During this dialogue, Huang Renxun presented a forward-looking judgment: Following cognitive intelligence and generative AI, the next wave will enter the era of "physical AI."

Physical AI refers to AI moving from the digital world into the physical world, possessing a complete chain of abilities including perception, reasoning, decision-making, and action execution. From this perspective, popular directions such as humanoid robots and autonomous driving can be classified under the category of physical AI.

Unlike generative AI, which is centered on "instruction-reasoning," physical AI emphasizes interaction with real-world scenarios. AI systems must autonomously interpret external information and respond continuously in uncertain physical environments, which places far higher demands on multimodal perception, agent systems, and real-time response than today's systems face.

From the perspective of training paradigms, this also marks a shift in the training logic of large models.

In the past, models relied on big data for pre-training, but as we enter the era of physical AI, "post-training" and fine-tuning will become crucial. Mechanisms represented by reinforcement learning are no longer just "optimization patches" but key processes to ensure AI behavior aligns with human intentions. Behind this, the consumption of computing power will also escalate to the next order of magnitude.

As everyone knows, NVIDIA's ascendancy during the transition from cognitive intelligence to generative AI is inseparable from its early sustained investment in general-purpose GPU computing and the CUDA ecosystem. But if CUDA gave AI the "muscles" to think, physical AI means AI starts to "move," further driving the value reconstruction of the entire upstream industrial chain.

For example, sensor vendors with multimodal input capabilities (such as Sony, ADI) and precision reducer manufacturers (such as Harmonic Drive, Nabtesco) providing moving parts for robots will move from "marginal supporting roles" to the system core.

AI's "six senses" and "limbs" will all grow out of these hardware foundations.

In addition, the architecture of cloud computing will also face a new round of adjustments. The exponential growth in computing power demand will drive the gradual standardization of the IaaS layer into underlying infrastructure akin to "water, electricity, and coal," while the originally complex SaaS layer will transform into lighter interface forms. The true differentiation may return to business logic and product experience itself.

At the same time, the development of large models is approaching a critical point of the "Scaling Law." The paradigm the industry has generally followed, stacking parameters to improve capabilities, is gradually losing effectiveness.

In other words, the evaluation criteria for model capabilities will shift from a single parameter scale to a comprehensive examination of overall performance: Do they possess the ability to process ultra-long texts? Can they perform multi-step reasoning in complex contexts? Can they adapt to different scenarios and achieve physical-level interactions? These are the core variables for competition in the next stage.

The impact goes far beyond the technical level. For large model enterprises, organizational structures may be redefined. The traditional division of labor, oriented toward engineering efficiency, struggles to support rapid iteration across modalities and scenarios. Future teams may need to move from assembly-line-style coding to product-oriented system collaboration.

A fact easily overlooked is that the core application scenarios of future AI may revolve around the manufacturing industry.

AI will not only control production lines but will also be embedded directly into the products themselves. From AI phones and AI computers to AI glasses, a batch of device categories natively equipped with physical AI is likely to emerge, potentially reshaping the way people interact with smart devices.

02

Three Key Propositions: "Open Source," "Bioengineering," "AI and Humans"

During this dialogue, Huang Renxun and Wang Jian both mentioned the importance of "open source" in AI development.

'Source' refers to source code and implementation details. In the past, open source versus closed source was mostly a dispute over technical routes; in the context of "physical AI," however, it has gradually become a matter of commercial strategy and ecosystem choice.

As AI systems need to adapt to more diverse real-world scenarios, the requirements for customization capabilities and controllable boundaries are constantly increasing. Open-source models, due to their higher flexibility and transparency, are becoming a key foundation for AI to achieve "scenario-level" implementation. Especially in environments where product demand changes rapidly, enterprises that can autonomously adjust model behavior will undoubtedly be more adaptable.

Simultaneously, as the application boundaries of large AI models continue to expand, the division of related rights and responsibilities becomes increasingly important.

In open-source scenarios, broad developer participation in using and testing a model repeatedly stress-tests issues such as model security and the boundaries of content generation. This continuous co-construction and supervision helps gradually clarify how rights and responsibilities for AI models are assigned in practical applications.

NVIDIA's promotion of "open source" is not just lip service. Two months ago, it introduced NVLink Fusion, which opened the NVLink ecosystem to third-party CPUs and accelerators for the first time, encouraging interoperability between external hardware vendors' chips and its own by releasing IP and hardware interfaces.

However, questions also arise: When large models move towards open source, what will be the focus of competition?

The answer may lie in the ability to build closed-loop ecosystems. Just as many Apple users choose Apple for its tightly integrated software and hardware ecosystem, future large model vendors must also build, on top of open source, a complete ecosystem encompassing models, data, applications, and hardware.

But this also means that smaller AI startups with fewer resources may lose room to survive independently even faster when facing giant platforms.

In addition to technology and ecology, another keyword repeatedly mentioned during this dialogue is "bioengineering."

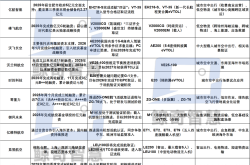

Earlier this year, NVIDIA jointly launched La-Proteina, an AI generation model for protein structures, with the Canadian Mila Research Institute. The signal behind this move is clear: although the pharmaceutical industry has a very high barrier to entry and moves very slowly, once breakthroughs are made, its market space and social value are considerable.

The discussion of "humans" itself runs through the entire dialogue. Huang Renxun depicted a future relationship in which AI accompanies you from birth to old age like a companion. This vision sounds romantic but is not out of reach. Our relationship with AI is quietly shifting from "tool" to "symbiosis."

In fact, on mobile devices, AI has quietly embedded itself into our daily behaviors.

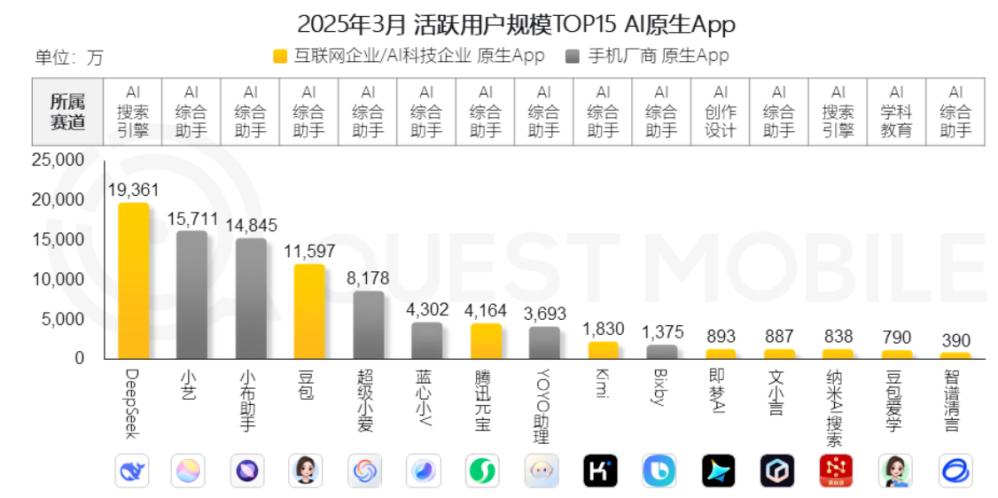

According to the QuestMobile 2025 AI Application Report, native AI assistants such as Xiao Yi, Xiao Bu, and Xiao V already account for a considerable share of users. The average usage frequency of these phone-maker AI assistants is still not high, which indicates one thing: the way AI and mobile phones are combined is far from settled.

As Huang Renxun said, AI will become your "digital partner." The form, position, and capability boundaries of this partner are the questions that all AI enterprises and developers truly need to answer in the next stage.

03

NVIDIA and Alibaba Cloud's Next Decade: Where Are the Layouts and Breakthroughs?

Since the beginning of this year, NVIDIA has been repeatedly emphasizing a key positioning: The company is transforming from a chip manufacturer to an AI infrastructure builder.

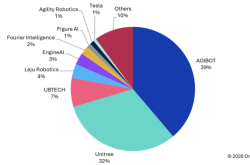

NVIDIA is also acting on its AI Infra strategy. A typical case is CoreWeave, a cloud computing company in which it has invested heavily and whose core business is providing high-performance GPU cloud services for AI applications. Since its IPO in March, CoreWeave's market value has nearly tripled and is now approaching US$73 billion, rising almost in step with the enthusiasm for NVIDIA itself.

However, NVIDIA's layout does not stop at the "cloud." As mentioned earlier, "physical AI" also brings a new paradigm at the computing power level: edge computing platforms.

The "edge" in edge computing is defined relative to the "cloud." It means deploying computing resources closer to the data source, on terminals and on the devices themselves, rather than far away in the cloud.

Although it may sound like a "non-mainstream" solution, it targets the core scenarios of the physical AI era: autonomous driving, robots, drones, and industrial terminals. These fields have extremely strict latency and real-time requirements that traditional cloud architectures struggle to meet.
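To make the latency point concrete, here is a minimal back-of-the-envelope sketch in Python. It only converts a decision delay into the distance a vehicle covers while waiting. The function name and the two latency figures (100 ms for a cloud round trip, 10 ms for on-device inference) are illustrative assumptions, not measurements from the dialogue or from any vendor.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Return the distance in metres covered while waiting latency_ms for a decision."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0  # km/h -> m/s
    return speed_m_per_s * (latency_ms / 1000.0)  # ms -> s


# Assumed, illustrative latencies (hypothetical values, not benchmarks).
SCENARIOS_MS = {
    "cloud round trip (assumed 100 ms)": 100.0,
    "on-device inference (assumed 10 ms)": 10.0,
}

if __name__ == "__main__":
    for label, latency_ms in SCENARIOS_MS.items():
        metres = distance_during_latency_m(100.0, latency_ms)
        print(f"{label}: {metres:.2f} m travelled at 100 km/h")
```

Under these assumed numbers, a car at 100 km/h travels roughly 2.8 m before a 100 ms response arrives, versus roughly 0.3 m at 10 ms, which is one way to see why such workloads are pulled toward the device.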

In contrast, the situation faced by Alibaba Cloud is much more complex.

When upstream hardware vendors keep reaching downstream, providing both infrastructure and cloud services, the pressure on Alibaba Cloud, which started out in IaaS, is considerable. As a result, Alibaba Cloud's strategy is also "moving downstream."

A typical internal strategic direction is the "IaaS + PaaS integration" model. Whereas traditional IaaS only provides bare resources, Alibaba Cloud hopes customers will use its "middle-tier capabilities": databases, big data platforms, Serverless, containerization, DevOps tools, and so on. In other words, it aims to turn the cloud from a resource seller into a product provider, and from there into an ecosystem platform.

This echoes our earlier discussion on "open source": Whether it's NVIDIA or Alibaba Cloud, they are both trying to escape the role of a single seller and move closer to system-level and platform-level ecosystems.

Open source is just a means; the essence is to seize control over downstream scenarios.

In the era of AI, the boundaries between hardware, computing power, models, data, and scenarios are blurring. Whoever occupies the fulcrum of "computing power + platform" first will be positioned to rewrite the downstream rules. This battle may already have quietly begun, well beyond this fireside dialogue.