Why Edge AI Has Emerged as a New Competitive Frontier for Major Tech Firms

09/03 2025

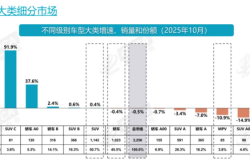

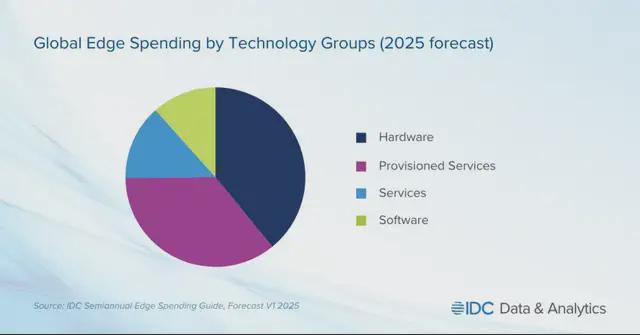

Early in the evolution of AI, cloud AI dominated the industry thanks to its immense computational power and centralized data processing. As application scenarios broadened, particularly in IoT, autonomous driving, and industrial control, its limitations became apparent. International Data Corporation (IDC) forecasts that global spending on edge computing solutions will reach nearly $261 billion in 2025 and, at a projected compound annual growth rate (CAGR) of 13.8%, climb to $380 billion by 2028. Retail and services are expected to account for the largest share, nearly 28% of global spending on edge solutions. These figures illustrate the industry's shift from the cloud to the edge.
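As a quick sanity check on the IDC figures quoted above, the sketch below compounds the 2025 spending forward at the stated CAGR. It assumes the 13.8% rate applies to each year from 2025 to 2028, which the article does not spell out; the function name `project` is illustrative.

```python
def project(value, rate, years):
    """Compound `value` forward by `rate` per year for `years` years."""
    return value * (1 + rate) ** years


# Figures quoted from the IDC forecast, in billions of US dollars.
SPEND_2025 = 261.0
CAGR = 0.138  # assumption: applied uniformly to 2025-2028

for year in range(2025, 2029):
    print(year, round(project(SPEND_2025, CAGR, year - 2025), 1))
```

Under that assumption the 2028 value comes out at roughly $385 billion, which is in line with the $380 billion quoted.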

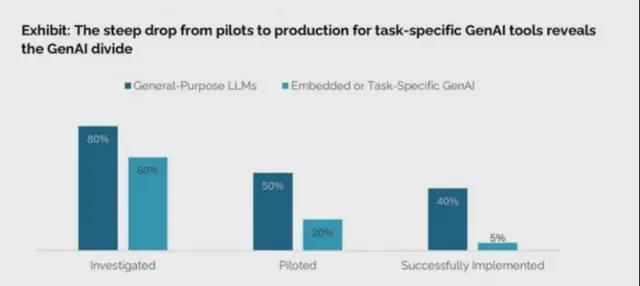

Concerns are mounting that artificial intelligence is heading toward a bubble. A report from the MIT NANDA project, "The GenAI Divide: State of AI in Business 2025," found that 95% of companies saw minimal productivity gains after developing generative AI tools. Even Sam Altman, CEO of OpenAI, has acknowledged that investors are overexcited about AI and likened the current market to a bubble.

Nevertheless, insiders argue that such criticism primarily targets the cloud-based AI market and software algorithms.

01 Why Edge Generative AI Is Necessary

Mainstream large language models, including OpenAI's GPT, Google's Gemini, Anthropic's Claude, and China's popular DeepSeek, currently rely heavily on cloud computing for generation tasks. Running on remote servers with abundant compute, this model handles demanding workloads such as large-scale model training and high-resolution image synthesis, and it scales flexibly from everyday Q&A for individual users to batch deployments across an enterprise.

In enterprise applications and other demanding scenarios, however, the cloud model's shortcomings emerge. Latency is high, and response times for complex tasks fluctuate with network conditions. Dependence on the network is strong: the service becomes unusable when the connection drops. Most critically, data privacy is at risk, because large volumes of raw data must be uploaded to the cloud for processing. That raises bandwidth costs and exposes the data to leaks through transmission or storage vulnerabilities, a particular challenge in sensitive fields such as healthcare and finance.

Hence the advantages of edge generative AI are coming to the fore. It deploys generation capabilities directly on local devices, whether phones, surveillance cameras, autonomous vehicles, or industrial machines. Because data is processed locally, sensitive information stays on the device and privacy is protected at the source. Low latency also makes edge AI well suited to real-time scenarios: autonomous driving requires millisecond-level judgments about road conditions, and industrial automation relies on immediate equipment fault warnings. Finally, edge AI eliminates frequent data transmission, which sharply reduces bandwidth demand and lets devices operate independently in remote areas without network coverage or in industrial workshops with weak signals, where a cloud-only model would be unreliable.

The technical prototype of edge intelligence dates back to the 1990s, when Content Delivery Networks (CDNs) emerged. CDNs were initially positioned to deliver network services and video content through servers distributed at the network's edge. Their core goal was to relieve the load on central servers and improve the efficiency of content transmission and access.

With the exponential growth of IoT devices and the spread of 4G and 5G mobile networks, however, global data generation has soared into the zettabyte (ZB) era. Against this backdrop, traditional cloud computing architectures have gradually exposed their shortcomings: all data must be transmitted to the cloud for processing, which consumes substantial bandwidth and adds latency that grows with transmission distance. Cross-network data flows also carry a risk of privacy leaks, making it hard to serve scenarios with strict real-time and security requirements.

To address these pain points of cloud computing, the concept of edge computing was formally proposed in the 21st century. Its core idea is to move data processing from the cloud to edge nodes close to the data source. By screening, processing, and forwarding data locally, edge nodes significantly reduce the volume uploaded to the cloud, which relieves bandwidth pressure and lowers latency. At this stage, however, edge computing focused on optimizing data processing; it was not yet integrated with artificial intelligence (AI) and did not involve deploying or running AI algorithms.

Only after 2020, as AI technology matured (especially lightweight models and low-power computing), did edge computing and AI begin to integrate deeply, giving rise to "edge intelligence" as a distinct fusion technology. Its core feature is deploying AI algorithms, for both inference and training, on edge devices such as IoT terminals and edge servers close to where data is generated. This enables real-time data processing and low-latency decision-making without uploading raw data to the cloud, protecting data privacy at the source.

The development of edge intelligence can be divided into three stages. The first is "edge inference": model training still relies on the cloud, and the trained model is then pushed to edge devices to run inference. The second is "edge training": automated development tools move the training, iteration, and deployment of models onto the edge, reducing dependence on cloud resources. The third, which represents the future direction, is "autonomous machine learning": edge devices gain autonomous perception and adaptive learning capabilities, so that models can be optimized and upgraded without human intervention.
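The first stage, "edge inference," can be sketched in a few lines. The example below is only an illustration under simple assumptions: a toy nearest-centroid classifier stands in for a real model, JSON stands in for a real model-exchange format, and the names `cloud_train` and `EdgeModel` as well as the sensor values are hypothetical.

```python
import json
from statistics import fmean


def cloud_train(labelled):
    """Cloud side: fit a nearest-centroid classifier and serialise it as JSON."""
    centroids = {}
    for label, rows in labelled.items():
        # Average each feature column to obtain the class centroid.
        centroids[label] = [fmean(column) for column in zip(*rows)]
    return json.dumps({"version": 1, "centroids": centroids})


class EdgeModel:
    """Edge side: load the pushed model and run inference locally."""

    def __init__(self, payload):
        self.centroids = json.loads(payload)["centroids"]

    def predict(self, features):
        def squared_distance(centroid):
            return sum((f - c) ** 2 for f, c in zip(features, centroid))

        # Pick the label whose centroid is closest to the new reading.
        return min(self.centroids, key=lambda label: squared_distance(self.centroids[label]))


# Hypothetical training data: [vibration in mm/s, temperature in degrees C].
training_data = {
    "normal": [[0.9, 40.0], [1.1, 42.0]],
    "fault": [[4.8, 70.0], [5.2, 74.0]],
}

payload = cloud_train(training_data)  # runs in the cloud
model = EdgeModel(payload)            # payload is pushed to the device
print(model.predict([1.2, 43.0]))     # raw reading never leaves the device
```

Only the serialised model crosses the network in this sketch; the readings being classified stay on the device, which is the privacy property the stage description relies on.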

None of this implies that cloud AI will be supplanted. For ultra-large-scale model training and complex tasks involving cross-device collaboration, the cloud's computing power remains irreplaceable. The likely trend is a complementary "cloud + edge" arrangement: the cloud handles training and optimization of the underlying model, while the edge handles real-time deployment and data processing in local scenarios. This synergy combines the cloud's compute advantage with the edge's privacy and real-time strengths, ultimately bringing AI into more industries securely and efficiently.

Data source: Precedence Research; tabulated by Semiconductor Industry Horizontal

Market research data indicates that the global edge AI market is projected to exceed $140 billion by 2032, up from $19.1 billion in 2023. According to Precedence Research, the edge computing market could reach $3.61 trillion by 2032 (CAGR 30.4%). These figures point to the vast potential of edge AI and explain why major tech companies are turning their attention to this new blue ocean.
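The edge AI projection above does not state a growth rate, but one can be derived from its two endpoints. The sketch below computes the implied CAGR, treating $140 billion as the 2032 value even though the text says the market will "exceed" it, so the result is a lower bound. The function name `implied_cagr` is illustrative.

```python
def implied_cagr(start, end, years):
    """Annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Edge AI market size in billions of US dollars, as quoted in the text.
EDGE_AI_2023 = 19.1
EDGE_AI_2032 = 140.0  # assumption: taken as the exact 2032 value

rate = implied_cagr(EDGE_AI_2023, EDGE_AI_2032, 2032 - 2023)
print(f"Implied CAGR 2023-2032: {rate:.1%}")
```

The implied rate is a little under 25% per year.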

02 Tech Giants Move In to Seize the Initiative

Competition among major tech companies in edge AI chips is fierce. Chips are the core hardware underpinning edge AI, and in recent years the field has trended toward higher computational power and architectural innovation.

Apple has deployed self-developed edge AI chips across the iPhone line. In the iPhone 16 series, for example, the A18 chip is deeply optimized for AI functions. It is built on a second-generation 3-nanometer process, integrates a 16-core Neural Engine, and can perform up to 35 trillion operations per second. This computational power enables near-instant Face ID recognition and smooth Animoji generation, with response times measured in milliseconds. Because the chip processes data locally, that data does not need to be uploaded to the cloud, which avoids the privacy risks of cloud transmission.

NVIDIA, a leader in graphics processing and AI computing, has also made notable progress in edge AI hardware. Its Jetson series of edge AI modules is designed for edge devices such as robots, drones, and smart cameras. The Jetson Xavier NX, for example, integrates 384 NVIDIA CUDA cores and 48 Tensor Cores, delivering up to 21 TOPS (trillions of operations per second) at a power draw of 15 W. This gives robots strong visual recognition and decision-making support in complex, dynamic environments. In logistics and warehousing, mobile robots built on the Jetson Xavier NX can quickly identify goods and shelf positions, plan optimal paths, and complete cargo handling tasks efficiently, significantly improving operational efficiency.
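To put the quoted 21 TOPS at 15 W in perspective, the sketch below derives two rough figures: efficiency in TOPS per watt, and a theoretical ceiling on inferences per second. The per-frame operation count is a hypothetical placeholder, not a measurement of any real model, and real workloads reach only a fraction of peak throughput.

```python
def tops_per_watt(tops, watts):
    """Peak efficiency in trillions of operations per second per watt."""
    return tops / watts


def peak_inferences_per_second(tops, ops_per_inference):
    """Theoretical ceiling, assuming 100% utilisation of the accelerator."""
    return tops * 1e12 / ops_per_inference


JETSON_XAVIER_NX_TOPS = 21.0   # from the text
JETSON_XAVIER_NX_WATTS = 15.0  # from the text
HYPOTHETICAL_OPS_PER_FRAME = 10e9  # assumption: a vision model needing 10 billion ops per frame

efficiency = tops_per_watt(JETSON_XAVIER_NX_TOPS, JETSON_XAVIER_NX_WATTS)
ceiling = peak_inferences_per_second(JETSON_XAVIER_NX_TOPS, HYPOTHETICAL_OPS_PER_FRAME)
print(f"{efficiency:.1f} TOPS/W, at most {ceiling:.0f} frames/s in theory")
```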

Chinese companies have also made notable strides in edge AI chips. IntelliFusion launched the DeepEdge 10 series in 2022, tailored for large models at the edge. In 2024, the upgraded DeepEdge200 adopted D2D chiplet technology and was paired with the IPU-X6000 accelerator card, which supports nearly 10 mainstream large models, including IntelliFusion's own Yuntian Tianshu and Alibaba's Tongyi Qianwen. In smart security cameras, this enables real-time recognition of abnormal behavior and cuts early-warning response time to within 0.5 seconds.

Main products of Chinese AI computing chip companies. Source: Minsheng Securities

On the evening of August 26, IntelliFusion released its 2025 semi-annual report. Revenue for the first half of 2025 was 646 million yuan, up 123.10% year-on-year. The net loss attributable to shareholders was 206 million yuan, 104 million yuan narrower than a year earlier, and the non-GAAP net loss was 235 million yuan, 110 million yuan narrower. The company attributed the revenue growth primarily to higher sales in its consumer and enterprise-level scenario businesses, and the narrower loss mainly to the simultaneous increase in revenue and gross margin during the reporting period.
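The year-earlier figures are not stated, but they follow from the reported growth rate and the amounts by which the losses narrowed. The sketch below backs them out; the function names are illustrative, and the results are derived values rather than numbers taken from the report.

```python
def prior_period_revenue(current, yoy_growth):
    """Revenue a year earlier, given current revenue and year-on-year growth."""
    return current / (1 + yoy_growth)


def prior_period_loss(current_loss, narrowed_by):
    """Loss a year earlier, given the current loss and how much it narrowed."""
    return current_loss + narrowed_by


# All amounts in millions of yuan, as quoted in the text.
revenue_h1_2024 = prior_period_revenue(646.0, 1.2310)
net_loss_h1_2024 = prior_period_loss(206.0, 104.0)
non_gaap_loss_h1_2024 = prior_period_loss(235.0, 110.0)

print(f"H1 2024 revenue:       {revenue_h1_2024:.1f}")
print(f"H1 2024 net loss:      {net_loss_h1_2024:.0f}")
print(f"H1 2024 non-GAAP loss: {non_gaap_loss_h1_2024:.0f}")
```

On these numbers, first-half revenue a year earlier was about 290 million yuan against a net loss of about 310 million yuan, so the loss has gone from exceeding revenue to roughly a third of it.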

Data source: company financial report; tabulated by Semiconductor Industry Horizontal

Edge devices have limited memory and computational power. In response, international technology giants such as Google, Microsoft, and Meta have focused on researching and optimizing lightweight large models that run efficiently on edge devices.

Google has slimmed down some of its large models through model architecture design and parameter fine-tuning. Its Gemini Nano model, for instance, is based on the Transformer architecture and significantly reduces parameter count and computational complexity while maintaining strong performance. This allows it to run smoothly on edge devices such as smart security cameras and to support real-time video analysis. In urban surveillance networks, cameras equipped with Gemini Nano can identify pedestrians and vehicles in real time, monitor for abnormal behavior, and issue timely alerts, strengthening urban security.

Microsoft took a different approach with its phi-1.5 model, which has a relatively small parameter count and stands out for its choice of training data. The model was trained on 27 billion tokens of carefully screened "textbook-quality" data and excels at mathematical reasoning, surpassing some multi-billion-parameter models. In an intelligent tutoring system, phi-1.5 can answer students' math questions quickly and accurately with detailed problem-solving steps, assisting teachers and improving teaching quality and efficiency.
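Why parameter count matters on memory-constrained devices can be shown with simple arithmetic: the memory needed for a model's weights is roughly the number of parameters times the bytes per weight. The sketch below applies this to a 1.3-billion-parameter model, the size Microsoft has published for phi-1.5 (the article itself does not give the figure). It ignores activations and other runtime memory, so real requirements are higher.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


PHI_1_5_PARAMS = 1.3e9  # published size of phi-1.5; not stated in the article

for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(PHI_1_5_PARAMS, bits):.2f} GB")
```

At 16 bits per weight this is about 2.6 GB, and at 4 bits about 0.65 GB, which is why small models and lower-precision weights are the usual route onto phones and cameras.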

03 Where Is the Breakout Point?

Smart home devices are among the most widespread applications of edge AI, which lets them evolve from "single instruction execution" to "predictive behavior services." Smart thermostats learn users' schedules and sleep cycles and adjust the temperature dynamically in light of outdoor weather, maintaining comfort while reducing energy consumption by 15%-20%, well ahead of traditional devices. XiaoDu smart speakers and similar devices use edge AI to respond to high-frequency commands within 0.3 seconds and can link devices across brands into scenario services; a "Home Mode," for example, automatically triggers lights, temperature adjustment, and music playback. Such features have driven the penetration rate of scenario linkage in China's smart home market to 38%, above the global average.

Edge AI is equally important in wearable devices. Meta's collaboration with Ray-Ban has produced smart glasses capable of millisecond-level image recognition and local translation in cities like Shanghai. The glasses translate road sign text in real time and recommend nearby stores even without an internet connection, and cumulative shipments have exceeded 2 million units. Chinese brands, by contrast, emphasize in-depth health management. Huawei's Watch GT series uses edge AI to combine heart rate, blood oxygen, and ECG data, achieving 85% accuracy in screening for sleep apnea syndrome and helping more than 100,000 users detect health issues early. OPPO's fitness tracker adjusts exercise intensity in real time based on user data and generates personalized plans, forming a closed "collection - analysis - advice" loop for health management.

In the industrial sector, the fusion of AI with IoT and robotics is upgrading factories from "single-device automation" to "full-process intelligent collaboration." Edge AI processes production data in real time, enabling intelligence across the full chain of "fault prediction, process optimization, and quality traceability." Robots in smart factories have evolved from mechanical arms performing repetitive tasks into "intelligent production units" with "real-time decision-making capabilities." Arm's computing platform serves as an "efficient data processing base" for the Industrial Internet of Things. In industrial settings, a single smart device generates more than 10 GB of sensor data per day, including temperature, vibration, and pressure readings. Uploading all of it to the cloud would consume substantial bandwidth and introduce delays of up to several minutes. The edge computing capability of the Arm platform instead enables "local data filtering and analysis": only "abnormal data" (such as a vibration frequency outside the normal range) is uploaded to the cloud, while a "device health report" is generated locally to alert maintenance personnel promptly.
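The local filtering pattern described above can be sketched as follows. This is a minimal illustration, not Arm's implementation: the sensor names, normal ranges, and function names are all hypothetical, and a real deployment would take its limits from the equipment vendor. The last function converts the 10 GB per day figure into an average bitrate to show what uploading everything would cost.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor: str
    value: float


# Hypothetical normal operating ranges (assumption, for illustration only).
NORMAL_RANGE = {"vibration_hz": (10.0, 120.0), "temperature_c": (5.0, 85.0)}


def split_readings(readings):
    """Return (to_upload, report): anomalies go to the cloud, the summary stays local."""
    to_upload = []
    report = {}
    for reading in readings:
        low, high = NORMAL_RANGE[reading.sensor]
        stats = report.setdefault(reading.sensor, {"total": 0, "abnormal": 0})
        stats["total"] += 1
        if not low <= reading.value <= high:
            stats["abnormal"] += 1
            to_upload.append(reading)
    return to_upload, report


def average_mbit_per_s(gb_per_day):
    """Sustained uplink needed to ship `gb_per_day` gigabytes every 24 hours."""
    return gb_per_day * 1e9 * 8 / 86_400 / 1e6


readings = [
    Reading("vibration_hz", 50.0),
    Reading("vibration_hz", 180.0),  # outside the assumed normal range
    Reading("temperature_c", 60.0),
]
to_upload, report = split_readings(readings)
print("upload to cloud:", to_upload)
print("local health report:", report)
print(f"uploading 10 GB/day needs about {average_mbit_per_s(10):.2f} Mbit/s per device")
```

In this sample only one of the three readings leaves the device. The bitrate figure is modest for a single machine but scales linearly with the number of devices on a factory floor.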

In the long term, the deeper value of edge AI lies in transforming artificial intelligence from a "tool attribute" into a "scenario attribute." Intelligence no longer relies solely on remote cloud support but is embedded in specific life and production scenarios: home thermostats that adjust temperature to user habits, factory robots that optimize their own operating paths, and wearable devices that tailor health plans to their users. When that happens, artificial intelligence becomes truly integrated into the fabric of industry and daily life. This transformation both mitigates the risk of a technology bubble and ensures that the value of artificial intelligence takes root in practical applications.