What are the common active safety-assisted driving features in autonomous driving?

09/08 2025

As vehicle automation levels increase, so do the demands on driving safety. Passive safety only takes effect once a collision occurs; active safety-assisted driving instead perceives the surrounding environment in real time, assesses risk, and intervenes when necessary. This allows the system to warn or brake while an accident is still developing, giving the driver a 'second pair of eyes' and a 'second foot.' From early cruise control to today's comprehensive safety systems covering urban congestion, intersections, cyclist protection, and other scenarios, active safety-assisted driving reflects the continuous refinement of the 'perception-decision-execution' closed loop.

Technical Foundations of Active Safety-Assisted Driving

1) Environmental Perception

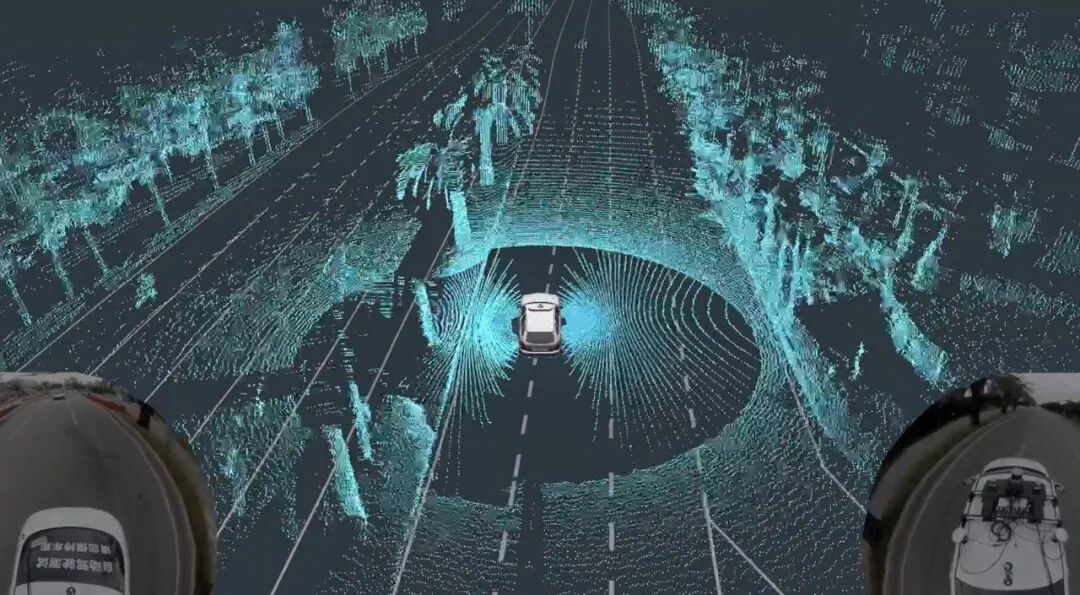

For precise warnings and interventions, active safety-assisted driving requires comprehensive and reliable perception of the vehicle's surroundings. In this regard, millimeter-wave radar, cameras, ultrasonic sensors, and LiDAR each play distinct roles while complementing one another.

Millimeter-wave radar operates in the 24GHz or 77GHz bands and typically uses frequency-modulated continuous-wave (FMCW) signals: the frequency offset between the transmitted and received chirps gives the distance to a target, and the Doppler shift gives its radial speed, so moving targets ahead and behind can be measured quickly. Radar output remains stable in adverse weather such as fog, rain, or snow. Cameras, by contrast, capture rich, high-resolution image detail, and convolutional neural networks optimized for the task identify pedestrians, vehicles, traffic signs, and more; at night or in backlit scenes, HDR fusion and denoising algorithms are needed to maintain image quality. Ultrasonic sensors work only at very short range (approximately 0.2–5 meters) but provide cost-effective, reliable coverage for parking and low-speed maneuvers. LiDAR, which has matured in recent years, scans the surroundings as a 360-degree three-dimensional point cloud; when time- and space-synchronized with an inertial measurement unit (IMU), it produces centimeter-level 3D environment models and offers the most direct spatial information in complex scenarios. Each sensor's signal passes through RF front-end or image preprocessing, analog-to-digital conversion, filtering, and feature extraction before it reaches the downstream algorithms.
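To make the radar measurement concrete, the sketch below applies the textbook FMCW relations for a linear chirp: range R = c·f_b / (2S), where f_b is the beat frequency and S = B / T_c is the chirp slope, and radial speed v = f_d·λ / 2 from the Doppler shift f_d. The function names and the example parameters (a 77GHz carrier sweeping 300 MHz in 50 µs) are illustrative assumptions, not values from any specific sensor.

```python
"""Textbook FMCW radar relations (all numeric parameters are hypothetical examples)."""

C = 299_792_458.0  # speed of light in m/s


def range_from_beat(f_beat_hz: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Range R = c * f_b / (2 * S), where S = B / T_c is the chirp slope in Hz/s."""
    slope_hz_per_s = bandwidth_hz / chirp_s
    return C * f_beat_hz / (2.0 * slope_hz_per_s)


def radial_speed_from_doppler(f_doppler_hz: float, carrier_hz: float) -> float:
    """Radial speed v = f_d * wavelength / 2 (positive f_d means the target approaches)."""
    wavelength_m = C / carrier_hz
    return f_doppler_hz * wavelength_m / 2.0


if __name__ == "__main__":
    # Hypothetical 77 GHz radar sweeping 300 MHz in 50 microseconds.
    r = range_from_beat(f_beat_hz=2.0e6, bandwidth_hz=300e6, chirp_s=50e-6)
    v = radial_speed_from_doppler(f_doppler_hz=5.0e3, carrier_hz=77e9)
    print(f"range = {r:.1f} m, closing speed = {v:.2f} m/s")
```

With these numbers the target sits at roughly 50 m and closes at roughly 9.7 m/s. Real radars obtain f_b and f_d from FFTs over many chirps; this sketch starts from frequencies that have already been measured.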

2) Multi-Modal Fusion

Because multiple sensors run in parallel, a core technology of active safety-assisted driving is fusing their data into the most reliable possible representation of the environment. Fusion is typically hierarchical. At the lowest level, radar range-Doppler data and LiDAR point clouds are transformed into a common coordinate system so that obstacle positions and extents can be estimated more precisely. At the middle level, objects detected by the camera are matched with radar-tracked trajectories, and each pedestrian or vehicle confirmed by both sensors receives a higher confidence. At the highest level, risk-assessment networks combine the motion history of all targets, lane topology from high-definition maps, and traffic rules to infer each target's likely intent. More recently, end-to-end fusion networks learn from multi-modal data jointly within a single network, further improving real-time performance and robustness.
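The middle fusion level (matching camera detections to radar tracks and raising confidence when both agree) can be sketched as below. This is a deliberately simplified stand-in: it gates pairs on azimuth only and matches them greedily by nearest neighbor, whereas production systems use richer gating and association methods such as JPDA or MHT. All class names, the 2° gate, and the 0.2 confidence boost are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CameraObject:
    label: str            # class from the image network, e.g. "car" or "pedestrian"
    azimuth_deg: float    # bearing estimated from the image
    score: float          # classifier confidence in [0, 1]


@dataclass
class RadarTrack:
    track_id: int
    azimuth_deg: float
    range_m: float
    speed_mps: float


@dataclass
class FusedObject:
    label: str
    track_id: Optional[int]   # None if no radar track matched
    range_m: Optional[float]
    confidence: float


def associate(cams: List[CameraObject], tracks: List[RadarTrack],
              gate_deg: float = 2.0, boost: float = 0.2) -> List[FusedObject]:
    """Greedy nearest-neighbour camera-to-radar matching on azimuth inside a gate."""
    # All candidate pairs, closest first; each object and track is used at most once.
    pairs = sorted(
        (abs(c.azimuth_deg - t.azimuth_deg), i, j)
        for i, c in enumerate(cams)
        for j, t in enumerate(tracks)
    )
    used_cams, used_tracks, fused = set(), set(), []
    for dist, i, j in pairs:
        if dist > gate_deg:
            break                      # sorted, so every remaining pair is outside the gate
        if i in used_cams or j in used_tracks:
            continue
        used_cams.add(i)
        used_tracks.add(j)
        c, t = cams[i], tracks[j]
        # Confirmation by a second, independent sensor raises confidence (capped at 1).
        fused.append(FusedObject(c.label, t.track_id, t.range_m, min(1.0, c.score + boost)))
    for i, c in enumerate(cams):
        if i not in used_cams:
            # Camera-only object: kept, but without radar range and with its base score.
            fused.append(FusedObject(c.label, None, None, c.score))
    return fused


if __name__ == "__main__":
    cams = [CameraObject("pedestrian", 10.5, 0.7), CameraObject("car", -3.0, 0.9)]
    tracks = [RadarTrack(7, -2.5, 35.0, -1.2), RadarTrack(8, 25.0, 60.0, 0.0)]
    for obj in associate(cams, tracks):
        print(obj)
```

In the example, the car matches radar track 7 and its confidence is raised to the 1.0 cap, while the pedestrian has no radar track within the gate and is kept as a camera-only object with its original score.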

3) Core Algorithms

After fusion, the next challenge is tracking each target and predicting its motion. When many targets are present, data-association algorithms such as Joint Probabilistic Data Association (JPDA) and Multiple Hypothesis Tracking (MHT) resolve ambiguous measurement-to-track assignments and reduce mismatches. Extended Kalman Filters (EKF) and Unscented Kalman Filters (UKF) then use motion models of the targets to estimate their states (position, velocity, heading) precisely. To predict where a target will go, models based on Long Short-Term Memory (LSTM) networks or Graph Neural Networks (GNN) combine lane geometry, traffic signals, and the target's turn-signal status to infer plausible trajectories over the next one to two seconds. For pedestrians and cyclists, the system additionally analyzes human-body keypoints, recognizing head orientation and walking posture to judge whether a pedestrian intends to cross the road. Throughout this pipeline, latency control and packet-loss recovery are crucial; otherwise decisions may be based on stale data, or necessary warnings may fail to trigger.
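The EKF and UKF generalize the linear Kalman filter to nonlinear motion and measurement models. As a minimal illustration of the predict/update cycle they share, the sketch below implements a plain linear Kalman filter with a constant-velocity model along one axis, using position measurements only. The class name, the noise values, and the one-dimensional simplification are assumptions made for brevity.

```python
class ConstantVelocityKF:
    """Linear Kalman filter with state [position, velocity] and position-only measurements."""

    def __init__(self, pos, vel, pos_var=1.0, vel_var=4.0, accel_noise=1.0, meas_var=0.25):
        self.x = [pos, vel]                       # state estimate
        self.P = [[pos_var, 0.0], [0.0, vel_var]]  # state covariance
        self.q = accel_noise   # variance of unmodelled acceleration, (m/s^2)^2
        self.r = meas_var      # position measurement noise variance, m^2

    def predict(self, dt):
        p, v = self.x
        self.x = [p + v * dt, v]                  # x = F x with F = [[1, dt], [0, 1]]
        (a, b), (c, d) = self.P
        # F P F^T, written out for the 2x2 case
        a2 = a + dt * (b + c) + dt * dt * d
        b2 = b + dt * d
        c2 = c + dt * d
        q = self.q
        # + Q for the discrete white-noise acceleration model
        self.P = [
            [a2 + q * dt**4 / 4, b2 + q * dt**3 / 2],
            [c2 + q * dt**3 / 2, d + q * dt**2],
        ]

    def update(self, z):
        # H = [1, 0]: only position is measured
        (a, b), (c, d) = self.P
        s = a + self.r              # innovation variance H P H^T + R
        k0, k1 = a / s, c / s       # Kalman gain K = P H^T / s
        y = z - self.x[0]           # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P = (I - K H) P
        self.P = [
            [(1 - k0) * a, (1 - k0) * b],
            [c - k1 * a, d - k1 * b],
        ]


if __name__ == "__main__":
    kf = ConstantVelocityKF(pos=0.0, vel=0.0)
    dt = 0.1
    for k in range(1, 101):
        kf.predict(dt)
        kf.update(10.0 * dt * k)  # noise-free positions of a target moving at 10 m/s
    print(f"position = {kf.x[0]:.2f} m, velocity = {kf.x[1]:.2f} m/s")
```

Although the filter starts from zero velocity and never measures speed directly, its velocity estimate converges toward 10 m/s as position measurements accumulate. An EKF replaces F and H with Jacobians of nonlinear models; a UKF propagates sigma points instead.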

4) System Architecture and Software Platform

The performance and reliability of active safety-assisted driving systems depend on an Electrical/Electronic (E/E) architecture that is clearly layered, responsive in real time, and redundant. Traditional automotive electronics mostly use a 'distributed architecture,' in which each function (e.g., AEB, LKA, BSD) runs on its own ECU (Electronic Control Unit) and ECUs communicate over buses such as CAN or FlexRay. This approach is clearly structured and keeps modules independent, but as more functions are integrated it runs into limited bus bandwidth, duplicated control logic, inefficient coordination between ECUs, and insufficient real-time responsiveness.

Consequently, a growing number of automakers are moving to a 'centralized architecture,' consolidating multiple active safety-assisted driving functions onto one or more high-performance domain controllers (such as an autonomous driving domain controller, ADC), often combined with zonal controllers that handle local I/O, so that algorithms execute in one place and perception and control signals are scheduled centrally. Core functions such as automatic emergency braking, lane keeping, and adaptive cruise control can then share a single central computing platform. Heterogeneous cores (e.g., NPU + CPU + DSP) handle perception, decision-making, and control separately, which shortens response times and reduces the number of ECUs and the associated hardware cost.

On the software side, active safety-assisted driving functions are typically built on the AUTOSAR (Automotive Open System Architecture) standard and adopt a Service-Oriented Architecture (SOA), encapsulating each module as a service so that functions communicate through standardized interfaces. Compute-heavy perception algorithms mostly run on Linux or QNX, while real-time control components run on a real-time operating system (RTOS) to meet millisecond-level response requirements. Autonomous driving chips and platforms (e.g., NVIDIA Orin, Mobileye EyeQ5, Huawei MDC) provide extensive acceleration libraries for real-time CNN inference, point cloud preprocessing, and trajectory planning, and form the computational backbone of modern active safety-assisted driving platforms.

5) Functional Safety and Redundancy Design

In active safety systems, every decision can directly affect driving safety, so functional safety design is the lifeline of deployment. The industry follows ISO 26262 for systematic functional safety, which requires verification at the system, hardware, and software levels so that no failure leads to a loss of vehicle control. Hazards associated with critical functions (e.g., AEB or LKA) are rated with an ASIL (Automotive Safety Integrity Level) from A to D, derived from the severity, exposure, and controllability of each hazardous event. ASIL D is the most stringent level and is typically met with redundant computation paths, redundant power supplies, and redundant actuators.
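In ISO 26262-3, the ASIL is derived during hazard analysis and risk assessment from three classes per hazardous event: severity (S0–S3), exposure (E0–E4), and controllability (C0–C3). The standard's lookup table is reproduced exactly by summing the three class numbers, a widely used shortcut that the sketch below encodes. The example rating in the usage code is hypothetical and is not an assessment of any real function.

```python
def asil(severity: int, exposure: int, controllability: int) -> str:
    """Return "QM", "A", "B", "C" or "D" for S0-S3, E0-E4, C0-C3 classes.

    For S1-S3, E1-E4 and C1-C3 the sum S + E + C reproduces the ISO 26262-3
    risk graph: 10 -> D, 9 -> C, 8 -> B, 7 -> A, 6 or less -> QM.
    A class of 0 in any dimension means no ASIL is assigned (QM).
    """
    if not (0 <= severity <= 3 and 0 <= exposure <= 4 and 0 <= controllability <= 3):
        raise ValueError("S must be 0-3, E 0-4 and C 0-3")
    if 0 in (severity, exposure, controllability):
        return "QM"
    total = severity + exposure + controllability
    return {10: "D", 9: "C", 8: "B", 7: "A"}.get(total, "QM")


if __name__ == "__main__":
    # Hypothetical hazardous event rated S3 / E4 / C3, for illustration only.
    print(asil(severity=3, exposure=4, controllability=3))
```

Only the combination of the highest severity, highest exposure, and lowest controllability reaches ASIL D, which is why ASIL D requirements concentrate on a small number of hazards.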

6) Data-Driven and Self-Learning Systems

Many traditional active safety-assisted driving systems rely primarily on rule-based approaches, such as geometric models based on lane lines to determine lane departure or Time-to-Collision (TTC) windows to assess collision risks. While effective in structured environments like highways with clear rules and stable data, these methods often falter in urban roads, congested traffic, or unstructured scenarios (e.g., construction zones, snow-covered roads).

Therefore, a growing number of active safety-assisted driving systems now incorporate 'data-driven' models. In pedestrian behavior prediction, for instance, the system no longer judges danger from distance and direction alone; it uses deep learning to model historical trajectories, body posture, and gaze direction and predict likely behavior over the next 2–3 seconds. In lane change assistance and adaptive cruise control, systems are gradually moving from traditional PID controllers and hand-written rules to reinforcement-learning or imitation-learning controllers trained on data, which produce more natural, human-like driving.

'Automatic data replay' and 'closed-loop learning' have become catalysts for the rapid evolution of active safety-assisted driving technology. During each test or real-world drive, the system automatically tags and uploads critical scenarios (near-miss events, false alarms/misjudgments, extreme weather) to the cloud for subsequent model optimization. Automakers and suppliers establish high-quality data platforms, automated annotation systems, and model training pipelines, creating a closed loop from production vehicles to training platforms that enables continuous evolution of active safety-assisted driving systems.
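A minimal sketch of on-vehicle trigger rules for this data loop: each frame is checked against simple conditions for near misses, possible false alarms, and adverse weather, and the tagged moments are expanded into clip windows for upload. The frame fields, thresholds, tag names, and window lengths are all hypothetical; production triggers are considerably richer.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Frame:
    t: float                # timestamp in seconds
    ttc_s: float            # TTC to the most critical target (float("inf") if none)
    decel_mps2: float       # host deceleration in m/s^2 (positive = braking)
    aeb_active: bool
    driver_override: bool   # driver accelerated or steered during an AEB intervention
    heavy_rain: bool        # e.g. derived from a rain sensor


def tag_frame(f: Frame) -> List[str]:
    """Return the scenario tags that a single frame triggers (hypothetical thresholds)."""
    tags = []
    if not f.aeb_active and (f.ttc_s < 1.0 or f.decel_mps2 > 6.0):
        tags.append("near_miss")                # close call handled without AEB
    if f.aeb_active and f.driver_override:
        tags.append("possible_false_alarm")     # driver overrode an AEB intervention
    if f.heavy_rain:
        tags.append("adverse_weather")
    return tags


def clips_to_upload(frames: List[Frame], pre_s: float = 5.0,
                    post_s: float = 3.0) -> List[Tuple[float, float]]:
    """Merge [t - pre_s, t + post_s] windows around tagged frames (frames sorted by t)."""
    windows: List[Tuple[float, float]] = []
    for f in frames:
        if not tag_frame(f):
            continue
        start, end = f.t - pre_s, f.t + post_s
        if windows and start <= windows[-1][1]:
            windows[-1] = (windows[-1][0], max(windows[-1][1], end))  # overlap: extend
        else:
            windows.append((start, end))
    return windows


if __name__ == "__main__":
    inf = float("inf")
    log = [
        Frame(10.0, 0.8, 2.0, False, False, False),   # short TTC, no AEB
        Frame(11.0, inf, 0.0, False, False, False),   # uneventful
        Frame(30.0, 2.5, 7.0, True, True, False),     # AEB overridden by the driver
    ]
    print(clips_to_upload(log))
```

Recording a few seconds before and after each trigger preserves the context that annotation and replay need, and merging overlapping windows avoids uploading the same footage twice.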

Overview of Active Safety-Assisted Driving Functions

1) Automatic Emergency Braking

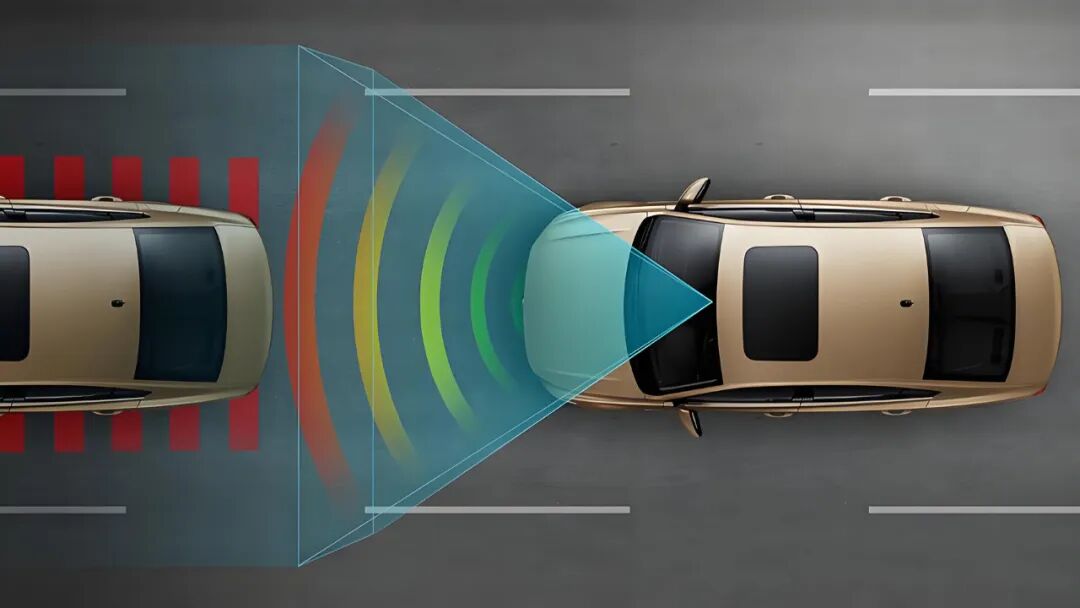

Automatic Emergency Braking (AEB) was one of the first active safety-assisted driving functions to reach mass production and remains the most technically important one. In every computation cycle, the system calculates Time-to-Collision (TTC) together with a braking-distance criterion, referred to here as Braking-to-Collision (BTC), and uses the vehicle's braking performance curve and a road friction model to determine whether the vehicle can still stop safely within the remaining distance. When the risk exceeds what can be controlled and the driver does not brake in time, the vehicle electronic control unit (VECU) issues a braking command, and the ABS and Electronic Stability Control (ESC, also known as ESP) subsystems distribute brake force optimally. The entire process must complete within tens of milliseconds, which demands exceptional consistency and reliability from the brake-assist system and brake sensors.
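The TTC/BTC check can be approximated with basic kinematics. In the sketch below, TTC is range divided by closing speed, and the braking criterion (a stand-in for BTC, whose exact definition is not given here) compares the distance needed to cancel the closing speed, under friction-limited deceleration μ·g plus an actuation delay, with the remaining gap. It assumes the lead object holds its speed; the 0.2 s delay, 90% friction utilization, 3 s TTC gate, 1 m margin, and the μ values in the example are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def time_to_collision(range_m: float, closing_speed_mps: float) -> float:
    """TTC = range / closing speed; infinite when the gap is not shrinking."""
    if closing_speed_mps <= 0.0:
        return math.inf
    return range_m / closing_speed_mps


def required_braking_distance(closing_speed_mps: float, mu: float,
                              delay_s: float = 0.2, decel_fraction: float = 0.9) -> float:
    """Distance used up while removing the closing speed.

    Assumes the lead object keeps its speed, the gap keeps shrinking at the
    current closing speed during the actuation delay, and the host then brakes
    at decel_fraction * mu * g (friction-limited deceleration).
    """
    decel = decel_fraction * mu * G
    return closing_speed_mps * delay_s + closing_speed_mps ** 2 / (2.0 * decel)


def aeb_should_brake(range_m: float, closing_speed_mps: float, mu: float,
                     driver_braking: bool, ttc_gate_s: float = 3.0,
                     margin_m: float = 1.0) -> bool:
    """Brake when TTC is inside the gate, the required braking distance (plus margin)
    has used up the gap, and the driver is not already braking."""
    if driver_braking:
        return False
    if time_to_collision(range_m, closing_speed_mps) > ttc_gate_s:
        return False
    return required_braking_distance(closing_speed_mps, mu) + margin_m >= range_m


if __name__ == "__main__":
    # Same geometry (30 m gap, closing at 15 m/s) on a high-grip and a low-grip surface.
    for mu in (0.9, 0.4):
        print(mu, aeb_should_brake(30.0, 15.0, mu=mu, driver_braking=False))
```

With a 30 m gap closing at 15 m/s, the high-grip case does not yet need braking (about 17 m required), while the low-grip case does (about 35 m required), which is why the road friction estimate matters. Real systems also assist a driver who brakes too weakly; the `driver_braking` flag here simplifies that to all-or-nothing.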

2) Forward Collision Warning

Before AEB intervenes, Forward Collision Warning (FCW) alerts the driver. Using the tracked target information, the system continuously evaluates collision risk and, when TTC falls to a predefined threshold (e.g., 1.5 seconds), triggers a warning by sound, steering-wheel vibration, or a flashing dashboard icon, prompting the driver to brake or steer. FCW is about 'buying reaction time': by monitoring target distance and speed in real time, it alerts the driver early in a developing conflict, which reduces how often AEB has to intervene and spares the driver the jolt of hard automatic braking.
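A TTC-threshold warning is simple to sketch. The version below adds hysteresis, which is an assumption rather than something stated in the text: the warning turns on at the 1.5 s example threshold but clears only once TTC rises above 2.0 s, so small measurement noise near the threshold does not make the alert flicker.

```python
import math


class ForwardCollisionWarning:
    """TTC-threshold warning with hysteresis so the alert does not flicker on and off."""

    def __init__(self, warn_ttc_s: float = 1.5, release_ttc_s: float = 2.0):
        self.warn_ttc_s = warn_ttc_s        # warn at or below this TTC
        self.release_ttc_s = release_ttc_s  # clear only once TTC rises above this (assumed)
        self.active = False

    def step(self, range_m: float, closing_speed_mps: float) -> bool:
        """Update with the latest range and closing speed; return whether the warning is on."""
        ttc = range_m / closing_speed_mps if closing_speed_mps > 0.0 else math.inf
        if not self.active and ttc <= self.warn_ttc_s:
            self.active = True
        elif self.active and ttc > self.release_ttc_s:
            self.active = False
        return self.active


if __name__ == "__main__":
    fcw = ForwardCollisionWarning()
    for gap in (40.0, 14.0, 17.0, 25.0):     # closing at a constant 10 m/s
        print(gap, fcw.step(gap, 10.0))
```

At a TTC of 1.7 s the warning stays on because it was already active and has not yet crossed the 2.0 s release threshold.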

3) Adaptive Cruise Control

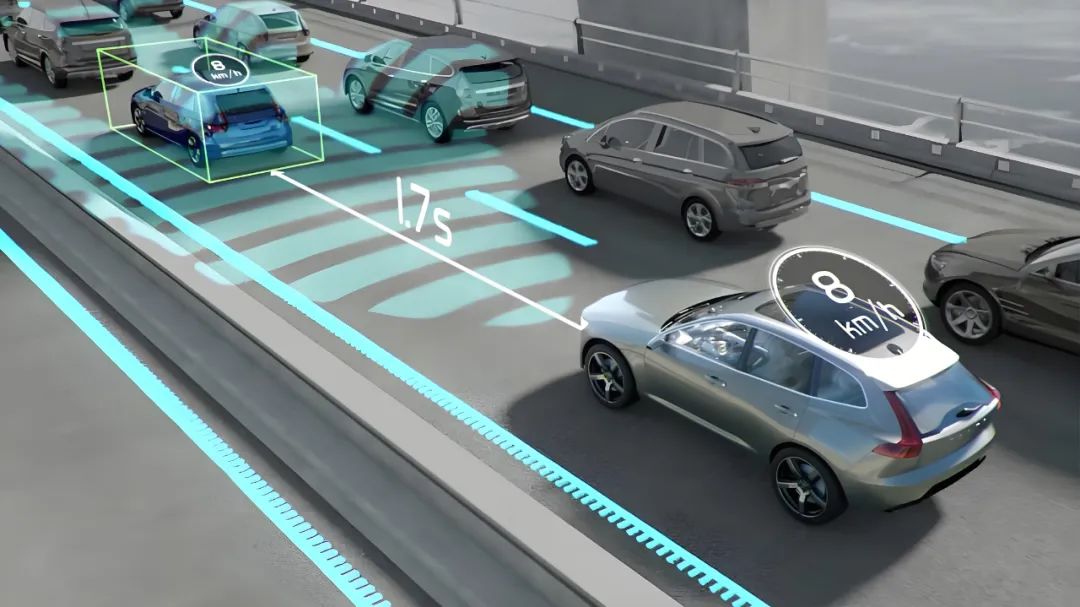

Adaptive Cruise Control (ACC) is one of the core control modules of semi-autonomous driving. It holds a driver-set speed and, when a slower vehicle is ahead, automatically follows it at a safe distance without driver input, adjusting acceleration and deceleration to the traffic flow. ACC needs precise measurements of the distance and relative speed between the host vehicle and the vehicle ahead, usually provided by a millimeter-wave radar that uses an FMCW waveform to compute the range and Doppler profiles of obstacles ahead. Target classification logic filters out non-vehicle objects such as road signs and bridges so that they do not interfere.

Once the perception layer confirms a target vehicle, ACC's decision module computes a safe time headway (typically 1.5–2 seconds as a baseline) and derives the host vehicle's target speed from the lead vehicle's acceleration trend and road conditions. At the control level, a longitudinal controller based on Model Predictive Control (MPC) or adaptive PID takes into account current speed, target speed, vehicle mass, road gradient, and brake delay to regulate throttle and brake smoothly and minimize passenger discomfort. Running on intelligent driving chips, such controllers close the loop every 20–50 ms, keeping following behavior stable both at highway speeds and in congestion.
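The time-headway idea can be sketched with a constant time-gap spacing policy and a linear feedback law, a deliberately simpler stand-in for the MPC or adaptive PID controllers named above. The 1.8 s headway (inside the 1.5–2 s range), the 5 m standstill gap, the gains, and the acceleration limits are hypothetical.

```python
from typing import Optional


def acc_accel_command(ego_speed: float, lead_speed: float, gap_m: Optional[float],
                      set_speed: float, time_headway_s: float = 1.8,
                      standstill_gap_m: float = 5.0, k_gap: float = 0.2,
                      k_speed: float = 0.6, k_cruise: float = 0.4,
                      a_min: float = -3.5, a_max: float = 2.0) -> float:
    """Longitudinal acceleration request (m/s^2); speeds in m/s, gap in metres."""
    # Speed mode: proportional tracking of the driver's set speed.
    a_cruise = k_cruise * (set_speed - ego_speed)
    if gap_m is None:                       # no relevant vehicle ahead
        a_cmd = a_cruise
    else:
        # Constant time-gap spacing policy: desired gap grows with ego speed.
        desired_gap = standstill_gap_m + time_headway_s * ego_speed
        a_follow = k_gap * (gap_m - desired_gap) + k_speed * (lead_speed - ego_speed)
        a_cmd = min(a_cruise, a_follow)     # never exceed what speed mode would request
    return max(a_min, min(a_max, a_cmd))    # comfort/actuator limits


if __name__ == "__main__":
    print(acc_accel_command(20.0, 0.0, None, 30.0))    # free road
    print(acc_accel_command(25.0, 20.0, 30.0, 30.0))   # too close to a slower vehicle
    print(acc_accel_command(20.0, 20.0, 41.0, 30.0))   # steady following at the desired gap
```

The three calls show speed mode (request capped at +2 m/s²), closing on a slower vehicle that is too close (request saturated at -3.5 m/s²), and steady following at exactly the desired gap (request of zero). Taking the minimum of the speed-mode and following requests is one common way to arbitrate between the two modes.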

While ACC appears capable of handling most driving scenarios, misjudgments may occur during low-speed urban driving due to sudden stops by preceding vehicles, cyclists cutting in, or traffic signal recognition failures. Consequently, some manufacturers deeply couple ACC with camera perception systems to enhance robustness in complex scenarios.

4) Lane Keeping and Departure Warning

Another widely deployed active safety feature is Lane Keeping Assist (LKA) and Lane Departure Warning (LDW). These functions primarily rely on cameras to detect lane line shapes, types, and positions in real time, determining whether the vehicle has deviated from its lane and providing steering intervention or warning prompts accordingly.

Algorithmically, the camera image first undergoes distortion correction and enhancement, after which a deep neural network (e.g., SCNN or ENet) extracts the boundary features of lane markings on the road surface. These features are projected into the vehicle coordinate system to build a lane model, in which each lane line is commonly fitted with a quadratic curve. Using the camera pose and the vehicle's IMU data, the system then estimates the lateral offset and heading error between the vehicle and the lane centerline. When the lateral offset exceeds a threshold, the system warns the driver by sound or vibration; if LKA is fitted, it applies a gentle corrective torque through the Electric Power Steering (EPS) to guide the vehicle back toward the lane center.
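The quadratic lane model can be illustrated with an ordinary least-squares fit. The sketch fits y = c0 + c1·x + c2·x² to lane-centre points already projected into a vehicle frame (x forward, y left, an assumed convention) and reads off the lateral offset c0, the heading error atan(c1), and the curvature at the vehicle. The point values and the 0.5 m warning threshold are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def fit_quadratic(xs: Sequence[float], ys: Sequence[float]) -> List[float]:
    """Least-squares fit of y = c0 + c1*x + c2*x^2 by solving the 3x3 normal equations."""
    if len(xs) != len(ys) or len(xs) < 3:
        raise ValueError("need at least three (x, y) points")
    s = [sum(x ** k for x in xs) for k in range(5)]                 # sums of x^0 .. x^4
    t = [sum(x ** k * y for x, y in zip(xs, ys)) for k in range(3)]  # sums of x^k * y
    a = [[s[0], s[1], s[2], t[0]],                                  # augmented matrix [A | b]
         [s[1], s[2], s[3], t[1]],
         [s[2], s[3], s[4], t[2]]]
    n = 3
    for col in range(n):                                            # Gaussian elimination
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]                         # partial pivoting
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for k in range(col, n + 1):
                a[r][k] -= f * a[col][k]
    c = [0.0] * n
    for r in reversed(range(n)):                                    # back substitution
        c[r] = (a[r][n] - sum(a[r][k] * c[k] for k in range(r + 1, n))) / a[r][r]
    return c


def lane_errors(coeffs: Sequence[float]) -> Tuple[float, float, float]:
    """Lateral offset (m), heading error (rad) and curvature (1/m) at x = 0."""
    c0, c1, c2 = coeffs
    curvature = 2.0 * c2 / (1.0 + c1 * c1) ** 1.5
    return c0, math.atan(c1), curvature


if __name__ == "__main__":
    # Hypothetical lane-centre points in the vehicle frame (metres).
    xs = [0.0, 5.0, 10.0, 15.0, 20.0, 25.0, 30.0]
    ys = [0.3 + 0.02 * x + 0.001 * x * x for x in xs]
    offset, heading, curvature = lane_errors(fit_quadratic(xs, ys))
    print(f"offset={offset:.3f} m heading={math.degrees(heading):.2f} deg curvature={curvature:.4f} 1/m")
    if abs(offset) > 0.5:  # hypothetical LDW threshold
        print("lane departure warning")
```

Because the fit is evaluated at x = 0, the constant term directly gives the lateral offset and the linear term the heading error that an LKA controller would act on.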

More advanced versions, such as Lane Centering Control (LCC) and Highway Assist, incorporate tracking of the preceding vehicle's trajectory alongside lane geometry and vehicle dynamics models to achieve precise trajectory following on curved roads. Modern LKA modules also introduce robust fault-tolerant mechanisms, reducing intervention intensity when road markings are severely worn or obscured to prevent counterproductive corrections.

5) Blind Spot Detection and Lane Change Assist

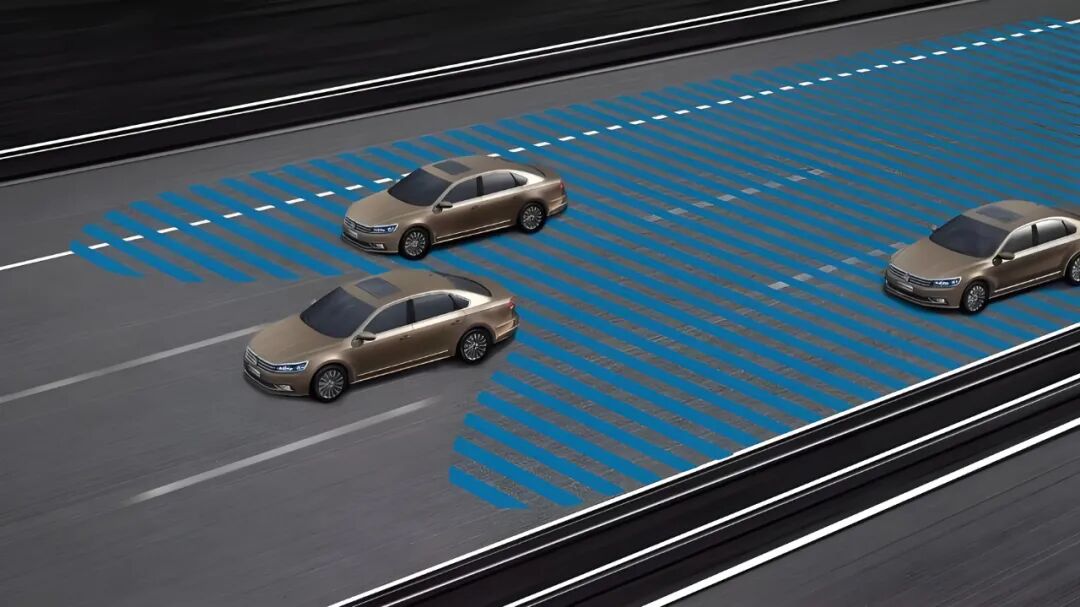

Blind Spot Detection (BSD) and Lane Change Assist (LCA) address safety in the blind zones to the side and rear. They are mainly implemented with millimeter-wave radars mounted at the rear corners (traditionally 24GHz, increasingly 77GHz), whose wide horizontal field of view and moderate detection range suit monitoring vehicles in adjacent lanes and fast-approaching targets.

Technically, the BSD system continuously monitors an area extending roughly 3–5 meters to the side and rear of the vehicle and analyzes how targets within it are moving. If a target persists in this area rather than just passing through, the system lights a warning symbol in the exterior mirror or on the dashboard. If a target is still in the blind spot when the driver activates the turn signal, the system escalates to a stronger audible or vibration alert. Some advanced LCA versions go further and actively discourage the lane change, either by applying counter-steering torque that the driver feels as resistance or by briefly delaying the driver's command, to prevent a collision.
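The zone check and the two-stage alert can be sketched as a small state machine for one side of the vehicle. The rectangular zone (from 5 m behind the rear bumper to 1 m ahead of it, and 1.0–4.5 m from the centreline), the 0.3 s persistence time, and the alert levels are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Target:
    x_m: float  # longitudinal position relative to the rear bumper (negative = behind)
    y_m: float  # lateral position relative to the vehicle centreline (positive = left)


def in_blind_zone(t: Target, side: str, x_min: float = -5.0, x_max: float = 1.0,
                  y_inner: float = 1.0, y_outer: float = 4.5) -> bool:
    """True if the target lies in the hypothetical rectangular zone on the given side."""
    lateral = t.y_m if side == "left" else -t.y_m
    return x_min <= t.x_m <= x_max and y_inner <= lateral <= y_outer


class BlindSpotMonitor:
    """Two-stage blind-spot alert for one side of the vehicle."""

    def __init__(self, side: str, confirm_s: float = 0.3):
        self.side = side
        self.confirm_s = confirm_s      # target must persist this long before alerting
        self.occupied_s = 0.0

    def step(self, targets: Iterable[Target], turn_signal_on: bool, dt: float) -> int:
        """Return 0 = no alert, 1 = mirror icon, 2 = icon plus audible/haptic alert."""
        if any(in_blind_zone(t, self.side) for t in targets):
            self.occupied_s += dt
        else:
            self.occupied_s = 0.0
        if self.occupied_s < self.confirm_s:
            return 0
        return 2 if turn_signal_on else 1


if __name__ == "__main__":
    left = BlindSpotMonitor("left")
    car = Target(x_m=-2.0, y_m=2.5)          # vehicle in the adjacent left lane
    for signal in (False, False, False, True):
        print(left.step([car], signal, dt=0.1))
```

The persistence timer suppresses alerts for targets that only clip the zone briefly, and the turn signal escalates an existing alert rather than creating one on its own.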

Working alongside BSD, Rear Cross Traffic Alert (RCTA) operates mainly while reversing. The rear-corner radars detect targets moving across the vehicle's path, such as pedestrians or vehicles crossing behind it, and the system issues audible and visual warnings or, in some implementations, applies the brakes to prevent reversing accidents.

6) Traffic Sign Recognition and Speed Limit Assistance

As computer vision has matured, vehicles have gained the ability to recognize traffic signs, and recognition of speed-limit and access-restriction signs in particular has reached mass production. These systems rely mainly on a forward-facing camera and combine OCR (Optical Character Recognition) with convolutional networks to detect and interpret the shapes, numbers, and colors of traffic signs in the image.

During the recognition process, the system first extracts edge and shape features from the image, filtering for typical circular, triangular, and octagonal regions. It then performs character segmentation and classification to identify specific sign contents such as 'Speed Limit 60,' 'No Left Turn,' and 'School Zone.' Some technical solutions integrate results from high-definition maps and V2X communication modules for dual verification of recognition results, thereby enhancing accuracy. When a new speed-limited zone is detected, the system can proactively adjust the target speed of adaptive cruise control or issue warnings when the driver exceeds the speed limit.
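The cross-check between camera and map, followed by the adjustment of the ACC target speed, can be expressed as a small arbitration rule. The sketch assumes the camera reports a speed limit with a confidence score and the map supplies a limit for the current road segment; the precedence order (agreement first, then a confident camera, then the map), the 0.8 threshold, and the function names are illustrative assumptions, not a documented production strategy.

```python
from typing import Optional


def fuse_speed_limit(camera_kph: Optional[int], camera_conf: float,
                     map_kph: Optional[int], conf_threshold: float = 0.8) -> Optional[int]:
    """Pick the speed limit to act on from a camera reading and a map attribute."""
    if camera_kph is not None and camera_kph == map_kph:
        return camera_kph                  # both sources agree
    if camera_kph is not None and camera_conf >= conf_threshold:
        return camera_kph                  # trust a confident camera (e.g. temporary signs)
    return map_kph                         # otherwise fall back to the map (may be None)


def acc_target_speed(driver_set_kph: float, limit_kph: Optional[int],
                     offset_kph: float = 0.0) -> float:
    """Cap the ACC set speed at the recognised limit plus an optional driver-chosen offset."""
    if limit_kph is None:
        return driver_set_kph
    return min(driver_set_kph, limit_kph + offset_kph)


if __name__ == "__main__":
    # A confident camera reading of 80 km/h overrides a map value of 100 km/h.
    limit = fuse_speed_limit(camera_kph=80, camera_conf=0.95, map_kph=100)
    print(limit, acc_target_speed(driver_set_kph=110.0, limit_kph=limit))
```

Letting a confident camera reading override the map covers temporary signs (for example at road works) that a map cannot know about, while the map fallback covers signs the camera missed or misread.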

7) Driver Monitoring

When utilizing advanced driver assistance functions, ensuring that the driver remains alert at all times is a prerequisite for the safe operation of the system. Consequently, Driver Monitoring System (DMS) technology has become a focal point. This system typically employs infrared cameras or TOF cameras installed near the steering wheel or dashboard to continuously analyze the driver's eye movements, head posture, and facial expressions.

Using facial-keypoint extraction and eye-tracking models, the system determines whether the driver is looking ahead, whether the eyes stay closed for extended periods (drowsiness), and whether the driver engages in risky behavior such as frequently glancing down (phone use). Some vehicle models also monitor facial temperature and changes in skin texture to estimate fatigue or possible alcohol impairment. When a risk of losing control is detected, the system escalates in stages through warning lights, steering-wheel vibration, and voice prompts, and in the final stage it may trigger AEB or bring the vehicle to a controlled low-speed stop, keeping vehicle operation continuous and safe.
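PERCLOS (percentage of eyelid closure over time) is a widely used drowsiness metric; the common P80 variant counts a frame as closed when the eyelids are at least 80% closed. The sketch below computes PERCLOS over a sliding window from a per-frame eye-openness value (assumed to come from the eye-tracking model) and maps it, together with time spent looking away, to staged escalation. PERCLOS is a swapped-in example metric, and the window length, all thresholds, and the stage names are hypothetical.

```python
from collections import deque


class DrowsinessMonitor:
    """PERCLOS over a sliding window of per-frame eye-openness values."""

    def __init__(self, window_frames: int = 300, closed_threshold: float = 0.2):
        self.window = deque(maxlen=window_frames)  # e.g. 10 s at 30 fps
        self.closed_threshold = closed_threshold   # openness below 20% = closed (P80)

    def update(self, eye_openness: float) -> float:
        """eye_openness in [0, 1]; returns the fraction of closed frames in the window."""
        self.window.append(eye_openness < self.closed_threshold)
        return sum(self.window) / len(self.window)


def escalation_stage(perclos: float, seconds_inattentive: float) -> str:
    """Map drowsiness and distraction to a warning stage (hypothetical thresholds)."""
    if perclos > 0.3 or seconds_inattentive > 6.0:
        return "stage3_controlled_stop"
    if perclos > 0.15 or seconds_inattentive > 3.0:
        return "stage2_haptic_and_voice"
    if perclos > 0.08 or seconds_inattentive > 2.0:
        return "stage1_visual"
    return "normal"


if __name__ == "__main__":
    dms = DrowsinessMonitor(window_frames=10)
    openness = [1.0] * 7 + [0.05] * 3          # eyes closed in the last three frames
    perclos = 0.0
    for value in openness:
        perclos = dms.update(value)
    print(perclos, escalation_stage(perclos, seconds_inattentive=0.0))
```

Using a sliding window rather than single frames separates ordinary blinks from the sustained closures that indicate drowsiness.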

Final Remarks

Although today's active safety assistance functions are still L1/L2 features, they form the foundation for the transition to L3/L4 autonomous driving. In L3 scenarios (such as automatic lane changes and ramp entry and exit on highways), the requirements for environment modeling, behavior prediction, and system stability rise significantly. L2 active safety assistance focuses on 'assisting humans,' whereas systems at L3 and above must be able to take over decision-making: they cannot rely solely on rules or triggers but need complete scene understanding and a highly reliable behavior-generation system.

During this transition, the perception range and control capabilities of active safety systems keep expanding: AEB is growing into pedestrian avoidance at intersections, LKA into automatic lane centering on urban roads, and FCW into traffic-light recognition and right-of-way judgment in complex traffic. As a result of this convergence, future intelligent driving systems will no longer distinguish between 'active safety' and 'autonomous driving' but will merge them into a unified intelligent driving stack organized by capability rather than by function.

Looking ahead, as algorithms for image recognition, point cloud modeling, and graph neural networks mature and hardware computing power leaps forward, active safety systems will evolve from passive response to proactive understanding and from rule execution to strategy generation, ultimately becoming the core 'brain' that integrates vehicle perception, understanding, and action. At the same time, they will provide a stable, reliable safety barrier for L3+ autonomous driving functions, safeguarding the bottom line while lighting the way toward fully autonomous driving.
