Apple's new "chip" revealed, but collaboration with OpenAI faces backlash from Musk

06/12 2024

Blue Whale News, June 11 (Reporter Zhang Han) Apple has long prided itself on releasing flawless products, yet throughout the AI wave that began last year, the market saw no major moves from the company. Even so, CEO Tim Cook repeatedly signaled in interviews that Apple was already preparing for AI.

On June 10 (Eastern Time), Apple's much-anticipated Worldwide Developers Conference (WWDC) officially kicked off. As foreign media had reported beforehand, Apple devoted more than half of the keynote to its progress in AI, announcing "Apple Intelligence" to support generative AI and releasing a new generation of operating systems: iOS 18, iPadOS 18, macOS 15, watchOS 11, and visionOS 2. Apple also announced that Vision Pro will go on sale in China on June 28, starting at 29,999 yuan.

Image source: WWDC live video screenshot

Before introducing Apple's new AI products, Cook set the tone by noting that over the past few years Apple has used artificial intelligence and machine learning to build more powerful products that meet users' increasingly diverse needs. Generative AI and large language models have opened up many opportunities, he said, but to embody Apple's core product principles, AI must be far more than a simple tool.

Foreign media, citing insiders, reported that Apple's distinctive culture of secrecy and its cautious approach to hardware and software upgrades hampered its early AI efforts. Now that the company finds itself in an unusual position, taking risks has become a necessity.

Cook believes the most important thing for Apple's AI products is understanding user needs. Their AI features should be grounded in personal context, such as daily schedules, relationships, and communications; they should help users with the things that matter most, be intuitive and easy to use, and be deeply integrated into the product experience.

The launch of "Apple Intelligence" means Apple has finally completed its AI puzzle. A deeper understanding of users and a focus on personal scenarios are what Apple sees as its strongest bargaining chips.

Apple's Late Arrival in AI

Craig Federighi, Apple's Senior Vice President of Software Engineering, said that compared with some AI products on the market, the biggest advantage of "Apple Intelligence" is that it better understands users' needs. He explained that the large language model built into "Apple Intelligence" will sit at the core of the iPhone, iPad, and Mac, able to deeply understand natural language, generate language and images, and simplify interactions across multiple apps.

Image source: WWDC live video screenshot

Understanding user needs requires user data as a foundation, but Apple's position is that people should not have to hand over every detail of their lives to be analyzed in someone else's AI cloud. Apple therefore emphasizes the advantages of on-device processing, which can draw on personal data across apps without collecting it. These capabilities rest on Apple's in-house chips, which also sets a hardware threshold: only the A17 Pro and M-series chips provide enough computing power, so among iPhones, only the iPhone 15 Pro and iPhone 15 Pro Max can use the AI features. According to Apple, when a task needs more computing power than the device can supply, it is sent to a private cloud, where users keep control over where their data is stored and who can access it, and their privacy and security are protected.

Specifically, the upgraded Siri better understands context, allowing it to interpret users' requests more precisely. When Siri is active, a glowing light appears around the edges of the screen, and user instructions are presented as content cards. Siri also has extensive knowledge of features and settings and can answer many questions about how to do things on the iPhone, iPad, and Mac. It can take actions within apps, such as finding and editing photos in the Photos app, and it can add an address sent in a message to a contact, retrieve flight details from emails, and automatically extract and fill in ID numbers.

For everyday use, Apple has upgraded Focus mode so that it can filter notifications based on their content, letting the more important alerts appear on screen. The Notes app gains an Image Wand feature that generates images from information the user provides. When sending messages, users can also generate personalized emoji that better match their mood. Call recording is now supported as well, letting users record calls and have them transcribed directly into text.

"Apple Intelligence" will be available to try in U.S. English this summer, and this fall it will be released in beta as part of iOS 18, iPadOS 18, and macOS Sequoia (macOS 15).

System-level Collaboration with OpenAI Angers Musk

Beyond its on-device and private cloud solutions, Apple also announced a partnership with OpenAI to integrate ChatGPT into Siri. Users will be able to use GPT-4o for free without creating an account, and their requests will not be logged. Existing paid ChatGPT subscribers can link their accounts to use their subscription features.

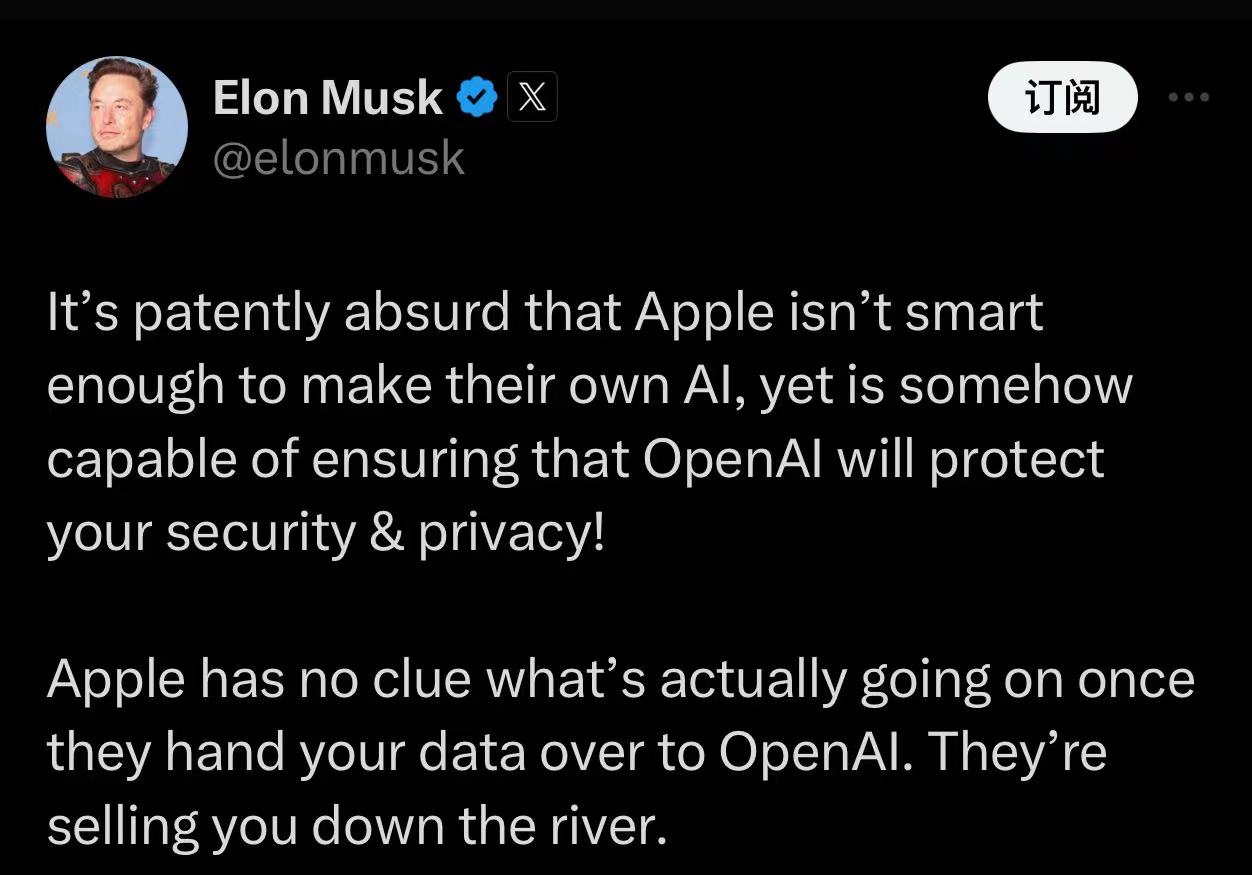

This news, however, angered Tesla CEO Elon Musk, who posted repeatedly on X criticizing Apple: "It's patently absurd that Apple isn't smart enough to make their own AI, yet is somehow capable of ensuring that OpenAI will protect your security & privacy! Apple has no clue what's actually going on once they hand your data over to OpenAI. They're selling you down the river."

Image source: Musk's X screenshot

Musk also said that if Apple integrates OpenAI at the operating-system level, Apple devices will be banned at his companies. xAI, the AI company Musk founded last year, is one of OpenAI's strong competitors and was valued at $24 billion after its latest funding round.

OpenAI CEO Sam Altman, for his part, posted on X: "I'm very excited to be working with Apple and integrating ChatGPT into their devices later this year! I think you'll love it."

Of more than two hours of presentations, Apple devoted only two minutes to OpenAI's ChatGPT. On one hand, Apple wanted users to come away with a clearer picture of its own AI features; on the other, it signaled that Apple did not want to "put all its eggs in one basket." At the end of his presentation, Craig Federighi said Apple will add support for other AI models in the future. According to media reports, Apple has confirmed that it will integrate Google's Gemini model.

The capital market, however, was unimpressed by this WWDC. On June 10 local time, Apple shares closed at $193.12, down 1.91%, for a market capitalization of about $2.96 trillion. The drop erased roughly $58 billion in value in a single day, and Apple once again fell behind NVIDIA.