Productization of Large Models: Three Dances

06/13 2024

Today, hundreds of AI large models around the world face a common problem: how to make money.

Whether the models come from China or the United States, and whether they are general-purpose or vertical, commercialization is a challenge this technology faces everywhere.

After the initial shock and excitement that large models brought, many AIGC platforms have become free to use, and large model services for business customers (toB) have descended into fierce price wars. For all the variety of large models on offer, none seems able to make users willing to pay.

As everyone knows, productization is the prerequisite for commercialization. The AIGC capabilities of large models lend themselves naturally to products, but as software applications they are still too crude and too much of a black box. Users have to work out for themselves what AIGC can do for them and what is possible, which makes it hard to come away clearly satisfied with the product. At the same time, the technical potential of large models has not been fully tapped: some basic capabilities that deserve to be amplified through product design are still buried in long lists of platform features.

Under commercialization pressure felt across the industry worldwide, the productization of large models has begun to accelerate. In China and the United States alike, traditional technology giants and new AI companies have all turned their attention to productizing large models and rolled out a wide range of strategies.

This has left many people confused. Every company seems to be launching different AI products and coining new AI concepts, yet on closer inspection it is hard to say exactly how they differ.

To help everyone overcome this "AI vertigo" caused by the plethora of options, we have summarized the three main approaches to the productization of large models.

Don't worry if the various productization strategies seem hard to follow. All we need to remember is that today's large models are essentially dancing three dances.

The Whirling Dance towards Full Modality

International AI giants have gradually answered the question of what an AI product should look like: they seem to want AI to resemble a real human.

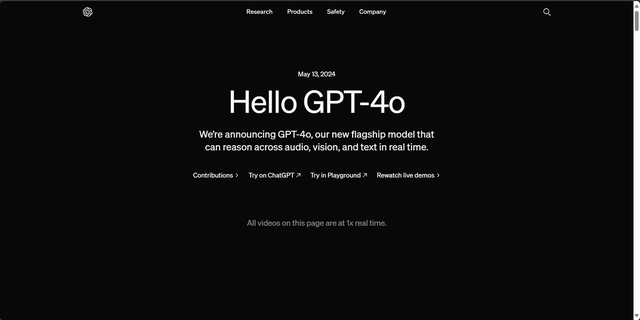

Not long ago, OpenAI and Google released new AI products in quick succession: OpenAI's new flagship model GPT-4o and Google's Project Astra. What they have in common is the ability to take in text, audio, and visual information together. In other words, large models are evolving from merely listening, reading, and writing to also seeing and speaking. Interaction between users and large models is becoming more and more like interaction between humans: the models can respond to audio input in roughly 200 to 300 milliseconds, about the pace of human conversation.

GPT-4o is considered OpenAI's most productized offering to date because it puts more emphasis on the user's interactive experience, refining details such as response speed and the concrete features defined on the product side. More importantly, GPT-4o pioneers a new product model: compared with voice assistants, it offers more modes of interaction and a wider range of applications; compared with traditional chat-box AI, it lowers the barrier to use and opens up more usage scenarios.

Using this AI product feels very much like making a video call with a real person. It is hard not to think of Samantha from the movie "Her" or Jarvis from "Iron Man". The experience is certainly not yet that good, but the product logic is the same as the AI imagined in those science fiction films.

We have reason to suspect that OpenAI designs its AI products with science fiction as the reference point, except that it happens to have the chance to turn science fiction into reality.

This "video call" product model essentially integrates AI's perception, understanding, and generation capabilities, like a whirling dance that spins ever faster. As large models develop, AI technology is spinning faster too, absorbing the ability to collect information and generate content across more and more modalities.

Following this path, there is reason to believe that the following changes will occur in mainstream AI products:

1. Video generation capabilities similar to Sora will soon be integrated into mainstream general-purpose models, enabling AI applications to simultaneously listen, see, read, and generate various contents including text, code, audio, images, and videos.

2. The space for vertical AI models will keep shrinking as more capabilities are folded into general-purpose models. The "o" in GPT-4o stands for "omni", meaning all-round capability, and omni-capability will be the main direction of development for AI products.

3. AI memory will grow stronger, making "omni-capability + customization" the main design idea for AI applications.

At this stage, large models are still mostly bundled with features such as search, image generation, and voice assistants. But as "three-in-one" large model products that perceive, understand, and generate across all modalities keep developing, large models will stop being merely an enabling technology and will tend to become an independent, entirely new product form.

Although this product direction is still immature, the fact that the direction has been settled is significant.

Apart from the robot butler, AI applications that work like a "video call" may be the most fundamental way humans imagine AI. Their emergence and development suggest that the largest hidden gold mine of the AI era is starting to surface.

The Group Dance of ChatGPT-like Applications

If large models were a football team, with companies like OpenAI breaking through up front, then many more AI and technology companies would be competing in midfield. After ChatGPT took off, OpenAI pushed into areas such as agents, text-to-video models, and omni-modal models, while many of the companies entering the large model market used this window to refine their own ChatGPT-like applications. After long periods of internal testing, numerous AIGC chat applications are now opening to the public. This raises the question: after investing so much to finally reach consumer (C-end) users, how will they actually make money?

We are witnessing a peculiar scene: a large number of AI projects, led by China's domestic large model camp, are crowded into the ChatGPT-like segment. More advanced applications such as text-to-video are not yet mature, and looking around, everyone's capabilities are roughly the same; they move in lockstep and differ only in concepts and slogans.

Whether they are called AI assistants, intelligent platforms, intelligent dialogue, or intelligent search, these large model products are essentially ChatGPT-like applications. From the early Wenxin Yiyan to Tongyi Qianwen, Doubao, Kimi, Tencent Yuanbao, iFLYTEK Spark, and Tiangong, the market is already flooded with similar products, turning large models into a genuine group dance.

A prisoner's dilemma plagues all such products: they want to charge to recoup research and development costs but fear that charging will anger users and drive them to competitors; they want to differentiate through technical capability but cannot produce truly convincing technical advantages, so they fall back on concepts, names, and gimmicks.

To escape this dilemma, ChatGPT-like applications have also found some productization solutions, which we can summarize as follows:

1. Emphasize GPTs-style products and use agents to drive market upgrades.

As the saying goes, large models plus dialogue are only the opening act; the real ticket sales come from agents. Customizable agents with professional capabilities are widely seen as the true destination for large model commercialization. With this in mind, vendors have begun their own explorations, OpenAI among them with its GPTs.

For example, the Wenxin large model has officially rolled out agent capabilities and launched the Wenxin Agent Platform, and Volcano Engine has announced Kouzi, a one-stop AI application development platform. Free basic AIGC dialogue plus paid advanced agents is the leading candidate for how large models will be commercialized in the future.

2. Use ChatGPT-like products as traffic entrances.

Another productization approach for these applications is the classic internet model of a broad free entry point with small paid windows: the product as a whole is free, but certain features require a membership or tokens. The paid features may be relatively specialized, aimed at specific user groups, or relatively novel and fun, appealing to users' curiosity and appetite for novelty.

The problem with this model is that it makes the product increasingly complex. Users may feel "tricked", as they do with free-to-play mobile games. Building an ecosystem around the entry point also requires clearer interaction design, so that users meeting a large model for the first time are not confronted with convoluted interaction logic and paywalls at every turn.

3. Combine ChatGPT-like applications with their own advantages.

The third approach is for platform companies to combine ChatGPT-like applications with their other strong products, hoping for a 1+1>2 effect, or at least to carry part of the original platform's user base over to the large model application. For example, Baidu emphasizes integrating its search, document library, and cloud drive with large models, invoking the Wenxin large model in various ways. Tencent Yuanbao has announced that it will be integrated with the WeChat Official Accounts content-creation ecosystem as a creation assistant.

This ecosystem-matrix approach is a long-standing idea in internet products, but whether users will develop lasting loyalty to large model applications remains to be seen.

We must awkwardly admit that ChatGPT, once the darling of the spotlight, has gradually lost its hold on users' enthusiasm now that the novelty has worn off. Users would rather see vivid, entertaining AI applications that can spark trends than stiff chat boxes.

The real productization breakthrough for ChatGPT-like applications may lie in breaking out of the rigid AIGC chat-dialogue framework and turning their capabilities into software products with more aesthetic appeal and shareability.

After all, in a group dance, the dancer most likely to be remembered is the one who breaks from the prescribed moves.

The Anthropomorphic Close Dance

Apart from these approaches to large model productization, another idea that should be given attention is anthropomorphizing AI products.

Not long ago, tutorials on "jailbreaking" ChatGPT to make it do strange things were circulating everywhere, and some netizens started a new fashion of "dating" AI. However we judge these behaviors, we have to recognize that humans fundamentally imagine AI in anthropomorphic terms: we want AI to have a name, a personality, and shared memories with us, rather than to be a cold, all-capable dialogue machine.

From this perspective, the greatest value AI offers is emotional value. We need to talk to someone, share with them, and form connections, but that someone does not necessarily have to be a real human. This need is real and has commercial potential. GPT-4o has been accused of imitating a celebrity's voice without permission, and the motivation behind it was to make the AI more humanlike, so that users could imagine they were talking to a real person.

Anthropomorphizing large models and dancing close with users is likely to become the focus of many AI projects as commercialization pressure mounts.

For example, the Doubao large model has introduced a role-playing model where AI mimics people with different personas and personalities to engage in dialogue with users, and users can set their own chat roles through agents.

Persona-based chat depends on memory across multi-turn dialogue. Accordingly, more and more large model products are emphasizing memory; Wenxin Yiyan, for example, highlights the immersive role-play experience that its multi-turn dialogue capability gives users.

Anthropomorphic large models are relatively easy to build technically and potentially very valuable, but they can easily brush against the limits of laws, regulations, public order, and good morals. Their productization potential deserves growing attention, and it may extend to forms such as digital human anchors and dedicated AI assistants, leaving ample room for discussion and imagination.

Large model productization is currently in an awkward position, stuck in the middle. Breaking through upward, the technological generation gap is still obvious and the unknown frontiers of the technology remain unclear. Competing sideways, there are so many similar large model products that the crowding is suffocating. Expanding downward, most internet users still have no real sense of large model applications and see no need to use or pay for them. And taking the shortcut of anthropomorphic projects means risking various minefields.

Under these circumstances, large model productization must dance in shackles: it needs to be seen by the audience while watching out for the thorns on the stage.

Perhaps in the not-too-distant future, one or a few brilliant productization ideas will solve all of these difficulties. For now, though, the main theme of large model productization is still losing money to gain popularity while putting on a brave face.