Commonalities and Differences Between Autonomous Driving and Embodied Artificial Intelligence

10/27 2025

As artificial intelligence and robotics advance rapidly, "embodied artificial intelligence" and "autonomous driving" have emerged as two highly promising fields. They share a common conceptual foundation but differ markedly in technical implementation. Understanding what they are, why they are similar, and how they differ helps in following technology trends and points to opportunities for cross-domain innovation.

What Exactly Are "Embodied Artificial Intelligence" and "Autonomous Driving"?

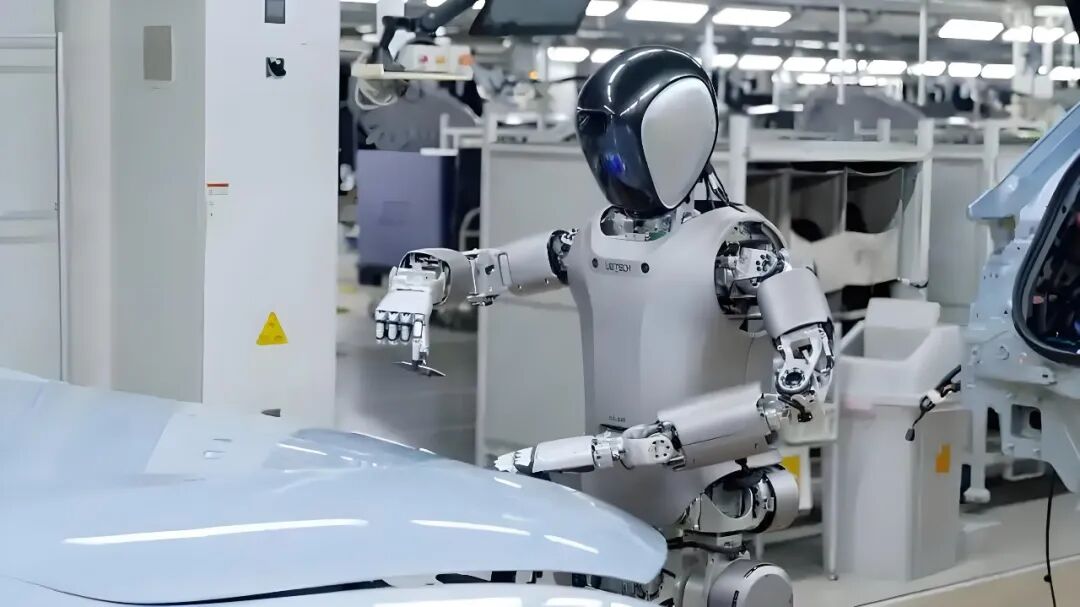

"Embodied artificial intelligence" refers to an intelligent agent that has a physical body in addition to the capacity for abstract reasoning. The body brings mechanical constraints, a specific sensor configuration, and limited actuator capabilities, so the agent's intelligence must close the loop from perception through decision-making to action within those physical limits. Research in this field covers a wide range of platforms, including bipedal robots, quadrupedal robots, robotic arms, and drones. The core objective is to integrate perception and action tightly, so that intelligence is expressed through the body. In other words, the structure of the body itself shapes cognition and learning.

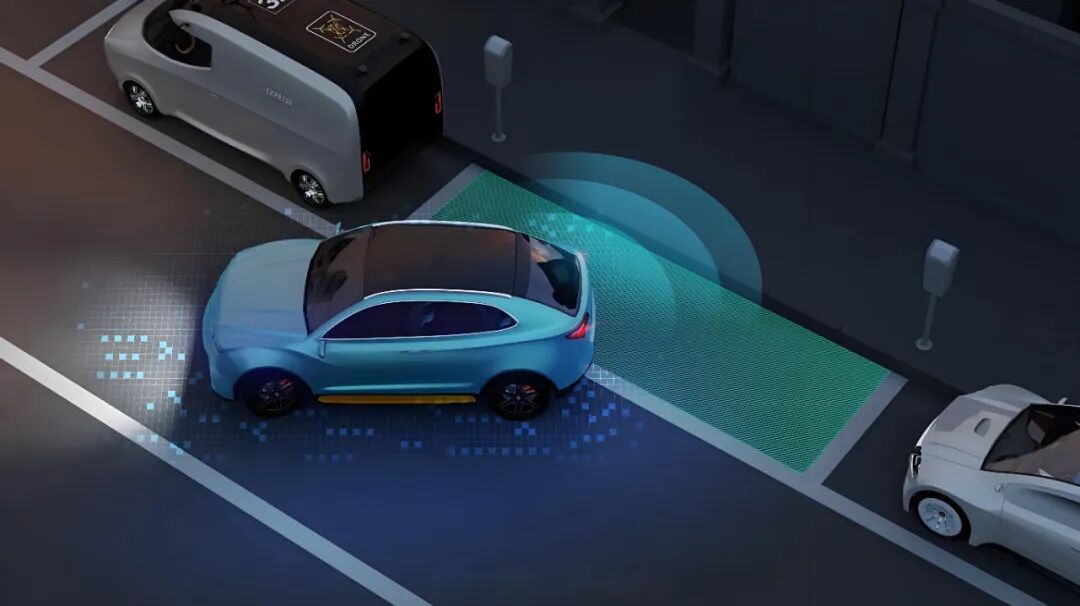

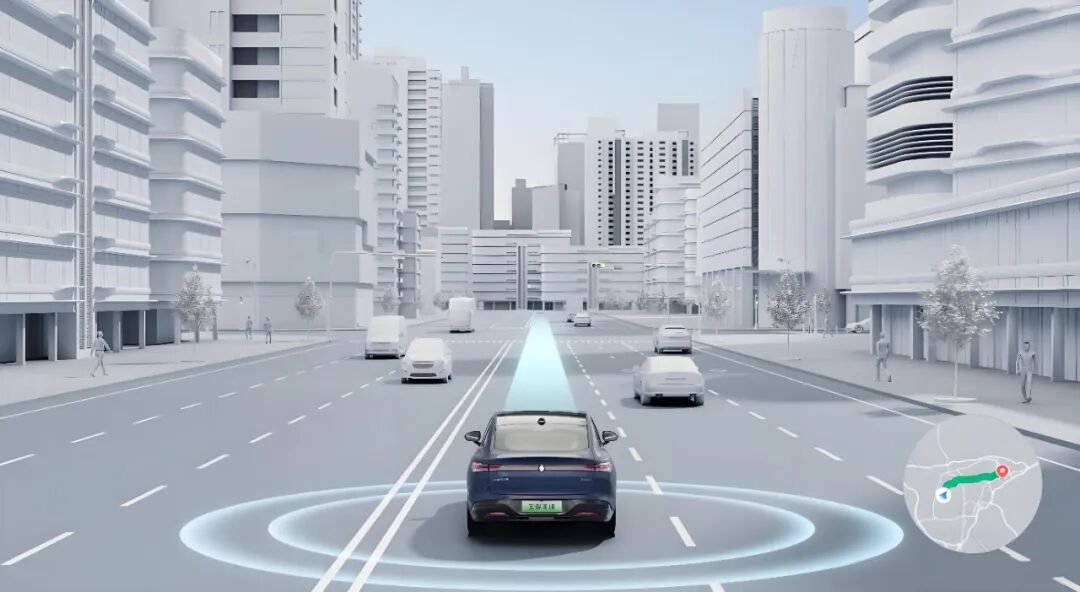

"Autonomous driving," by contrast, refers to an engineering system that moves safely and efficiently on roads, with the aim of replacing or assisting human drivers in complex traffic. It must complete a chain of tasks: environmental perception, self-localization, behavioral decision-making, generation of control commands, and execution. This closed loop from perception to control is functionally very similar to that of embodied artificial intelligence. If we treat a vehicle as a "body with wheels" and driving as interaction between that body and its environment, then autonomous driving can be regarded as an instance of embodied artificial intelligence in a particular form (ground vehicles) and application scenario (road traffic).

What Are the Commonalities Between the Two?

At the perception level, both rely on multi-modal sensor fusion. Cameras, LiDAR, millimeter-wave radar, inertial measurement units, and wheel speed sensors act as the "senses" for mobile robots in localization and obstacle avoidance. Similarly, they form the basis for autonomous driving in tasks like lane keeping, obstacle detection, and pedestrian recognition. Whether it involves environmental mapping (Simultaneous Localization and Mapping, or SLAM) or understanding the semantics of the surrounding environment, the core task is to integrate sparse, noisy, and occluded sensor data into a stable world model.
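
As a rough illustration of what "integrating noisy sensor data" can mean in the simplest case, the sketch below fuses two range readings by inverse-variance weighting, which is the static special case of a Kalman measurement update. It assumes independent Gaussian noise; the sensor names and variances are illustrative values, not figures from any real system.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    value: float     # measured distance in metres
    variance: float  # sensor noise variance in square metres


def fuse(measurements):
    """Inverse-variance weighted fusion of independent measurements."""
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, measurements)) / total
    # The fused variance is never larger than the best single sensor's.
    return Measurement(value=value, variance=1.0 / total)


# Hypothetical readings of the same obstacle from two sensors.
lidar = Measurement(value=20.0, variance=0.01)
radar = Measurement(value=20.6, variance=0.09)
fused = fuse([lidar, radar])
print(fused)
```

The fused estimate sits closer to the more precise sensor, and its variance is smaller than either input. Real systems add time, motion models, and outlier rejection on top of this idea.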

In decision-making and planning, both face control problems in continuous space and time. At the behavioral level, they must handle longer-horizon strategies, such as negotiating a complex intersection or getting past an obstacle. At the motion level, they generate and execute short-horizon trajectories that must be smooth and dynamically feasible. Path planning, model predictive control (MPC), sampling-based methods, and optimization-based methods are widely used in both fields.
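
The receding-horizon idea behind MPC can be sketched with a much simpler sampling-based controller: try a few candidate commands, roll each one forward through a model, score the predicted trajectory, apply the best command for one step, and repeat. The example below is a toy one-dimensional speed controller, not a full MPC solver; the candidate accelerations, horizon, and cost weights are assumptions chosen for illustration.

```python
def rollout(speed, accel, horizon_steps, dt):
    """Predict speeds over the horizon under a constant acceleration."""
    speeds = []
    for _ in range(horizon_steps):
        speed = max(0.0, speed + accel * dt)  # no reversing in this toy model
        speeds.append(speed)
    return speeds


def plan_accel(speed, target_speed, candidates,
               horizon_steps=10, dt=0.1, effort_weight=0.1):
    """Pick the candidate acceleration with the lowest predicted cost."""
    def cost(accel):
        predicted = rollout(speed, accel, horizon_steps, dt)
        tracking = sum((s - target_speed) ** 2 for s in predicted)
        effort = effort_weight * horizon_steps * accel ** 2
        return tracking + effort
    return min(candidates, key=cost)


# Hypothetical candidate accelerations in m/s^2.
candidates = [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0]
speed = 5.0
for _ in range(50):
    accel = plan_accel(speed, 10.0, candidates)
    speed = max(0.0, speed + accel * 0.1)  # apply only the first step
print(round(speed, 2))
```

With such a coarse candidate set and an effort penalty, the speed settles slightly below the target rather than exactly on it. A real planner would use a richer model, constraints, and a proper optimizer.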

Regarding learning and adaptation, techniques like reinforcement learning, imitation learning, and transfer learning are applied. Embodied artificial intelligence places emphasis on learning balance, gait, or object manipulation through trial-and-error in the physical world. In contrast, autonomous driving leverages learning methods to address unknown scenarios and long-tail decision-making problems. Both face challenges such as low sample efficiency, high exploration risks, and the transfer from simulation to reality. As a result, techniques including domain randomization, realistic noise modeling, and domain adaptation are employed.
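
Domain randomization, in its simplest form, means drawing a fresh set of simulator parameters for every training episode so that a policy cannot overfit to one exact world. The sketch below shows only the sampling step. The parameter names and ranges are hypothetical, and the simulator and learning algorithm are left out.

```python
import random
from dataclasses import dataclass

# Hypothetical parameter ranges; real values depend on the robot or vehicle.
RANGES = {
    "friction": (0.4, 1.0),
    "mass_scale": (0.8, 1.2),
    "sensor_noise_std": (0.0, 0.05),
    "latency_s": (0.0, 0.1),
}


@dataclass(frozen=True)
class SimParams:
    friction: float
    mass_scale: float
    sensor_noise_std: float
    latency_s: float


def sample_params(rng: random.Random) -> SimParams:
    """Draw one randomized simulator configuration."""
    return SimParams(**{name: rng.uniform(lo, hi)
                        for name, (lo, hi) in RANGES.items()})


rng = random.Random(0)  # seeded so that runs are reproducible
episodes = [sample_params(rng) for _ in range(5)]
for params in episodes:
    print(params)
```

Each episode would then configure the simulator with its own `SimParams` before the policy is rolled out.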

Furthermore, both impose equally stringent engineering requirements on system robustness, real-time performance, and safety. Issues like sensor failures, network delays, and actuator malfunctions can disrupt the closed-loop stability. Engineering practices such as redundant design, fault detection and degradation strategies, and runtime monitoring are common in both types of systems. Assessment metrics also exhibit a high degree of overlap, with collision rates, failure counts, tracking errors, task completion rates, energy consumption, and operational efficiency serving as evaluation indicators for both.
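
A minimal sketch of runtime monitoring with a degradation strategy might look like the following. It tracks sensor heartbeats and maps stale sensors to an operating mode. The class name, sensor names, timeout, and the three-mode policy are all assumptions made for illustration; production systems use far more elaborate fault models.

```python
from enum import Enum


class Mode(Enum):
    NOMINAL = "nominal"
    DEGRADED = "degraded"    # keep operating with reduced capability
    SAFE_STOP = "safe_stop"  # bring the system to a safe state


class HealthMonitor:
    """Maps sensor heartbeat timeouts to an operating mode."""

    def __init__(self, sensors, critical, timeout_s):
        self.critical = set(critical)
        self.timeout_s = timeout_s
        # None means the sensor has never reported.
        self.last_seen = {name: None for name in sensors}

    def heartbeat(self, sensor, now):
        self.last_seen[sensor] = now

    def mode(self, now):
        stale = {name for name, seen in self.last_seen.items()
                 if seen is None or now - seen > self.timeout_s}
        if stale & self.critical:
            return Mode.SAFE_STOP
        if stale:
            return Mode.DEGRADED
        return Mode.NOMINAL


monitor = HealthMonitor(["lidar", "camera", "imu"],
                        critical=["imu"], timeout_s=0.2)
for name in ("lidar", "camera", "imu"):
    monitor.heartbeat(name, now=0.0)
print(monitor.mode(now=0.1))
```

Timestamps are passed in explicitly so the logic can be tested without a real clock. The same pattern applies whether the monitored body is a car or a robot arm.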

What Are the Differences Between the Two?

The most fundamental differences between autonomous driving and embodied artificial intelligence lie in physical form and dynamics. Vehicles are non-holonomic systems: they cannot move directly sideways, and their motion is bounded by tire friction and braking capability. Executing an action depends heavily on the vehicle dynamics model and on tire-road interaction. In contrast, the platforms studied in embodied artificial intelligence may be robots with many degrees of freedom or deformable bodies, such as robotic arms that operate flexibly in three-dimensional space or multi-legged robots that adjust their gait on uneven terrain. This leads to different control strategies and modeling priorities. Vehicle work emphasizes tire models and road friction estimation, while other robots may need to address nonlinear coupling, contact mechanics, or even flexible-body modeling.
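
The non-holonomic constraint can be made concrete with the kinematic bicycle model, a common simplification of vehicle motion. The sketch below is an assumption-laden toy: the wheelbase and time step are illustrative values, and tire slip, which matters for real vehicle dynamics, is ignored.

```python
import math


def bicycle_step(x, y, heading, speed, steer, wheelbase=2.7, dt=0.05):
    """Advance a kinematic bicycle model by one Euler time step.

    The vehicle can only move along its current heading. Steering changes
    the heading over time, never the position directly.
    """
    x += speed * math.cos(heading) * dt
    y += speed * math.sin(heading) * dt
    heading += speed / wheelbase * math.tan(steer) * dt
    return x, y, heading


# Drive at 10 m/s with a constant small steering angle (radians).
state = (0.0, 0.0, 0.0)
for _ in range(20):
    state = bicycle_step(*state, speed=10.0, steer=0.1)
print(state)
```

Note that with zero speed the state does not change regardless of the steering angle, which is exactly what distinguishes a car from, say, a robotic arm that can move its end effector in any direction.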

Differences also exist in task priorities and interaction rules. Autonomous driving must obey traffic regulations and follow social conventions when interacting with other road users, including pedestrians and other vehicles. Decision-making therefore has to incorporate rule constraints, interpretability, and mechanisms for tracing legal liability. Embodied artificial intelligence, on the other hand, places greater emphasis on the precision and reliability of physical interaction, such as grasping objects or walking on complex terrain. Its evaluation standards lean more toward task success rate and physical performance than toward regulatory compliance.

The distribution of perception problems also varies. Autonomous driving requires precise perception of moving object trajectories at long distances and prediction of their intentions, demanding high sensitivity to sensor observation range and latency. In contrast, certain embodied artificial intelligence tasks, like robotic arm assembly, rely more on near-field, high-precision tactile or force perception. As a result, tactile sensors and high-bandwidth control loops are particularly crucial in these systems.
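
A back-of-the-envelope calculation shows why range and latency matter so much at driving speeds. The sketch below estimates how far ahead a stationary hazard must be detected for the vehicle to stop in time, as the distance covered during the perception latency plus the braking distance. The speed, latency, and deceleration values are assumptions, and the formula ignores safety margins and the motion of other road users.

```python
def required_detection_range(speed, latency, max_decel):
    """Distance in metres needed to stop for a stationary hazard.

    speed in m/s, latency in seconds, max_decel in m/s^2.
    """
    reaction_distance = speed * latency
    braking_distance = speed ** 2 / (2.0 * max_decel)
    return reaction_distance + braking_distance


# Hypothetical highway case: 30 m/s, 0.3 s end-to-end latency, 6 m/s^2 braking.
print(required_detection_range(30.0, 0.3, 6.0))
```

Under these assumed numbers the result is on the order of tens of metres, and every additional 0.1 s of latency adds another 3 m at this speed. A tabletop assembly task, by contrast, operates at ranges where contact forces matter more than look-ahead distance.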

Differences also emerge in data collection and annotation ecosystems. Autonomous driving can leverage large amounts of driving data, maps, and road network information. However, it faces challenges such as large-scale annotation, privacy, and compliance issues. Data for embodied artificial intelligence is typically scarcer, with different robot forms or tasks requiring specialized collection of real-world interaction data. Thus, sample efficiency, simulation accuracy, and the realism of physical simulations are particularly critical.

Can the Two Learn from Each Other?

Autonomous driving and embodied artificial intelligence have much to learn from each other, and many practitioners who have moved between the two fields report that the transition is not especially difficult. Embodied artificial intelligence emphasizes the co-optimization of body design and control strategy, which offers useful insights for autonomous driving: a vehicle's mechanical structure, suspension system, and sensor layout all affect perception performance and control feasibility. Conversely, the mature experience of autonomous driving in safety engineering, redundant architectures, and large-scale road test data can improve the reliability of embodied artificial intelligence in real-world deployments.

Moreover, both share similar task objectives and employ many common technologies. For instance, improving decision robustness under noisy, delayed, and partially observed conditions is a common bottleneck for both. Techniques such as uncertainty estimation, Bayesian methods, robust control, and runtime monitoring can be introduced across domains. Enhancing sample efficiency is equally crucial for both, and combining model-driven and data-driven methods can reduce risky experiments in the real world. Simulation-to-reality transfer technologies are vital for both, requiring accurate modeling of contact mechanics, sensor noise, and environmental diversity in simulations.
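
As one small example of a Bayesian method that carries across both domains, the sketch below maintains a belief about whether an obstacle is present, given a stream of noisy binary detections. It is a plain binary Bayes filter. The detection and false-alarm probabilities are assumed values, and detections are treated as independent, which real sensors only approximate.

```python
def bayes_update(prior, detected, p_hit=0.9, p_false=0.2):
    """Update P(obstacle present) after one noisy binary detection.

    p_hit:   assumed probability of a detection when an obstacle is present
    p_false: assumed probability of a detection when nothing is there
    """
    if detected:
        like_present, like_absent = p_hit, p_false
    else:
        like_present, like_absent = 1.0 - p_hit, 1.0 - p_false
    evidence = like_present * prior + like_absent * (1.0 - prior)
    return like_present * prior / evidence


belief = 0.5  # start undecided
for detected in [True, True, False, True]:
    belief = bayes_update(belief, detected)
    print(round(belief, 3))
```

A single missed detection lowers the belief without resetting it, which is the kind of graceful behavior under partial observation that the paragraph above describes. A downstream planner can then act on the probability rather than on a hard yes or no.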

In terms of engineering process, both emphasize closed-loop testing and hierarchical verification. The step-by-step verification path commonly adopted in the autonomous driving industry, from simulation to closed test tracks to open roads in limited scenarios, can serve as a reference for deploying embodied artificial intelligence. In terms of institutional design, autonomous driving sets high requirements for compliance, logging, and interpretability, and embodied artificial intelligence will need to strengthen the same capabilities as it enters human living environments.

Whether the system is a vehicle or a robot, predictable behavior, communication of intent, and trust-building are extremely important when it works alongside humans. Adaptive feedback mechanisms, transparent status indications, and controllable degradation strategies can significantly reduce friction between systems and humans, and they deserve joint attention from both fields.

What Is the Relationship Between Autonomous Driving and Embodied Artificial Intelligence?

Autonomous driving can be viewed as an important branch of embodied artificial intelligence. The two overlap heavily in perception, planning, learning, and safety engineering, but differences in physical form, task objectives, and interaction environments lead to distinct emphases in research and engineering practice. Both sides stand to benefit from exchange: insights from embodied artificial intelligence in control, tactile perception, and body design can inform vehicle engineering, while the experience of autonomous driving in large-scale data processing, regulatory compliance, and verification can carry over to other embodied systems.
