How Does the 'Fast and Slow Thinking' Often Discussed in Large Models Affect Autonomous Driving?

11/24 2025

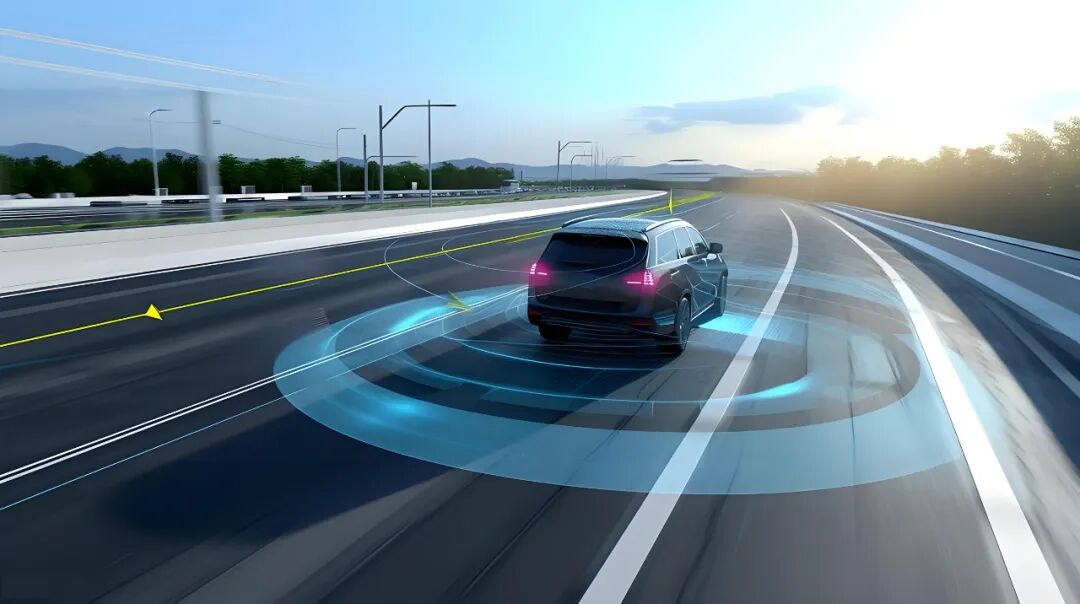

In July 2024, Li Auto unveiled a new autonomous driving architecture built on an end-to-end model, a vision language model (VLM), and a world model, marking a significant milestone in the company's fully in-house intelligent driving R&D.

The algorithm prototype for this architecture draws on the 'fast and slow systems' theory proposed by Nobel laureate Daniel Kahneman, with the aim of having the driving system emulate human thought processes and decision-making. By combining an end-to-end model with a VLM, Li Auto pioneered the industry's first dual-system solution deployed on the vehicle, and it successfully ran the VLM on in-vehicle chips. In this anthropomorphic design, 'System 1' and 'System 2' work together: the system is meant to handle the 95% of routine scenarios quickly and efficiently while dealing thoroughly and comprehensively with the remaining 5% of complex and unknown situations, delivering a smarter, more human-like driving experience. So what exactly do 'fast and slow systems' (fast and slow thinking) entail?

What Are 'Fast Thinking' and 'Slow Thinking'?

'Fast thinking' and 'slow thinking' can be understood as two distinct cognitive modes. Fast thinking responds quickly and relies heavily on intuition and pattern recognition. Slow thinking is deliberate and gradual, working through multi-step logical reasoning or long-term planning. Applied to large models, fast thinking corresponds to the model making an immediate, single-pass prediction or decision under time constraints, while slow thinking reaches conclusions through multiple rounds of reasoning, retrieval of external evidence, and verification in simulation. Neither mode is inherently 'smarter'; each is a tool suited to different scenarios. Some tasks demand speed, while others require depth and thoroughness.

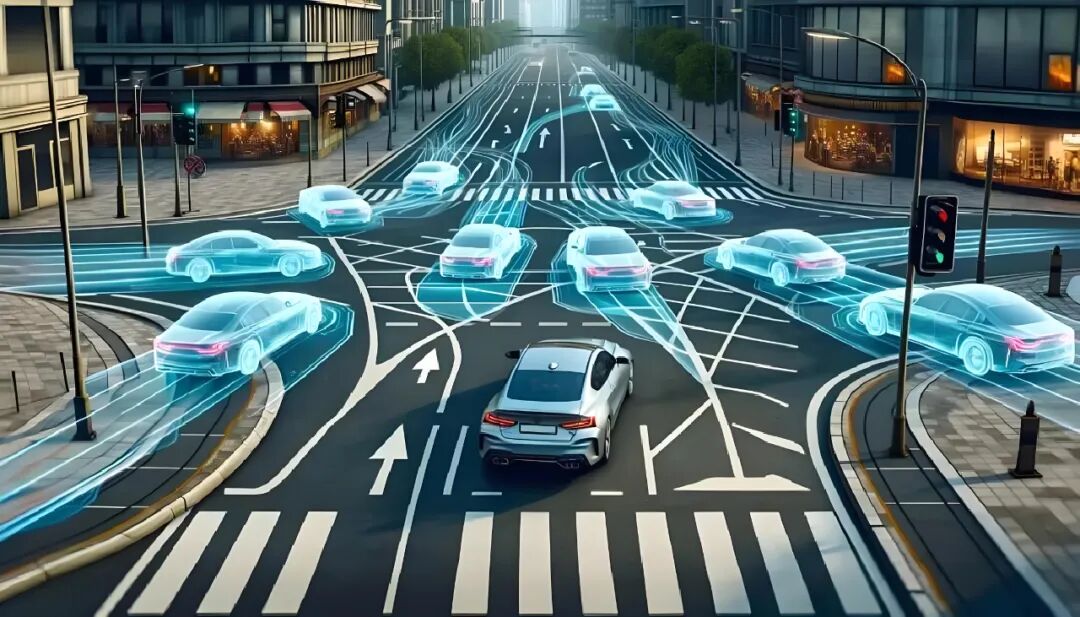

Image Source: Internet

Within large models, these two 'types of thinking' can be embodied in the model structure or in the inference strategy. Fast thinking shows up as lightweight forward inference, cache or index hits, and approximate decision-making. Slow thinking shows up as multi-step chain-of-thought reasoning, retrieval-augmented generation, multiple sampling with self-verification, or handing a problem to a larger model or a simulator for deeper computation. Combining the two judiciously lets the system respond quickly while still handling complex and uncertain situations effectively.
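To make the distinction concrete, here is a minimal Python sketch of a router that tries fast-thinking mechanisms first (a cache lookup, then a single lightweight pass) and escalates to slow thinking (repeated sampling checked by a verifier) only when confidence is low. The class `FastSlowRouter`, the 0.8 threshold, and the toy models are hypothetical placeholders, not any vendor's implementation.

```python
from typing import Callable, Dict, Hashable, Tuple

FastModel = Callable[[Hashable], Tuple[str, float]]   # returns (answer, confidence)
SlowModel = Callable[[Hashable], str]                 # returns one sampled answer
Verifier = Callable[[Hashable, str], bool]            # accepts or rejects a candidate


class FastSlowRouter:
    """Send each query down a cheap fast path first; escalate only when needed."""

    def __init__(self, fast_model: FastModel, slow_model: SlowModel,
                 verifier: Verifier, threshold: float = 0.8, n_samples: int = 5):
        self.fast_model = fast_model
        self.slow_model = slow_model
        self.verifier = verifier
        self.threshold = threshold
        self.n_samples = n_samples
        self.cache: Dict[Hashable, str] = {}

    def answer(self, query: Hashable) -> Tuple[str, str]:
        # Fast path 1: a cache hit costs almost nothing.
        if query in self.cache:
            return self.cache[query], "cache"
        # Fast path 2: one lightweight forward pass with a confidence score.
        result, confidence = self.fast_model(query)
        if confidence >= self.threshold:
            self.cache[query] = result
            return result, "fast"
        # Slow path: sample several times and keep the first candidate that
        # passes the verifier (sampling with self-verification).
        for _ in range(self.n_samples):
            candidate = self.slow_model(query)
            if self.verifier(query, candidate):
                self.cache[query] = candidate
                return candidate, "slow"
        # Nothing verified: fall back to a conservative default.
        return "conservative_default", "fallback"


# Toy stand-ins for real models (hypothetical).
def toy_fast(query):
    return ("keep_lane", 0.95) if query == "routine" else ("unsure", 0.3)

_slow_outputs = iter(["swerve_left", "yield", "yield"])

def toy_slow(query):
    return next(_slow_outputs)

def toy_verifier(query, candidate):
    return candidate == "yield"

router = FastSlowRouter(toy_fast, toy_slow, toy_verifier)
print(router.answer("routine"))                # ('keep_lane', 'fast')
print(router.answer("routine"))                # ('keep_lane', 'cache')
print(router.answer("unprotected_left_turn"))  # ('yield', 'slow')
```

Caching verified slow answers is one simple way for deep reasoning to become a fast response the next time the same situation appears.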

Why Distinguish Fast/Slow Thinking in Autonomous Driving?

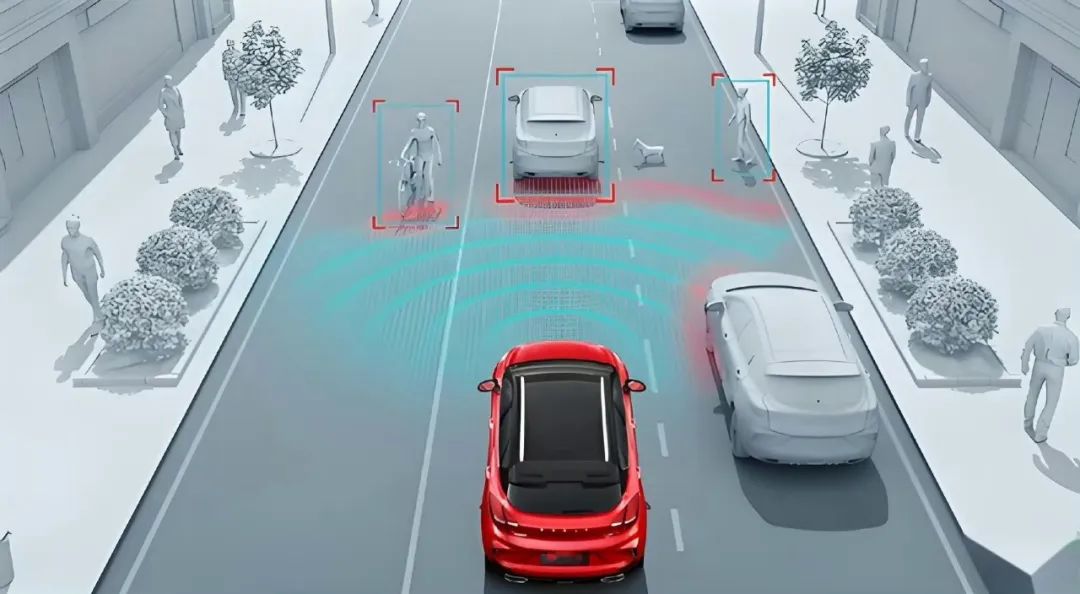

Autonomous driving requires both millisecond-level responsiveness and an understanding of situations that unfold over seconds or even minutes. In everyday driving, the vehicle must continuously make short-term decisions, such as lane keeping, maintaining following distance, and braking, all of which are highly latency-sensitive. At the same time, the system must reason over longer timescales: predicting other vehicles' intentions, resolving right-of-way at complex intersections, and planning strategically based on maps and traffic rules. Framing the problem in terms of fast and slow thinking helps in designing a layered, efficient, and verifiable system architecture.

Fast thinking plays a role akin to the vehicle's 'reflexes' and 'low-level control'. When perception detects an obstacle, or when fused radar, lidar, and camera data trigger an emergency warning, the decision-making module must output a braking or evasive command within an extremely short time. This requires high certainty, low latency, and verifiability, supported by optimized models and hardware. Slow thinking, by contrast, handles more intricate reasoning: completing the scene when visual information is incomplete, predicting multi-step interactions in dense traffic, and assessing compliance to produce safe strategies when rules conflict or scenarios are rare. Because it is not bound by the same tight deadline, slow thinking can draw on more data, simulation tools, and external knowledge bases, and its outputs can be inspected and rolled back.

Image Source: Internet
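A fast-thinking reflex is typically a short, deterministic rule that can be checked exhaustively. The sketch below assumes a time-to-collision (TTC) rule, a common choice for this kind of check; the `Obstacle` type and the 1.5 s and 3.0 s thresholds are illustrative assumptions, not values from Li Auto or any production system.

```python
from dataclasses import dataclass


@dataclass
class Obstacle:
    distance_m: float          # gap to the obstacle along our lane
    closing_speed_mps: float   # positive when the gap is shrinking


def time_to_collision(obs: Obstacle) -> float:
    """TTC in seconds; infinity when the gap is not shrinking."""
    if obs.closing_speed_mps <= 0.0:
        return float("inf")
    return obs.distance_m / obs.closing_speed_mps


def reflex_command(obs: Obstacle, brake_ttc_s: float = 1.5,
                   warn_ttc_s: float = 3.0) -> str:
    """Fixed-threshold rule: constant time, no learned parts, easy to verify."""
    ttc = time_to_collision(obs)
    if ttc < brake_ttc_s:
        return "EMERGENCY_BRAKE"
    if ttc < warn_ttc_s:
        return "WARN_AND_DECELERATE"
    return "NOMINAL"


print(reflex_command(Obstacle(12.0, 10.0)))   # TTC 1.2 s -> EMERGENCY_BRAKE
print(reflex_command(Obstacle(25.0, 10.0)))   # TTC 2.5 s -> WARN_AND_DECELERATE
print(reflex_command(Obstacle(40.0, -2.0)))   # gap growing -> NOMINAL
```

Because the rule has no learned parts and runs in constant time, every branch can be covered by a unit test, which is the kind of verifiability the fast layer needs.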

These two capabilities complement each other. Fast thinking ensures immediate safety, while slow thinking improves long-term correctness and system robustness. Without fast thinking, computational delay would cost the vehicle its chance to respond in an emergency. Without slow thinking, the vehicle would be prone to logical errors or inappropriate responses in complex or ambiguous scenarios.

How Can the Two Types of Thinking Be Integrated into a System?

Implementing 'fast' and 'slow' thinking involves more than splitting a large model in two; it requires building a layered, asynchronous system with monitoring and fallback mechanisms. The perception and low-level control components typically run on a real-time operating system in the vehicle, using pruned deep networks, deterministic filters, and rule-based constraints to achieve low latency and high reliability. This layer also includes confidence estimation and safety-boundary mechanisms that trigger more conservative behavior as soon as uncertainty rises.

Image Source: Internet
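The confidence-estimation and safety-boundary idea can be sketched as a guard that lowers the permitted speed when perception confidence drops. This assumes the perception stack emits a fused confidence score in [0, 1]; the class name `ConfidenceGuard`, the thresholds, and the window length are hypothetical.

```python
from collections import deque


class ConfidenceGuard:
    """Lower the allowed speed as perception confidence falls (hypothetical sketch)."""

    def __init__(self, window: int = 5):
        # Keep recent scores and act on the worst one, so a single good frame
        # cannot immediately lift a restriction triggered by a bad one.
        self.history = deque(maxlen=window)

    def speed_cap_mps(self, confidence: float, nominal_cap_mps: float) -> float:
        self.history.append(confidence)
        worst = min(self.history)
        if worst >= 0.9:
            return nominal_cap_mps          # normal operation
        if worst >= 0.6:
            return 0.5 * nominal_cap_mps    # degraded: slow down
        return 0.0                          # too uncertain: controlled stop


guard = ConfidenceGuard(window=3)
for c in [0.95, 0.95, 0.7, 0.95, 0.95, 0.95]:
    print(c, guard.speed_cap_mps(c, nominal_cap_mps=20.0))
```

In this run the cap drops to 10.0 on the third frame and returns to 20.0 only once the low score has left the three-frame window; that deliberate lag errs on the conservative side.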

The slow thinking module can be deployed in the vehicle, at the edge, or in the cloud, depending on latency and privacy requirements. Its tasks include retrieving historical trajectories, running multiple prediction models, performing forward-looking simulations based on physics or world models, and using larger language or reasoning models for semantic understanding and interpretation of traffic regulations. To improve robustness, the system can use self-consistency sampling, majority voting across multiple inferences, and post-hoc verifiers to filter outputs. It is also common to 'fold' strategies or models derived from slow thinking back into the fast thinking layer, through knowledge distillation, generated training samples, or direct updates to small-model parameters, so that the results of deep reasoning are solidified into low-latency runtime behavior.
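Self-consistency sampling, majority voting, and a post-hoc verifier can be combined in a few lines. In this sketch the samples stand in for plans drawn from a slow reasoning model, and the verifier is a hypothetical rule check; the function name and the 0.5 agreement threshold are assumptions. Returning `None` when agreement is too weak lets the caller keep its current conservative plan.

```python
from collections import Counter
from typing import Callable, Iterable, Optional


def vote_with_verifier(samples: Iterable[str], verifier: Callable[[str], bool],
                       min_agreement: float = 0.5) -> Optional[str]:
    """Filter samples with a verifier, then majority-vote over the survivors.

    The winner is returned only if it accounts for more than `min_agreement`
    of ALL samples (rejected ones included); otherwise None.
    """
    samples = list(samples)
    if not samples:
        return None
    accepted = [s for s in samples if verifier(s)]
    if not accepted:
        return None
    winner, count = Counter(accepted).most_common(1)[0]
    if count / len(samples) > min_agreement:
        return winner
    return None


ALLOWED = {"yield", "proceed_slowly", "stop"}   # hypothetical rule set
samples = ["yield", "yield", "run_red_light", "yield", "proceed_slowly"]
print(vote_with_verifier(samples, lambda plan: plan in ALLOWED))  # yield (3 of 5)
```

Counting agreement against all samples, not just the accepted ones, means a model that frequently proposes rule-breaking plans loses the right to decide, which matches the cautious role the article assigns to slow thinking.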

Two key engineering concerns in combining fast and slow thinking are frequency stratification and interface definition. Modules run at different rates and exchange information through well-defined asynchronous interfaces: high-frequency signals keep control stable, while the lower-frequency outputs of slow thinking serve as intention or strategy suggestions for the high-frequency controller. Redundancy and arbitration are also crucial; when fast and slow thinking conflict, a verifiable set of arbitration rules is needed rather than simply siding with one of them. The system must also provide comprehensive logging and traceability, recording the full slow-thinking reasoning chain to support auditing and replay.
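Frequency stratification and rule-based arbitration can be illustrated with a deterministic simulation instead of real threads. This sketch assumes a 100 Hz fast loop, a 2 Hz slow loop, and a latest-value `Mailbox` as the asynchronous interface; the arbitration rule (a fresh slow suggestion may only lower the speed, and stale suggestions are ignored), the 12 m/s fast-layer limit, and every name here are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Suggestion:
    target_speed_mps: float
    stamp_ms: int              # when the slow layer produced it


class Mailbox:
    """Latest-value slot: the slow layer overwrites, the fast layer only reads,
    so the fast loop never blocks waiting for slow thinking."""

    def __init__(self) -> None:
        self._latest: Optional[Suggestion] = None

    def post(self, s: Suggestion) -> None:
        self._latest = s

    def read(self) -> Optional[Suggestion]:
        return self._latest


def arbitrate(fast_limit_mps: float, s: Optional[Suggestion], now_ms: int,
              max_age_ms: int = 1000) -> Tuple[float, str]:
    """Fixed, testable rule: a fresh suggestion may only lower the speed."""
    if s is None or now_ms - s.stamp_ms > max_age_ms:
        return fast_limit_mps, "fast_only"
    if s.target_speed_mps < fast_limit_mps:
        return s.target_speed_mps, "slow_accepted"
    return fast_limit_mps, "slow_ignored"


def simulate(duration_ms: int = 1500) -> List[Tuple[int, float, str]]:
    mailbox = Mailbox()
    log: List[Tuple[int, float, str]] = []        # every decision kept for replay
    for now_ms in range(0, duration_ms, 10):      # fast loop: 100 Hz
        if now_ms % 500 == 0:                     # slow loop: 2 Hz
            # Hypothetical slow-layer output: 15 m/s at first, 8 m/s from 500 ms on.
            target = 15.0 if now_ms < 500 else 8.0
            mailbox.post(Suggestion(target, now_ms))
        speed, reason = arbitrate(12.0, mailbox.read(), now_ms)
        log.append((now_ms, speed, reason))
    return log


log = simulate()
print(log[0], log[60])   # (0, 12.0, 'slow_ignored') (600, 8.0, 'slow_accepted')
```

Taking the more conservative of the two values is only one possible arbitration rule, but it is easy to verify: the slow layer can never make the vehicle faster than the fast layer allows, and the log records which branch fired on every cycle.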

Specific Applications and Precautions

Imagine a scenario where the vehicle ahead suddenly slows down and drifts toward the shoulder, with pedestrians possibly crossing nearby. Fast thinking immediately computes the safest action, such as decelerating, holding the lane, or evading at once if there is room. This step relies on sensor fusion and an optimized controller and operates on a timescale of milliseconds to tens of milliseconds. In parallel, slow thinking uses historical trajectories and behavior models of surrounding vehicles to predict what the vehicle ahead, the pedestrians, and other road users may do over the next few seconds to more than ten seconds, and assesses the risk under each scenario. If slow thinking judges that a complex interaction is likely, for example an adjacent vehicle cutting in, it sends a more conservative strategy to the fast thinking layer, such as decelerating early or issuing a stronger warning.

Image Source: Internet
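The slow-thinking side of this scenario can be sketched with a constant-velocity lateral prediction for neighboring vehicles. Everything here is an assumption for illustration: the `Track` fields, the 3 s and 10 s thresholds, and the speed factors; real systems use far richer multi-modal predictors.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class Track:
    lateral_offset_m: float    # distance outside our lane boundary (<= 0: already inside)
    lateral_speed_mps: float   # negative = drifting toward our lane


def time_to_lane_entry(track: Track) -> float:
    """Constant-velocity estimate of when a neighbor crosses into our lane."""
    if track.lateral_offset_m <= 0.0:
        return 0.0                                  # already in our lane
    if track.lateral_speed_mps >= 0.0:
        return float("inf")                         # not moving toward us
    return track.lateral_offset_m / -track.lateral_speed_mps


def slow_layer_strategy(tracks: Iterable[Track], horizon_s: float = 10.0,
                        current_target_mps: float = 15.0) -> Tuple[float, str]:
    """Return (suggested target speed, advisory) for the fast layer.

    This is a suggestion only; the fast layer keeps its own limits and veto.
    """
    soonest = min((time_to_lane_entry(t) for t in tracks), default=float("inf"))
    if soonest <= 3.0:
        return 0.6 * current_target_mps, "early_decel_and_warn"
    if soonest <= horizon_s:
        return 0.8 * current_target_mps, "increase_gap"
    return current_target_mps, "no_change"


neighbors = [Track(1.2, -0.5), Track(3.5, 0.1)]   # first one enters in about 2.4 s
print(slow_layer_strategy(neighbors))
```

The output is deliberately a hint (a lower target speed plus an advisory label) rather than a control command, matching the division of labor described above.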

Of course, one must stay alert to the 'hallucinations' and uncertainty of large models. A slow-thinking model that lacks real sensor detail can produce unreliable reasoning, which is highly dangerous in autonomous driving. Slow thinking should therefore serve as decision support rather than the sole arbiter. A 'verifiable veto' is crucial: any suggestion from slow thinking must pass a set of testable safety conditions before the high-frequency controller adopts it. Another risk is latency and resource contention; if slow thinking consumes compute that should belong to fast thinking, overall system performance suffers. Resource isolation, priority scheduling, and model compression are therefore needed to avoid this.
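A 'verifiable veto' can be expressed as a list of plain inequalities that a slow-thinking proposal must satisfy before the controller adopts it. The `Proposal` and `State` types, the `adopt` helper, and the numeric bounds below are hypothetical; a real system would derive its bounds from its own safety case.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Proposal:
    target_speed_mps: float
    accel_mps2: float            # negative = braking


@dataclass
class State:
    speed_limit_mps: float
    gap_to_lead_m: float


# Hypothetical bounds, for illustration only.
MAX_ACCEL = 2.0
MAX_DECEL = -6.0
MIN_HEADWAY_S = 1.5


def veto_checks(p: Proposal, s: State) -> Tuple[bool, List[str]]:
    """Each check is a plain inequality, so each can be unit-tested on its own."""
    failures: List[str] = []
    if not (0.0 <= p.target_speed_mps <= s.speed_limit_mps):
        failures.append("speed_out_of_range")
    if not (MAX_DECEL <= p.accel_mps2 <= MAX_ACCEL):
        failures.append("accel_out_of_bounds")
    if p.target_speed_mps > 0.0 and s.gap_to_lead_m / p.target_speed_mps < MIN_HEADWAY_S:
        failures.append("headway_too_short")
    return (not failures, failures)


def adopt(p: Proposal, s: State, fallback: Proposal) -> Tuple[Proposal, List[str]]:
    """Adopt the proposal only if every check passes; otherwise keep the fallback."""
    ok, failures = veto_checks(p, s)
    return (p if ok else fallback), failures


state = State(speed_limit_mps=16.7, gap_to_lead_m=30.0)
current_plan = Proposal(target_speed_mps=10.0, accel_mps2=-1.0)   # conservative fallback
print(adopt(Proposal(14.0, -1.0), state, current_plan))   # accepted, no failures
print(adopt(Proposal(25.0, 3.0), state, current_plan))    # vetoed on all three checks
```

Returning the list of failed checks, not just a boolean, also gives the log a precise record of why a suggestion was rejected.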

Zhizhi Zuiqianyan recommends continuously verifying slow-thinking strategies in high-fidelity simulation and closed-loop testing to ensure they yield controllable results under extreme boundary conditions, and partially solidifying slow-thinking conclusions into small fast-thinking models through data distillation to balance deep reasoning with low-latency response. It also recommends multi-layer monitoring, including real-time health checks and confidence thresholds, that immediately triggers a conservative mode or a pull-over stop when confidence drops. Finally, keeping slow thinking interpretable and recording its reasoning trajectories supports accident replay, liability determination, and regulatory compliance.
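Solidifying slow-thinking conclusions into a small fast model can be sketched as fitting a tiny student to labels produced offline by an expensive teacher. Here the 'teacher' is a hypothetical step-by-step braking rollout (1 s reaction time, 6 m/s² deceleration, 2 m margin, all assumed values) and the student is a straight line fitted by least squares, then shifted upward so it never under-predicts on the training speeds. This is a simplified stand-in for knowledge distillation, not a production recipe.

```python
from typing import Callable, List


def slow_teacher_gap(speed_mps: float, reaction_s: float = 1.0,
                     decel_mps2: float = 6.0, dt: float = 0.01) -> float:
    """Stand-in for slow thinking: roll out a braking maneuver step by step and
    return the gap (m) needed to stop, plus a 2 m standstill margin."""
    distance = speed_mps * reaction_s          # travelled before braking starts
    v = speed_mps
    while v > 0.0:
        dv = min(v, decel_mps2 * dt)           # speed lost in this step
        # Average speed over the step (slightly conservative on the final,
        # partial step, which really lasts less than dt).
        distance += (v - dv / 2.0) * dt
        v -= dv
    return distance + 2.0


def distill_conservative_line(xs: List[float], ys: List[float]) -> Callable[[float], float]:
    """Fit a student y = a + b*x to teacher labels by least squares, then raise
    the intercept so the student never under-predicts on the training points."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = my - b * mx
    margin = max(y - (a + b * x) for x, y in zip(xs, ys))
    a += max(margin, 0.0)
    return lambda x: a + b * x


speeds = [float(v) for v in range(0, 31, 2)]      # 0..30 m/s, labelled offline
labels = [slow_teacher_gap(v) for v in speeds]    # expensive teacher calls
student = distill_conservative_line(speeds, labels)
print(round(student(17.0), 2), round(slow_teacher_gap(17.0), 2))
```

At runtime only the one-line student is evaluated, so the cost of the rollout is paid once, offline; the upward shift trades some efficiency for a guarantee (on the training speeds) that the fast model errs on the safe side.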

Final Remarks

Bringing the 'fast thinking' and 'slow thinking' of large models into autonomous driving is not simply a matter of placing two intelligent agents in the vehicle; they must be designed as complementary modules. Fast thinking provides millisecond-level safety responses and verifiable low-level control; slow thinking provides predictions across longer time horizons, strategy assessment, and complex semantic understanding. Clear interfaces, priority settings, redundancy, and arbitration mechanisms are essential between them so that 'deep thinking' never undermines 'immediate reaction'.

The key lies in a layered architecture, resource isolation, simulation verification, and solidifying the knowledge produced by slow thinking into fast thinking through distillation or regularization. Safety and traceability cannot be compromised; every suggestion from slow reasoning must pass rigorous safety checks before adoption. Only then can the vehicle 'do the right thing' in an emergency and 'think clearly about why' in complex scenarios. Working together, the two capabilities can genuinely improve the robustness and deployability of autonomous driving systems.

-- END --