On-board AI Safety Specification ISO/PAS 8800: An Attempt to Verify the Safety of Automotive Artificial Intelligence.

11/24 2025

Produced by Zhineng Zhixin

The development of assisted driving and autonomous driving technologies has accelerated across the board, and artificial intelligence systems have gradually become a critical part of the decision-making chain for vehicle functions. Unlike traditional vehicle systems, which rely mainly on rule-based logic, artificial intelligence poses unprecedented challenges to vehicle safety because it is data-driven, non-deterministic, and difficult to fully explain.

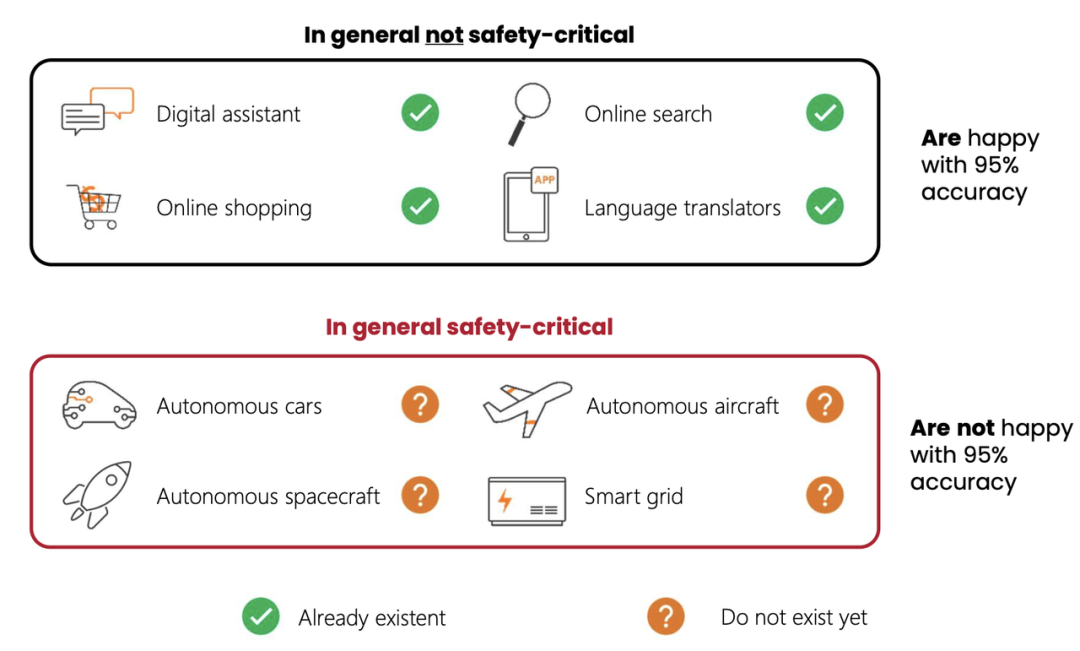

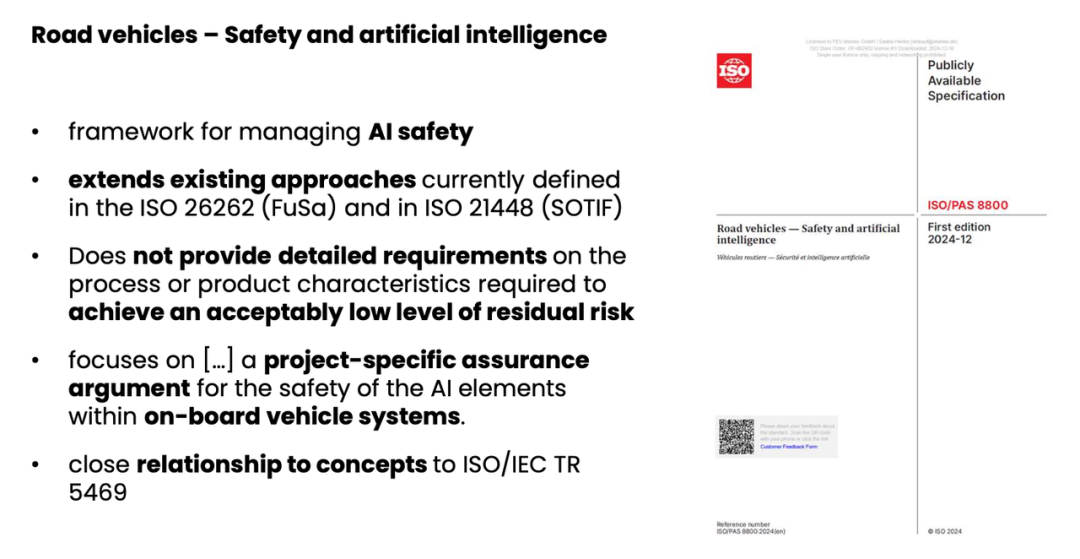

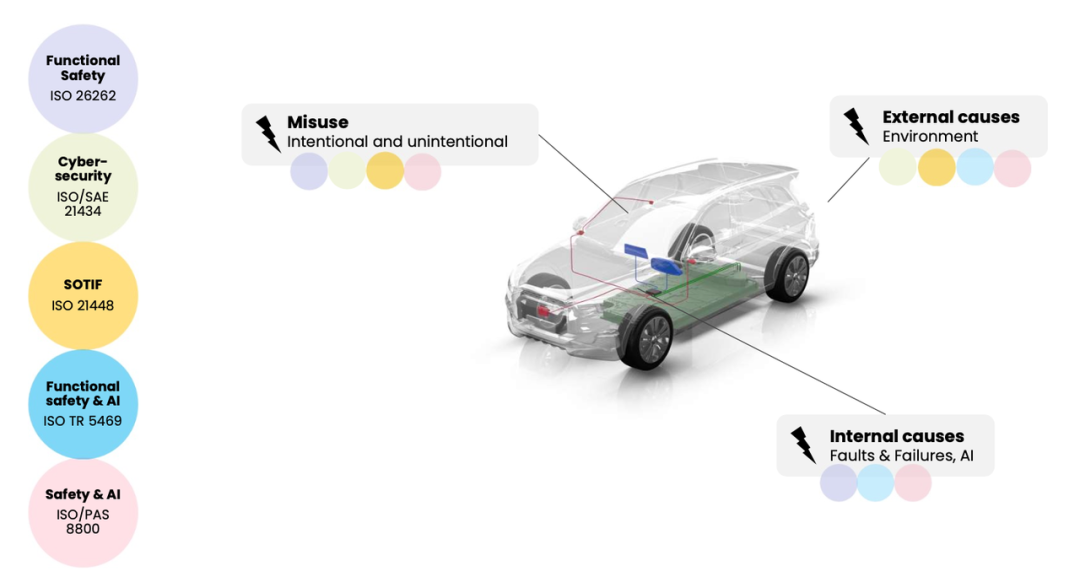

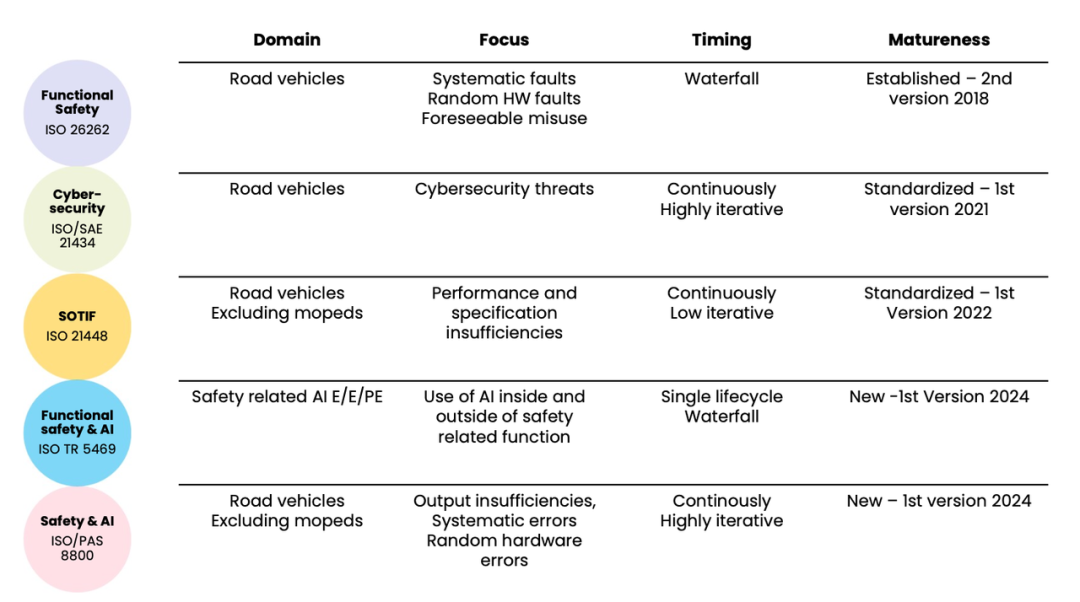

Established safety standards, such as ISO 26262 for functional safety and ISO 21448 for safety of the intended functionality (SOTIF), lay the foundation for automotive safety but struggle to directly cover the characteristics of artificial intelligence models.

ISO/PAS 8800, formally released in 2024, marks the industry's first attempt to establish a dedicated safety verification framework for on-board AI systems.

This standard does not aim to provide strict quantitative thresholds or prescribe a unified process. Instead, through a framework structure, it enables different automakers, supply chain participants, and AI developers to jointly construct a 'project-specific' safety verification system. This article draws on the overall content of ISO/PAS 8800 and examines its connections to existing standards, the challenges of engineering practice, and the industry's ongoing attempts at solutions.

Part 1: ISO/PAS 8800 - From the Dilemma of Safety-Critical Applications to a Trustworthy AI Framework

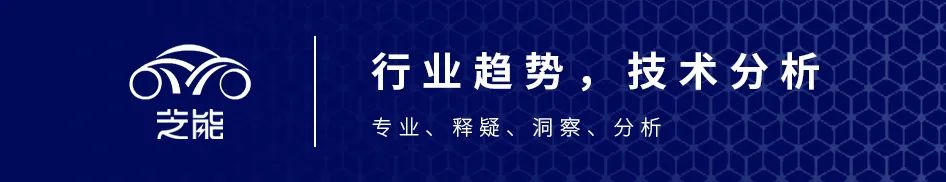

The automotive electrical and electronic architecture is becoming increasingly complex, and the role of AI systems in vehicles is undergoing structural change. Until recently, AI was mostly used in experience-oriented, non-safety-critical scenarios, such as speech recognition, in-car personalization, or simple image classification.

The accuracy of such applications typically only needs to exceed 90% to meet user-experience expectations, and occasional errors do not lead to safety consequences. However, when AI enters areas like autonomous driving, intelligent braking, pedestrian recognition, and collision avoidance decision-making, traditional accuracy metrics are clearly no longer sufficient.

A traffic sign recognition model with 95% accuracy may seem reliable in terms of experience but could mean one misjudgment every 20 recognitions, potentially leading to fatal risks in high-speed scenarios.
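The arithmetic behind that figure can be sketched in a few lines. The function names and the example of 200 signs per trip are illustrative assumptions, and the calculation treats each recognition as independent, which real perception errors often are not.

```python
def expected_errors(accuracy: float, n_recognitions: int) -> float:
    """Expected number of misjudgments, assuming independent recognitions."""
    return (1.0 - accuracy) * n_recognitions


def prob_at_least_one_error(accuracy: float, n_recognitions: int) -> float:
    """Probability that at least one of n recognitions is wrong."""
    return 1.0 - accuracy ** n_recognitions
```

Under these assumptions, `expected_errors(0.95, 20)` gives one expected error per 20 recognitions, and `prob_at_least_one_error(0.95, 200)` shows that a trip with 200 signs is almost certain to contain at least one misjudgment.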

It is precisely because of this fundamental difference that a structural disconnect appears across the entire engineering approach when AI moves from non-critical to safety-critical scenarios.

Traditional engineering systems rely on rule-based logic, verifiable state machines, traceable signal pathways, precise quantitative indicators, and clearly defined failure modes. In contrast, AI systems are composed of statistical models, probabilistic outputs, and data-driven behaviors, with enormous parameter counts and behaviors that are difficult to fully explain. Traditional verification methods are almost impossible to apply directly.

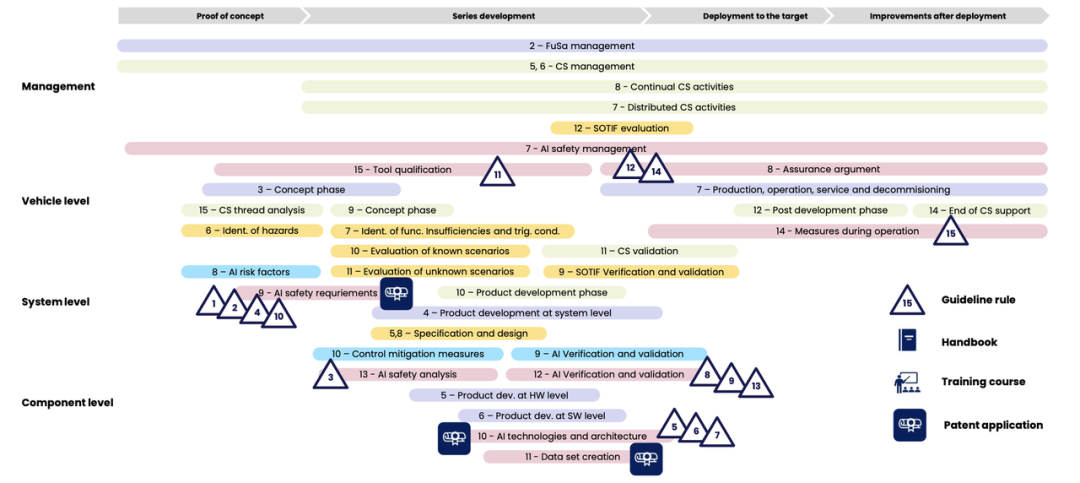

Starting in 2022, the international engineering community began trying to establish working methods for safety-critical artificial intelligence applications, including a set of golden rules, rules covering the full AI lifecycle, and architectural design guidance. The EU AI Act, which entered into force in August 2024 with obligations phasing in from 2025, sets out risk classification and transparency requirements for AI from a regulatory perspective, in particular a regulatory framework for high-risk AI, and lays groundwork for standardizing vehicle AI.

The release of ISO/PAS 8800 can be seen as the industry's 'first attempt to systematically answer how on-board AI should be proven safe.' Its core positioning is not to replace existing standards but to serve as a bridge between artificial intelligence and automotive safety systems.

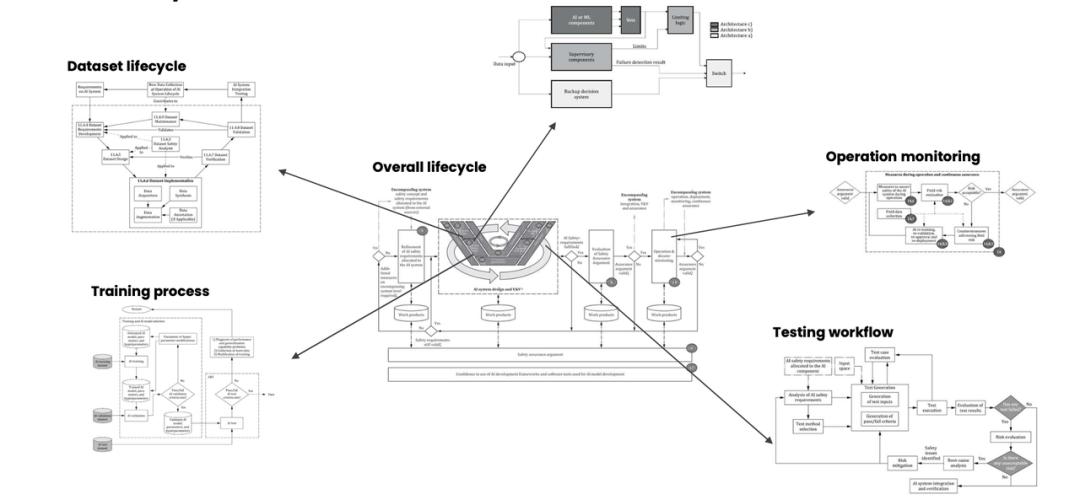

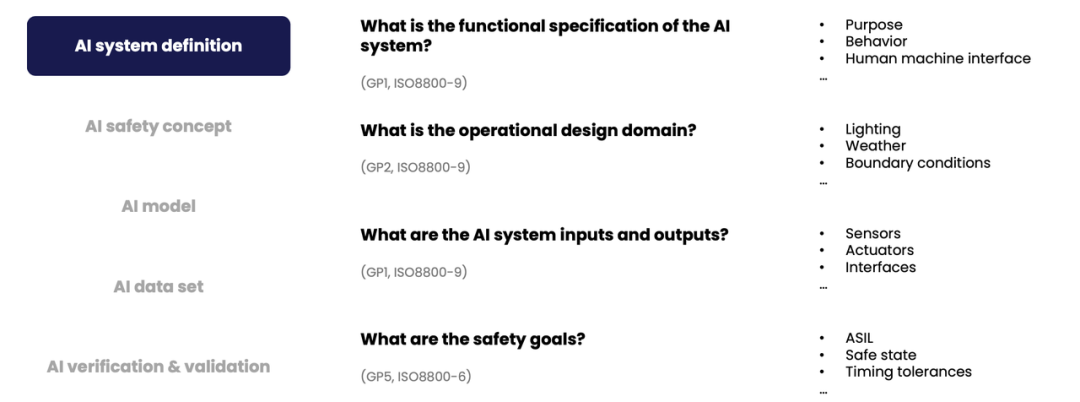

The standard clarifies the role of AI systems in vehicle safety architecture, describes a series of key questions that need to be answered when constructing safety verification, and points out how lifecycle aspects such as system architecture, dataset management, model training, verification and validation, and operational monitoring should be incorporated into the safety engineering system.

From the perspective of the standard itself, it is a 'safety verification framework' that specifies requirements for AI systems, covering functional specifications, the operational design domain (ODD), input/output definitions, safety goals, redundancy strategies, data plausibility checks, model interpretability, overfitting control, uncertainty measurement, and label bias control, among others. However, it does not provide quantitative engineering thresholds.

The reason for this structure is that the usage scenarios of AI vary greatly, making it difficult to cover all situations with unified indicators, especially since neural network models perform significantly differently across different vehicles, tasks, and sensors.

In practice, ISO/PAS 8800 guides developers to re-examine the role of AI from a systems engineering perspective.

For example, in vehicle perception systems, the model's output is no longer judged on accuracy alone; the team must answer engineering questions. Can the system provide reliable results under different lighting conditions, extreme weather, and boundary conditions? Can the model's uncertainty be monitored? Might the input data contain implausible signals? Have boundary behaviors been analyzed? Are misclassifications weighted by their safety impact? All of these must ultimately be reflected in the safety verification evidence.

This also highlights the most important value of ISO/PAS 8800: incorporating artificial intelligence into a 'discussable, verifiable, and manageable' engineering system.

The standard also exposes fundamental challenges that the industry has not yet resolved. Due to the lack of mathematically rigorous definitions and a complete quantitative framework, AI safety verification still relies on project-specific circumstances, and engineering methods may differ among various automakers or supply chain participants.

Deep neural networks, the core of most AI systems, are still not described in depth in the standard because of their opacity and unpredictability, so the industry still needs additional methods to fill this gap.

Part 2: Key Challenges and Viable Paths in AI Safety Engineering

To truly understand the significance of ISO/PAS 8800, one must grasp the core challenges of AI system safety from an engineering implementation perspective.

In vehicles, an AI model must go through multiple stages from definition to deployment, including functional definition, architectural design, dataset construction, model training, testing and validation, system integration, and operational monitoring. Each stage can affect its final safety.

Functional Definition and Operational Design Domain

Unlike traditional systems, where behavior can be precisely defined by rules, the functionality of AI systems is often implicitly determined by data. Therefore, it is necessary to additionally define very strict expected usage scenarios, input types, target behaviors, and boundary conditions in safety engineering.

ISO/PAS 8800 incorporates these elements into the primary part of safety verification, requiring engineering teams to clearly delineate the scenarios in which the AI model should function and adopt necessary protection mechanisms in the architecture, such as rule-based judgments, voting mechanisms, redundant models, or confidence thresholds, to prevent AI from outputting high-risk results when it exceeds its capabilities.
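One of the protection mechanisms named above, a confidence threshold combined with an operational design domain check, might look like the following minimal sketch. The `Prediction` type, the `guarded_output` function, and the 0.99 threshold are hypothetical and not taken from the standard; a raw model score is also not automatically a calibrated confidence.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Prediction:
    label: str
    confidence: float  # model's score for `label`, in [0, 1]


def guarded_output(pred: Prediction, in_odd: bool,
                   min_confidence: float = 0.99) -> Optional[str]:
    """Return the label only inside the ODD and at or above the threshold.

    Returning None signals the downstream system to use a fallback,
    for example a rule-based default or a redundant channel.
    """
    if not in_odd:
        return None
    if pred.confidence < min_confidence:
        return None
    return pred.label
```

The design choice here is that the guard never substitutes its own answer; it only withholds the model's answer, leaving the fallback decision to the surrounding architecture.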

Dataset Issues

The capabilities of deep learning models are determined by data, and datasets themselves may suffer from biases, missing data, labeling errors, or insufficient rare scenarios.

ISO/PAS 8800 imposes explicit requirements on datasets, including the collection and systematic identification of extreme cases, verification of the labeling process, bias control, data augmentation strategies, and update mechanisms under environmental changes.

For engineering teams, this means that data management will become an essential part of safety engineering, not just a preliminary preparation for model training.
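Two of the dataset checks mentioned above, finding under-represented scenarios and verifying labels, can be sketched with the standard library alone. The function names and the idea of comparing two independent annotators are assumptions for illustration; the standard does not prescribe these particular checks.

```python
from collections import Counter
from typing import Dict, List, Sequence


def underrepresented_classes(labels: Sequence[str], min_count: int) -> Dict[str, int]:
    """Classes with fewer than `min_count` samples (candidate rare scenarios).

    Classes that are entirely absent from `labels` cannot be detected here.
    """
    counts = Counter(labels)
    return {cls: n for cls, n in counts.items() if n < min_count}


def label_disagreements(annot_a: Sequence[str], annot_b: Sequence[str]) -> List[int]:
    """Indices where two independent annotators disagree."""
    if len(annot_a) != len(annot_b):
        raise ValueError("annotation lists must have the same length")
    return [i for i, (a, b) in enumerate(zip(annot_a, annot_b)) if a != b]
```

Samples flagged by `label_disagreements` would typically be sent for review, while the output of `underrepresented_classes` points to where further collection or augmentation may be needed.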

At the model level, ISO/PAS 8800 requires developers to provide interpretability analysis, reproducibility verification, overfitting control, uncertainty measurement, etc., and to provide corresponding engineering control methods in safety verification.

Reproducibility deserves particular attention: for large deep neural networks, differences in training seeds, hardware, and parallel computing methods can lead to different outputs. The standard requires developers to control the determinism of model training and, when necessary, provide hardware consistency analysis and configuration records.
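A configuration record of this kind could be as simple as the sketch below, which fixes a seed and fingerprints the run settings. It uses only the Python standard library; the `training_record` function and its fields are hypothetical, and a real training framework has its own seeds and determinism settings that are not shown here.

```python
import hashlib
import json
import platform
import random
import sys


def training_record(seed: int, hyperparams: dict) -> dict:
    """Seed the stdlib RNG and return a record that identifies this run."""
    random.seed(seed)
    record = {
        "seed": seed,
        "hyperparams": hyperparams,
        "python": sys.version.split()[0],
        "machine": platform.machine(),
    }
    # Hash the canonical JSON form so two runs can be compared quickly.
    canonical = json.dumps(record, sort_keys=True).encode("utf-8")
    record["fingerprint"] = hashlib.sha256(canonical).hexdigest()
    return record
```

Two runs with the same seed, hyperparameters, interpreter version, and machine type produce the same fingerprint, so a mismatch is a quick signal that something in the setup changed.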

The most challenging issues arise from the verification and validation stages.

Traditional software can achieve high-confidence verification through code reviews, path coverage, and boundary analysis, but AI models cannot be verified for completeness in these ways.

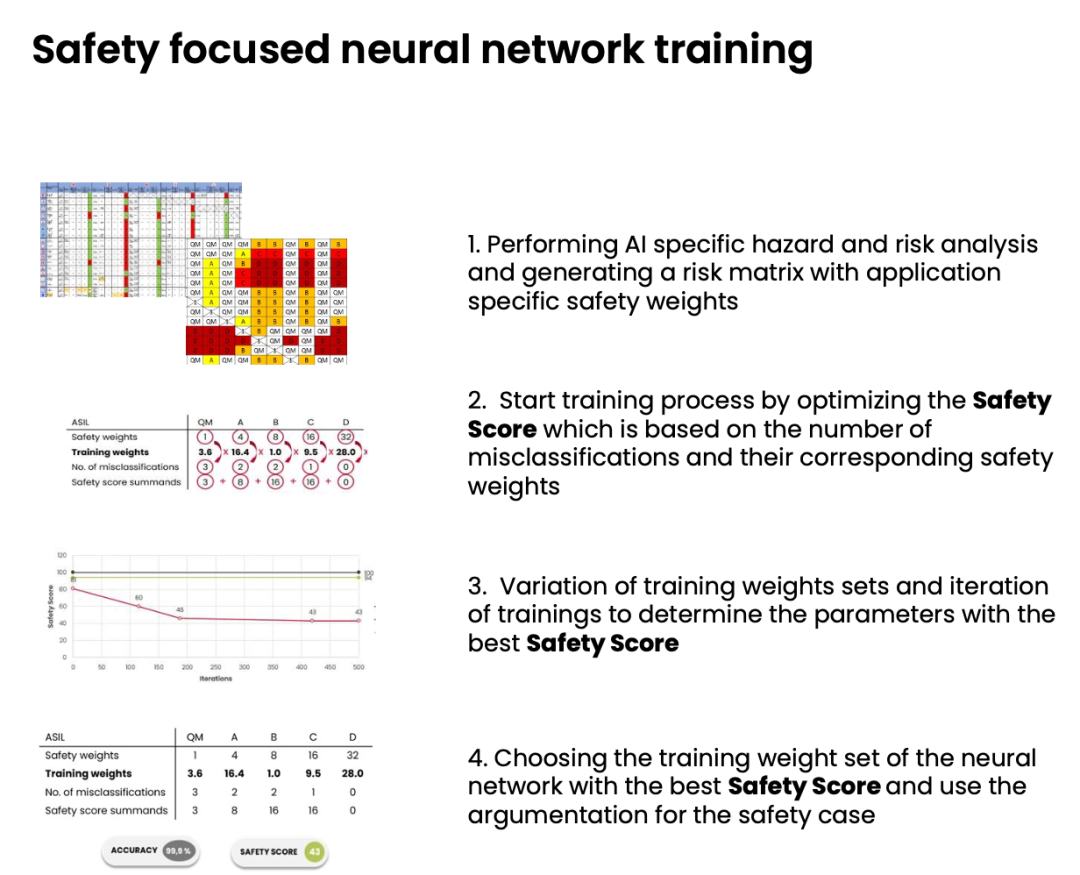

Therefore, ISO/PAS 8800 adopts an indicator-based verification approach, dividing verification indicators into three categories: performance-related, safety-related, and system-related.

However, the standard still does not provide final quantitative thresholds, meaning safety verification must draw on the risk acceptance principles referenced in ISO 21448, such as GAMAB (globalement au moins aussi bon, at least as good overall), ALARP (as low as reasonably practicable), or MEM (minimum endogenous mortality), to derive quantitative targets.

For example, in traffic sign recognition for an SAE Level 5 vehicle, an ASIL C classification would require the model's misrecognition probability to stay below a specific threshold, which means the system needs a safety-related accuracy rate close to 99.99%. This figure is still difficult to achieve in most industrial systems.
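To give a sense of why a figure like 99.99% is demanding, the sketch below estimates how many failure-free test samples would be needed to support such a claim statistically. It is an illustration, not a method from the standard: it assumes independent test samples with zero observed failures, and the 95% confidence level is an arbitrary choice.

```python
import math


def min_failure_free_samples(max_failure_rate: float, confidence: float) -> int:
    """Smallest n such that n failure-free independent trials support the
    claim 'failure rate <= max_failure_rate' at the given confidence.

    Derived from the condition (1 - p)^n <= 1 - confidence.
    """
    if not (0.0 < max_failure_rate < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("both arguments must lie strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - max_failure_rate))
```

With `max_failure_rate=1e-4` and `confidence=0.95`, the result is roughly 30,000 consecutive correct recognitions, and a single observed failure would invalidate this simple zero-failure argument.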

The industry has begun to explore new solutions. Neural network training methods based on misclassification risk weights can enable models to not only pursue overall accuracy during training but also focus on reducing the occurrence of safety-critical misclassifications, thereby providing an indicator system usable for safety verification.
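One possible way to express such risk weighting is to add an expected misclassification cost to the usual cross-entropy loss. The sketch below is an assumption about how this could be formulated, not the specific method the industry work uses; the `risk_weighted_loss` function and the cost matrix layout are hypothetical.

```python
import math
from typing import Sequence


def risk_weighted_loss(probs: Sequence[float], true_idx: int,
                       cost: Sequence[Sequence[float]]) -> float:
    """Cross-entropy plus the expected misclassification cost.

    probs: predicted class probabilities (expected to sum to 1).
    cost[i][j]: penalty for predicting class j when the truth is class i;
                cost[i][i] is expected to be 0.
    """
    cross_entropy = -math.log(max(probs[true_idx], 1e-12))
    expected_cost = sum(p * c for p, c in zip(probs, cost[true_idx]))
    return cross_entropy + expected_cost
```

With a cost matrix that penalizes missing a stop sign more heavily than confusing two speed limits, the same amount of misplaced probability yields a larger loss in the safety-critical case, which steers training toward avoiding those errors first.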

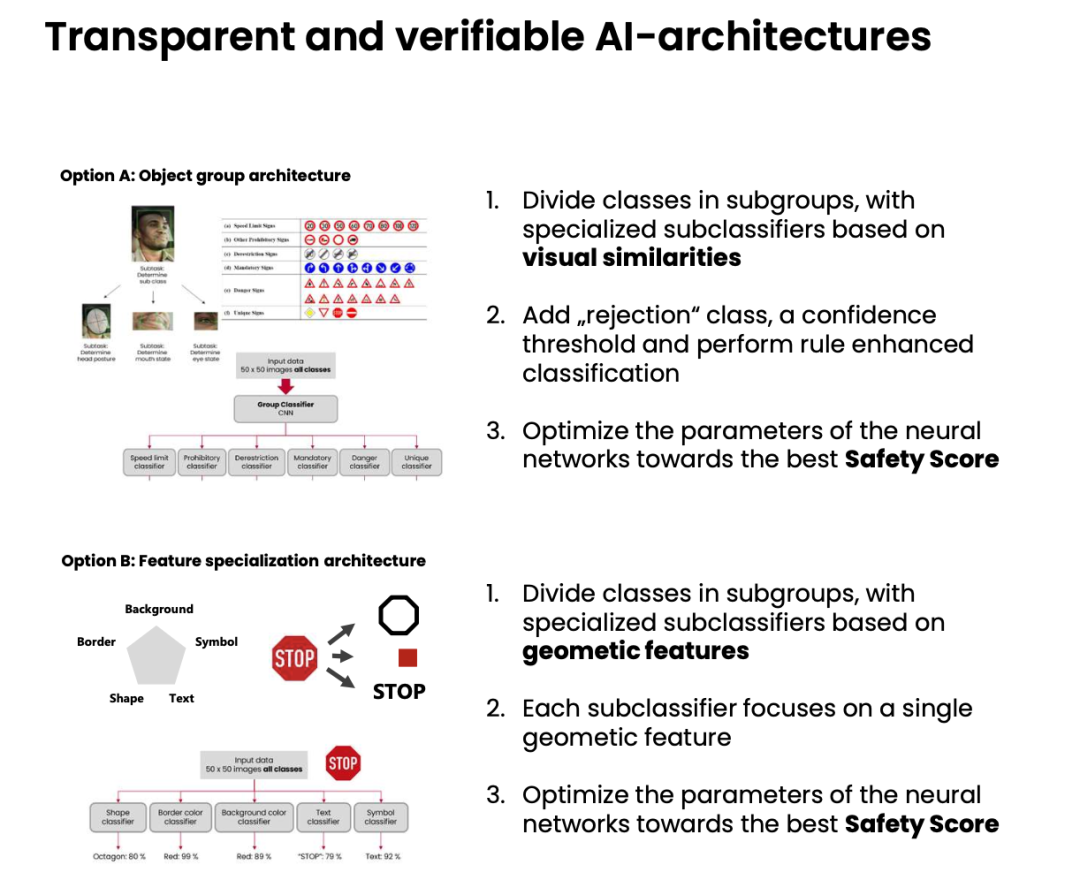

Other solutions come from the AI architecture itself.

By introducing transparent and verifiable architectures, such as target grouping classifiers, parallel classification systems based on feature decomposition, and confidence rejection strategies, the opacity of models can be reduced, and engineering controllability can be improved.

These architectures decompose complex classification problems into multiple independent sub-problems, with each sub-classifier targeting a single structural feature, thereby improving the overall system's interpretability and verifiability and ultimately making safety verification more transparent.
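A feature-decomposed classifier with a rejection strategy can be sketched as follows. The sub-classifiers themselves are not shown; each is assumed to return a feature value and a confidence. The `SIGN_TABLE` contents, the `combine` function, and the 0.9 threshold are hypothetical.

```python
from typing import Dict, Optional, Tuple

# Hypothetical lookup: (shape, color, symbol) -> sign class.
SIGN_TABLE: Dict[Tuple[str, str, str], str] = {
    ("octagon", "red", "text_stop"): "stop",
    ("triangle", "red", "none"): "yield",
    ("circle", "red", "number_30"): "speed_limit_30",
}

# Each sub-classifier result is (feature value, confidence in [0, 1]).
SubResult = Tuple[str, float]


def combine(shape: SubResult, color: SubResult, symbol: SubResult,
            min_confidence: float = 0.9) -> Optional[str]:
    """Combine independent feature classifiers; reject when in doubt.

    Returns None if any sub-classifier is below the threshold or if the
    feature combination does not correspond to a known sign.
    """
    results = (shape, color, symbol)
    if any(conf < min_confidence for _, conf in results):
        return None
    key = (shape[0], color[0], symbol[0])
    return SIGN_TABLE.get(key)
```

Because each sub-classifier answers one narrow question, each can be tested on its own, and an inconsistent combination such as a blue octagon is rejected by the lookup rather than forced into the nearest class.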

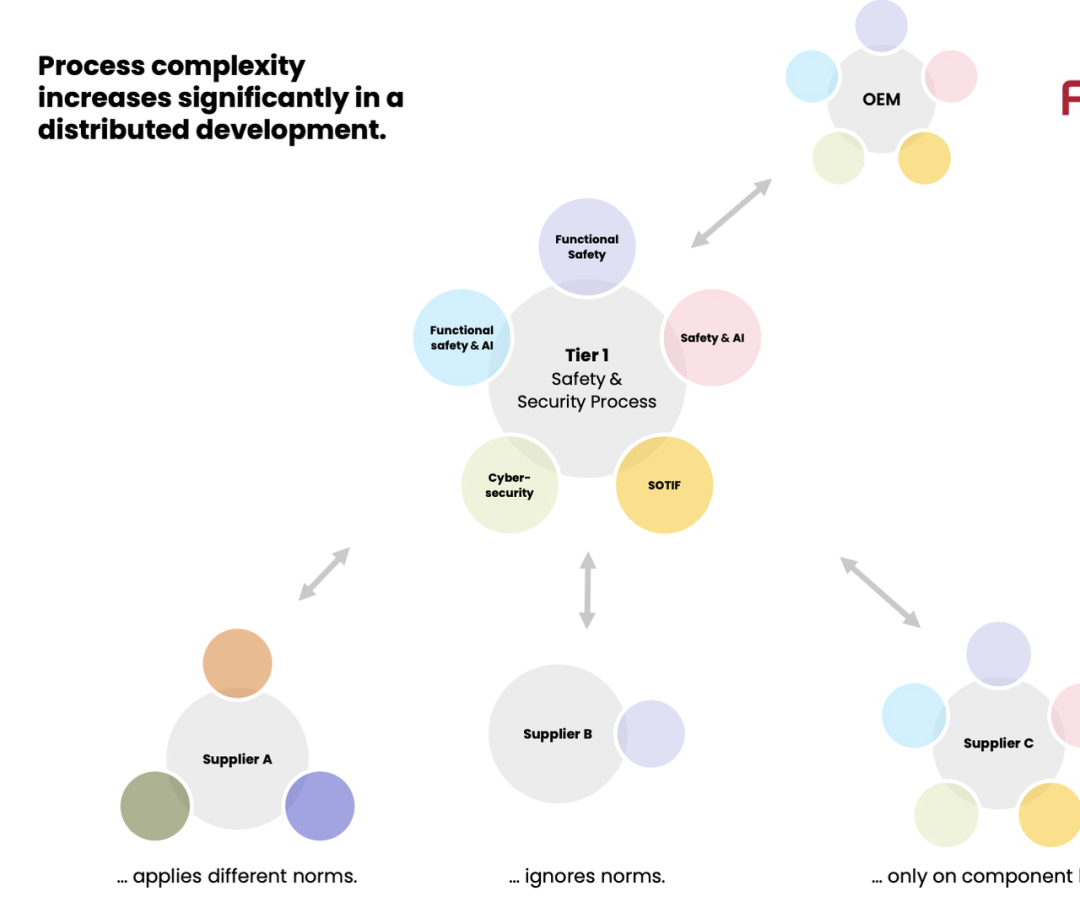

The standards landscape as a whole still faces enormous challenges.

The different standards involved (ISO 26262, SOTIF, cybersecurity standards, and artificial intelligence-related technical reports) differ significantly in scope, processes, and risk definitions. When development is distributed across multiple levels such as OEMs, Tier 1s, and Tier 2s, different teams may adopt different standards at the same time or even overlook some.

Although ISO/PAS 8800 attempts to incorporate AI into the overall process, it lacks explicit integration guidance. This means the industry must construct its own cross-standard integrated development process, realigning tasks at the vehicle, system, and component levels to ensure AI safety activities run through the entire lifecycle.

AI safety is not a task that a single team can accomplish but a cross-disciplinary effort spanning architecture, safety engineering, data engineering, model engineering, testing engineering, and system integration engineering.

Summary

From a systems engineering perspective, ISO/PAS 8800 is not a mature standard that can be immediately implemented. However, as the industry's first formal attempt at safety verification for on-board artificial intelligence, it enables AI systems to be incorporated into an analyzable, verifiable, and traceable engineering system while also establishing a common language for OEMs and the supply chain.