Beyond the Robot Controversy: XPENG Unveils a Subtle Yet Impactful Physical AI Strategy

11/25 2025

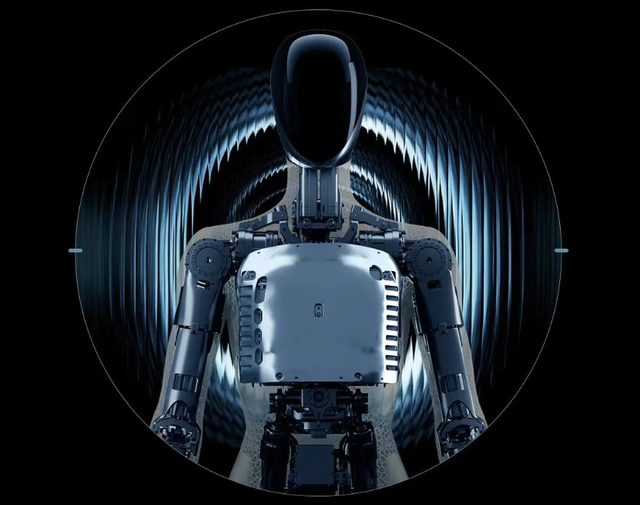

XPENG Tech Day has been one of the most talked-about tech conferences in recent memory. The spotlight fell on the embodied-intelligence robot IRON, whose almost human-like movements and skin texture set off a whirlwind of debate. Some were astounded by the technological leap, while skeptics questioned the authenticity of the footage, jokingly asking, "Is there a real person operating it from behind the scenes?" The debate quickly overshadowed the rest of the conference and left XPENG open to accusations of over-marketing and sensationalism.

Yet, there was far more to XPENG's presentation than just the robot.

To be precise, IRON was merely the showpiece of the entire event. What truly warrants industry attention is the underlying architecture XPENG is striving to construct, known as 'Physical AI.' This architecture encompasses technological advancements such as the second-generation Vision-Language-Action (VLA) model, Robotaxi services, and flying cars, all aimed at addressing a fundamental question: How can machines autonomously navigate complex, dynamic, and unstructured real-world environments, akin to humans, through perception, understanding, and action?

Let's delve into the characteristics of XPENG's touted Physical AI and the technological depth hidden behind the robot controversy.

Robotaxi and Flying Car: XPENG's Strategic Vision

In the narrative of XPENG Tech Day 2025, the humanoid robot IRON undoubtedly stole the limelight. However, the true test of XPENG's 'Physical AI' strategy lies in two more challenging product lines—Robotaxi and flying cars.

These are not mere technological demonstrations but rigorous proving grounds for XPENG to transition its 'emergence' concept from the laboratory to the real world.

First to catch the eye are three self-developed Robotaxi models set to begin trial operations in 2026. Where much of the industry relies heavily on LiDAR and high-definition maps, XPENG insists on a pure vision-based approach, with the second-generation VLA model at its core, to establish an end-to-end closed loop of perception, decision, and execution.

This seemingly bold technological choice is, in fact, a well-considered strategy: Traditional modular systems rely on engineers to meticulously list scenarios and write rules, whereas end-to-end systems depend on models to 'learn' universal rules after exposure to vast real-world scenarios.

To validate whether this generalization capability can meet the demands of real-world commercial operations, XPENG has partnered with AutoNavi as its first global ecosystem partner. By integrating with AutoNavi's travel platform, XPENG's Robotaxi will face real user travel demand at massive scale. This means AI drivers must handle extreme weather, dim night lighting, unprotected left turns, complex urban interchanges, and other long-tail scenarios around the clock. These scenarios cannot be fully replicated through simulation, yet they are precisely the dividing line for L4 autonomous driving commercialization.

Meanwhile, the 'Robo' intelligent driving version for the personal market also serves as a data flywheel for technological evolution. Fitted to high-end mass-production vehicles (such as the X9 Ultra), this version gives users a top-tier intelligent driving experience while collecting a broader and richer set of human driving data. Corner-case data generated by tens or even hundreds of thousands of users during daily driving will be fed back in real time to train the Robotaxi's L4 algorithms, accelerating their generalization in long-tail scenarios. This parallel advance on the business-facing (B-end) and consumer-facing (C-end) sides will also give XPENG an advantage that sets it apart from pure Robotaxi companies like Waymo.

If Robotaxi expands the battlefield on a two-dimensional plane, then flying cars challenge the limits in three-dimensional space. Flying cars must not only contend with traditional aviation challenges like wind shear, airflow disturbances, and airspace conflicts but also achieve centimeter-level precision takeoff and landing, automatic obstacle avoidance, and path planning amidst urban buildings.

XPENG AEROHT has developed two flying systems. The Land Aircraft Carrier is a split-type flying car designed for personal low-altitude flight, while the A868 is a full tilt-rotor hybrid-electric flying car with a six-person cabin, aimed more at efficient intercity travel for multiple passengers.

It is reported that XPENG AEROHT's Land Aircraft Carrier has received over 7,000 orders, entered trial production at a mass production factory, and is set for large-scale delivery in 2026. The A868 flying car boasts a cruising speed exceeding 360 km/h and a range over 500 kilometers, entering the flight verification stage.

Furthermore, the Dunhuang Municipal Government has signed a strategic cooperation agreement with XPENG AEROHT to create the first low-altitude self-drive tourism route in northwest China. The initial phase plans to construct five exclusive flight camps, with the first batch scheduled for trial operation in July 2026. The route starts at Mingsha Mountain Crescent Moon Spring, connects Crescent Moon Spring Town, the Optoelectronics Expo Park, and the Yangguan and Yumenguan Tourist Areas, and ends at the Yadan World Geological Park.

XPENG's Robotaxi and flying cars have moved from technological concept to implementation, and that makes them a crucial hard test for the 'Physical AI' system in the real world. If operations prove stable at this stage, it will indicate that the foundational logic of VLA is feasible in reality. If numerous reliability problems are exposed, it will suggest that the route itself still requires significant adjustment.

A deeper analysis reveals that the underlying logic behind these products is shared.

They all share the same brain—the second-generation VLA.

The Brain of Physical AI: Second-Generation VLA

If XPENG's accumulated work in intelligent driving over the past few years represents quantitative change, then the introduction of the second-generation VLA marks a radical qualitative transformation.

Unlike a conventional VLA (Vision-Language-Action) model, XPENG's approach skips the L (language translation) step and generates action commands directly from visual signals, end to end.

In the past, visual input typically needed to be first converted into semantic descriptions (e.g., 'There is a pedestrian crossing the road ahead') and then processed by a language model to generate action commands (such as 'Slow down, stop'). Although this paradigm is structurally clear, it introduces information loss, delay, and semantic ambiguity, becoming a performance bottleneck, especially in high-speed dynamic scenarios.

XPENG's second-generation VLA abandons this intermediate layer and generates control actions directly from raw visual signals. Images captured by the camera are processed by a neural network that directly outputs physical execution commands such as steering wheel angle, throttle/brake force, and aircraft tilt angle. This 'what you see is what you control' design significantly improves the system's reaction speed, human-likeness, and environmental adaptability.
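To make the contrast concrete, here is a minimal toy sketch in Python. It is not XPENG's model: the frame fields, the 30-meter threshold, and every function name are hypothetical, and hand-written functions stand in for neural networks. It only illustrates how a language bottleneck can discard detail that a direct visual-to-action mapping keeps.

```python
from dataclasses import dataclass


@dataclass
class Action:
    steering_deg: float  # steering wheel angle, positive = steer left
    brake: float         # brake force in [0, 1]


def caption(frame: dict) -> str:
    """Cascade stage 1: squash the scene into a short sentence."""
    if frame["pedestrian_distance_m"] < 30.0:
        return "pedestrian ahead"
    return "road clear"


def plan_from_text(text: str) -> Action:
    """Cascade stage 2: choose an action from the sentence alone."""
    if text == "pedestrian ahead":
        return Action(steering_deg=0.0, brake=1.0)
    return Action(steering_deg=0.0, brake=0.0)


def cascaded_policy(frame: dict) -> Action:
    """Vision -> language -> action, as in the older paradigm."""
    return plan_from_text(caption(frame))


def direct_policy(frame: dict) -> Action:
    """Toy stand-in for an end-to-end network: continuous in, continuous out."""
    distance = frame["pedestrian_distance_m"]
    # Brake harder the closer the pedestrian is; clamp to [0, 1].
    brake = min(1.0, max(0.0, (30.0 - distance) / 30.0))
    # lane_offset_m > 0 means the vehicle sits left of the lane center,
    # so steer right (negative angle) in proportion to the offset.
    steering = -10.0 * frame["lane_offset_m"]
    return Action(steering_deg=steering, brake=brake)


near = {"pedestrian_distance_m": 6.0, "lane_offset_m": 0.2}
far = {"pedestrian_distance_m": 24.0, "lane_offset_m": 0.2}

for name, frame in (("near", near), ("far", far)):
    print(name, cascaded_policy(frame), direct_policy(frame))
```

In this toy, the cascaded policy brakes at full force for both frames because the caption has already dropped the distance and the lane offset, while the direct policy brakes harder for the nearer pedestrian and corrects the lane offset in both cases.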

It is reported that to train this model, XPENG has utilized nearly 100 million clips of real-world scenario data, equivalent to the sum of all extreme situations a human driver might encounter over 65,000 years of continuous driving.

Through the release of the second-generation VLA, we can glimpse a highly ambitious 'all-product engine' technological route.

The end-to-end concept itself is not novel. Early in autonomous driving, academia proposed pure end-to-end driving models. However, such systems were often confined to closed tracks or specific conditions, struggling to migrate across platforms and tasks, let alone simultaneously control wheeled vehicles, aircraft, and even bipedal robots.

XPENG breaks this boundary by designing the second-generation VLA as a unified intelligent engine for its entire product line: On Robotaxi, it handles social interactions and mapless navigation in complex urban scenarios; in flying cars, it interprets three-dimensional airspace structures, airflow disturbances, and takeoff and landing postures; within the humanoid robot IRON, it coordinates 82 degrees of freedom for bionic movement and fine operations.
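One common way to build such a unified engine is a shared perception backbone with a small action head per platform. XPENG has not published the model's internals, so the sketch below is an assumption about the general pattern rather than a description of its design; the encoder, the heads, and all output names are hypothetical, and only the figure of 82 degrees of freedom comes from the presentation.

```python
from __future__ import annotations

from typing import Callable


def shared_encoder(pixels: list[float]) -> list[float]:
    """Hypothetical shared backbone: reduces an image to [mean, spread]."""
    mean = sum(pixels) / len(pixels)
    spread = max(pixels) - min(pixels)
    return [mean, spread]


def car_head(features: list[float]) -> dict[str, float]:
    """Wheeled vehicle: steering and throttle commands."""
    return {"steering_deg": 10.0 * (features[0] - 0.5),
            "throttle": 1.0 - features[1]}


def aircraft_head(features: list[float]) -> dict[str, float]:
    """Flying car: a single rotor tilt command."""
    return {"tilt_deg": 90.0 * features[0]}


def robot_head(features: list[float]) -> dict[str, float]:
    """Humanoid: one target per joint, 82 as quoted for IRON."""
    return {f"joint_{i}": features[0] for i in range(82)}


# Each platform reuses the same encoder and differs only in its head.
HEADS: dict[str, Callable[[list[float]], dict[str, float]]] = {
    "car": car_head,
    "aircraft": aircraft_head,
    "robot": robot_head,
}


def act(platform: str, pixels: list[float]) -> dict[str, float]:
    """Run the shared encoder, then the platform-specific action head."""
    return HEADS[platform](shared_encoder(pixels))


frame = [0.2, 0.4, 0.6]
for platform in HEADS:
    print(platform, len(act(platform, frame)), "command values")
```

The point of the pattern is that whatever the encoder learns about the scene is shared across all three product lines, while each platform still receives commands in its own action space.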

Notably, the second-generation VLA is XPENG's first mass-produced large model for the physical world. Thanks to this breakthrough, XPENG has deployed a model with billions of parameters on its Ultra version vehicle, which carries 2250 TOPS of computing power. That far exceeds the tens of millions of parameters typical of vehicle-side models in the industry.

However, this route requires more than just a brain; powerful chips and sufficient computing power are the underlying foundations supporting the stable operation of this system.

The Heart of Physical AI: Turing AI Chip and Intelligent Computing Cluster

Even the smartest brain needs a robust heart. In the Physical AI system XPENG is building, this 'heart' is its self-developed Turing AI chip.

As one of the important releases at this Tech Day, the Turing chip adopts a dedicated NPU architecture with single-chip computing power of up to 750 TOPS. It not only meets automotive-grade reliability requirements but will also be deployed across XPENG's entire product range, including Robotaxi, flying cars, and the humanoid robot IRON, forming a unified end-side AI computing platform. This means that whatever form the intelligent agent takes, its underlying execution units share the same high-performance, low-latency computing standards.

However, an end-side heart alone is insufficient. To enable the continuous evolution of Physical AI, XPENG has also built 'Xingyun,' the first 10,000-card-level intelligent computing cluster in China's automotive industry. This cluster has now expanded to 30,000 GPUs and is dedicated to the training, simulation verification, and cloud-side collaborative inference of autonomous driving and embodied-intelligence models, providing a continuous stream of intelligent 'blood' for the second-generation VLA.

The combination of the Turing chip and the 'Xingyun' cluster forms a complete closed loop from training to deployment, from the cloud to the terminal: The large model learns from massive real-world data in 'Xingyun' to generate strategies; the Turing chip then efficiently executes these strategies at the terminal and feeds new data generated during operation back to the cloud, driving the next iteration.
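The shape of this loop can be sketched in a few lines of Python. This is a deliberately simplified toy, not XPENG's pipeline: "training" is reduced to remembering scenario labels, and the function names and scenario strings are hypothetical. It shows only the alternation of cloud-side training, on-vehicle execution, and feedback of unhandled cases.

```python
from __future__ import annotations


def train(dataset: set[str]) -> frozenset[str]:
    """Cloud side: 'training' here just means remembering every scenario seen."""
    return frozenset(dataset)


def drive(policy: frozenset[str], encountered: list[str]) -> list[str]:
    """Vehicle side: run the policy and log scenarios it cannot handle yet."""
    return [scenario for scenario in encountered if scenario not in policy]


def closed_loop(initial_data: set[str], rounds: list[list[str]]) -> list[int]:
    """Alternate training and deployment; return unhandled counts per round."""
    dataset = set(initial_data)
    unhandled_per_round = []
    for encountered in rounds:
        policy = train(dataset)                    # cloud: build the policy
        corner_cases = drive(policy, encountered)  # terminal: execute and log
        unhandled_per_round.append(len(corner_cases))
        dataset.update(corner_cases)               # feed new data back to cloud
    return unhandled_per_round


traffic = [
    ["highway", "night rain", "unprotected left turn"],
    ["night rain", "urban interchange"],
    ["unprotected left turn", "urban interchange", "highway"],
]
print(closed_loop({"highway"}, traffic))  # prints [2, 1, 0]
```

In the toy run, the number of unhandled scenarios falls from two to one to zero as each round's corner cases are folded back into the training set, which is the dynamic the closed loop is meant to produce at fleet scale.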

Thus, the Physical AI represented by the second-generation VLA is no longer just a conceptual model in the laboratory but a truly cross-scenario, mass-producible, and evolvable technological system.

XPENG's Tech Day this year sends a clear signal: The company aims to interpret the world with a single logic, so that all of its machines act on the same understanding.

This is XPENG's ambition for the next decade—to construct a Physical AI system.

'Physical AI' in this sense is not merely an algorithmic model running in a virtual environment but an intelligent agent that can truly embed itself in the real physical world, continuously interact with the environment, and act autonomously in dynamic and complex scenarios. It requires AI not only to see but also to comprehend and act accurately, understanding the constraints of the real world, such as gravity, friction, airflow, and social rules, and behaving in a safe, efficient, and human-like way accordingly.

From this perspective, the robot controversy will soon fade, and the buzz around the short videos will eventually dissipate. However, whether Physical AI can truly enable machines to understand the world and act in urban and airspace environments will be the truly compelling story in the coming years.

For XPENG, the real test has just begun. How to translate the visions from the conference into reality and bear fruit over the next decade is currently its biggest challenge and the focus of our continued attention.