One Year After Gaining Widespread Recognition: DeepSeek's Progress and Steadfastness

01/16 2026

Remaining Far from Complacent

Written by: Chen Dengxin

Edited by: Li Jinlin

Formatted by: Annalee

DeepSeek has consistently been in the limelight.

According to reports, DeepSeek plans to officially launch its next-generation V4 model in February 2026. The model's programming capabilities are expected to outperform those of leading closed-source models such as Claude and the GPT series.

Whether these reports prove accurate remains to be seen. What is undeniable is that on January 27, 2025, DeepSeek became the most talked-about app in the world, overtaking ChatGPT in international download rankings.

That moment marked DeepSeek's global breakthrough, and its first anniversary is now at hand.

NVIDIA CEO Jensen Huang has praised it sincerely: "DeepSeek-R1 stands as a significant representative of open-source models, amazing the world and spearheading the advancement of open-source models in 2025."

Even so, DeepSeek's path in 2026 will not be smooth: open-source models are thriving and AI applications keep evolving, making it unlikely that any single model will dominate the way R1 once did.

What lies ahead for DeepSeek as it marks this anniversary?

Computational Power: No Longer the Sole Benchmark

DeepSeek was once relatively obscure.

As early as 2023, High-Flyer Quantitative, a frontrunner in quantitative trading, was openly enthusiastic about the future of artificial intelligence: "Seventy years after the inception of artificial intelligence, astonishing intelligence has finally emerged, heralding a new era." It went on to incubate DeepSeek, which found success almost without setting out to.

The transition from High-Flyer Quantitative to DeepSeek follows a logical trajectory.

Quantitative trading turns investment behavior into data and applies one or more algorithms to analyze it and make informed decisions. The stronger a firm's AI capabilities, the more effective its quantitative trading.

Caijing Shiyiren once reported, "There are no more than five enterprises in China with over 10,000 GPUs. Apart from a few leading giants, they include a quantitative fund company named High-Flyer."

Clearly, DeepSeek's success was no accident.

Even so, for a long time DeepSeek was known mainly within the industry rather than to the general public. In 2024, it was hailed as the "biggest dark horse" among open-source large models.

It was not until the release of DeepSeek-R1 that it drew widespread attention and sparked a nationwide craze.

Previously, computational power was considered the core metric of a large model's capabilities. The belief that "more computational power leads to better performance" was treated as gospel, which not only kept AI costs high for a long time but also raised the computational barrier to entry.

DeepSeek-R1's training reportedly took about 80 hours and cost roughly $294,000 (a figure that does not include the cost of its underlying base model), demonstrating that low cost and high efficiency are a viable alternative.

Open-source large models then moved to center stage, and more internet companies shifted their focus toward them, setting off a broader open-source trend.

In fact, open source has become the shortest path to putting large models into real use. It pools scattered developers and companies into a thriving AI application ecosystem and helps model makers compete for the "super traffic entry points" of the AI era. Everyone involved stands to benefit.

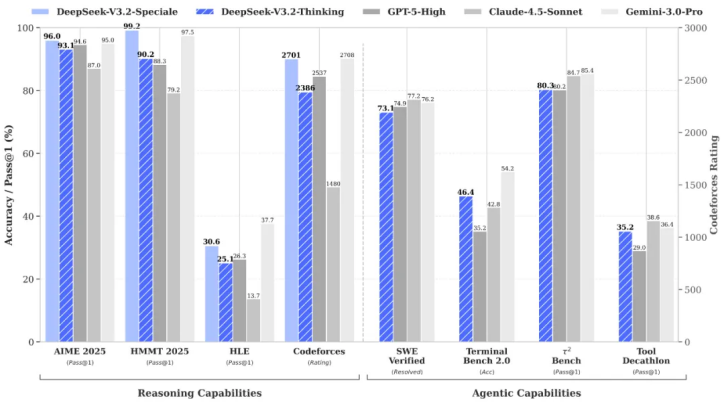

For instance, DeepSeek V3.2 fully matches GPT-5 on publicly available reasoning benchmarks and trails only slightly behind Gemini 3 Pro.

DeepSeek V3.2 has fully matched GPT-5

In other words, Chinese open-source large models have transitioned from followers to leaders.

According to the "2025 Global Unicorn Enterprises 500 Report," DeepSeek is valued at 1.05 trillion yuan, ranking as the fourth-largest unicorn globally and second only to ByteDance in China.

DeepSeek has become the second-largest unicorn enterprise in China

However, OpenAI, Meta, Microsoft, Google, and others continue to ramp up their investments in computational power.

OpenAI alone is expected to spend more than $1.4 trillion on AI infrastructure in the coming years, and Microsoft, not to be outdone, plans to invest hundreds of billions of dollars.

Clearly, overseas players still put their faith in brute-force scaling.

Capturing User Attention, But Not the Ultimate Victor

Despite this, DeepSeek cannot afford to be complacent.

Firstly, competition among open-source large models is intensifying.

The logic behind open-source models is to build an AI ecosystem with a positive feedback loop, growing the user base to unlock greater commercial value.

Now that this path has proven successful, internet giants have entered the fray one after another and raised their stakes, and DeepSeek no longer has the field to itself.

Alibaba has open-sourced over 300 models, which have spawned more than 170,000 derivative models; Baidu has open-sourced ERNIE Bot, retaining 90% of its core capabilities, including key modules such as multimodal understanding and logical reasoning; Tencent has open-sourced a series of models for vision, speech, 3D generation, and more...

As more players join, the open-source landscape has quietly shifted from DeepSeek's dominance to a diversified contest. The ultimate victor remains uncertain.

It is worth mentioning that imitators of DeepSeek have also emerged.

Jiukun Investment, a quantitative firm as renowned as High-Flyer Quantitative, has released the open-source model IQuest-Coder-V1, which focuses on the vertical field of programming. This overlaps with the upcoming DeepSeek V4.0, which is also said to emphasize "better understanding of programmers."

This implies that newcomers are targeting DeepSeek's ecological niche.

Overseas model companies such as OpenAI, Perplexity AI, and Anthropic are also recruiting quantitative talent to draw on its expertise and gain momentum.

At OpenAI, for example, Chief Research Officer Mark Chen has a quantitative background.

Secondly, DeepSeek is on the back foot in the competition for application scenarios.

The industry consensus is that the ultimate goal of large models is real-world application. Internet giants have made significant strides in building applications, and DeepSeek's competitive position there does not look promising.

At present, ByteDance's Doubao leads the field as the frontrunner among AI applications.

According to QuestMobile data, as of September 2025, AI applications had 729 million monthly active users on mobile and 200 million on PC. Among them, Doubao overtook DeepSeek, with 172 million monthly active users against DeepSeek's 145 million.

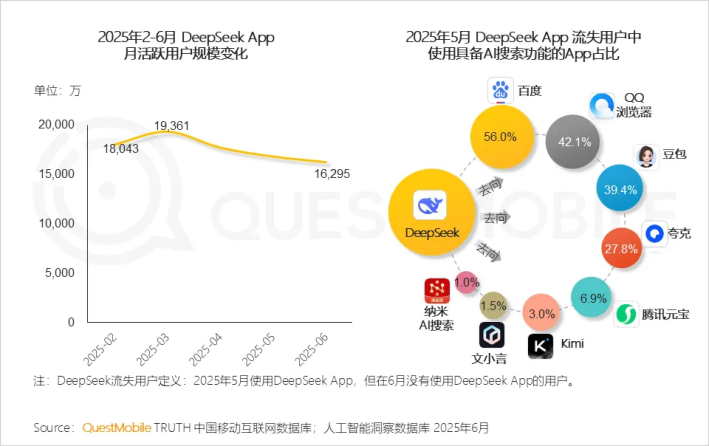

DeepSeek's monthly active user base, by contrast, has shrunk by about a quarter from its peak.

Multiple media outlets have reported that users lost by DeepSeek have turned to similar competitors like Baidu, QQ Browser, Doubao, Yuanbao, and Kuaike.

Where DeepSeek's lost users have gone

In short, DeepSeek captured users' attention but has not emerged as the ultimate victor.

However, DeepSeek and internet giants are both competitors and allies: Tencent Cloud, Alibaba Cloud, JD Cloud, Huawei Cloud, Volcano Engine, and Baidu Intelligent Cloud have all integrated DeepSeek, providing users with more choices.

Liang Wenfeng, the founder of DeepSeek, stated, "In the long run, we hope to form an ecosystem where the industry directly utilizes our technology and outputs. We will only be responsible for the foundational models and frontier innovations, while other companies will build B2B and B2C businesses on top of DeepSeek."

Seen this way, DeepSeek does not intend to compete head-on with the internet giants; instead, it is pursuing differentiated competition.

Guo Tao, a senior AI expert, believes that DeepSeek will not disrupt the industry's existing competitive landscape: "AI large models like ERNIE Bot and Tongyi Qianwen have already established comprehensive ecological systems, supported by powerful enterprises like Baidu and Alibaba. They possess strong technical capabilities and can continuously invest in R&D for technological iteration and upgrading."

Source: DeepSeek Official Account

Thirdly, opinion on High-Flyer Quantitative is sharply divided.

As is well known, the large model industry is both technology- and capital-intensive. Since its debut, DeepSeek has stressed that it is not seeking outside financing, a confidence that stems from High-Flyer Quantitative's considerable strength.

According to data from Simuwang, the 75 private fund managers with more than 10 billion yuan under management that disclosed their performance achieved an average return of 32.77% in 2025. High-Flyer Quantitative ranked second among quantitative funds of that size, with an average return of 56.6% in 2025.

In other words, High-Flyer Quantitative's strong performance gives DeepSeek, which it incubated, a solid financial foundation.

For those who can buy its products, High-Flyer Quantitative is a trustworthy partner, but many investors see it as a symbol of "harvesting the masses," that is, profiting at the expense of retail investors.

"Quantitative trading is essentially a zero-sum game. What I earn is what you lose, and what you earn is what I lose." This view has gained ground among investors, prompting questions such as "Why not harvest overseas?"

As a result, some investors have taken their frustrations out on DeepSeek.

In conclusion, as DeepSeek marks one year since its rise to prominence, expectations are running high that DeepSeek V4.0 will replicate the stunning impact of DeepSeek-R1. However, the AI race is a marathon, not a sprint; it tests endurance and perseverance. Whether or not DeepSeek V4.0 captures widespread attention again, it will not change DeepSeek's role as a driving force in the industry.

The only certainty is that DeepSeek will continue to make a positive impact on the AI sector.