Domestic Large Models Shift in Parallel: DeepSeek Takes One Path, Kimi Another. Has the Era of Practical AI Deployment Arrived?

01/29 2026

Will This Trigger a Fresh Wave of AI Advancements?

On January 27, two of the most closely watched domestic large-model startups released their latest, and most substantial, open-source updates almost simultaneously:

DeepSeek introduced and open-sourced DeepSeek-OCR 2, a significant upgrade to the groundbreaking DeepSeek-OCR it released last year. Kimi, meanwhile, released and open-sourced K2.5, pushing further along its trajectory in ultra-long context processing, multimodal capabilities, and 'intelligent agent' functionality.

At first glance, these updates seem to be heading in different directions.

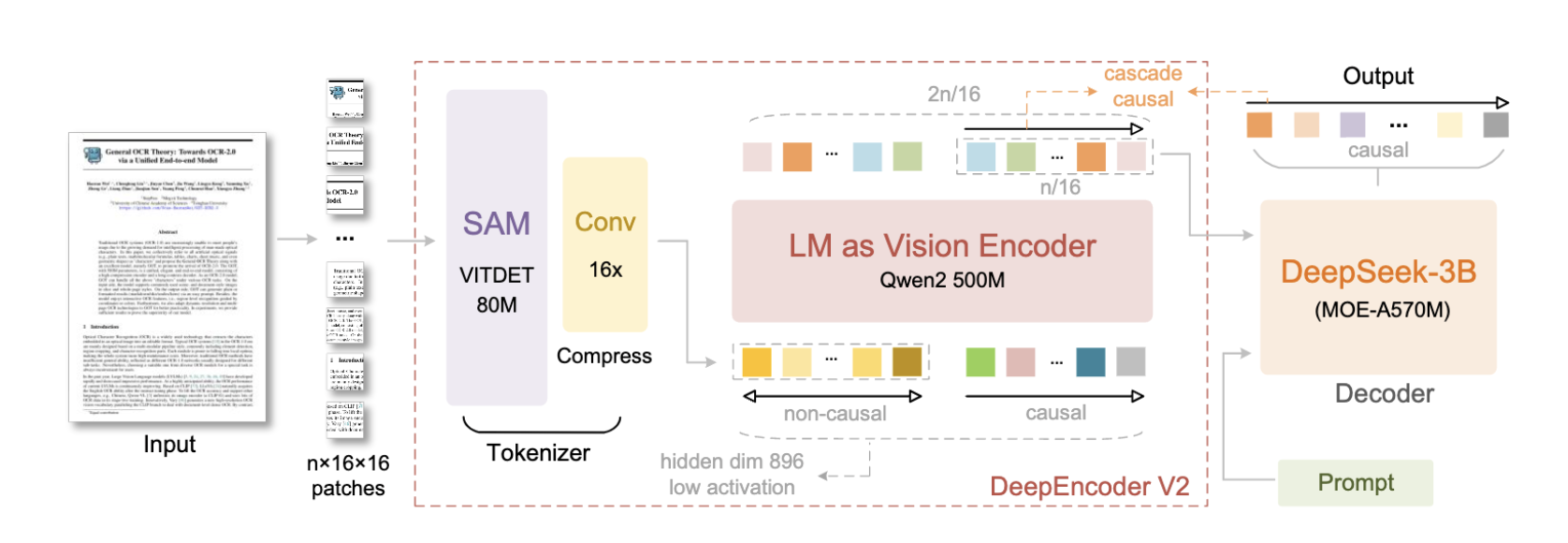

DeepSeek-OCR 2 changes how models 'read' by introducing a new visual encoding mechanism. It lets large models follow a more human-like visual reading logic and compress long, costly text inputs into denser 'visual semantics.'

In simpler terms, it aims to transform how AI 'reads documents.' Instead of processing entire files word by word, models can now analyze layouts and identify key points first. This means future AI-powered document reading, data retrieval, and table extraction could be faster, more cost-effective, and more reliable.

Image Source: DeepSeek
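The cost argument ultimately comes down to token counts. The Python sketch below compares how many pages fit into a fixed context window when each page is fed as plain text versus as a compact set of vision tokens. The per-page token counts and the context size are hypothetical placeholders chosen for round numbers, not figures reported by DeepSeek.

```python
# Back-of-the-envelope comparison of text-token vs. vision-token input size.
# All figures are hypothetical placeholders, not DeepSeek's published numbers.


def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """Number of text tokens that each vision token stands in for."""
    if vision_tokens <= 0:
        raise ValueError("vision_tokens must be positive")
    return text_tokens / vision_tokens


def pages_per_context(context_window: int, tokens_per_page: int) -> int:
    """Number of whole pages that fit into a fixed context window."""
    return context_window // tokens_per_page


TEXT_TOKENS_PER_PAGE = 2_000    # hypothetical: page fed as plain text
VISION_TOKENS_PER_PAGE = 200    # hypothetical: same page fed as an encoded image
CONTEXT_WINDOW = 128_000        # hypothetical context budget

ratio = compression_ratio(TEXT_TOKENS_PER_PAGE, VISION_TOKENS_PER_PAGE)
as_text = pages_per_context(CONTEXT_WINDOW, TEXT_TOKENS_PER_PAGE)
as_vision = pages_per_context(CONTEXT_WINDOW, VISION_TOKENS_PER_PAGE)

print(f"compression ratio: {ratio:.1f}x")           # 10.0x
print(f"pages per context as text:   {as_text}")    # 64
print(f"pages per context as vision: {as_vision}")  # 640
```

Under these assumed numbers, a tenfold reduction in tokens per page translates directly into ten times as many pages per context window, or the same pages at a fraction of the input cost.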

Kimi K2.5, on the other hand, takes a different approach: it's not just about answering questions but pushing AI toward 'practical execution.' With enhanced long-term memory, stronger multimodal understanding, and the ability to decompose and execute complex tasks, K2.5 aims to provide a 'digital assistant' experience rather than just a chat window.

Kimi describes K2.5 as its most intelligent and versatile model to date: it accepts both visual and text inputs, runs in thinking and non-thinking modes, and handles both dialogue and agent tasks.

One focuses on making inputs to language models more efficient, while the other emphasizes general intelligence and collaboration on complex tasks. Together, they point to a critical shift: large-model upgrades are moving away from boosting 'parameter counts and dialogue capabilities' toward an engineering-driven rebuild of core capabilities.

AI is evolving beyond simply having a smarter brain.

From Input to Execution: Two Paths for Domestic AI Upgrades

DeepSeek-OCR, released last year, first made the industry realize that the traditional word-by-word input methods for large models could be reimagined. DeepSeek-OCR 2 now addresses a more specific and challenging issue: how models should 'read' complex documents.

Traditionally, AI processed documents in a mechanical manner. PDFs, contracts, and financial reports were broken down into text segments and fed sequentially into models. This approach had clear drawbacks:

On one hand, long documents quickly overwhelmed context windows, driving up costs and reducing efficiency. On the other, tables, multi-column layouts, and the relationships between annotations and main text were often disrupted during 'word splitting.'
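The 'word splitting' problem is easy to reproduce. This minimal Python sketch flattens a small, made-up table into whitespace-separated tokens and cuts it into fixed-size chunks, the way a naive pipeline might. Whitespace tokenization and the chunk size of 4 are simplifying assumptions; real tokenizers and chunkers differ.

```python
def fixed_size_chunks(tokens: list[str], size: int) -> list[list[str]]:
    """Split a token stream into fixed-size chunks, ignoring any structure."""
    return [tokens[i:i + size] for i in range(0, len(tokens), size)]


# A tiny hypothetical table flattened to text, one row per line.
table_text = (
    "Item Q1 Q2\n"
    "Revenue 120 135\n"
    "Cost 80 90\n"
)

# Whitespace tokenization is a simplification; real tokenizers differ.
# Note that split() also discards the newlines that marked row boundaries.
tokens = table_text.split()
chunks = fixed_size_chunks(tokens, size=4)

for chunk in chunks:
    print(chunk)
# ['Item', 'Q1', 'Q2', 'Revenue']
# ['120', '135', 'Cost', '80']
# ['90']
```

The first chunk ends on the row label 'Revenue' while its values land in the next chunk, and the 'Cost' row is split across two chunks, so no single chunk carries a complete, correctly aligned row.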

DeepSeek's approach in OCR 2 is to further strengthen its 'visual encoding' strategy, treating documents not as text strings but as visual objects to be 'read.'

Compared with its predecessor, OCR 2's key innovation lies not only in compression rates but in introducing human-like reading logic. It replaces the CLIP component of the original encoder with a visual encoder built on a Qwen2-based language model (LM). Rather than processing an entire page uniformly and all at once, the model learns to distinguish structure:

Where are the headings? Where are the tables? Which pieces of information are related? What needs to be read first, and what can wait?

Operational Diagram. Image Source: DeepSeek

In other words, it begins to understand that 'layout itself is part of the information.'
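To make 'layout is part of the information' concrete, the sketch below contrasts a naive raster reading order with a simple column-aware one on a hypothetical two-column page. This is a hand-written heuristic for illustration only: DeepSeek-OCR 2 learns its reading order inside the encoder, and the `Block` type, coordinates, and column rule here are assumptions, not its actual mechanism.

```python
from dataclasses import dataclass


@dataclass
class Block:
    """A hypothetical layout block: a region of the page plus its text."""
    label: str  # block type, e.g. "title", "text", "table"
    x0: float   # left edge
    y0: float   # top edge
    x1: float   # right edge
    text: str


def raster_order(blocks: list[Block]) -> list[Block]:
    """Naive order: top-to-bottom, then left-to-right, ignoring columns."""
    return sorted(blocks, key=lambda b: (b.y0, b.x0))


def layout_order(blocks: list[Block], page_width: float) -> list[Block]:
    """Heuristic column-aware order for a two-column page.

    Assumes full-width blocks (e.g. a title) sit above the columns; they are
    read first, then the left column top-to-bottom, then the right column.
    """
    mid = page_width / 2
    spanning = [b for b in blocks if b.x0 < mid < b.x1]
    left = [b for b in blocks if b.x1 <= mid]
    right = [b for b in blocks if b.x0 >= mid]

    def by_top(b: Block) -> float:
        return b.y0

    return sorted(spanning, key=by_top) + sorted(left, key=by_top) + sorted(right, key=by_top)


page = [
    Block("title", 0, 0, 100, "title"),
    Block("text", 0, 10, 48, "left-1"),
    Block("text", 52, 10, 100, "right-1"),
    Block("text", 0, 30, 48, "left-2"),
    Block("table", 52, 30, 100, "right-2"),
]

print([b.text for b in raster_order(page)])
# ['title', 'left-1', 'right-1', 'left-2', 'right-2']
print([b.text for b in layout_order(page, page_width=100)])
# ['title', 'left-1', 'left-2', 'right-1', 'right-2']
```

The raster order jumps between columns on every line, while the column-aware order finishes the left column before moving right, which is how a human would read the page.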

The direct value of this shift lies not in abstract praise like 'the model is smarter' but in tangible improvements to user experience. For example, when AI quickly reviews a multi-page report, it no longer needs to read every word to draw conclusions. When processing complex tables, column misalignments and field mismatches become less frequent.

More importantly, with highly compressed inputs, the same tasks can be completed at lower cost and in less time. This is why DeepSeek-OCR 2 matters more for real-world AI applications: it could make AI better suited to document workflows, whether for retrieval, comparison, summarization, or structured information extraction.

In this sense, OCR 2 resolves not just a model capability issue but a long-standing 'usability friction' problem.

While DeepSeek-OCR 2 reimagines AI's 'input side,' Kimi K2.5 focuses on enhancing AI agents' ability to complete complex tasks.

Today's AI can answer highly complex questions, but when a task involves multiple steps, several source materials, and repeated references to earlier context, models often 'forget' earlier details or stall at the suggestion stage. For all of AI's progress, many users still run into these limitations.

In K2.5, Kimi continues to prioritize 'long memory + multimodal + intelligent agent' capabilities, essentially attempting to shift AI from 'answer mode' to 'execution mode.'

On one hand, ultra-long context allows models to retain conversations, materials, and intermediate conclusions over extended periods, reducing the need for repeated explanations. On the other, multimodal capabilities enable AI to process not just text but images, interface screenshots, and even more complex input forms.

More critically, K2.5 further strengthens its 'intelligent agent' functions. Rather than just telling you 'what to do,' Kimi attempts to break tasks into multiple steps and uses an 'Agent Cluster' that calls on different capabilities at different stages to deliver relatively complete results. This capability determines whether AI can truly integrate into workflows rather than remain a mere consultation assistant.

This is why Kimi K2.5 emphasizes its 'greater versatility.' It pursues not extreme performance in a single capability but the ability to handle longer, more complex, and more work-like task chains.
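The 'decompose, then dispatch' pattern can be sketched in a few lines. In this Python sketch, a hypothetical planner splits one task into independent subtasks, a thread pool runs stub sub-agents in parallel, and a final step merges their outputs. The agent functions and task strings are placeholders standing in for model or tool calls; this is not Kimi's API or its actual Agent Cluster implementation.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


# Hypothetical sub-agents. In a real system each would call a model or an
# external tool; these stubs just return a tagged string.
def search_agent(query: str) -> str:
    return f"[search] notes on {query}"


def table_agent(query: str) -> str:
    return f"[table] figures for {query}"


def writer_agent(parts: list[str]) -> str:
    """Merge sub-agent outputs into one result."""
    return " | ".join(parts)


def plan(task: str) -> list[tuple[Callable[[str], str], str]]:
    """Hypothetical planner: split one task into independent subtasks."""
    return [
        (search_agent, f"{task}: market background"),
        (table_agent, f"{task}: revenue"),
        (search_agent, f"{task}: competitors"),
    ]


def run(task: str, max_workers: int = 3) -> str:
    subtasks = plan(task)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in submission order, not completion order.
        results = list(pool.map(lambda st: st[0](st[1]), subtasks))
    return writer_agent(results)


report = run("Q3 report")
print(report)
```

Because `pool.map` preserves the planner's order, the merge step stays deterministic even though the subtasks run concurrently; a production orchestrator would also need retries, timeouts, and checks on each sub-agent's output.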

Large Models Now Compete on 'Practical Usability'

Looking beyond DeepSeek-OCR 2 and Kimi K2.5, recent mainstream large model upgrades have followed a remarkably consistent direction. Whether it's OpenAI's GPT-5.2, Anthropic's Claude 4.5, Google's Gemini 3, ByteDance's Doubao 1.8, or Alibaba's Qwen3-Max-Thinking, they have all shifted focus from 'how powerful the model is' to a more practical question:

How can AI be integrated more deeply into real-world work environments?

This is why this round of upgrades rarely emphasizes parameter scales or single-point capabilities. Instead, it repeatedly refines several key areas: retaining context, understanding inputs, handling workflows, and completing tasks.

First, 'memory' has become a collective priority.

Previously, large models excelled at short-term dialogue but struggled with long-term collaboration. As tasks grew longer and materials more abundant, users had to repeatedly provide background information. Nearly all recent model upgrades address this pain point: longer contexts and more stable state retention enable models to follow tasks through entire processes without 'losing memory.'

GPT-5.2 productizes long contexts and different reasoning modes, while Kimi K2.5 embeds ultra-long contexts into intelligent agent workflows, allowing models to remember intermediate results during multi-step execution. These changes enable AI to move beyond answering single questions to helping users complete entire tasks.
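Why context length matters for multi-step work can be shown with a toy analogy. The sketch below models a bounded working memory that keeps intermediate results between steps and evicts the oldest entry once it is full, loosely like early content falling out of a finite context window. The `WorkingMemory` class and its capacity are hypothetical; the sketch is an illustration only and makes no claim about how GPT-5.2 or Kimi K2.5 manage context internally.

```python
from collections import OrderedDict


class WorkingMemory:
    """Hypothetical bounded store for intermediate results across steps.

    Holds at most `capacity` entries; when full, the oldest entry is evicted,
    loosely like early content falling out of a finite context window.
    """

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._items = OrderedDict()

    def remember(self, key: str, value: object) -> None:
        self._items[key] = value
        self._items.move_to_end(key)  # refreshing a key makes it the newest
        while len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the oldest entry

    def recall(self, key: str, default: object = None) -> object:
        return self._items.get(key, default)

    def keys(self) -> list[str]:
        return list(self._items)


# A hypothetical three-step task: extract figures, compute growth, summarize.
mem = WorkingMemory(capacity=3)
mem.remember("q1_revenue", 120)
mem.remember("q2_revenue", 135)

q1, q2 = mem.recall("q1_revenue"), mem.recall("q2_revenue")
growth = (q2 - q1) / q1
mem.remember("growth", growth)
mem.remember("summary", f"Revenue grew {growth:.1%} quarter over quarter")

print(mem.keys())                # ['q2_revenue', 'growth', 'summary']
print(mem.recall("summary"))     # Revenue grew 12.5% quarter over quarter
print(mem.recall("q1_revenue"))  # None: evicted once capacity was exceeded
```

With a capacity of 3, the first extracted figure is already gone by the time the summary is written; a larger capacity, analogous to a longer context, would keep every intermediate result available to later steps.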

Second, there's a renewed focus on 'visual understanding.'

If past multimodal capabilities were about 'image recognition,' current upgrades prioritize 'true comprehension.' DeepSeek-OCR 2 represents a more radical and pragmatic direction: treating vision not as a precursor to text but as information itself, enabling models to first understand structures, layouts, and relationships before processing semantics.

This shift extends beyond document scenarios. Whether it's GPT, Claude, or Gemini, all are strengthening their ability to understand screenshots, interfaces, and complex images.

Image Source: Gemini

Real-world information is rarely organized in neatly aligned text. Only when models truly understand 'how information is organized in images' can AI naturally integrate into real environments rather than existing solely in text-based chat boxes.

Third, and most critical yet often overlooked, is the shift in AI's role.

Previously, large models acted as 'advisors,' providing suggestions and answers but not taking responsibility for outcomes. Now, more models are being designed as 'executors.' Kimi K2.5's emphasis on intelligent agents essentially teaches models to decompose tasks, utilize tools, and manage workflows. GPT-5.2 combines different reasoning modes with tool invocation to reduce the gap between 'suggestion and execution.'

When AI begins handling entire workflows rather than individual questions, its value assessment criteria shift from 'accuracy of answers' to 'ability to complete tasks reliably.' This is why 'engineering' has become a recurring theme in this round of upgrades.

Domestic AI has been particularly active in this regard. DeepSeek, Kimi, Qwen, and Doubao all emphasize whether their models are easy to deploy, integrate into existing systems, and run in real business environments. Meanwhile, both domestic and international AI efforts over the past year have focused on hiding complex capabilities behind interfaces and services through stronger product packaging. The underlying goal is the same: moving AI beyond 'demonstrations' to being 'usable' and 'practical.'

Final Thoughts

No model has yet achieved Artificial General Intelligence (AGI), but viewed over a longer timeline, the more significant changes are happening in less 'flashy' areas: input methods are being redesigned, tasks are being decomposed and managed, and models are expected to stay stable over longer periods and more complex workflows.

As models are seriously integrated into everyday life and work, and repeatedly tested and used there, the criteria for judging their value change. The focus is no longer on who has more parameters or more impressive answers but on who offers lower costs, fewer errors, and greater long-term reliability.

From this perspective, the significance of DeepSeek-OCR 2 and Kimi K2.5 lies not just in the specific problems they solve but in representing a more pragmatic consensus: AI's next step into the real world must extend beyond question-answering.

Source: Leitech

Images in this article are from the 123RF Licensed Image Library.