Seedance 2.0 Has Arrived—Will AI Revolutionize the Film and Television Industry?

02/13 2026

By Liang Tian

Source: Jiedian Finance

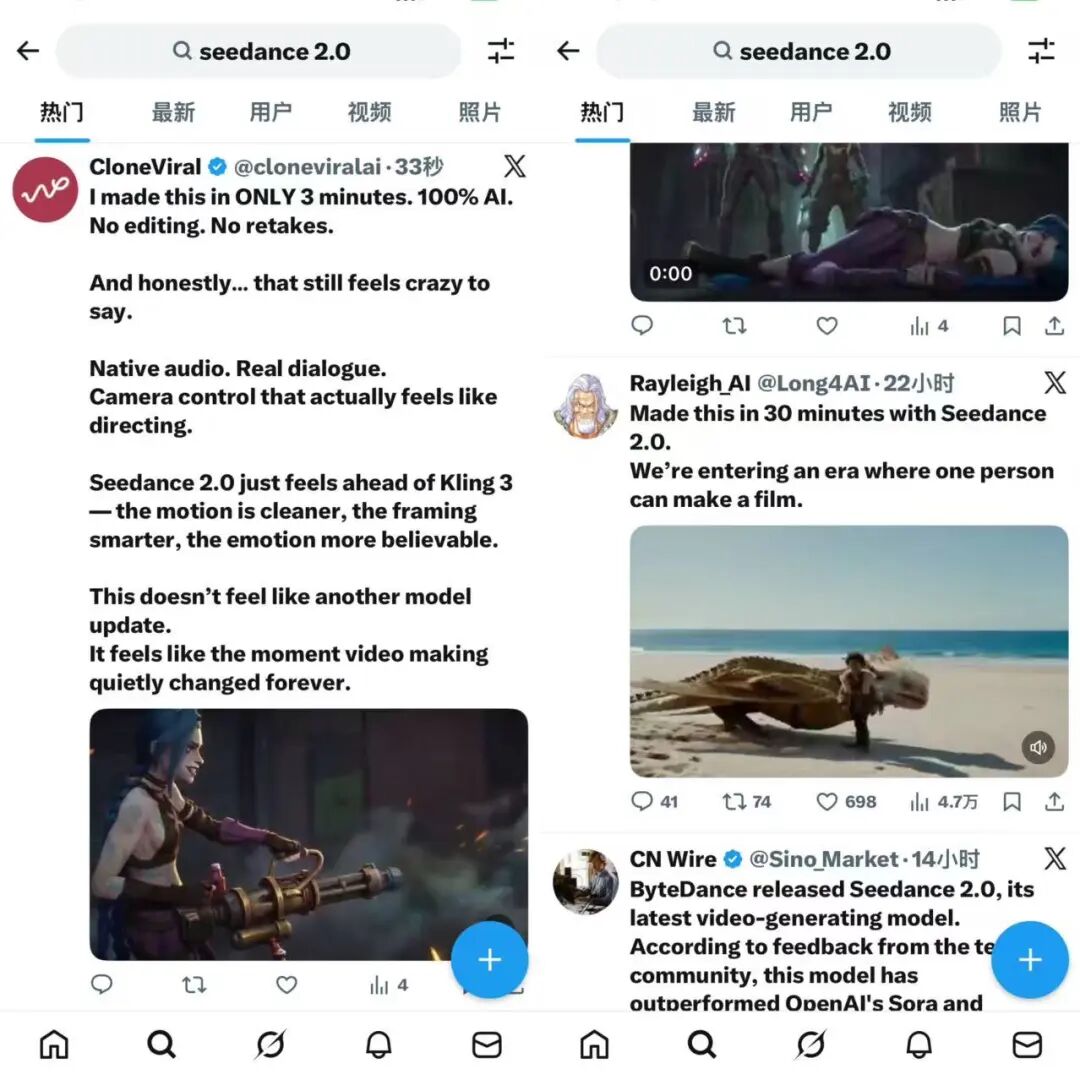

Just as news of "AI killing SaaS" sent software stocks swinging across global capital markets, the narrative of "AI disrupting the film and television industry" is now making waves. This time the protagonist is Seedance 2.0, ByteDance's AI video generation model, which entered grayscale (limited-rollout) testing on February 7 and quickly drew attention both inside and outside the industry.

Within days, hands-on reviews of Seedance 2.0 flooded the internet, and social platforms and tech communities were buzzing about its capabilities. Feng Ji, CEO of Game Science, even hailed it as "the strongest video generation model on the planet right now, no exceptions." On overseas platforms, demo videos sparked heated debate, with many netizens describing the results as "mind-blowing."

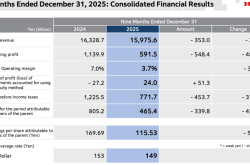

In contrast to Anthropic's impact on software stocks, Seedance 2.0 lifted the media sector. On February 10, shares of Dooke Culture and Rongxin Culture hit the 20% daily limit, and more than a dozen other stocks, including Enlight Media, followed suit. The market's reaction suggests that, this time, AI's transformation of the film and television industry is no longer just theoretical.

To see its effects firsthand, I tested Seedance 2.0 directly in the Xiaoyunque app; the impressions below are based on that platform.

Rich Camera Movement and Shot-Framing Capabilities

If you follow AI-generated video closely, you'll know that earlier AI videos were usually a single continuous "one-shot" take. Even when models attempted to cut between shots, the pacing was often off or the visuals distorted.

Seedance 2.0, however, breaks free from the fixed-camera output mode common in earlier video models.

The model can autonomously plan shots and pacing based on user-provided prompts, allowing creators to focus solely on the story itself.

For example, in the official demo "Man in Black Escapes on the Street," the camera is no longer limited to monotonous panning. Instead, it shows fairly complex choreography, moving from a tracking shot from behind, to a shot moving parallel to the character, and finally to the character tripping as fruit falls to the ground. The result makes the model seem like an experienced cinematographer who understands the physical world.

To verify this, I skipped the complex official prompt and instead entered a simple natural-language instruction: "A ragdoll cat pins a rabbit to the ground with its paw."

Despite a 30-minute wait caused by server overload (itself a sign of the market's enthusiasm), the resulting 10-second video was remarkably dynamic and looked like live-action footage.

If you examine it frame by frame, you'll notice the details are well-executed. The ragdoll cat's tail sways naturally, and the fur on both pets is rendered with great finesse. Their interaction is also seamless, far surpassing the performance of another video model I tested months earlier.

If you dislike the typical AI-generated look or want a specific aesthetic, you can supply reference images, videos, or audio clips and specify the desired style. As long as the intent is clearly stated, the model reliably understands and combines the different reference materials.

In the past, creating video content required knowledge of shot framing and camera movement. Now these professional tasks can be handled directly by AI. This means that pre-production planning, once heavily reliant on storyboard artists, directors, and camera teams, is being compressed into work a single creator can do. Video creation is truly being democratized.

When Sound Becomes the Natural Language of Visuals

Traditional video models often struggle to ensure logical consistency between visuals and sound due to the randomness of the generation process.

Seedance 2.0’s other game-changing feature is its "audio-visual coordination."

According to the official documentation, the model strengthens audiovisual fusion during training. As a result, generation is no longer limited to visuals: the model can simultaneously produce matching sound effects and music, and even maintain lip-sync and emotional consistency in dialogue scenes.

When testing the "ASMR Crystal Knife Cutting a Rose" scene, I paid close attention to the sound details. The moment the blade sliced through the petals, the subtle crunching sound synchronized perfectly with the visuals, without any delay.

In another example, "19th-Century London Street," as a steam locomotive passes, the wind lifts the female protagonist's skirt while the bustle of voices, the clatter of wheels, and the rush of wind sweep past.

From Jiedian Finance's perspective, this directly eliminates the need for post-production sound editing and gives the generated content a "finished film" quality. AI video is not just replacing individual roles such as editing or dubbing; it is beginning to cover several collaborative stages of film and television production that were previously fragmented.

From "Gacha" to "Stable and Controllable"

If Seedance 2.0 can effortlessly handle simple shot-framing, camera transitions, and music-visual synchronization, how does it perform in highly complex scenes? What about its instruction-following capabilities?

Film and television professionals are all too familiar with this problem: in many AI videos, a character has long hair in one shot and short hair in the next, or a person inexplicably has three arms. Such "deformations" make AI unsuitable for long-form content.

To test Seedance 2.0’s "stability" under extreme conditions, we increased the difficulty.

We used a demanding "dark magic" script provided by the official team, which calls for a 15-second video in three shots (low-angle, tracking, and close-up) and even requires rapid cuts between three different characters.

> The camera thrusts forward violently, accompanied by a powerful energy shockwave! The red-clad female general on the left fixes a razor-sharp gaze, suddenly drawing her waist sword, which erupts with scorching flames. She raises her arm and shouts: "Those who trespass on our land shall be punished, no matter how far!" The camera quickly circles around the white-clad male protagonist and green-clad female protagonist in a diving motion. The gem atop the female protagonist’s staff glows intensely, and an ancient magic circle emerges on the ground. The golden-armored warrior on the right lets out a thunderous roar, slamming his giant axe into the ground and sending out a golden ripple of energy, darkening the skies. The streaks of light in the background sky transform into massive fireballs streaking across the horizon, while distant cities erupt in flames. The entire scene brims with tension and the apocalyptic fury of battle. Finally, the camera pulls back rapidly, freezing on a grand epic scene of the five heroes unleashing their powers together, preparing for the ultimate showdown.

The script is highly complex, running to 270 characters in the original Chinese, whereas the earlier prompt in this article was only a few dozen characters long. We also supplied the official reference images.

To further showcase Seedance 2.0's camera capabilities, we also drew on a number of ready-made user examples from the Xiaoyunque app.

For instance, the "Battle with Ultraman" scene looks remarkably like a live-action Ultraman TV episode.

Another example, "Rainy Night Abandoned Factory Fight," has the gritty texture of a Hong Kong action film.

Or take this one-vs-many battle scene, where the movements are remarkably fluid, as if a real person had stepped into a video game world.

Seedance 2.0 clearly has highly mature camera-movement capabilities, moving video generation out of the highly random "gacha" phase and into a relatively controllable, reproducible production stage. This is also a critical prerequisite for AI video to truly enter the content industry chain.

When Tools Are No Longer Scarce, What Becomes Irreplaceable?

Judging from various examples, Seedance 2.0’s standout features are its camera movement, editing capabilities, and consistency in characters and scenes.

If you only look at stills, it's hard to tell whether they came from AI, a professional production team, or live-action filming. However, after examining many official and user-generated clips, you'll notice there is still room for improvement in characters' emotional nuance, on-screen text rendering, and, in some videos, content stability.

From this perspective, Seedance 2.0 may not yet completely revolutionize the film and television industry, but its significance lies in massively lowering the barrier to visual expression.

In the past, filmmaking was a medium heavily reliant on organizations, funding, and industrial systems. Many people had ideas but lacked the means to realize them. Now, tools are beginning to dismantle the thickest wall between "imagination" and "presentation," allowing individuals, for the first time, to turn mental images into viewable content without relying on established systems.

This won't immediately replace directors or the film and television industry, but it will quietly shift how creative power is distributed and who gets to keep taking part in visual expression. It also forces the industry to ask a fundamental question anew: in an era where tools are no longer scarce, which creators will remain irreplaceable?

From a long-term perspective, this undoubtedly offers a glimpse of how AI will reshape the content industry. Even so, Feng Ji's talk of it being able to "kill the game" reads more like an emotional assessment of technical prowess than a direct judgment on industry realities.

Multimodality is set to be a fierce battleground for model makers in 2026. The real changes are just beginning, and what comes next is worth watching.

*Featured image generated by AI