Seedance 2.0: A Leap in Innovation or a Threat to Privacy?

02/13 2026

This weekend, ByteDance's latest video generation model has ignited a firestorm across the internet.

Expressions like "game-changer" and "mind-blowing effects," which have become somewhat overused in the AI landscape, are once again dominating online discussions.

Some believe that ByteDance's Seedance 2.0 could follow in the footsteps of Google's Veo 3 and OpenAI's Sora 2, marking another groundbreaking milestone in AI video generation.

Just as I was questioning whether these bold claims were merely another attempt by AI companies to generate buzz before the Lunar New Year, a video released at 1 a.m. by renowned Bilibili creator "Film Storm" provided a compelling answer: Seedance 2.0's capabilities are truly remarkable.

01 The Strong Need No Words

Let's first look at some of the video demos featured in the official documentation:

Prompt: A man@Image1 trudges down the corridor after work, his steps slowing until he halts at his doorstep. A close-up reveals him taking a deep breath, adjusting his emotions, and suppressing negativity before relaxing. He then retrieves his keys, unlocks the door, and enters his home, where his young daughter and a pet dog joyfully greet him with hugs. The indoor atmosphere is warm, and the entire scene unfolds with natural dialogue.

With limited knowledge of video and film creation, I found it nearly impossible to distinguish whether this was AI-generated or human-made.

Generating short animated clips of adorable pets is also well within its capabilities:

Prompt: A humorous roast dialogue in the "Cat-Dog Roast Room," requiring rich emotions and a stand-up comedy performance style:

Meow Sauce (cat host, licking fur and rolling eyes): "Can anyone relate? This guy beside me spends his days wagging his tail, tearing up the sofa, and using those 'I'm so innocent, please pet me' eyes to trick humans into giving him snacks. Yet, he's more destructive than anyone when ripping apart the house. How dare he call himself Wang Zai? He should be called 'Wang Chai' (Wang the Destroyer) instead! Hahaha!"

Wang Zai (dog host, tilting head and wagging tail): "And you have the nerve to say that about me? You sleep 18 hours a day, wake up, and rub against humans' legs for canned food. Your shedding leaves black clothes covered in your fur. After humans sweep the floor, you roll around on the sofa again. How dare you pretend to be a high-and-mighty aristocrat?"

Moreover, the video released by "Film Storm" showcased captivating scenes such as a snowman from Mixue Ice Cream & Tea battling a robot from a foreign coffee shop, Ultraman fighting monsters, a martial arts master taking on multiple opponents, and commercial shorts featuring a female athlete running long-distance and boxing—all presented with seamless visual effects and flawless multi-angle camera movements.

In the two days since its release, Seedance 2.0 has left professional film critics in awe and made ordinary users forget the former limits of AI video generation.

In ByteDance's Seedance 2.0 documentation, the research team describes its astonishing technological breakthroughs in restrained terms: more realistic physical laws, smoother movements, and multimodal reference capabilities supporting the free combination of text, images, audio, and video.

Seedance 2.0 has also made targeted optimizations to address previous challenges in video generation:

Users can upload reference videos to achieve complex, controllable camera movements and precise action replication, enabling functionalities like video extension, music synchronization, multilingual dubbing, and creative plot completion while enhancing consistency.

When transitioning from the 3D world to 2D animation, Seedance 2.0 offers even more surprises: it can automatically transform comic panels into animations and recognize a 2D character's eyes, hair, and clothing as independently movable layers, avoiding early AI issues of misinterpreting flat images as pseudo-3D.

Suddenly, the AI community is abuzz: consumer-grade video generation is about to cross a critical threshold, with technical execution issues resolved, leaving only creative decision-making problems to tackle.

However, behind every technological highlight often lurks a shadow.

02 An Unsettling "Coincidence"

After showcasing Seedance 2.0's power, "Film Storm's" video presents a strange case in its latter half:

The on-screen participant, Tim, uploads his facial photo along with a prompt to the model, which naturally returns an AI science video starring him.

However, the video features not only his likeness but also a voice nearly identical to his own.

In a live-action video segment, the background building closely resembles his company's headquarters.

Even stranger, a tester in the comments section, who provided only a facial photo and requested a night-running scene, ended up with a character wearing the exact same running shoes he had just purchased the previous week—down to the color and model—despite not disclosing any such information in the prompt.

As a tech enthusiast, I firmly believe this isn't "paranormal activity." So, I immediately revisited ByteDance's released documentation, where the official explanation is as follows:

The model's capabilities can be attributed to "multimodal reference" and "enhanced consistency."

"Multimodal reference" refers to the model's ability to simultaneously parse heterogeneous data like images and audio, achieving cross-modal feature alignment.
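
To make "cross-modal feature alignment" concrete, here is a minimal sketch of the general idea, not ByteDance's actual method. It assumes that a face and a voice are each mapped to a vector in a shared space, and that vectors belonging to the same person end up close together. The embeddings below are hand-written, hypothetical numbers; a real model would learn them from data.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means closely aligned."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def best_match(query, candidates):
    """Return the name of the candidate vector most similar to the query."""
    return max(candidates, key=lambda name: cosine_similarity(query, candidates[name]))

# Hypothetical embeddings in a shared 3-dimensional space (illustration only).
face_embedding = [0.9, 0.1, 0.3]
voice_embeddings = {
    "voice_same_person": [0.8, 0.2, 0.4],
    "voice_other_person": [0.1, 0.9, 0.2],
}

print(best_match(face_embedding, voice_embeddings))
```

In this toy setup, a face photo alone is enough to retrieve the closest voice, which is one plausible reading of how a likeness could come paired with a matching voice.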

"Enhanced consistency" relies on statistical learning of co-occurrence patterns among people, objects, and scenes in vast video datasets.

From a purely theoretical standpoint, it's not impossible for the model to generate videos resembling Tim and the tester, as it has likely encountered enough samples combining "faces + voices + clothing + environments" before its release.
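
The statistical argument above can be illustrated with a toy sketch. It assumes a tiny, made-up list of (scene, item) observations and simply counts which item appears most often in a given scene. Nothing here reflects Seedance 2.0's real training data or architecture; the names `observations`, `conditional_probabilities`, and `most_likely_item` are hypothetical.

```python
from collections import Counter

# Hypothetical toy "training set" of (scene, item) pairs seen together.
observations = [
    ("night run", "neon running shoes"),
    ("night run", "neon running shoes"),
    ("night run", "white sneakers"),
    ("office", "leather shoes"),
    ("office", "white sneakers"),
]

def conditional_probabilities(pairs, context):
    """Estimate P(item | context) from raw co-occurrence counts."""
    counts = Counter(item for ctx, item in pairs if ctx == context)
    total = sum(counts.values())
    return {item: n / total for item, n in counts.items()}

def most_likely_item(pairs, context):
    """Return the item seen most often with the context, or None if unseen."""
    probs = conditional_probabilities(pairs, context)
    return max(probs, key=probs.get) if probs else None

print(most_likely_item(observations, "night run"))
```

If a particular shoe model happens to be popular among night runners, a model driven by such statistics could output it without knowing anything about an individual's purchases. That is the sense in which the result is theoretically possible.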

However, theoretical plausibility does little to alleviate personal discomfort.

If AI can accurately guess running shoes without explicit hints, it must have accessed purchase records or related information; if it can precisely mimic voices and buildings, it must have repeatedly analyzed videos Tim filmed.

While such precision is astonishing, it far exceeds the comfort zone of statistical probability, fueling an unsettling suspicion:

Have our lives already become part of the training data?

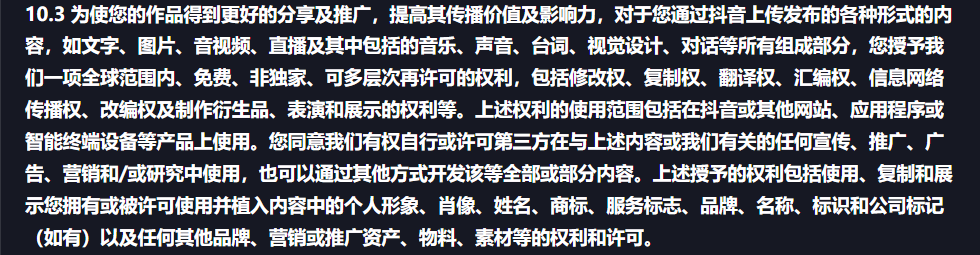

The answer is yes. As stated in Douyin's user service agreement:

The phrase "global, free, non-exclusive, multi-level sublicensable rights" introduces a degree of ambiguity.

We cannot determine whether this includes automated training for AI models, but what is certain is that snippets of our lives are being used to build generation models that "replicate ourselves."

The comments section is filled with discontent: "Who dares share their life on social media anymore?"

This isn't an overreaction but a gradual awakening of people's awareness of data sovereignty.

We've long grown accustomed to technological convenience, often overlooking the silent erosion of data control.

03 The End of Creation: Creativity Diluted by Algorithms

The sudden technological impact of Seedance 2.0 affects far more than just daily life. It also shatters the spiritual world of creators.

Although the video runs just nine minutes, its comments section is a microcosm of human experience.

A freelance illustrator wrote: "I'm forced to use AI workflows for creation, yet I feel no joy whatsoever. I'm merely repeating the process of generating images, stitching them together, and regenerating. In my view, it's not my work at all, as I had no hand in deriving any details."

This illustrator misses not just the act of painting itself but the immersive experience of participating in artistic creation.

The meticulous refinement of every detail, the client's recognition and delight upon receiving the finished work, and the fulfillment of self-worth—these moments, which embody the intrinsic value of artistic creation, should not be replaced by a "prompt → generate → filter" workflow.

As a programmer, I deeply empathize.

During my freshman year, a single course project would leave most of the class overwhelmed. From data structures to operational logic and UI design, it took novices anywhere from a few weeks to a month to complete a basic app with functional features, albeit not particularly visually appealing.

The sense of relief after running the program hundreds of times and finally achieving error-free execution is something I haven't felt in a long time, as AI can now accomplish such tasks in minutes.

While technological progress benefits humanity, it also accelerates the dismantling of professional barriers that once required years of accumulation.

Professionals in any field, witnessing knowledge and skills they spent years mastering being effortlessly replicated and surpassed, cannot help but feel disheartened by the "devaluation of effort."

Deeper concerns arise from the polarization of industry structures.

MiHoYo founder Cai Haoyu once made a somewhat exaggerated prediction: In the AI era, game creation will belong to only two groups—the top 0.0001% of professional teams capable of producing unprecedented works, and 99% of amateurs who can freely create games based on their preferences. The remaining developers, he suggested, should consider changing careers.

Whether this prediction comes true matters less than the fact that Seedance 2.0's creative capabilities align precisely with his description.

When AI can effortlessly replicate cinematic-quality camera movements and emotional performances, creation becomes systematically structured by algorithms, no longer a unique human advantage.

Powerful tools are now in everyone's hands, but when faced with the question, "Why bother creating when AI can do it better?" I find myself at a loss for an answer.

04 From "Technology Changes Life" to "Life Changes Technology"

The earlier claim that "technical execution issues have been resolved, leaving only creative decision-making problems" now finds its explanation.

People no longer need to worry about "whether AI can create videos" but only about "which AI-generated video is better," providing feedback to AI to complete the closed loop of multimodal data flow.

Through countless iterations, AI will not only generate content but also learn to define "high-quality creativity" and filter users suited for specific video styles.

Thus, humanity shifts from being the subject of creation to an object evaluated by algorithms.

I agree with a comment in the discussion: When AI can effortlessly realize everyone's "creativity" and even replicate humans themselves, creativity loses its value, and individuals become objects selected by AI.

Technology no longer serves humanity but reconstructs human values—a nihilistic prospect that is somewhat chilling.

The release of Seedance 2.0 represents AIGC technology's attempt to step beyond being a tool and into shaping values.

It no longer merely executes user instructions but begins to understand and replicate traits in human creation that are difficult to articulate precisely, such as emotional shifts, stylistic continuity, or cross-modal metaphorical connections.

While this leap in capabilities deserves recognition, we must not forget that the energy for this leap comes from the minutiae of our daily lives.

"Technology changes life" has been an optimistic narrative since the dawn of the digital age.

However, Seedance 2.0's technological evolution suggests that life is changing technology in ways we barely notice.

Faces, voices, consumption records, and social traces are becoming fodder for algorithms, yet data sovereignty awareness and institutional safeguards remain underdeveloped.

Technology itself is neutral, but the flow of data determines the allocation of power. Whether humanity retains final interpretive rights over its data depends on how "creation" is defined in the AI era:

It could be algorithms' precise replication of life or the infinite extension of human will.

Seedance 2.0 forces everyone to confront a fundamental question: Are we willing to exchange every detail of our lives for the infinite convenience of technology?

With such powerful video generation capabilities, we seem to have no reason to refuse their use.

Yet, in the face of such rapid technological evolution, one wonders whether we still retain the right to refuse.