Chinese AI companies can take an unconventional path

07/23 2024

Calls to put large models to practical use are growing louder, but there is still debate over how exactly to do it. This is true both in China and abroad, and different philosophies lead to different methods.

Some AI companies are busy updating their general-purpose large models, competing on leaderboards, and building popular products such as video generation tools. Most of the giants fall into this category.

Others are focused on industry-specific large models or platforms, such as Glean abroad and China's Fourth Paradigm, which recently launched an AI digital human video synthesis platform aimed at making it easier for enterprises to leverage large model capabilities.

In addition, there are "shovel-selling" companies that prioritize serving the development of large models, like Scale AI, which has evolved from data annotation to full-stack AI data services and model building. If its transformation is successful, it could also become the second type of company.

This is not simply a debate about business models. The consensus that "all industries are worth redoing with AI" was established long ago, but many questions remain unanswered. AI companies rooted in China's industrial landscape, in particular, may offer different answers.

The Direction of AI Large Models

Many companies can now claim to be in the AI industry, but having a finished model does not equal long-term value.

Currently, most enterprises use AI through general-purpose large language models trained further on industry-specific corpora, which can be considered a differentiated application. However, this may be no more than a shortcut. Mike Knoop, co-founder of Zapier (a company that builds no-code business process automation, which fits well with the autonomous capabilities of generative AI), believes that scaling up large language models fundamentally improves only "memory," which is distinct from intelligence. Such models cannot comprehend business scenarios and needs, so they fail to fully exploit the value of AI.

(Image source: Zapier official website)

Furthermore, if we compare the curve of GPU compute investment with the improvement in large language model capabilities, each increment of compute does bring a benefit, but the marginal returns are likely diminishing. Once all the easily accessible public data on the internet is exhausted, relying on general-purpose large language models to leap ahead in AI becomes a fantasy.

This is even more detrimental to enterprises. In pursuit of new technologies they often behave like the monkey in the Chinese fable who picks corn and then drops it for the next thing that catches its eye: they set out to solve a specific problem but end up in a race of concepts. They adopted big data when it emerged, studied computer vision when it became popular, and now, in the era of large models and generative AI, fill their strategic reports with keywords such as AIGC and AGI. As time passes, the original problems are forgotten.

The solution to this problem, or the answer to enterprises' AI large model needs, is actually in the hands of AI companies.

Sarah Tavel, a partner at the prominent venture capital firm Benchmark, believes that the first wave of AI use cases merely offered access through an API. However, confining AI to the role of an assistant inside a single tool is not a reasonable outcome. The better direction is to deliver complete products and services tailored to the needs of customers in specific industries, and to build large model businesses on that basis.

Alex Wang, co-founder of Scale AI and often described as a Chinese-American prodigy, believes that data, rather than algorithms or compute, is the bottleneck for AI model performance. (Scale AI started with data annotation services and later became an AI-driven full-stack data solution provider, which includes helping clients build usable large models and offering hosting services.) Data ultimately comes from numerous vertical industries, which implies that AI companies should go deep into these industries and develop industry-specific large models that meet enterprise needs.

There are two key points to consider in this process:

Firstly, the data issue: as Alex Wang highlights, AI companies must be able to "understand" users and industries. Most companies that have gone through the digital age have vast data corpora sitting in warehouses, but without assistance that data remains unused.

Secondly, the issue of management and iteration. Given the diverse industries and scenarios, current industry resources are unlikely to support a single company in building large models across all fields. How can this be addressed?

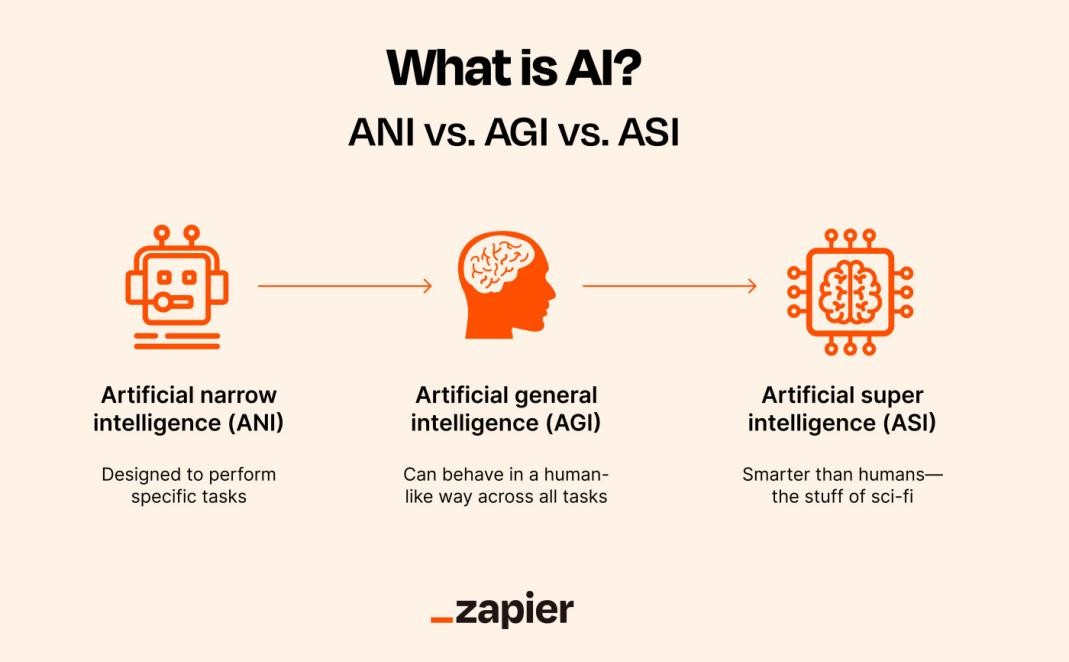

Both Fourth Paradigm and Zapier co-founder Mike Knoop point to automation as the key. Technically, AutoML, program synthesis, and neural architecture search all involve automation and optimization processes aimed at reducing manual intervention and enhancing efficiency and effectiveness. Mike Knoop believes that AGI exploration necessitates program synthesis and neural architecture search, while Dai Wenyuan, founder and CEO of Fourth Paradigm, mentioned in an interview with "Intelligent Emergence" that the foundational technology for building countless industry-specific large models is AutoML – automated machine learning.

(Image source: Microsoft Learn)

Dai Wenyuan describes AutoML as "the art of failure." He says it delivers greater value at Fourth Paradigm because the company has worked through numerous scenarios and has learned how to align data and model development with the needs of each one. Successes become achievements, failures become nutrients, and automation accelerates the iteration. As Alex Wang puts it, "Machine learning is a framework of garbage in, garbage out." But with high-quality industry data and the ability to correct errors continuously, industry-specific large models can become a reality and be deployed reliably.
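To make the idea of automated trial and error concrete, here is a minimal sketch of an AutoML-style search loop. It is not Fourth Paradigm's method: the nearest-neighbour model, the synthetic data, and every function name are assumptions chosen only for illustration. The loop tries several configurations, records every trial (including the poor ones), and keeps the configuration with the lowest error on held-out data.

```python
import random

def knn_predict(train, x, k):
    """Predict y at x as the mean y of the k nearest training points."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)

def validation_mse(train, valid, k):
    """Mean squared error of a k-NN model on held-out data."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in valid) / len(valid)

def auto_select(train, valid, candidate_ks):
    """Evaluate every candidate configuration and keep a log of all trials."""
    trials = [(k, validation_mse(train, valid, k)) for k in candidate_ks]
    best_k, best_err = min(trials, key=lambda t: t[1])
    return best_k, best_err, trials

# Synthetic "scenario" data (hypothetical): y = 2x plus Gaussian noise.
rng = random.Random(0)
data = [(x / 10, 2 * (x / 10) + rng.gauss(0, 0.3)) for x in range(100)]
rng.shuffle(data)
train, valid = data[:70], data[70:]

best_k, best_err, trials = auto_select(train, valid, [1, 3, 5, 9, 25, 69])
for k, err in trials:
    print(f"k={k:>2}  validation MSE={err:.3f}")
print("selected k:", best_k)
```

The failed trials are not thrown away: the `trials` log shows which configurations performed badly, and a real system could use that record to narrow the next round of search.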

To a certain extent, the industry's leading thinkers agree that AI should be shaped by the industries it serves. AI companies like Fourth Paradigm, rooted in China's complex industrial landscape, have even more room to extend this logic.

Creating Different AI Models: Ideas, Approaches, and Prospects

Some companies, represented by OpenAI, focus on general-purpose large models and develop horizontally, treating the large model as everything. Their business model is to sell the capabilities of the large model itself. In contrast, companies like Fourth Paradigm, Glean, and even Palantir, which use AI to help enterprises make decisions and improve overall work efficiency, take a different path, and their business models differ accordingly.

For instance, Glean provides an AI-powered enterprise search and knowledge management platform that integrates numerous third-party application functions, connecting directly to other SaaS products. In essence, it becomes part of the workflow. Meanwhile, Glean also helps enterprises train proprietary AI models using enterprise data, based on its self-developed "Trusted Knowledge Model." The company's starting point is that employees often struggle to find useful information within complex work systems. Glean leverages AI models and cross-application service capabilities throughout the workflow, building a new advantage upon traditional enterprise search.

Like Glean, Fourth Paradigm focuses on strengthening enterprises' core businesses and bringing AI large models into day-to-day operations. The two share the same underlying idea: start from industry and enterprise needs rather than rely solely on technology, parameter counts, or language. However, Fourth Paradigm goes deeper into predictive management of the core business problems of each industry.

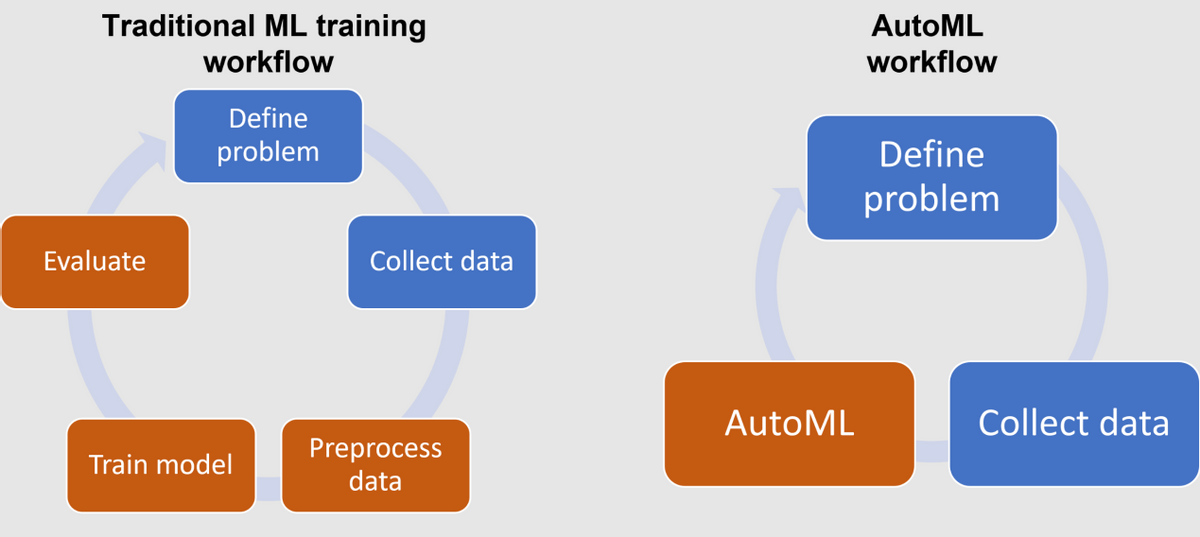

Fourth Paradigm released its industry-specific large model platform, ZKOS AIOS 5.0, earlier this year. Inheriting Fourth Paradigm's previous thinking on data governance and intelligent development, it places greater emphasis on helping enterprises tap into the potential of industry-specific large models. The core feature of AIOS 5.0 is building industry-specific large models based on X-modal data across various industries.

(Image source: Fourth Paradigm official website)

In terms of capabilities, it focuses on "Predict the Next 'X,'" where 'X' represents the diverse logic and outcomes found across industries. In terms of usage, ZKOS AIOS 5.0 supports access to enterprise data of various modalities and, on that basis, provides low-barrier modeling tools for large model training and fine-tuning, an innovative scientist service system, a North Star strategy management platform, a large model management platform, adaptation and optimization for mainstream computing power, and other capabilities. Together these enable end-to-end construction, deployment, and management of industry-specific large models. At the application level, China's high degree of industrial and scenario complexity in effect provides an environment for the vertical development of industry-specific large models.

This is an excellent example of a Chinese AI company leveraging its industrial background. Dai Wenyuan once said, "We have a wealth of scenarios and data advantages in China. When we cover enough scenarios and piece together these models, you may ultimately achieve AGI." In contrast, many popular industry-specific large models today are still industry-specific language models: large but not refined. Splitting an industry into more precise scenarios may seem to require building a great many large models, but each precise scenario carries only a limited data load, and automation keeps the effort manageable. In this way, AGI at the application level is reached by an alternative path.

As Mike Knoop observes, AGI research hit a ceiling soon after its rapid advance because we rely too heavily on large language models and define AGI as a system capable of completing most tasks. AGI should instead be about efficiently acquiring new capabilities in order to solve open-ended problems across various scenarios.

In fact, this may be the correct approach. In his speech at the 130th Commencement of the California Institute of Technology, NVIDIA CEO Jensen Huang mentioned that with the development of large models, computers are shifting from instruction-driven to intent-driven. "Future applications will do and execute things similarly to how we do them, assembling teams of experts, using tools, reasoning, planning, and executing our tasks." This logic inherently implies versatility. We are also witnessing large models entering the physical world, as decision-making in the physical world also follows discernible patterns.

A similar example is Palantir, whose valuation has more than doubled over the past year on the strength of AI. Originally a government-facing (To G) big data company, Palantir used data analysis and modeling simulations to aid decision-making. Generative AI then transformed the way it processes data, significantly advancing automation and data-driven decision-making and accelerating the expansion of its enterprise-facing (To B) AI business. Fourth Paradigm, for its part, is building an industry-specific large model for each deterministic scenario, so that enterprises can master their applications and make effective decisions.

(Image source: Xueqiu)

Finally, a few thoughts on future prospects. OpenAI's emphasis on pushing general capabilities to the extreme has, for now, cost it the chance to build dominant products in specific domains. In contrast, companies focused on more flexible and open models have gained room to grow. In terms of business models, OpenAI, with its subscription-based approach, will continue to "sell" large model capabilities, which makes it more akin to a tool. Meanwhile, companies like Fourth Paradigm, Glean, Scale AI, and Palantir sell technology together with add-ons and services, which more closely resembles a system.

Scale AI raised $1 billion at a $13.8 billion valuation in the first half of the year. Five-year-old Glean raised a massive $200 million in its Series D round at a valuation of up to $2.2 billion, or approximately 16 billion yuan. Fourth Paradigm's market value on the Hong Kong stock exchange is stable at around HK$22.4 billion, with its growth potential primarily tied to the development and revenue of the ZKOS platform. In the first quarter of this year, the ZKOS platform accounted for roughly 500 million yuan, or 60.6%, of Fourth Paradigm's total revenue of 828 million yuan. As application scenarios multiply and revenue grows, that value should be unlocked.
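The figures above can be cross-checked with simple arithmetic. The sketch below uses only numbers quoted in the text; the variable names are illustrative. It suggests that "500 million yuan" is a rounded figure, since a 60.6% share of 828 million yuan corresponds to about 502 million yuan, and that the yuan conversion of Glean's valuation implies an exchange rate of about 7.27 yuan per US dollar.

```python
# All inputs are figures quoted in the article (revenue in millions of yuan).
total_revenue = 828
platform_revenue_rounded = 500
stated_share = 0.606

# Share computed from the rounded platform revenue.
share_from_rounded = platform_revenue_rounded / total_revenue
# Platform revenue implied by the stated 60.6% share.
implied_platform_revenue = stated_share * total_revenue

# Glean valuation in billions: US dollars and the yuan equivalent given in the text.
glean_valuation_usd = 2.2
glean_valuation_cny = 16
implied_rate = glean_valuation_cny / glean_valuation_usd

print(f"share from rounded revenue: {share_from_rounded:.1%}")                        # 60.4%
print(f"revenue implied by 60.6%:   {implied_platform_revenue:.1f} million yuan")     # 501.8
print(f"implied exchange rate:      {implied_rate:.2f} yuan per dollar")              # 7.27
```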

Ultimately, AI companies will inevitably be compared to industry giants on the path to AGI. But as long as they can produce more products that create value for enterprises in practical scenarios, the market will naturally assign them unique value. AGI is a vast concept, and all explorations are beneficial for the future.

Source: Hong Kong Stocks Research Society